I believe the parallelization could be achieved if the final ranking didn't have to occur inside the private auction. One thing we've talked about internally is a final opaque function, run in the browser, that takes the opaque winner as well as the other winners and determines the final winner. We could add further opaque callbacks to minimize bit leakage, for example an "onPrivateWin" callback that handles fenced frame (FF) rendering, an "onOtherWin" callback, and so on.
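The final opaque step described above could be modeled roughly as follows. This is a hypothetical sketch, not an existing browser API: `selectFinalWinner`, the candidate types, the optional `onNoWinner` callback and the rule that ties go to the non-private winner are all assumptions. In the proposal the private winner's bid would stay inside the browser; here the function stands in for that browser-side logic, and the page only learns which callback fired.

```typescript
// Hypothetical types: in the proposal, PrivateWinner.bid would never be
// visible to page script; only browser-side code would read it.
interface PrivateWinner {
  bid: number;
  renderConfig: string; // stand-in for an opaque URN or fenced frame config
}

interface OtherWinner {
  source: string; // illustrative label, e.g. "header-bidding" or "ad-server"
  bid: number;
}

// Hypothetical callbacks: the page learns which branch won, not the bids.
interface FinalRankingCallbacks {
  onPrivateWin: (renderConfig: string) => void; // e.g. render in a fenced frame
  onOtherWin: (winner: OtherWinner) => void;
  onNoWinner?: () => void;
}

// Stand-in for the proposed browser-side final ranking function.
function selectFinalWinner(
  privateWinner: PrivateWinner | null,
  others: readonly OtherWinner[],
  callbacks: FinalRankingCallbacks,
): void {
  // Find the highest-bidding non-private winner, if any.
  let bestOther: OtherWinner | null = null;
  for (const candidate of others) {
    if (bestOther === null || candidate.bid > bestOther.bid) {
      bestOther = candidate;
    }
  }

  // Assumption: the private winner must strictly outbid the best other winner.
  if (privateWinner !== null && (bestOther === null || privateWinner.bid > bestOther.bid)) {
    callbacks.onPrivateWin(privateWinner.renderConfig);
  } else if (bestOther !== null) {
    callbacks.onOtherWin(bestOther);
  } else {
    callbacks.onNoWinner?.();
  }
}
```

Tie-breaking, and whether the page should receive anything beyond the branch taken, are open design questions; the callback shape is there so that only the outcome leaks, not the bid values.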

We tested an approach in which the PAA auction is initiated by the publisher, using a modified fledgeForGPT Prebid module and componentAuctions received from the RTB House bidder.

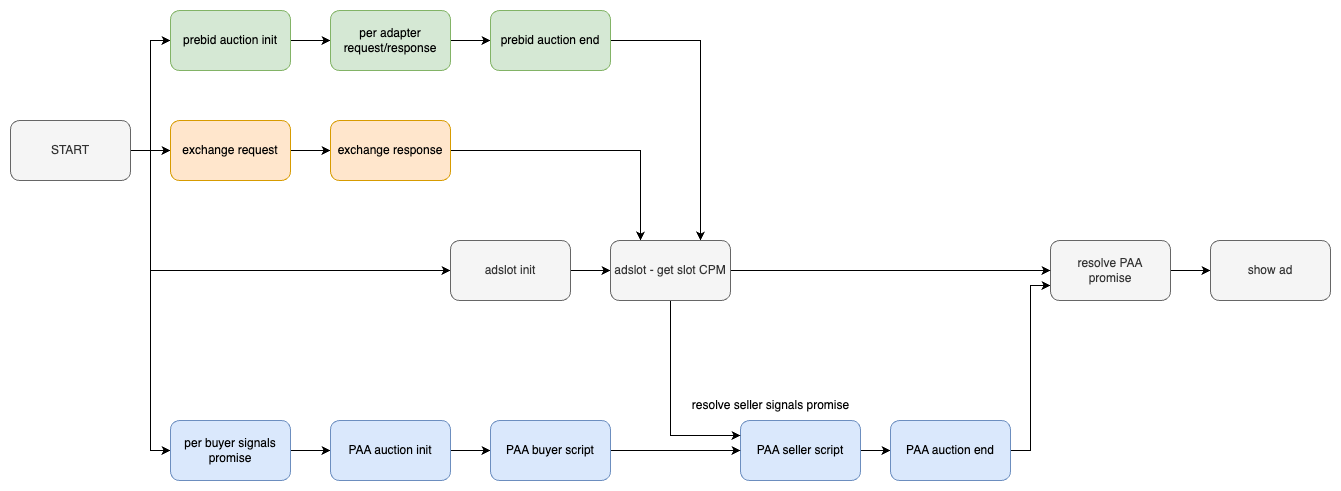

Our test setup, with a standalone PAA auction, can be described as follows:

For more detailed information about our experiment, please see: https://github.com/grupawp/PAapi
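A publisher-initiated auction of this kind can be sketched as a top-level auction whose `componentAuctions` are the configs returned by bidders. This is a minimal sketch, assuming the bidder configs have already been collected (in the test described above, via the modified fledgeForGPT module); `buildTopLevelConfig` and the example origins are hypothetical, and only a small subset of the auction config fields is modeled.

```typescript
// Minimal subset of Protected Audience auction config fields (not exhaustive).
interface AuctionConfig {
  seller: string;
  decisionLogicURL: string;
  componentAuctions?: AuctionConfig[];
  resolveToConfig?: boolean;
}

// Hypothetical helper: wraps bidder-supplied component auctions in a
// top-level auction run by the publisher as seller.
function buildTopLevelConfig(
  publisherOrigin: string,
  decisionLogicURL: string,
  bidderConfigs: readonly AuctionConfig[],
): AuctionConfig | null {
  // Skip the auction entirely when no bidder supplied a component auction:
  // even an empty auction adds latency.
  if (bidderConfigs.length === 0) {
    return null;
  }
  return {
    seller: publisherOrigin,
    decisionLogicURL,
    componentAuctions: [...bidderConfigs],
    // Ask for a fenced frame config instead of a URN where supported.
    resolveToConfig: true,
  };
}
```

In the browser, the resulting config would be passed to `navigator.runAdAuction(config)`, which resolves to a URN, a `FencedFrameConfig`, or `null` when there is no winner.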

Experiment conclusions:

Our experiment shows that this integration option may result in a significant revenue drop for the publisher.

The reasons for the revenue drop are:

The PAA auction always extends the time needed to receive and render the ad. Our statistics show that:

- the median duration of empty PAA auctions is 52 ms (161 ms at the 80th percentile)
- the median duration of PAA auctions that return a URN / fenced frame config is 733 ms (1773 ms at the 80th percentile)
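Durations like these can be collected by timing each auction and grouping the results by whether the auction returned a winner. A minimal sketch, assuming the auction is passed in as a function (in the browser, a call to `navigator.runAdAuction`) and that percentiles use the nearest-rank method; the source does not say how its statistics were computed, and `timeAuction`, `percentile` and `summarize` are hypothetical names.

```typescript
interface TimedAuction {
  durationMs: number;
  empty: boolean; // true when the auction resolved without a winner (null)
}

// Times one auction. In the browser, `run` would wrap navigator.runAdAuction(config).
async function timeAuction(
  run: () => Promise<unknown>,
  now: () => number = () => performance.now(),
): Promise<TimedAuction> {
  const start = now();
  const result = await run();
  return { durationMs: now() - start, empty: result === null };
}

// Nearest-rank percentile (p in (0, 100]) over a non-empty sample.
function percentile(samples: readonly number[], p: number): number {
  if (samples.length === 0) {
    throw new Error("percentile of an empty sample");
  }
  const sorted = [...samples].sort((a, b) => a - b);
  const rank = Math.max(1, Math.ceil((p / 100) * sorted.length));
  return sorted[rank - 1];
}

// Median and 80th percentile, reported separately for empty and non-empty auctions.
function summarize(timings: readonly TimedAuction[]) {
  const stats = (xs: number[]) =>
    xs.length === 0 ? null : { median: percentile(xs, 50), p80: percentile(xs, 80) };
  return {
    empty: stats(timings.filter((t) => t.empty).map((t) => t.durationMs)),
    nonEmpty: stats(timings.filter((t) => !t.empty).map((t) => t.durationMs)),
  };
}
```

Injecting `now` keeps the timing logic testable without a browser; in production the default `performance.now()` clock is used.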

The additional delay caused by a PAA auction (especially one that returns a winner) decreases ad slot viewability, which reduces the ad slot's value, and degrades the user experience, which hurts user retention and overall business success. Please see https://web.dev/fast-ads-matter/ for more information about the impact of ad latency on publisher revenue.

Solution:

Running the PAA auction in parallel with header bidding and the publisher's ad server would be possible if the PAA auction returned a comparable ad value (at a precision that preserves k-anonymity). A parallel architecture would minimize the PAA auction's impact on ad latency and would not force the publisher to choose between demand sources at random.
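The latency argument for a parallel architecture, and one way a PAA auction might expose a low-precision value for comparison, can be sketched as below. All function names, the assumption that the standalone PAA auction starts only after header bidding and the ad server finish, and the bucket edges are illustrative. Coarse bucketing limits how much a value reveals, but it does not by itself guarantee k-anonymity.

```typescript
// Sequential setup (assumed): the PAA auction starts only after header
// bidding and the ad server have returned, so its duration adds to the total.
function sequentialLatencyMs(hbMs: number, adServerMs: number, paaMs: number): number {
  return hbMs + adServerMs + paaMs;
}

// Parallel setup: the PAA auction runs alongside header bidding and the ad
// server, so the total is bounded by the slower of the two paths.
function parallelLatencyMs(hbMs: number, adServerMs: number, paaMs: number): number {
  return Math.max(hbMs + adServerMs, paaMs);
}

// Hypothetical coarsening: report the highest bucket edge not above the bid
// (edges must be ascending), or 0 when the bid is below every edge.
function bucketBid(cpm: number, edges: readonly number[]): number {
  let bucket = 0;
  for (const edge of edges) {
    if (cpm >= edge) {
      bucket = edge;
    }
  }
  return bucket;
}

// Compare the bucketed PAA value with the best contextual (HB / ad server) bid.
function chooseDemandSource(paaBucket: number, bestContextualCpm: number): "paa" | "contextual" {
  return paaBucket > bestContextualCpm ? "paa" : "contextual";
}
```

The model ignores the cost of the final comparison itself. Under these assumptions parallel latency never exceeds sequential latency, and the PAA auction adds no delay at all whenever it finishes before header bidding and the ad server.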