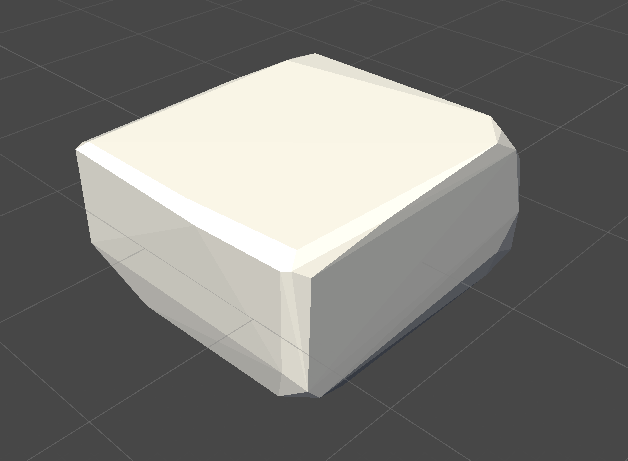

I think I've figured it out: I had to reduce the Aggressiveness, not increase it. The tooltips for the LOD Generator Helper seem to be off, which threw me off at first. The Max Iteration Count tooltip seems wrong in general; it reads as if it refers to Vertex Link Distance, although that one doesn't mention it being the squared distance. And maybe the second sentence of the Aggressiveness tooltip actually belongs to Max Iteration Count? It seems like increasing Aggressiveness does not, in fact, lead to higher quality, but rather changes the heuristic for collapsing edges?
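One possible explanation, assuming the simplifier follows the Fast Quadric Mesh Simplification scheme (the post does not confirm this): each pass only collapses edges whose quadric error is below a threshold, that threshold grows with the iteration number, and Aggressiveness is the exponent of the growth. The sketch below reproduces that schedule as I understand it from the reference implementation; the function name and the `base` constant are illustrative, not taken from the LOD Generator Helper.

```python
def collapse_threshold(iteration: int, aggressiveness: float, base: float = 1e-9) -> float:
    """Error threshold below which an edge may be collapsed in a given pass.

    Assumed schedule: base * (iteration + 3) ** aggressiveness.
    """
    return base * (iteration + 3) ** aggressiveness


# Compare how quickly the threshold opens up for a few Aggressiveness values.
for aggressiveness in (3.0, 7.0, 10.0):
    schedule = [collapse_threshold(i, aggressiveness) for i in (0, 10, 50)]
    print(aggressiveness, ["%.3g" % t for t in schedule])
```

If that assumption holds, a higher Aggressiveness lets higher-error collapses through in earlier passes, which would fit the shape degrading faster rather than the quality improving.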

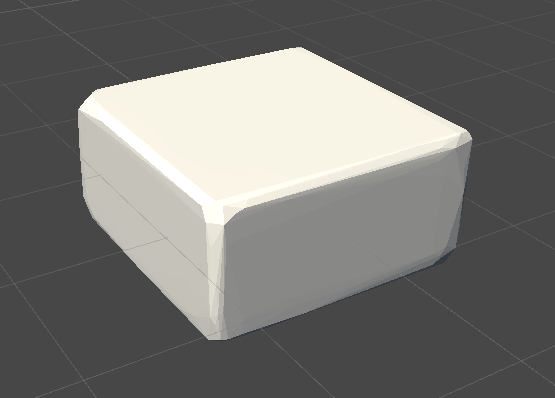

I'm trying to simplify a ~500-triangle mesh whose topology is similar to that of a decimated mesh.

But I seem to lose volume quickly as I get down toward my goal of 100 tris.

If my mesh is a little more rounded to begin with, though, it maintains its volume much better. Any suggestions? 100 tri result:

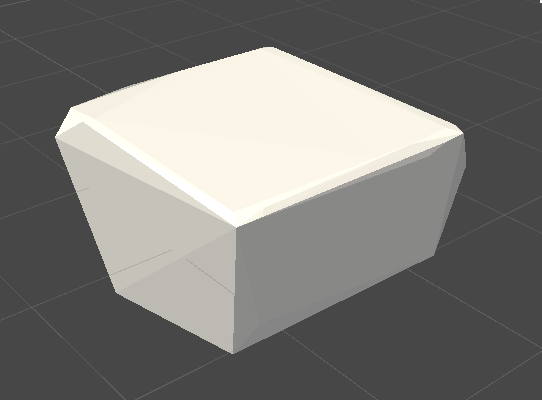

100 tri result:

Why doesn't the algorithm lead to simplifying this to a ~2x1x2 cube?
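To put a number on the volume loss, the enclosed volume of a closed, consistently wound triangle mesh can be computed by summing signed tetrahedron volumes against the origin. This is a minimal sketch, not part of any simplifier; `mesh_volume` and the box data are hypothetical names used only for illustration, and the box is the 2x1x2 shape mentioned above built from 12 triangles.

```python
def mesh_volume(vertices, triangles):
    """Signed volume of a closed triangle mesh (positive for outward-facing winding)."""
    total = 0.0
    for i0, i1, i2 in triangles:
        ax, ay, az = vertices[i0]
        bx, by, bz = vertices[i1]
        cx, cy, cz = vertices[i2]
        # Cross product b x c.
        nx = by * cz - bz * cy
        ny = bz * cx - bx * cz
        nz = bx * cy - by * cx
        # a . (b x c) is six times the signed volume of tetrahedron (origin, a, b, c).
        total += ax * nx + ay * ny + az * nz
    return total / 6.0


# A 2x1x2 box: 8 vertices, 12 triangles, counter-clockwise seen from outside.
box_vertices = [
    (0, 0, 0), (2, 0, 0), (2, 1, 0), (0, 1, 0),
    (0, 0, 2), (2, 0, 2), (2, 1, 2), (0, 1, 2),
]
box_triangles = [
    (0, 2, 1), (0, 3, 2),  # z = 0
    (4, 5, 6), (4, 6, 7),  # z = 2
    (0, 1, 5), (0, 5, 4),  # y = 0
    (3, 6, 2), (3, 7, 6),  # y = 1
    (0, 4, 7), (0, 7, 3),  # x = 0
    (1, 2, 6), (1, 6, 5),  # x = 2
]

print(mesh_volume(box_vertices, box_triangles))
```

Running the same function on the original mesh and on each simplified result gives a ratio that makes it easier to compare settings than judging by eye. It only gives meaningful values when the mesh is closed and its winding is consistent.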