Hello @jacopoabramo,

Have you solved this? Could you explain how, please?

Best,

jacopoabramo opened this issue 2 years ago.

Hi @Ba1tu3han, I haven't worked on this for a long time now, so I really couldn't say.

Greetings,

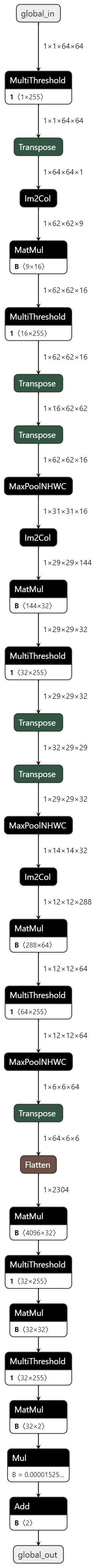

I'm currently trying to compile the following model:

After some tidy-up transformations, this is the output of the streamlining step:

Afterwards, I apply the transformation step that creates a dataflow partition, as follows:

This causes the following exception:

I checked the existing issues: this exception is mentioned in issue #337, but for a different transformation step.

- FINN branch: main (v0.8.1)
- OS: Windows 10 (WSL2, Ubuntu 18.04)
- Python: 3.8