The rest of the code, in case it's needed:

    import logging
    import math
    import random
    from pathlib import Path

    import numpy as np

    import bpy
    import zpy
    from mathutils.bvhtree import BVHTree

    log = logging.getLogger("zpy")


    def rotation_matrix(axis, theta):
        """
        Return the rotation matrix associated with counterclockwise rotation about
        the given axis by theta radians.
        """
        axis = np.asarray(axis)
        axis = axis / math.sqrt(np.dot(axis, axis))
        a = math.cos(theta / 2.0)
        b, c, d = -axis * math.sin(theta / 2.0)
        aa, bb, cc, dd = a * a, b * b, c * c, d * d
        bc, ad, ac, ab, bd, cd = b * c, a * d, a * c, a * b, b * d, c * d
        return np.array([[aa + bb - cc - dd, 2 * (bc + ad), 2 * (bd - ac)],
                         [2 * (bc - ad), aa + cc - bb - dd, 2 * (cd + ab)],
                         [2 * (bd + ac), 2 * (cd - ab), aa + dd - bb - cc]])


    def rotate(point, angle_degrees, axis=(0, 1, 0)):
        theta_degrees = angle_degrees
        theta_radians = math.radians(theta_degrees)
        rotated_point = np.dot(rotation_matrix(axis, theta_radians), point)
        return rotated_point
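One way to rule out the rotation helpers as the source of the drift is to cross-check them outside Blender against an independent implementation. The sketch below builds the same kind of matrix from Rodrigues' formula and confirms that orbiting a point about the Y axis keeps its distance from the origin and its height constant. The name `rodrigues_matrix` and the `start` position are made up for illustration and are not part of the original script or of zpy.

```python
import math

import numpy as np


def rodrigues_matrix(axis, theta):
    """Counterclockwise rotation by theta radians about axis (Rodrigues' formula)."""
    x, y, z = np.asarray(axis, dtype=float) / np.linalg.norm(axis)
    # Cross-product (skew-symmetric) matrix of the unit axis.
    K = np.array([[0.0, -z, y],
                  [z, 0.0, -x],
                  [-y, x, 0.0]])
    return np.eye(3) + math.sin(theta) * K + (1.0 - math.cos(theta)) * (K @ K)


# Hypothetical starting camera position, orbited in 45-degree steps about Y.
start = np.array([3.0, 1.0, 0.0])
orbit = [rodrigues_matrix((0, 1, 0), math.radians(deg)) @ start
         for deg in range(0, 360, 45)]

# A pure rotation about Y should preserve both of these for every step.
radii = [float(np.linalg.norm(p)) for p in orbit]
heights = [float(p[1]) for p in orbit]
```

If `rotation_matrix(axis, theta)` from the script agrees with `rodrigues_matrix(axis, theta)` under `np.allclose` for a few axes and angles, the rotation math is probably not what degrades the bounding boxes, and the cause is more likely elsewhere in the pipeline.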

I've created a Blender scene containing a die, and I'm using zpy to generate a dataset of images by rotating the camera around the object while jittering both the die's position and the camera. Everything seems to work properly, except that the bounding boxes written to the annotation file get progressively worse with each picture.

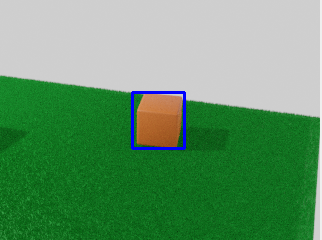

For example, this is the first image's bounding box:

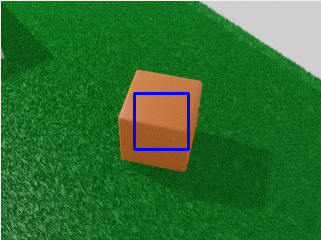

This one we get halfway through:

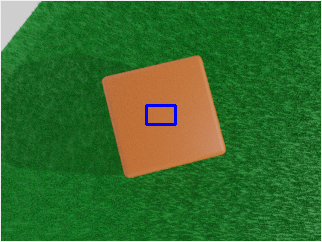

And this is one of the last ones:

This is my code (I've cut some stuff, I can't paste it all for some reason):

Is this my fault or an actual bug?