Hello @backwardsEric, I have found:

- first failed build: https://github.com/angband/angband/runs/1857808479?check_suite_focus=true

- latest successful build: https://github.com/angband/angband/runs/1835133558?check_suite_focus=true

From the Set up Job step, you can see that both builds ran on image version 20210131.1. This means that both the successful and the failed build ran on identical VMs with the same configuration and software set, so the issue is not related to the hosted VM.

You can also compare the logs of the Initialize containers step. As far as I can see, the Docker image itself was not updated either, so it can't be the root cause of the issue.

Also, the actions/checkout action has not been updated since November 2020 (https://github.com/actions/checkout/releases).

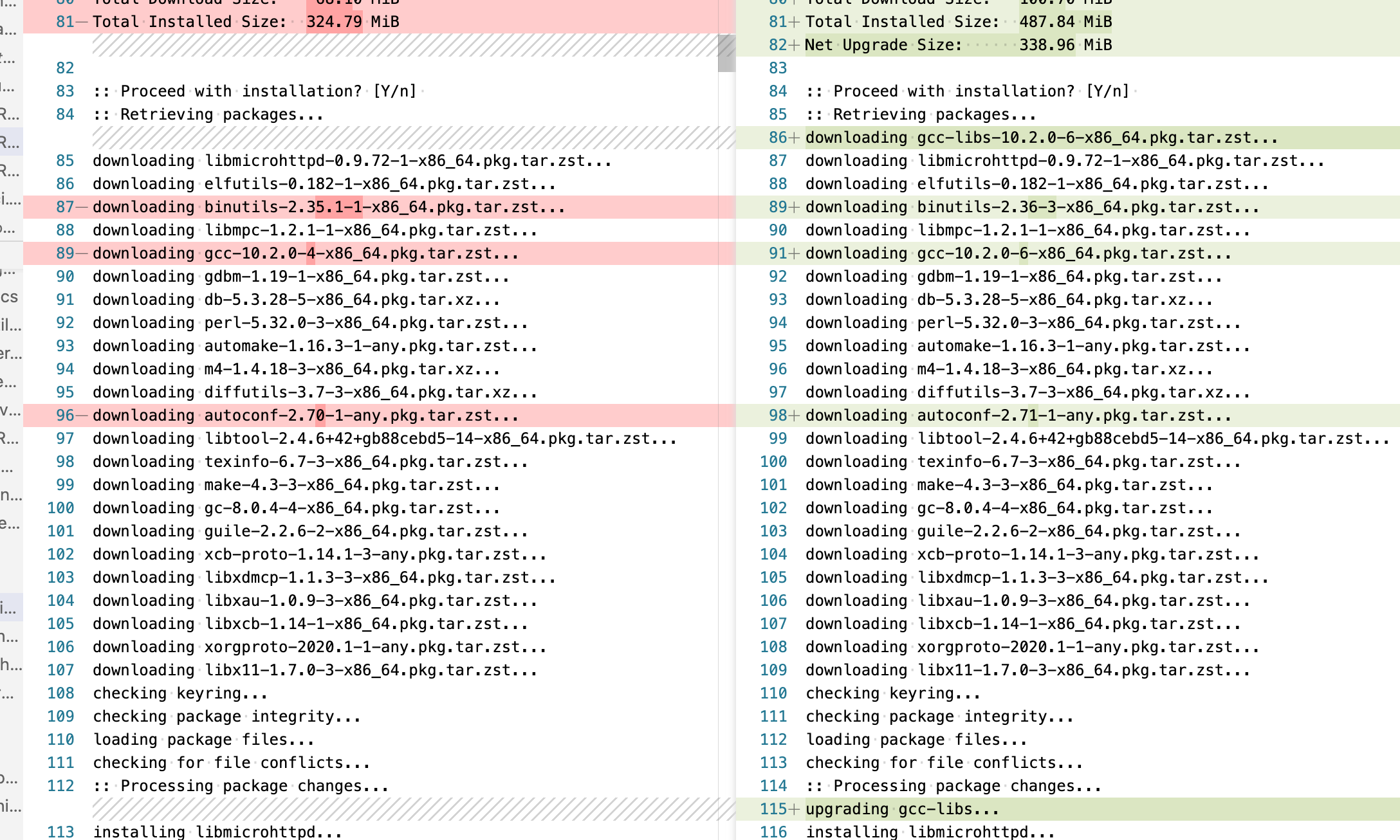

The single difference between builds is Install Build Dependencies step (successful build on left, failed on right):

I guess one of these updated dependencies have broken your build.

I guess one of these updated dependencies have broken your build.

Description

Test cases on the Angband project, run with the ubuntu-latest runner and the archlinux container, ran successfully up to February 4, 2021 (the last working example is here, https://github.com/angband/angband/pull/4631/checks?check_run_id=1835133558 ). Some time after that, the runs began to fail in actions/checkout@v2 with a message like, "/__e/node12/bin/node: /usr/lib/libc.so.6: version `GLIBC_2.33' not found (required by /usr/lib/libstdc++.so.6)" (the first example seen of that is here, https://github.com/angband/angband/pull/4632/checks?check_run_id=1850279958 ).

Performing a system update in the container (pacman -Syu) or updating glibc in the container resolves that, but introduces other problems:

- running autoconf fails with "This script requires a shell more modern than all the shells that I found on your system."

- pacman -Qi glibc fails with the updated glibc

- trying to determine the source of the autoconf problem with this workflow, https://github.com/backwardsEric/angband/blob/test-docker-failures/.github/workflows/broken-shell.yaml , indicates that [ -x $SHELL ] fails in the container after updating glibc (sample output of that workflow for the test with the updated glibc is here, https://github.com/backwardsEric/angband/runs/1857512456?check_suite_focus=true )
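The `GLIBC_2.33' not found error quoted above is, in effect, a version comparison: libstdc++ in the container references a glibc symbol version newer than the glibc that is installed. A minimal sketch of that comparison follows. It assumes that `getconf`, `strings` (from binutils) and a `sort` that supports `-V` are available in the container; the function names are made up for illustration and are not part of any existing tool.

```shell
#!/bin/sh
# Hypothetical helpers; the names are invented for this sketch.

# version_lt A B: succeed if version A sorts strictly before version B.
version_lt() {
    [ "$1" != "$2" ] &&
        [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$1" ]
}

# installed_glibc: version of the glibc in use.
# getconf prints a line such as "glibc 2.32" on glibc systems.
installed_glibc() {
    getconf GNU_LIBC_VERSION | awk '{ print $2 }'
}

# required_glibc LIB: highest GLIBC_x.y version string found in LIB.
# Assumes strings(1) from binutils is installed.
required_glibc() {
    strings "$1" | grep -o 'GLIBC_[0-9][0-9.]*' | sed 's/^GLIBC_//' |
        sort -u -V | tail -n 1
}

# check_lib LIB: report whether LIB needs a newer glibc than the one installed.
check_lib() {
    have=$(installed_glibc)
    need=$(required_glibc "$1")
    if version_lt "$have" "$need"; then
        echo "$1 needs glibc $need, but $have is installed"
        return 1
    fi
    echo "$1 is satisfied by glibc $have"
}
```

Inside the container, `check_lib /usr/lib/libstdc++.so.6` would be the call that corresponds to the library named in the error message.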

A bug posted on the Arch Linux tracker, https://bugs.archlinux.org/task/69563?project=1&order=dateopened&sort=desc , seems to point at the interaction between the host and the container as the source of the latter problems: specifically how the host handles faccessat2 operations used by glibc 2.33.
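The file access problem can be probed by running the same checks twice, once with the shell's builtin `[` and once with an external test binary, and comparing the results. The sketch below is based on the kind of checks used in the broken-shell.yaml workflow but is not a copy of it; the function names and the default path /usr/bin/test are assumptions.

```shell
#!/bin/sh
# Hypothetical helpers; the names are invented for this sketch.

# flags_builtin PATH: print +x, +L, +f for each check the shell builtin passes.
flags_builtin() {
    out=""
    if [ -x "$1" ]; then out="${out}+x"; fi
    if [ -L "$1" ]; then out="${out}+L"; fi
    if [ -f "$1" ]; then out="${out}+f"; fi
    printf '%s\n' "$out"
}

# flags_external PATH [TEST_BINARY]: the same checks with an external binary.
# TEST_BINARY defaults to /usr/bin/test (an assumption about the container).
flags_external() {
    t=${2:-/usr/bin/test}
    out=""
    if "$t" -x "$1"; then out="${out}+x"; fi
    if "$t" -L "$1"; then out="${out}+L"; fi
    if "$t" -f "$1"; then out="${out}+f"; fi
    printf '%s\n' "$out"
}

# compare_access PATH: print both results and fail if they differ.
compare_access() {
    b=$(flags_builtin "$1")
    e=$(flags_external "$1")
    echo "[] on $1 $b"
    echo "/usr/bin/test on $1 $e"
    [ "$b" = "$e" ]
}
```

Calling `compare_access "$SHELL"` in the container is one way to use it. A non-zero exit status means the builtin and the external binary disagree about the same file, which is the symptom described in this report.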

Area for Triage:

Servers Containers

Question, Bug, or Feature?:

Bug

Virtual environments affected

Image version 20210131.1

Expected behavior

Either actions/checkout@v2 works from the archlinux container without updating to glibc 2.33 from 2.32 or, with an update to glibc 2.33 in the container, a file access check like [ -x $SHELL ] succeeds.

Actual behavior

actions/checkout@v2 fails with the stock glibc in the container: "/__e/node12/bin/node: /usr/lib/libc.so.6: version `GLIBC_2.33' not found (required by /usr/lib/libstdc++.so.6)". With the updated glibc (2.33-3), actions/checkout@v2 works but the tests run by https://github.com/backwardsEric/angband/blob/test-docker-failures/.github/workflows/broken-shell.yaml report

[] on /bin/bash

+f

/usr/bin/test on /bin/bash

+x

+f

after running

`echo "[] on $SHELL "`

`[ -x $SHELL ] && echo +x`

`[ -L $SHELL ] && echo +L`

`[ -f $SHELL ] && echo +f`

`echo "/usr/bin/test on $SHELL "`

`/usr/bin/test -x $SHELL && echo +x`

`/usr/bin/test -L $SHELL && echo +L`

`/usr/bin/test -f $SHELL && echo +f`

Repro steps

For the failures with cloning the repository:

For the file access issues: