Can you show some cropped-in views of the subject at full resolution? To me it looks like the photos taken from the side do not have enough depth of field, so Meshroom was unable to extract enough features from the specimen itself to work out the camera positions. In the image view there is an icon with three dots that shows the features detected in the image. Take a look to see what Meshroom detected on the specimen.

Are you able to get closer to the specimen so it takes up half the image and use focus stacking to get enough of it in focus?
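For a rough idea of how shallow the depth of field gets at this scale, here is a minimal Python sketch using the usual close-up approximation, total depth of field ≈ 2·N·c·(m+1)/m². Every number in it is an assumption of mine rather than something from your setup: an APS-C short side of about 14.8 mm, a circle of confusion of 0.019 mm, an aperture of f/8, a 30% overlap between focus slices, and a specimen about as deep as it is wide. It also ignores diffraction and pupil magnification, so treat the result as a ballpark only.

```python
import math

# Assumed values, not taken from the original post:
SENSOR_SHORT_SIDE_MM = 14.8   # approximate APS-C short side
COC_MM = 0.019                # commonly quoted APS-C circle of confusion


def magnification(subject_mm, frame_fraction, sensor_mm=SENSOR_SHORT_SIDE_MM):
    """Magnification needed for the subject to span a fraction of the frame."""
    return frame_fraction * sensor_mm / subject_mm


def macro_depth_of_field_mm(f_number, m, coc_mm=COC_MM):
    """Close-up approximation of total depth of field: 2*N*c*(m+1)/m^2."""
    return 2.0 * f_number * coc_mm * (m + 1.0) / (m * m)


def slices_needed(subject_depth_mm, dof_mm, overlap=0.3):
    """Number of focus steps so that consecutive slices overlap by `overlap`."""
    step_mm = dof_mm * (1.0 - overlap)
    return max(1, math.ceil(subject_depth_mm / step_mm))


# Hypothetical scenario: a 12 mm specimen filling half the short side at f/8.
m = magnification(subject_mm=12.0, frame_fraction=0.5)
dof = macro_depth_of_field_mm(f_number=8.0, m=m)
print(f"magnification ~{m:.2f}x, depth of field ~{dof:.2f} mm")
print(f"focus slices for a 12 mm deep subject: {slices_needed(12.0, dof)}")
```

With these assumed numbers the depth of field comes out on the order of a millimetre, far less than the 1.2 cm specimen, which is why a single exposure per view is unlikely to keep enough of it sharp.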

I do not know why you didn't get a mesh from the second attempt. The point cloud looks decent enough. Double-click on the Meshing node, which should show the mesh in the viewer. How many triangles is it reporting?
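If it is easier to check outside Meshroom, the triangle count can also be read straight from an exported OBJ file. This is only a sketch: it assumes the mesh is a plain-text Wavefront OBJ, and the function names and the example path are hypothetical. It counts `v` and `f` records and treats each face with n vertices as n - 2 triangles.

```python
def count_obj_elements(lines):
    """Count vertices, faces and equivalent triangles in OBJ text lines."""
    vertices = 0
    faces = 0
    triangles = 0
    for line in lines:
        parts = line.split()
        if not parts:
            continue
        if parts[0] == "v":
            vertices += 1
        elif parts[0] == "f":
            faces += 1
            # A polygon with n vertices splits into n - 2 triangles.
            triangles += max(0, len(parts) - 3)
    return {"vertices": vertices, "faces": faces, "triangles": triangles}


def count_obj_file(path):
    """Convenience wrapper that reads an OBJ file from disk."""
    with open(path, "r", encoding="utf-8", errors="replace") as handle:
        return count_obj_elements(handle)


# Hypothetical usage; replace the path with the actual exported mesh:
# print(count_obj_file("path/to/mesh.obj"))
```

Running it on both the Meshing output and the Texturing output would show at which step the geometry disappears.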

Are you able to share the images so I can play around with them?

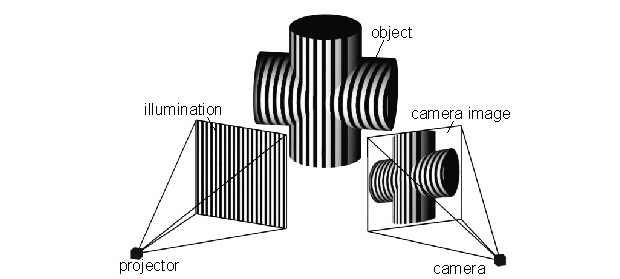

I have been having trouble getting Meshroom to properly compile images into a 3D model using photogrammetry. I have been taking pictures of several specimens using a Canon EOS 90D with a macro lens (so focal length and lens information are available for all photos). These specimens are fairly small; the largest is about 1.2 cm in diameter. There are about 150 pictures taken in several rings all around the specimen at different orientation angles (see below for reconstructed cameras). The specimen remains in the same position in every image and has merely been rotated on a turntable by about 8-9 degrees between images. The specimen also sits on a background with unique, non-repeating symbols or imagery to make image matching easier. The pictures are all very crisp and details are easy to make out, so in theory it should be relatively straightforward for Meshroom to match the images.

Nevertheless, Meshroom has consistently been unable to produce models of these specimens. I have photographed them on two different occasions and tried to create models for multiple specimens, without success.

On the first attempt, despite the specimen being in focus and sharply defined in each photo, Meshroom simply failed to reconstruct cameras for about half of the total images.

This led to a lot of gaps in the model and a really distorted final product.

I tried taking pictures of the same specimen again from a different orientation and I did get all of the cameras (see picture below).

The StructureFromMotion model for this attempt looked better, but when I opened the resulting mesh (taken from the Texturing subfolder of the MeshroomCache folder) in MeshLab, it was almost empty and had only a few triangles. Additionally, I get an error saying "the following textures have not been loaded: texture_1001.exr", which suggests a texture file was not output from Meshroom.

I tried this again with a second specimen and got similar results. Again, all 164 cameras were reconstructed (see image), and the StructureFromMotion model suggested the result would turn out reasonably well.

But once the process had finished and I opened the resulting model in MeshLab, there was nothing there but a few triangles.

I am unsure as to what is going wrong. I have been fairly diligent about doing things to improve mesh correlation and creation, but it doesn't seem to work. Notably, I've been able to get Meshroom to work with photographs of large objects from a distance and screenshots of a 3D model, but I haven't been able to get it to work with photos of these smaller specimens.

Desktop (please complete the following and other pertinent information):