I didn't use any smoothing, but if you combine the data from the two eyes you will get better accuracy. You can look at my vision_physiology repo for a simple example.
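Here is a minimal sketch of combining the two eyes. It assumes each eye already yields a 2D gaze point in pixels. `combine_eye_gaze` and its `w_left` weight are hypothetical names, not taken from this repo or from vision_physiology. A weighted average is only one simple way to merge them. If the two eyes' errors are roughly independent, averaging partly cancels the per-eye noise.

```python
from typing import Tuple

Point2D = Tuple[float, float]


def combine_eye_gaze(left: Point2D, right: Point2D,
                     w_left: float = 0.5) -> Point2D:
    """Blend the per-eye gaze points into one estimate (hypothetical helper).

    w_left is the weight given to the left eye, in [0, 1]; the right eye
    gets 1 - w_left. The default of 0.5 is a plain average of both eyes.
    """
    if not 0.0 <= w_left <= 1.0:
        raise ValueError("w_left must be in [0, 1]")
    w_right = 1.0 - w_left
    return (w_left * left[0] + w_right * right[0],
            w_left * left[1] + w_right * right[1])
```

For example, `combine_eye_gaze((100.0, 200.0), (110.0, 190.0))` returns `(105.0, 195.0)`. An uneven `w_left` might help when one eye is tracked less reliably, for example at large head angles. Treat that as an assumption to test, not a rule.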

The nose is the reference point (0,0,0), and the Z-axis is the depth. Try looking at my points for reference. And cool thinking, using Blender! The eyeball center comes from eye physiology: the distance from the cornea to the center of the eyeball. There are some great medical papers about it, and the distance is practically the same in most humans. You can try using different sets of points with different scaling.
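The two ideas above, the nose as origin and a fixed cornea-to-center distance, can be sketched in plain Python. The sketch makes three assumptions. Points are `(x, y, z)` tuples in the model's units. +Z points out of the face toward the camera. `distance` is a value you take from the physiology literature. The helper names are hypothetical and are not the repo's code.

```python
from typing import Iterable, List, Tuple

Point3D = Tuple[float, float, float]


def scale_about_nose(points: Iterable[Point3D], factor: float) -> List[Point3D]:
    """Uniformly scale model points about the origin (the nose tip).

    Because the nose is (0, 0, 0), multiplying every coordinate by the
    same factor keeps the nose fixed and preserves the face's proportions.
    """
    return [(factor * x, factor * y, factor * z) for x, y, z in points]


def eyeball_center_from_cornea(cornea: Point3D, distance: float,
                               forward: Point3D = (0.0, 0.0, 1.0)) -> Point3D:
    """Place the eyeball center `distance` units behind the cornea.

    ASSUMPTION: `forward` is a unit vector pointing out of the eye
    (here +Z, toward the camera); flip its sign if your model's depth
    axis points the other way. `distance` is a hypothetical input that
    must be in the same units as the model points.
    """
    return tuple(c - distance * f for c, f in zip(cornea, forward))
```

If you rescale the face points, scale `distance` by the same factor so the eyeball center stays consistent with the rest of the model.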

Good luck

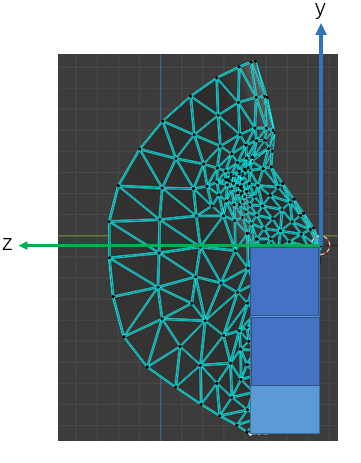

Then I verified the proportion in the 2D plane with a square, and the proportion seems closer to 2.691.

Then I verify the proportion in 2d plane with square, it seems its proportion is closer to 2.691

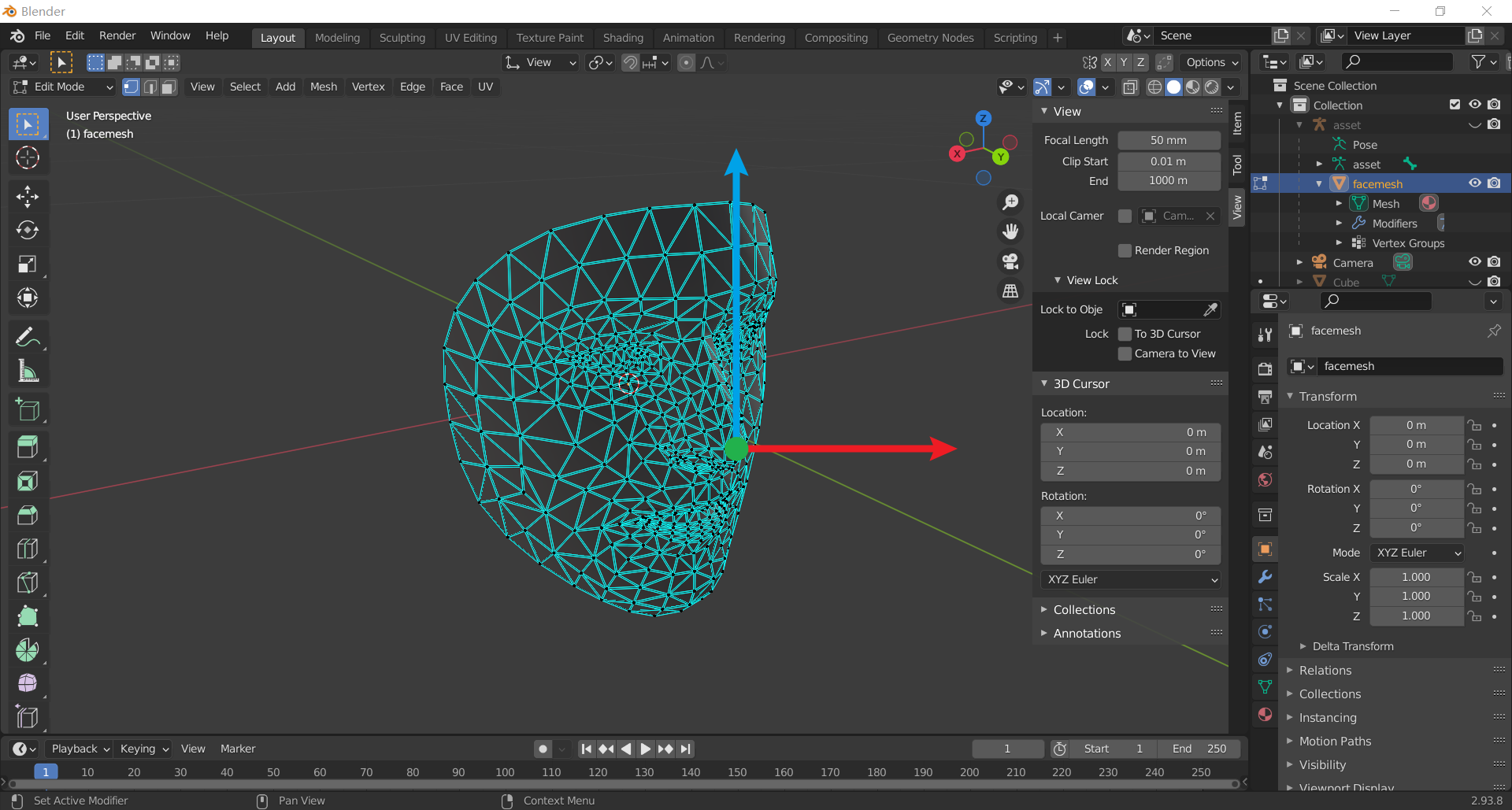

The model I used in Blender is:

The model in blender I used is

Hi, @amitt1236, your implementation of gaze estimation is the most accurate I have seen so far! After studying the code, I have some questions:

I ran the code on my computer and recorded the output in the video below: https://streamja.com/49vwG. As shown in the video, my thicker red line jitters a lot. Do you apply any smoothing strategy to the gaze point?

Your code provides some standard 3D face model points, `model_points` and `Eye_ball_center_left`. How did you get these coordinates? I am thinking of changing the coordinate system, for example by rotating it about the z-axis:

How can I get the new coordinates of these points? (Actually, this is the first time I've used Blender.)
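For the z-axis rotation, here is a minimal sketch. It assumes the points are `(x, y, z)` tuples with the nose at the origin. `rotate_about_z` is a hypothetical helper, not part of the repo. It applies the standard rotation matrix about Z, so x and y change while the depth z stays the same.

```python
import math
from typing import Iterable, List, Tuple

Point3D = Tuple[float, float, float]


def rotate_about_z(points: Iterable[Point3D], angle_deg: float) -> List[Point3D]:
    """Rotate points by angle_deg about the Z axis through the origin.

    Positive angles are counter-clockwise when looking from +Z toward the
    origin (right-handed axes). The nose, at (0, 0, 0), stays fixed and
    the depth coordinate z is unchanged.
    """
    a = math.radians(angle_deg)
    c, s = math.cos(a), math.sin(a)
    # [x']   [c -s  0] [x]
    # [y'] = [s  c  0] [y]
    # [z']   [0  0  1] [z]
    return [(c * x - s * y, s * x + c * y, z) for x, y, z in points]
```

Apply the same rotation to `model_points` and to `Eye_ball_center_left` so they stay consistent. Mind the direction. Rotating the coordinate axes by an angle θ gives the same new coordinates as rotating the points by -θ. Pass the negated angle if you are rotating the frame rather than the face.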