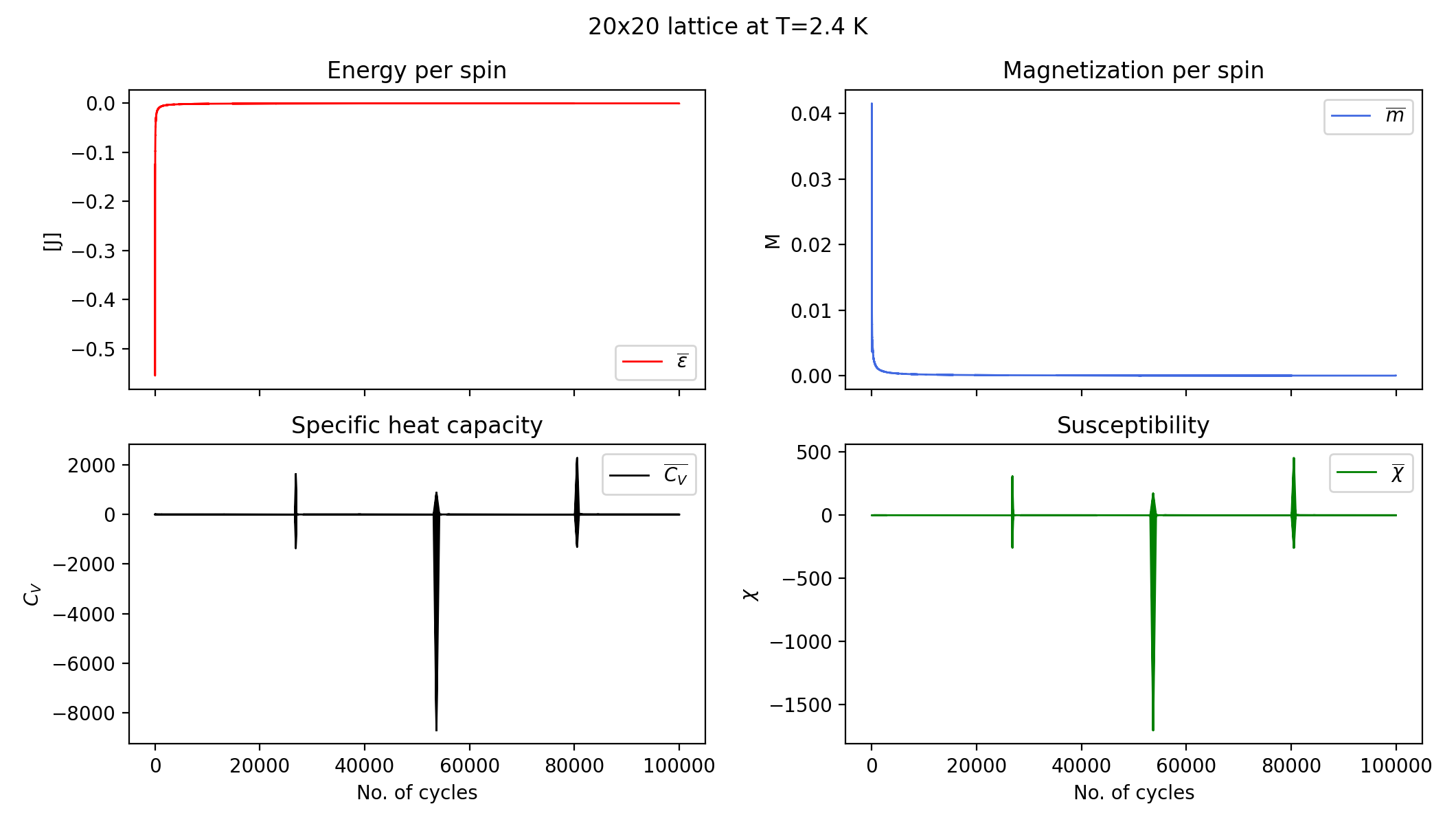

Never mind the plots. They are from a different run, I realized. The ones I get now are even worse...

Closed JohanCarlsen closed 1 year ago

Never mind the plots. They are from a different run, I realized. The ones I get now are even worse...

Hi @JohanCarlsen!

I haven't looked too long at this, but one thing that I noticed (that I don't think is the source of the problem): I assume the point of adding n_threads in seed + n_threads was to give the random number generator on each thread its own seed? But then you should add the unique thread number of the given thread, not the total number of threads (n_threads). See the example here: https://github.com/anderkve/FYS3150/blob/master/code_examples/random_number_generation/main_omp_2.cpp

Yes, I have done that already =)

In general I find the way the code above combines the contributions from different threads a bit confusing... Have you checked if the results make sense when you run it with a single thread? (export OMP_NUM_THREADS=1) Just as a check that the problem really is with the above OpenMP code, and not something else?

No, I have not. I copy pasted the code (that works) from my non-parallelized code and started implementing it.

I tried with one thread, for a 2x2 lattice with this code:

ouble E = 0;

double M = 0;

double sumE = 0;

double sumEE = 0;

double sumM = 0;

double sumMM = 0;

double heatCap = 0;

double X = 0;

double avgEps, avgEps_sqrd, avgM, avgM_sqrd;

mat lattice = initialize_lattice(L, T, E, M, ordered);

mt19937 generator;

unsigned int seed = std::chrono::system_clock::now().time_since_epoch().count();

generator.seed(seed);

// Start parallell region

#pragma omp parallel

{

const int n_threads = omp_get_num_threads();

const int threadID = omp_get_thread_num();

const double weight = 1. / n_threads;

mt19937 threadGen;

unsigned int threadSeed = seed + threadID;

threadGen.seed(threadSeed);

mat threadLattice = lattice;

// Variables to be calculated before averaged across threads

double threadE = E;

double threadM = M;

double threadSumE = 0;

double threadSumEE = 0;

double threadSumM = 0;

double threadSumMM = 0;

for (int n = 1; n <= nCyclesPerThread; n++)

{

mcmc(threadLattice, threadGen, L, threadE, threadM, T);

threadSumE += threadE / n;

threadSumEE += (threadE * threadE) / n;

threadSumM += fabs(threadM) / n;

threadSumMM += (threadM * threadM) / n;

// Average across threads

#pragma omp critical

{

sumE += weight * threadSumE;

sumEE += weight * threadSumEE;

sumM += weight * threadSumM;

sumMM += weight * threadSumMM;

avgEps = sumE / N;

avgEps_sqrd = sumEE / (N * N);

avgM = sumM / (N);

avgM_sqrd = sumMM / (N * N);

heatCap = N * (avgEps_sqrd - avgEps * avgEps) / (T * T);

X = N * (avgM_sqrd - avgM * avgM) / T;

outfile << setw(width) << n

<< setw(width) << avgEps

<< setw(width) << avgM

<< setw(width) << heatCap

<< setw(width) << X

<< endl;

}

}

}which resulted in this plot:

Then we at least know that the problem isn't only the parallelisation itself, since these plots don't make much sense :)

But I also noticed one potential issue with the parallelisation: I think there is a thread safety issue ("race condition") in the above code. The result you write to file will only make sense if e.g. the avgEps value really corresponds to the value after n cycles. But a problem is that you have n_threads different threads each running through their own cycle loop, possibly at different speeds. Each thread adjusts the common variables sumE and avgEps and writes these to file. But if different threads run at slightly different speeds, the values of the common variables sumE and avgEps won't be linked to the current cycle number n of the current thread.

So I'm afraid my suggestion is to first figure out why you get the strange plots above when running with a single thread, and afterwards reconsider the parallelisation a bit. Actually, I'm not sure it makes too much sense to parallelise this particular problem in a "fancy" way... What you are after here is to see the value of these four quantities for each specific number of cycles. All you would get from your current parallelisation attempt would be to rather get an average over threads of each quantity, at each number of cycles. But that you could also get "offline": So I'd suggest to rather run a series of single-thread runs, that each saves its own dataset. Then you could plot these runs as individual graphs in the same plot, and also plot the average graph. That would give you the same result as your current code is aiming for, with the additional info of seeing the variation between the individual runs.

I think I figured it out:

In the code, the call to mcmc() changes the arguments, i.e. the energy and magnetization of each thread. I was summing up these values double (at least). Now the plot look like this:

Which absolutely looks way better! =)

BUT I still wonder about the "race condition"; I follow your logic, but can I fix it within a single loop? Or... Does it matter now that my plot looks better?

That certainly looks better :)

BUT I still wonder about the "race condition"; I follow your logic, but can I fix it within a single loop? Or... Does it matter now that my plot looks better?

I'm calling it a night now, so not sure... :) But I think my recommendation to avoid the race condition issue would be to simply have the different threads run completely independent MCMC runs (i.e. like in parallelisation strategy 2 from the note in problem 7) and save their results independently. (That is, no weighting with 1/n_threads, no combination across threads during the loops, etc.) For instance, to save the average-energy-per-spin result you could simply have a common armadillo matrix of size (n_threads, nCyclesPerThread), so that during the loops each thread writes its values to its designated row in this matrix. Then after the parallelisation region you can use that matrix to do averages across threads if you want. But I would recommend to simply save that matrix to file, and then do the average across the different runs when plotting, as described above.

Hi! I'm trying to implement

OpenMP, but I'm struggling. Here's how my parallelized block looks like:The resulting plots are:

Obviously, there is something wrong in the "adding-up" part, since the energy is increasing, but I really can't seem to figure out where/why.