This old NDArray is based on the previous interface that I've been working on replacing. @mwillsey and I have been redefining NDArray to use TVM's underlying object-based NDArray container. See #6408 for the in-progress work on this.

Open 12101111 opened 3 years ago

@12101111 @mwillsey can you land a quick hotfix?

@jroesch please follow up to see if https://github.com/apache/incubator-tvm/pull/6563 fixed the issue.

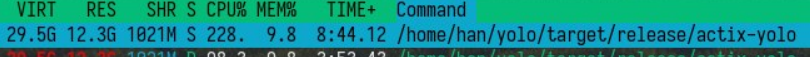

I got a SIGSEGV after updating.

* thread #3, name = 'actix-yolo', stop reason = signal SIGSEGV: address access protected (fault address: 0x7fff69200000)

frame #0: 0x0000555555970a2f actix-yolo`core::ptr::write::hbe55587142e7e2c0(dst=0x00007fff69200000, src=0.501960814) at mod.rs:901:51

898 abort();

899 }

900 // SAFETY: the caller must uphold the safety contract for `move_val_init`.

-> 901 unsafe { intrinsics::move_val_init(&mut *dst, src) }

902 }

903

904 /// Overwrites a memory location with the given value without reading or

(lldb) bt

error: need to add support for DW_TAG_base_type '()' encoded with DW_ATE = 0x7, bit_size = 0

error: need to add support for DW_TAG_base_type '()' encoded with DW_ATE = 0x7, bit_size = 0

error: need to add support for DW_TAG_base_type '()' encoded with DW_ATE = 0x7, bit_size = 0

error: need to add support for DW_TAG_base_type '()' encoded with DW_ATE = 0x7, bit_size = 0

error: need to add support for DW_TAG_base_type '()' encoded with DW_ATE = 0x7, bit_size = 0

* thread #3, name = 'actix-yolo', stop reason = signal SIGSEGV: address access protected (fault address: 0x7fff69200000)

* frame #0: 0x0000555555970a2f actix-yolo`core::ptr::write::hbe55587142e7e2c0(dst=0x00007fff69200000, src=0.501960814) at mod.rs:901:51

frame #1: 0x0000555555895f75 actix-yolo`core::ptr::mut_ptr::_$LT$impl$u20$$BP$mut$u20$T$GT$::write::hce983f736b25e62d(self=0x00007fff69200000, val=0.501960814) at mut_ptr.rs:866:18

frame #2: 0x00005555558751d3 actix-yolo`tvm_rt::ndarray::NDArray::fill_from_iter::_$u7b$$u7b$closure$u7d$$u7d$::h678cc9af6dcf1abe(x=0.501960814) at ndarray.rs:312:13

frame #3: 0x0000555555885371 actix-yolo`core::iter::traits::iterator::Iterator::for_each::call::_$u7b$$u7b$closure$u7d$$u7d$::hac933476f2144489((null)=<unavailable>, item=0.501960814) at iterator.rs:646:29

frame #4: 0x0000555555882c4a actix-yolo`core::iter::adapters::copy_fold::_$u7b$$u7b$closure$u7d$$u7d$::hdab6231bbdf9896b(acc=<unavailable>, (null)=0x00007fffdd176420) at mod.rs:166:22

frame #5: 0x00005555558863f1 actix-yolo`_$LT$ndarray..iterators..ElementsBase$LT$A$C$D$GT$$u20$as$u20$core..iter..traits..iterator..Iterator$GT$::fold::_$u7b$$u7b$closure$u7d$$u7d$::hdfeb4d762a866e39(acc=<unavailable>, ptr=0x00007fffdd176420) at mod.rs:219:56

frame #6: 0x00005555558858d5 actix-yolo`_$LT$ndarray..iterators..Baseiter$LT$A$C$D$GT$$u20$as$u20$core..iter..traits..iterator..Iterator$GT$::fold::h026b07436c2a02f5(self=Baseiter<f32, ndarray::dimension::dim::Dim<ndarray::dimension::dynindeximpl::IxDynImpl>> @ 0x00007fffea3d8690, init=<unavailable>, g=closure-0 @ 0x00007fffea3d8470) at mod.rs:90:29

frame #7: 0x000055555588631b actix-yolo`_$LT$ndarray..iterators..ElementsBase$LT$A$C$D$GT$$u20$as$u20$core..iter..traits..iterator..Iterator$GT$::fold::h769d0092fb0ddaee(self=<unavailable>, init=<unavailable>, g=closure-0 @ 0x00007fffea3d8720) at mod.rs:219:18

frame #8: 0x0000555555887c0e actix-yolo`_$LT$ndarray..iterators..Iter$LT$A$C$D$GT$$u20$as$u20$core..iter..traits..iterator..Iterator$GT$::fold::he5a9afd704b7f214(self=(inner = ndarray::iterators::ElementsRepr<core::slice::Iter<f32>, ndarray::iterators::ElementsBase<f32, ndarray::dimension::dim::Dim<ndarray::dimension::dynindeximpl::IxDynImpl>>> @ 0x00007fffea3d88b8), init=<unavailable>, g=closure-0 @ 0x00007fffea3d8860) at mod.rs:392:37

frame #9: 0x0000555555882db4 actix-yolo`_$LT$core..iter..adapters..Copied$LT$I$GT$$u20$as$u20$core..iter..traits..iterator..Iterator$GT$::fold::hd820c195eaf254d1(self=Copied<ndarray::iterators::Iter<f32, ndarray::dimension::dim::Dim<ndarray::dimension::dynindeximpl::IxDynImpl>>> @ 0x00007fffea3d8990, init=<unavailable>, f=closure-0 @ 0x00007fffea3d8950) at mod.rs:202:9

frame #10: 0x000055555588237a actix-yolo`core::iter::traits::iterator::Iterator::for_each::hec458ad0d24e40a0(self=Copied<ndarray::iterators::Iter<f32, ndarray::dimension::dim::Dim<ndarray::dimension::dynindeximpl::IxDynImpl>>> @ 0x00007fffea3d8b90, f=closure-0 @ 0x00007fffea3d8a20) at iterator.rs:649:9

frame #11: 0x0000555555875149 actix-yolo`tvm_rt::ndarray::NDArray::fill_from_iter::h045c8fb277991bbf(self=0x00007fffea3d8d10, iter=Copied<ndarray::iterators::Iter<f32, ndarray::dimension::dim::Dim<ndarray::dimension::dynindeximpl::IxDynImpl>>> @ 0x00007fffea3d8d20) at ndarray.rs:311:9

frame #12: 0x00005555558753b6 actix-yolo`tvm_rt::ndarray::NDArray::from_rust_ndarray::h34c9a7a22d85e017(input_nd=0x00007fffea3d9870, ctx=(device_type = GPU, device_id = 0), dtype=(code = '\x02', bits = ' ', lanes = 1)) at ndarray.rs:350:9

......

The problem happened in https://github.com/apache/incubator-tvm/blob/277bfc86aac94442625e8307aa55a9215e2fddd6/rust/tvm-rt/src/ndarray.rs#L350

I changed it back to the old implementation:

    /// Converts a Rust ndarray to a TVM NDArray.
    pub fn from_rust_ndarray<T: Num32 + Copy>(
        input_nd: &ArrayD<T>,
        ctx: Context,
        dtype: DataType,
    ) -> Result<Self, NDArrayError> {
        let shape: Vec<i64> = input_nd.shape().iter().map(|&x| x as i64).collect();
        let mut nd = NDArray::empty(&shape, ctx, dtype);
        //nd.fill_from_iter(input_nd.iter().copied());
        use std::iter::FromIterator;
        let mut buf = Array::from_iter(input_nd.into_iter().map(|v| *v));
        nd.copy_from_buffer(
            buf.as_slice_mut()
                .expect("Array from iter must be contiguous."),
        );
        Ok(nd)
    }

And I still got a memory leak.
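For reference, the workaround in `from_rust_ndarray` above swaps a per-element write for "collect into one contiguous host buffer, then do a single bulk copy". The backtrace (a `ptr::write` with `ctx` set to GPU) suggests, though does not prove, that the element-wise path writes through a pointer the host cannot address. Below is a minimal std-only sketch of the staging pattern. `DeviceBuffer` and `fill_via_staging` are hypothetical names made up for illustration; they are not part of `tvm-rt`, and the "device" here is just a `Vec`.

```rust
/// Hypothetical stand-in for a device-side tensor (NOT a tvm-rt type).
/// The host is only allowed to reach its storage through a bulk copy.
pub struct DeviceBuffer {
    storage: Vec<f32>, // pretend this lives in device memory
}

impl DeviceBuffer {
    /// Allocate a zero-filled buffer of `len` elements.
    pub fn empty(len: usize) -> Self {
        DeviceBuffer { storage: vec![0.0; len] }
    }

    /// Bulk copy from a contiguous host slice. A length mismatch is
    /// reported as an error instead of writing out of bounds.
    pub fn copy_from_buffer(&mut self, host: &[f32]) -> Result<(), String> {
        if host.len() != self.storage.len() {
            return Err(format!(
                "size mismatch: host has {} elements, device has {}",
                host.len(),
                self.storage.len()
            ));
        }
        self.storage.copy_from_slice(host);
        Ok(())
    }

    /// Read the contents back to the host (for inspection only).
    pub fn to_vec(&self) -> Vec<f32> {
        self.storage.clone()
    }
}

/// Stage an arbitrary iterator into one contiguous host buffer,
/// then hand it to the device in a single bulk copy.
pub fn fill_via_staging<I>(dst: &mut DeviceBuffer, iter: I) -> Result<(), String>
where
    I: IntoIterator<Item = f32>,
{
    let staged: Vec<f32> = iter.into_iter().collect();
    dst.copy_from_buffer(&staged)
}
```

The extra host allocation is the cost of this approach; in exchange, the only code that touches the destination is the one copy routine that knows where the memory lives.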

This new memory leak is caused by

The reference count of every NDArrayContainer is still 1 after drop.

@jroesch can you please confirm if the issue has been fixed?

I recently ran into SIGSEGV and SIGBUS errors, as well as general stability issues, which I summed up here: https://discuss.tvm.apache.org/t/deploying-opencl-on-apple-hardware-metal-sigsegv-and-sigbus/10642/6. I'm hoping that this leak is what's causing those issues. Can we get an update here? Thanks!

Is this memory leak issue fixed?

See https://discuss.tvm.apache.org/t/memory-and-vram-leak-using-rust-frontend/7990

I have found that every `NDArray` created in Rust is `NDArray::Borrowed`, so they are never freed. If I remove the check, the memory and VRAM leak no longer happens.
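To illustrate the failure mode described above, here is a small std-only model of a handle whose `Drop` only releases the `Owned` variant. Everything in it is an assumption made for illustration: `Handle`, `alloc_borrowed`, and `alloc_owned` are hypothetical names, and the "allocation" is just a shared counter. It does not reproduce the actual `tvm-rt` code.

```rust
use std::cell::Cell;
use std::rc::Rc;

/// Hypothetical handle type (NOT the tvm-rt NDArray). Each variant carries
/// a shared counter of live allocations standing in for real memory.
pub enum Handle {
    Borrowed(Rc<Cell<usize>>),
    Owned(Rc<Cell<usize>>),
}

impl Handle {
    /// Allocate, but tag the handle as borrowed (the suspected bug).
    pub fn alloc_borrowed(live: &Rc<Cell<usize>>) -> Self {
        live.set(live.get() + 1);
        Handle::Borrowed(Rc::clone(live))
    }

    /// Allocate and tag the handle as owned.
    pub fn alloc_owned(live: &Rc<Cell<usize>>) -> Self {
        live.set(live.get() + 1);
        Handle::Owned(Rc::clone(live))
    }
}

impl Drop for Handle {
    fn drop(&mut self) {
        // The check: only an Owned handle releases its allocation.
        // A Borrowed handle assumes some other owner will free it.
        if let Handle::Owned(live) = self {
            live.set(live.get() - 1);
        }
    }
}
```

If a constructor allocates fresh memory but returns the borrowed variant, nothing ever releases it, which would match the observation that removing the check makes the leak go away. Removing the check is only safe if no handle really is borrowed from another owner; otherwise it trades a leak for a double free.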

cc @ehsanmok