Another possible avenue (as suggested by @fubhy), rather than magic `.pre`/`.post` properties, could be to simply pass a resolver executer and let the user take care of running validations before/after:

```js
// `isLoggedIn` and `isAuthor` stand for app-specific helpers
const permissionMap = {
  Post: {
    // The first argument is a function that runs this field's resolver on demand
    notes: (resolverExecuter, args, context) => {
      // Check that needs no data: bail out before touching the database
      if (!isLoggedIn(context.user)) return false;

      // Execute the resolver
      const data = resolverExecuter();

      // Check that needs the resolved data
      return isAuthor(data);
    },
  },
};
```

`resolverExecuter` here is a closure around each resolver that takes care of passing the right variables to it and returning the unchanged data to GraphQL (otherwise the `permissionMap` could manipulate the data). It would also keep track of its executed state: if the resolver hasn't been executed and the permission function returned true, it would execute the resolver itself. Something to this effect:

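```js
// Runs inside a wrapper around each field resolver, which receives (obj, args, context, info)
let executed = false;
let data;

// Closure that calls the real resolver with the right arguments and remembers that it ran
const resolverExecuter = () => {
  data = field.resolver(obj, args, context, info);
  executed = true;
  return data;
};

if (field.permission(resolverExecuter, args, context)) {
  // The permission function may not have needed the data, so run the resolver now
  if (!executed) data = field.resolver(obj, args, context, info);

  // Always return the resolver's own data, never the permission function's result
  return data;
}

throw new Error("Permission denied");
```

That way the developer has full control over when to run which validations and which data they need, rather than relying on magic properties.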
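A self-contained sketch of the executer approach, assuming a hypothetical `withPermission` helper that wraps a single resolver (not an existing graphql-tools API). The `posts` data and the `onlyAuthor` check are made up for illustration. One small difference from the snippet above: this executer caches the resolver's result, so a permission function that calls it twice does not run the resolver twice.

```js
// Hypothetical helper (not a graphql-tools API): wraps one resolver so that a
// permission function decides access and can run the resolver on demand.
function withPermission(resolver, permission) {
  return (obj, args, context, info) => {
    let executed = false;
    let data;

    // Runs the real resolver at most once and caches its result
    const resolverExecuter = () => {
      if (!executed) {
        data = resolver(obj, args, context, info);
        executed = true;
      }
      return data;
    };

    if (!permission(resolverExecuter, args, context)) {
      throw new Error("Permission denied");
    }

    // Return the resolver's data (running it now if the check didn't need it),
    // never the permission function's return value
    return resolverExecuter();
  };
}

// Made-up data and resolver for illustration
const posts = { 1: { id: 1, authorId: "alice", notes: "draft ideas" } };
const postResolver = (obj, args) => posts[args.id];

// Pre-check needs no data; post-check inspects what the resolver returned
const onlyAuthor = (resolverExecuter, args, context) => {
  if (!context.user) return false;
  const loaded = resolverExecuter();
  return loaded !== undefined && loaded.authorId === context.user.id;
};

const securedPost = withPermission(postResolver, onlyAuthor);
```

Calling `securedPost(null, { id: 1 }, { user: { id: "alice" } })` returns the post, while an anonymous or different user gets a "Permission denied" error. The sketch is synchronous; real resolvers often return promises, in which case the permission function would need to `await resolverExecuter()` and the wrapper would need to await the permission result.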

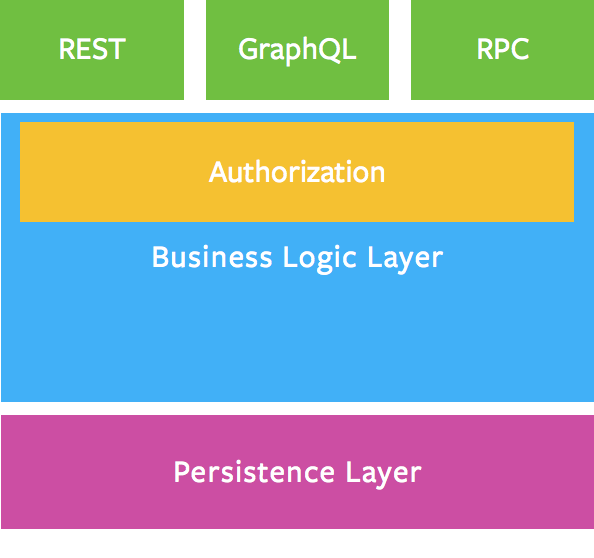

Authorization in GraphQL is (as noted by @helfer in this post) currently not a generally solved problem. Everybody has their own custom solution that works for them, but there's no general-purpose solution or library for it (as far as I can tell).

I think `graphql-tools` is uniquely positioned to fill that hole in the community. Permissions are naturally bound to the schema of the requested data, so to me it feels like a natural fit for `graphql-tools`. (Note that this is just an idea; I wanted to kick off a discussion.)

Imagine a simple type like this:

There are two types of permissions I want to enforce here. One of them I can check without running the resolvers first (only readable by the logged-in user); for the other I need the data from the database, so it has to run after the resolvers (only accessible by the author).

I could imagine an API like this working out well:

Similar to `resolvers`, this `permissionMap` would map to the schema:

Depending on whether you return a truthy or a falsy value from these functions, folks get access or a "Permission denied" error. The functions get the same information as resolvers (i.e. `obj`, `args` and `context`).

This works for the pre-database case (only logged-in users should be able to access), since I could do something like this:
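A rough sketch of how a pre-resolver `permissionMap` could be applied, assuming a hypothetical `applyPermissions` helper (not an existing graphql-tools API) and a resolver map whose fields are all plain functions. The `isLoggedIn` helper and the `Post` resolvers are made up for illustration.

```js
// Hypothetical helper: wraps every resolver that has an entry in `permissionMap`.
// Permission functions get the same (obj, args, context) as resolvers and run
// *before* the resolver.
function applyPermissions(resolvers, permissionMap) {
  const wrapped = {};
  for (const [typeName, fields] of Object.entries(resolvers)) {
    wrapped[typeName] = {};
    for (const [fieldName, resolver] of Object.entries(fields)) {
      const permission = permissionMap[typeName] && permissionMap[typeName][fieldName];
      wrapped[typeName][fieldName] = permission
        ? (obj, args, context, info) => {
            // Falsy result: deny before the resolver (and the database) is touched
            if (!permission(obj, args, context)) {
              throw new Error("Permission denied");
            }
            return resolver(obj, args, context, info);
          }
        : resolver; // fields without a permission entry are left as-is
    }
  }
  return wrapped;
}

// Made-up helper and maps for illustration
const isLoggedIn = (user) => Boolean(user);

const resolvers = {
  Post: {
    title: (post) => post.title,
    notes: (post) => post.notes,
  },
};

const permissionMap = {
  Post: {
    notes: (obj, args, context) => isLoggedIn(context.user),
  },
};

const secured = applyPermissions(resolvers, permissionMap);
```

The wrapped map has the same shape as `resolvers`, so it could be handed to schema construction in place of the original one. Because the check runs first, an anonymous request for `notes` never reaches the resolver.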

Now the issue is post-resolver permission functions. I don't have a good idea how to handle these right now, but maybe something like this could work, where each "path" has two magic properties (`.pre`, `.post`) which run those functions before and after the resolver:

What do you think? Any previous discussions I'm missing?
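For comparison, the `.pre`/`.post` variant could be sketched roughly as follows. This assumes a hypothetical `withPrePost` helper (not a graphql-tools API), that `.pre` gets `(obj, args, context)` like a resolver, and that `.post` gets the resolved value as its first argument; the `posts` data is made up.

```js
// Hypothetical helper: `pre(obj, args, context)` runs before the resolver,
// `post(data, args, context)` runs after it with the resolved value.
// Either property may be omitted.
function withPrePost(resolver, { pre, post } = {}) {
  return (obj, args, context, info) => {
    if (pre && !pre(obj, args, context)) {
      throw new Error("Permission denied");
    }
    const data = resolver(obj, args, context, info);
    if (post && !post(data, args, context)) {
      throw new Error("Permission denied");
    }
    return data;
  };
}

// Made-up data for illustration
const posts = { 1: { id: 1, authorId: "alice", notes: "draft ideas" } };

const postPermissions = {
  // Before the resolver: no database access needed
  pre: (obj, args, context) => Boolean(context.user),
  // After the resolver: needs the loaded post to know its author
  post: (data, args, context) => data !== undefined && data.authorId === context.user.id,
};

const securedPost = withPrePost((obj, args) => posts[args.id], postPermissions);
```

The trade-off versus the executer approach is that a failing `.post` check only runs after the resolver has already done its work, and the order of checks is fixed by the helper rather than chosen by the permission function.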