Here is the cause: GDAX has a rate limit of 3 to 6 requests per second. The code in gdax.js, in the method getTrades, ignores that and blasts through the limit. When the API returns 429 (rate limit exceeded), the code retries the whole thing, so the import never completes.
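A rough sketch of one way around this, not the actual gdax.js code: space the requests out so they stay under the limit, and on a 429 retry only the request that failed instead of restarting everything. The names `createThrottledFetcher` and `fetchPage`, the `status` field on the response, and the 350 ms spacing (just under 3 requests per second) are all assumptions for illustration.

```javascript
// Hypothetical helper, not taken from gdax.js.
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Wraps a request function so that calls start at least `minIntervalMs` apart,
// and a 429 response retries only that one request after a growing backoff.
// `fetchPage` is assumed to resolve to an object with a numeric `status` field.
function createThrottledFetcher(
  fetchPage,
  { minIntervalMs = 350, maxRetries = 5, backoffMs = 1000 } = {}
) {
  let nextSlot = 0; // earliest timestamp at which the next request may start

  return async function throttledFetch(params) {
    for (let attempt = 0; attempt <= maxRetries; attempt++) {
      // Reserve the next free time slot, then wait until it arrives.
      const now = Date.now();
      const wait = Math.max(0, nextSlot - now);
      nextSlot = Math.max(now, nextSlot) + minIntervalMs;
      if (wait > 0) await sleep(wait);

      const response = await fetchPage(params);
      if (response.status !== 429) return response;

      // Rate limited: back off, then retry this request only.
      await sleep(backoffMs * (attempt + 1));
    }
    throw new Error('Rate limit retries exhausted');
  };
}
```

With something like this, the paging loop in getTrades could call the wrapped function once per page, so a single 429 costs one delayed request rather than a full restart of the import.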

So uh. Is there any way to do this faster? :/

I'm submitting a ... [x] bug report

Action taken (what you did): Tried to import GDAX data; nothing happens.

Expected result (what you hoped would happen): The import works like it does for any other exchange.

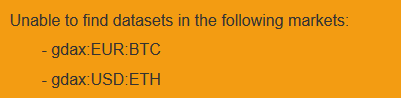

Actual result (unexpected outcome)

Other information (e.g. detailed explanation, stacktraces, related issues, suggestions how to fix, links for us to have context, eg. stackoverflow, etc): No idea