You seem to be opening recorders for all sound cards simultaneously. That causes a lot of thrashing and stalling between the sound cards, and the delays will inexorably rise.

Try recording from one sound card at a time, and see if the issue persists.
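A minimal sketch of that test, assuming the SoundCard `recorder()`/`record()` API. The helper name `record_each_separately` and the sample rate and frame count are illustrative choices, not part of the original script. Each recorder is closed before the next one is opened, so at most one device stream is active at a time.

```python
def record_each_separately(microphones, samplerate=48000, numframes=48000):
    """Record from each microphone in turn, never holding two recorders open.

    `microphones` is any iterable of SoundCard-style microphone objects that
    have a `.name` and a `.recorder(samplerate=...)` context manager whose
    recorder exposes `.record(numframes=...)`.
    Returns a dict mapping microphone name to the recorded frames.
    """
    results = {}
    for mic in microphones:
        # The `with` block closes this recorder before the loop opens the next one.
        with mic.recorder(samplerate=samplerate) as rec:
            results[mic.name] = rec.record(numframes=numframes)
    return results


if __name__ == "__main__":
    import soundcard as sc

    # include_loopback=True also lists loopback (monitor) devices of speakers.
    mics = sc.all_microphones(include_loopback=True)
    for name, data in record_each_separately(mics).items():
        print(f"{name}: {len(data)} frames")
```

If the headset delay disappears when it is the only device being recorded, that points at the simultaneous streams rather than at the headset itself.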

Regardless, this is likely a configuration issue in PulseAudio, not in SoundCard.

The timings stay roughly constant, but the headset is still delayed by about 3 s. Playing the audio through other channels does not delay it, and (when using Panon) selecting only the headset does not delay the audio either.


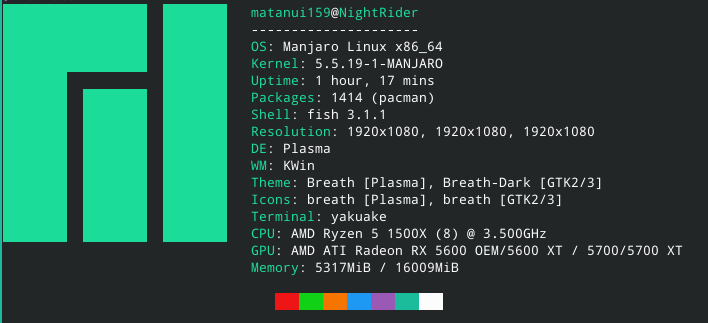
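For comparison, per-block timings like these can be collected with a sketch such as the following. It assumes a SoundCard recorder that is already open; the helper name `time_record_blocks`, the default microphone, and the block size and count are placeholders (substitute the headset's device). Steady timings only show that recording keeps pace with real time: a blocking `record()` call returns at the stream's rate regardless of how old its samples are, so a constant 3 s offset would not necessarily show up here.

```python
import time


def time_record_blocks(recorder, samplerate, blocksize, nblocks):
    """Time successive record() calls on an already-open recorder.

    Returns a list of (elapsed_seconds, expected_seconds) per block, where
    expected_seconds = blocksize / samplerate is the real-time length of one block.
    """
    expected = blocksize / samplerate
    timings = []
    for _ in range(nblocks):
        start = time.monotonic()
        recorder.record(numframes=blocksize)
        timings.append((time.monotonic() - start, expected))
    return timings


if __name__ == "__main__":
    import soundcard as sc

    # Placeholder device: the default microphone; substitute the headset's device.
    mic = sc.default_microphone()
    with mic.recorder(samplerate=48000) as rec:
        for elapsed, expected in time_record_blocks(rec, 48000, 1024, 20):
            print(f"{elapsed * 1000:6.1f} ms (block is {expected * 1000:.1f} ms)")
```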

System:

Bug: Audio from a Bluetooth device is delayed by around 3 seconds. See https://github.com/rbn42/panon/issues/26.

Reproducible script:

Requires NumPy. Thanks to @rbn42 for creating the script.