That's an interesting idea! I'm not entirely sure how that would work, though. Would this feature be included when plot=true is set in the config or when the audalign.plot() function is called? Or would it be a separate function?

Can you share the code you used for the offset graphs or open a PR?

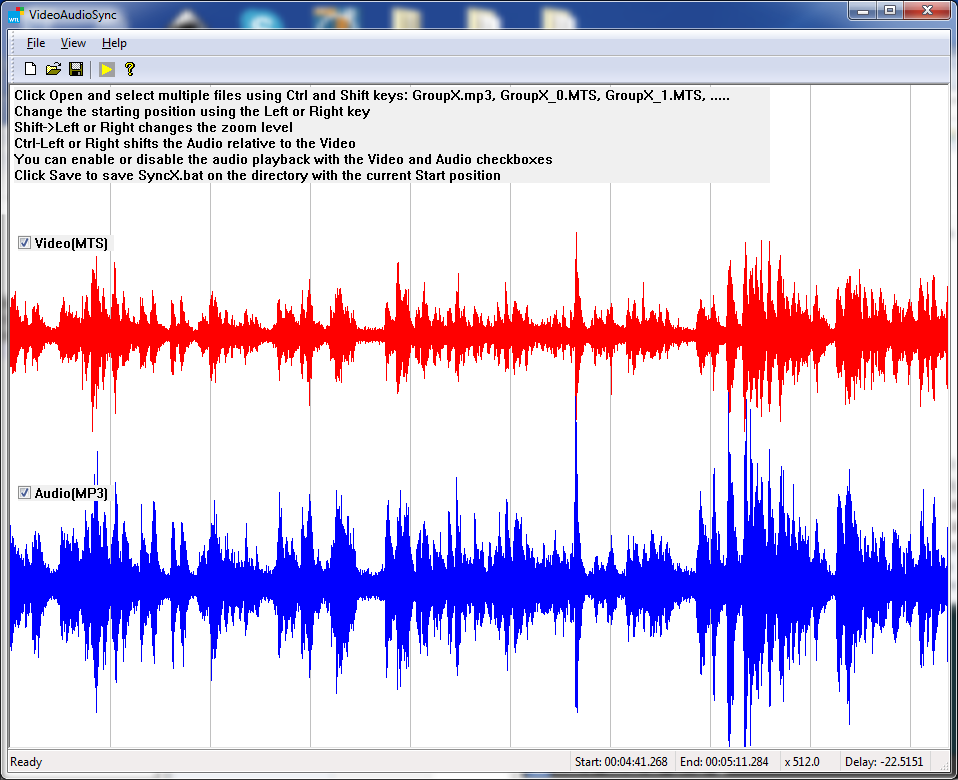

A graph of color-coded waveforms seems neat, too, but it seems like it would be tough to set defaults. Maybe that would be a lot easier to accomplish in a GUI application?

I would like to request a feature. It's nice to be able to easily align various audio files with audalign, but it would also be nice to see how the files differ. When you explained the structure of the match dictionary to me, I posted a screenshot of an offset graph. Something like this, or a graph of waveforms with the matching parts colored, would be nice. I hope you get what I mean :D

Could you please incorporate something like this into audalign?