Could you please post some pictures of the backlit view you describe? I'd also like to see images of the other walls, because those don't look great either. My first guess is a poor intrinsic calibration. Please calibrate the intrinsics with Kalibr, then post the reprojection error plot. Also, which camera and lens are you using? Is it a fisheye lens or a standard lens (pinhole model with rad-tan distortion)?
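For context on what the reprojection error plot measures, here is a minimal sketch. It assumes a pinhole camera with radial-tangential (rad-tan) distortion in the common OpenCV-style convention (`k1`, `k2` radial, `p1`, `p2` tangential). The function names are hypothetical and are not Kalibr's API. Each known 3D target point is projected through the intrinsics and compared with the detected pixel location. A large RMS value, or residuals that grow toward the image edges, usually points to a bad intrinsic calibration or the wrong distortion model (for example, a fisheye lens calibrated as rad-tan).

```python
import math

def project_radtan(point, fx, fy, cx, cy, k1, k2, p1, p2):
    """Project a 3D point (camera frame) to pixels: pinhole + rad-tan distortion."""
    X, Y, Z = point
    x, y = X / Z, Y / Z                      # normalized image coordinates
    r2 = x * x + y * y
    radial = 1.0 + k1 * r2 + k2 * r2 * r2    # radial distortion factor
    xd = x * radial + 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x)
    yd = y * radial + p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y
    return fx * xd + cx, fy * yd + cy

def rms_reprojection_error(points_3d, observed_px, intrinsics):
    """RMS pixel distance between projected points and detected corners."""
    sq_sum = 0.0
    for point, (u_obs, v_obs) in zip(points_3d, observed_px):
        u, v = project_radtan(point, *intrinsics)
        sq_sum += (u - u_obs) ** 2 + (v - v_obs) ** 2
    return math.sqrt(sq_sum / len(points_3d))
```

A well-calibrated camera typically shows residuals that are small and randomly scattered around zero across the whole image, with no pattern tied to image position.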

Hi, I'm trying to use tagslam to get the camera trajectory. In our current environment there is a lot of ambient light coming through a window, and because the camera uses auto exposure, we cannot detect the tags when we face the window. Do you have any suggestions for resolving this, or for detecting tags in poorly exposed (over- or under-exposed) images?
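One commonly used workaround, sketched here as an assumption rather than a tagslam feature, is to normalize image brightness before tag detection. The minimal sketch below picks a gamma value so that the mean intensity of an 8-bit grayscale image moves toward mid-gray, then applies it through a 256-entry lookup table. The helper names and the `target_mean` parameter are hypothetical. Images are plain lists of pixel rows so the sketch has no dependencies; on real frames, OpenCV's `cv2.LUT` applies the same kind of table. Note that no per-pixel remapping can recover pixels that are already clipped at 255 or 0, so limiting or fixing the camera's exposure is still the more reliable fix.

```python
import math

def gamma_lut(gamma):
    """Lookup table mapping an 8-bit value v to 255 * (v / 255) ** gamma."""
    return [min(255, round(255.0 * (v / 255.0) ** gamma)) for v in range(256)]

def apply_lut(image, lut):
    """Remap every pixel of a grayscale image (list of rows of ints 0..255)."""
    return [[lut[p] for p in row] for row in image]

def auto_gamma(image, target_mean=128.0):
    """Hypothetical helper: gamma that maps the image's mean toward target_mean.

    gamma > 1 darkens an over-exposed image, gamma < 1 brightens an
    under-exposed one.
    """
    pixels = [p for row in image for p in row]
    mean = sum(pixels) / len(pixels)
    mean = min(max(mean, 1.0), 254.0)  # keep log() finite and nonzero
    return math.log(target_mean / 255.0) / math.log(mean / 255.0)
```

Usage: `corrected = apply_lut(frame, gamma_lut(auto_gamma(frame)))`, after which the corrected frame would be passed to the tag detector.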

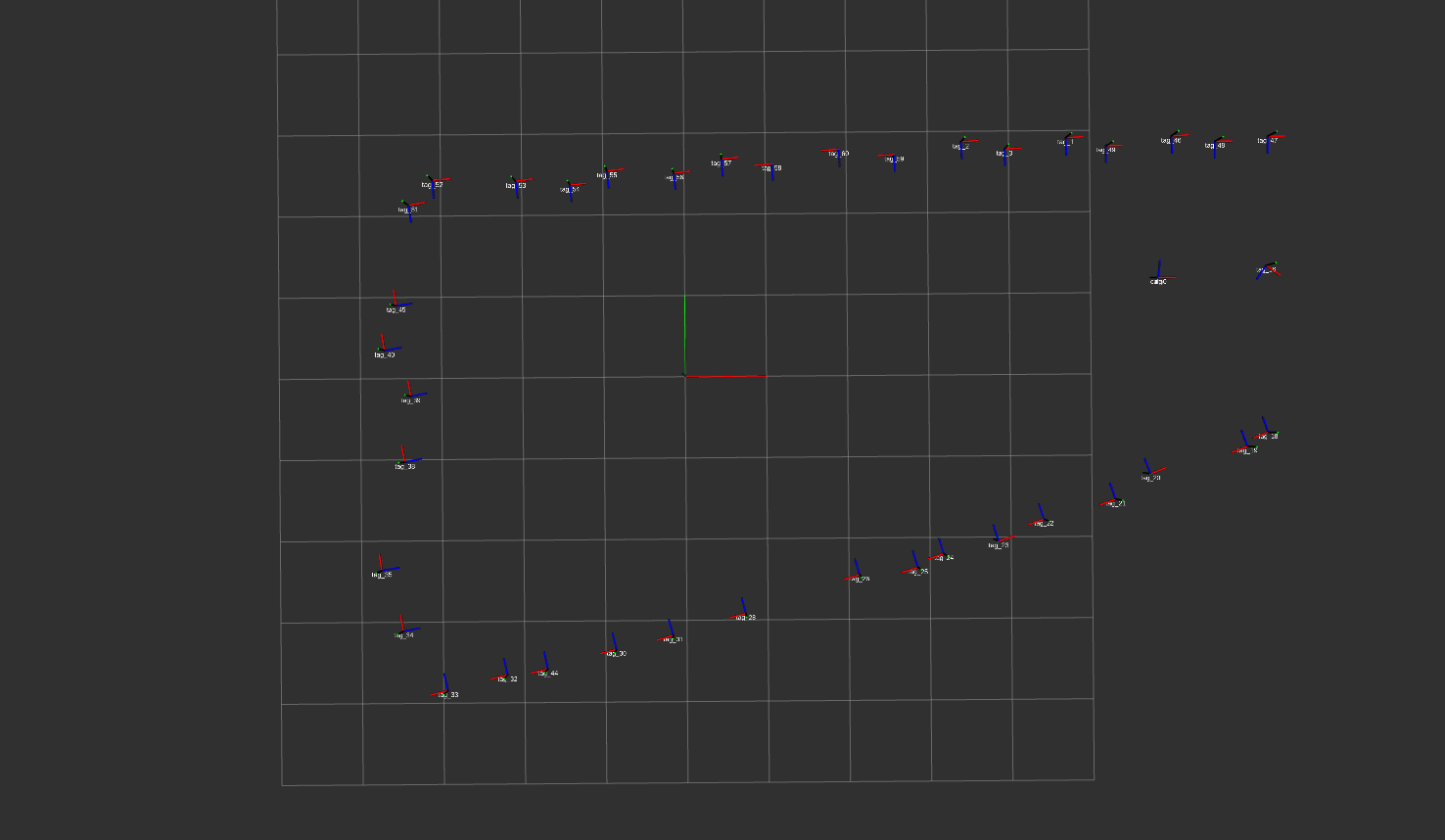

Also, the trajectory I am getting is not the correct rectangle; it always runs diagonally, as shown in the image.

Is this caused by the auto exposure, since this wall is where the window is, or by some other issue?