You should probably pass `preprocessed` instead of `images` to `vgg_19`:

```python
logits, _ = vgg.vgg_19(images, is_training=False)
```

should be

```python
logits, _ = vgg.vgg_19(preprocessed, is_training=False)
```

Let me know if this solves it.
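For context, here is a minimal sketch of what the `preprocessed` tensor typically contains for a TF-slim VGG checkpoint. It assumes the per-channel ImageNet mean subtraction from TF-slim's `vgg_preprocessing`, which also resizes and crops (omitted here); the thread does not show the script's actual preprocessing. The point is that `vgg_19` must see the mean-subtracted values, while the foolbox model is built on the raw `images` placeholder with bounds (0, 255). The function names are hypothetical.

```python
# Per-channel ImageNet means (R, G, B) as used by TF-slim's VGG preprocessing.
# Assumption: the original script applies this same mean subtraction.
VGG_MEANS = (123.68, 116.78, 103.94)


def vgg_preprocess_pixel(rgb):
    """Subtract the per-channel mean from one RGB pixel given in [0, 255]."""
    return tuple(value - mean for value, mean in zip(rgb, VGG_MEANS))


def vgg_preprocess(image):
    """Apply mean subtraction to an image stored as rows of RGB tuples."""
    return [[vgg_preprocess_pixel(pixel) for pixel in row] for row in image]


# The network consumes the preprocessed values, not the raw [0, 255] pixels.
raw = [[(255.0, 255.0, 255.0), (0.0, 0.0, 0.0)]]
print(vgg_preprocess(raw))
```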

dkarmon closed this issue 7 years ago.


Still the same problem

Does it print the correct class with `print(np.argmax(model.predictions(image)))`, i.e. the one returned by `imagenet_example()`? I can't test it myself right now, sorry.

Are you setting the probability threshold this low on purpose (`p=0.01`)? That should only make it easier, of course; I'm just curious why.

Maybe just try `TargetClass` instead of `TargetClassProbability` first. I will look into it more as soon as I am able to run it myself.
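To make the difference between the two criteria concrete, here is a plain-Python sketch of the checks they perform on the model's output. It assumes `TargetClass` means "the top-1 prediction is the target" and `TargetClassProbability` means "the softmax probability of the target exceeds p". This illustrates the idea and is not foolbox's implementation; the function names are hypothetical.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def is_target_class(logits, target):
    # Adversarial if the top-1 class is the target class.
    return max(range(len(logits)), key=logits.__getitem__) == target


def is_target_class_probability(logits, target, p):
    # Adversarial if the target's softmax probability exceeds the threshold p.
    return softmax(logits)[target] > p


logits = [2.0, 1.0, 0.1]
print(is_target_class(logits, 1))                    # False: class 0 is top-1
print(is_target_class_probability(logits, 1, 0.01))  # True: p(class 1) is about 0.24
```

With a threshold as low as p=0.01, the probability check can succeed even when the target is not the top-1 class, which is why the low threshold should only make the attack easier.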

Please don't close this until I have fixed the example at http://foolbox.readthedocs.io/en/latest/user/examples.html#tensorflow-vgg19

> Does it print the correct class with `print(np.argmax(model.predictions(image)))`, i.e. the one returned by `imagenet_example()`? I can't test it myself right now, sorry.

yes

> Are you setting the probability threshold this low on purpose (`p=0.01`)? That should only make it easier, of course; I'm just curious why.

Just to make it the easiest possible task for testing the tool.

> Maybe just try `TargetClass` instead of `TargetClassProbability` first. I will look into it more as soon as I am able to run it myself.

Unfortunately, I still get the same results...

btw, the concept of the tool is great! can't wait to use and develop some attacks of my own

Okay, that's weird. Let's go back to basics: can you try `Misclassification` as the criterion and `GradientAttack` as the attack?

Also: what do you mean by "the process never ends", i.e. how long did you let it run?

For the `LBFGSAttack`, you can also pass `maxiter` with a lower value (150 by default): `adversarial = attack(image=image, label=label, maxiter=10)`

Oh, and you can pass `verbose=True`: `attack(image=image, label=label, maxiter=10, verbose=True)`
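This combination makes a useful sanity check because a gradient attack needs only one gradient evaluation plus a cheap search over step sizes, and `Misclassification` accepts any label change. The plain-Python sketch below illustrates that idea on a hypothetical two-class linear model. The model, the step-size schedule, and all function names are assumptions for illustration, not foolbox's implementation.

```python
import math

# Hypothetical 2-class linear model: logits = W @ x (no bias).
W = [[1.0, -0.5, 0.2],
     [0.3, 0.8, -0.1]]


def predict(x):
    """Return the logits of the toy linear model."""
    return [sum(w_i * x_i for w_i, x_i in zip(row, x)) for row in W]


def argmax(values):
    return max(range(len(values)), key=values.__getitem__)


def misclassified(x, label):
    # Misclassification criterion: any top-1 class other than the original label.
    return argmax(predict(x)) != label


def gradient_attack(x, label, epsilons=100, max_eps=1.0, bounds=(0.0, 1.0)):
    """Step along the normalized direction that lowers the label's margin,
    trying increasing step sizes until the prediction changes."""
    other = 1 - label  # only two classes in this toy example
    # Gradient of (logit_other - logit_label) w.r.t. x for a linear model.
    grad = [w_o - w_l for w_o, w_l in zip(W[other], W[label])]
    norm = math.sqrt(sum(g * g for g in grad)) or 1.0
    direction = [g / norm for g in grad]
    lo, hi = bounds
    for k in range(1, epsilons + 1):
        eps = max_eps * k / epsilons
        candidate = [min(hi, max(lo, xi + eps * di)) for xi, di in zip(x, direction)]
        if misclassified(candidate, label):
            return candidate
    return None  # no adversarial found within the tried step sizes


x = [0.6, 0.2, 0.5]
label = argmax(predict(x))
adv = gradient_attack(x, label)
print(label, argmax(predict(adv)))  # 0 1
```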

> Okay, that's weird. Let's go back to basics: can you try `Misclassification` as the criterion and `GradientAttack` as the attack?

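```python
import numpy as np
from foolbox.attacks import GradientAttack
from foolbox.criteria import Misclassification

# `model` and `image` as defined earlier in the script
criterion = Misclassification()
attack = GradientAttack(model, criterion)
label = np.argmax(model.predictions(image))
adversarial = attack(image=image, label=label)
```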

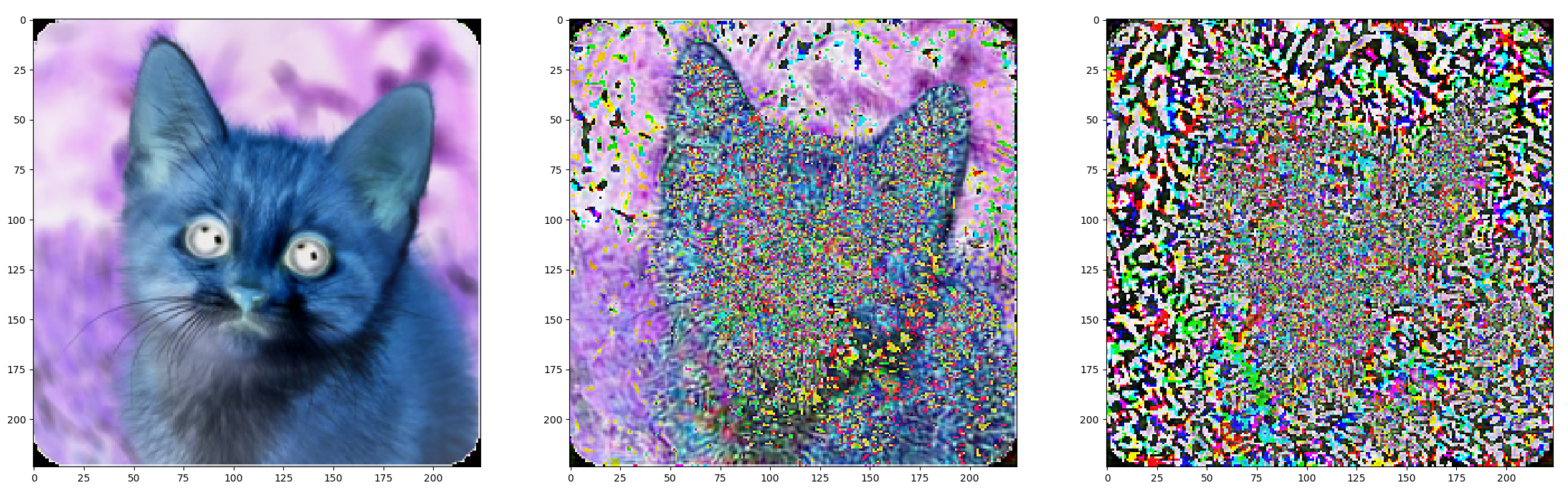

Works! Although notice that the noise is not transparent...

> Also: what do you mean by the process never ends, i.e. how long did you let it run?

More than 20 minutes

> For the LBFGSAttack, you can also pass maxiter with a lower value (150 by default): adversarial = attack(image=image, label=label, maxiter=10, **verbose=True**)

Same results. Also, the `verbose` argument doesn't seem to print any messages to the console.

The images in your code are floats between 0 and 255. Matplotlib's `imshow` expects either integers between 0 and 255 or floats between 0 and 1.

Just divide by 255, then you see that the adversarial is actually very close to the original ;-)

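```python
import matplotlib.pyplot as plt
import scipy.linalg

plt.subplot(1, 3, 1)
plt.imshow(image / 255)  # original, rescaled to [0, 1]
plt.subplot(1, 3, 2)
plt.imshow(adversarial / 255)  # adversarial, rescaled to [0, 1]
plt.subplot(1, 3, 3)
plt.imshow(scipy.linalg.norm(adversarial - image, axis=-1))  # per-pixel perturbation size
```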
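The two computations in the plotting snippet, sketched in plain Python so they run without NumPy, SciPy, or Matplotlib. Assumptions: images are nested H x W x C lists of floats in [0, 255], and the clipping is an extra safety step not present in the original snippet. The function names are hypothetical. With NumPy arrays, `np.clip(image / 255, 0, 1)` corresponds to the first function, and `scipy.linalg.norm(adversarial - image, axis=-1)` to the second.

```python
import math


def to_display_range(image, max_value=255.0):
    """Map float pixels from [0, max_value] to [0, 1], clipping anything outside."""
    return [[[min(1.0, max(0.0, c / max_value)) for c in pixel] for pixel in row]
            for row in image]


def perturbation_map(adversarial, image):
    """Per-pixel L2 norm of (adversarial - image) over the channel axis."""
    return [[math.sqrt(sum((a - b) ** 2 for a, b in zip(adv_px, img_px)))
             for adv_px, img_px in zip(adv_row, img_row)]
            for adv_row, img_row in zip(adversarial, image)]


# A hypothetical 1x2 RGB image with float values in [0, 255].
image = [[[10.0, 20.0, 30.0], [255.0, 127.5, 0.0]]]
adversarial = [[[13.0, 24.0, 30.0], [255.0, 127.5, 0.0]]]

print(to_display_range(image)[0][1])         # [1.0, 0.5, 0.0]
print(perturbation_map(adversarial, image))  # [[5.0, 0.0]]
```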

Regarding your issue with the `LBFGSAttack`: I have now tried your code, and it works well for me. Do you have a GPU? For me, it takes 6 seconds (maxiter 5), 30 seconds (maxiter 50), and 75 seconds (maxiter 150, the default).

```python
import foolbox
import matplotlib.pyplot as plt
import numpy as np
import scipy.linalg
from foolbox.attacks import LBFGSAttack

# `images`, `logits`, `restorer` and `image` come from the original script.
with foolbox.models.TensorFlowModel(images, logits, (0, 255)) as model:
    restorer.restore(model.session, "/gpfs01/bethge/data/tf-model-checkpoints/vgg_19.ckpt")
    print(np.argmax(model.predictions(image)))

    target_class = 22
    criterion = foolbox.criteria.TargetClass(target_class)
    attack = LBFGSAttack(model, criterion)

    label = np.argmax(model.predictions(image))
    adversarial = attack(image=image, label=label, maxiter=5)

    plt.subplot(1, 3, 1)
    plt.imshow(image / 255)
    plt.subplot(1, 3, 2)
    plt.imshow(adversarial / 255)
    plt.subplot(1, 3, 3)
    plt.imshow(scipy.linalg.norm(adversarial - image, axis=-1))

    print(np.argmax(model.predictions(adversarial)))
```

This prints 281 (the original class) and 22 (the target class).

My best guess: you don't have a graphics card and therefore VGG is extremely slow
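A back-of-the-envelope check using only the GPU timings reported above (6 s for maxiter 5, 30 s for maxiter 50, 75 s for maxiter 150): each additional unit of `maxiter` costs roughly half a second, so runtime grows about linearly with `maxiter`. The snippet only does that arithmetic; it is not a benchmark, and `marginal_costs` is a hypothetical helper.

```python
# GPU timings from this comment: (maxiter, seconds).
timings = [(5, 6.0), (50, 30.0), (150, 75.0)]


def marginal_costs(points):
    """Seconds per additional unit of maxiter between consecutive measurements."""
    return [(t2 - t1) / (n2 - n1) for (n1, t1), (n2, t2) in zip(points, points[1:])]


print(marginal_costs(timings))  # roughly 0.53 and 0.45 seconds per iteration
```

On a CPU every forward and backward pass through VGG-19 is far slower, so the same `maxiter` can plausibly stretch into the 20+ minutes reported in this thread.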

@dkarmon I just fixed the preprocessed vs. image mistake I pointed out earlier. From my side this issue can be closed. What about you? Still open questions?

> btw, the concept of the tool is great! can't wait to use and develop some attacks of my own

Looking forward to your contribution ;-)

> My best guess: you don't have a graphics card and therefore VGG is extremely slow

You are right, I do not have a GPU, so it should be slower. However, in my experience, it shouldn't take that long... generating an adversarial example for VGG with some very naive code took me less than one minute... never mind, though :)

> @dkarmon I just fixed the preprocessed vs. image mistake I pointed out earlier. From my side this issue can be closed. What about you? Still open questions?

All is good! thanks

FGSM (GradientSignAttack) should be fast in your case, too. But L-BFGS requires many evaluations and VGG is large, so it should really be run on a GPU.
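For comparison, here is a sketch of the fast gradient sign step behind FGSM on a hypothetical two-class linear model in plain Python. It uses one gradient evaluation and a single signed step of size epsilon, whereas L-BFGS performs many model evaluations. The model, epsilon, and function names are illustrative assumptions, not foolbox's `GradientSignAttack` implementation.

```python
# Hypothetical 2-class linear model: logits = W @ x (no bias).
W = [[1.0, -0.5, 0.2],
     [0.3, 0.8, -0.1]]


def predict(x):
    """Return the logits of the toy linear model."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def argmax(values):
    return max(range(len(values)), key=values.__getitem__)


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(x, label, eps, bounds=(0.0, 1.0)):
    """One fast-gradient-sign step that increases (logit_other - logit_label)."""
    other = 1 - label  # only two classes in this toy example
    # For a linear model this gradient is just the difference of weight rows.
    grad = [w_o - w_l for w_o, w_l in zip(W[other], W[label])]
    lo, hi = bounds
    return [min(hi, max(lo, xi + eps * sign(g))) for xi, g in zip(x, grad)]


x = [0.6, 0.2, 0.5]
adv = fgsm(x, label=0, eps=0.15)
print(argmax(predict(x)), argmax(predict(adv)))  # 0 1
```

Because each step costs a single gradient evaluation, this stays cheap even on a CPU. L-BFGS, by contrast, needs a forward and backward pass through the network for each of its many function evaluations.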

Hi, I tried following the instructions in the tutorial and examples sections to create an attack on a VGG19 model. I downloaded the model's checkpoint from here

The code "runs"; however, it doesn't seem to be able to generate an adversarial example: the process never ends... What am I doing wrong?

Please advise