Closed ropewalker closed 3 years ago

As a couple of reference points: 1) I tried the same thing in ggez, and it does render a scaled window (at least by default); 2) Apple's own apps like Preview also display images scaled. So I would argue that this might be a good choice for default behavior.

Should this be labeled with “windowing”, as it has to do with winit?

Whatever decision is made here, I think the bevy_ui Val enum (https://github.com/bevyengine/bevy/blob/1ebb7e44ff75640362b4b85f077b7b8d9b6dd3a4/crates/bevy_ui/src/node.rs#L11) should have variants for both device pixel size and device independent pixel size. I'd also encourage the device independent size to be the default in examples and code.

For example Val could change Px to mean device independent pixels, and add an additional sizing of PhysicalPx (I'm sure there's a more terse name) that is 1:1 with physical pixels.
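A minimal sketch of that idea, assuming a simplified stand-in enum (this is not the actual bevy_ui definition; the `PhysicalPx` variant name and the `to_physical` helper are hypothetical):

```rust
/// Hypothetical sizing unit, loosely modelled on the proposal above.
/// This is NOT the real `bevy_ui::Val` definition.
#[derive(Debug, Clone, Copy, PartialEq)]
pub enum Val {
    /// Device independent ("logical") pixels, scaled by the display's scale factor.
    Px(f32),
    /// Escape hatch: exactly this many hardware pixels, never scaled.
    PhysicalPx(f32),
}

impl Val {
    /// Resolve the value to physical pixels for a display with the given scale factor.
    pub fn to_physical(self, scale_factor: f32) -> f32 {
        match self {
            Val::Px(logical) => logical * scale_factor,
            Val::PhysicalPx(physical) => physical,
        }
    }
}
```

With a scale factor of 2.0, `Val::Px(100.0)` would resolve to 200 physical pixels, while `Val::PhysicalPx(100.0)` would stay at 100.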

I would reference CSS as a source that defaults to device independent sizes: 1px is defined as 1/96th of an inch, rather than anything to do with the device's actual pixels (https://developer.mozilla.org/en-US/docs/Learn/CSS/Building_blocks/Values_and_units#Absolute_length_units).

I think this should actually be a pretty early consideration in the UI implementation work, as getting good device independent scaling right in the engine from the early stages (and making it the default behavior) will mean games and the Editor will work across a wide variety of devices.

I'm not sure if we want to scope this issue down to just the window size issue, but I think a broader conversation for bevy_ui device scaling is probably warranted.

Just in case: I also tried to actually draw some sprites inside the window and can confirm that after switching to LogicalSize they were rendered scaled as well, as expected.

However, scaling seems to switch back to physical pixels on window resize.

Proposed high-level solution from Discord chat (pending approval from @cart):

- Val::Px to represent "logical pixels" in UI node styles.
- Val::Px to stretch::style::Dimension::Px (as is currently implemented).
- Val::Px node styles based on display PPI/DPR. ⬅️ solution needed
- Val::PhysicalPx (?) as an "escape hatch" to get back to the current behaviour. This is somewhat complicated because allowing physical pixels to mix with logical pixels in layout code results in some undesirable layout behaviours that could easily be missed by developers using monitors with 1:1 DPR (because it "works on my machine").

I'm not sure what exactly we should aim for with logical pixels, whether it is simply a function of physical pixels multiplied by a "pixel ratio" or whether it's more complicated based on the MDN doc @ncallaway linked to.
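To illustrate the "works on my machine" hazard with mixed units, here is a rough sketch. The `Len` type and both helper functions are hypothetical and exist only for this example; the layout model is a single row of items placed side by side.

```rust
/// Hypothetical unit type for illustration; not part of bevy_ui.
#[derive(Clone, Copy)]
pub enum Len {
    /// Logical pixels, multiplied by the device pixel ratio (DPR).
    Logical(f32),
    /// Physical pixels, used as-is.
    Physical(f32),
}

/// Total width in physical pixels of items laid out side by side.
pub fn row_width_physical(items: &[Len], dpr: f32) -> f32 {
    items
        .iter()
        .map(|len| match *len {
            Len::Logical(px) => px * dpr,
            Len::Physical(px) => px,
        })
        .sum()
}

/// Fraction of a container (whose width is given in logical pixels) that the row fills.
pub fn fill_ratio(items: &[Len], container_logical: f32, dpr: f32) -> f32 {
    row_width_physical(items, dpr) / (container_logical * dpr)
}
```

A row of `[Len::Logical(100.0), Len::Physical(100.0)]` exactly fills a 200 logical pixel container at a DPR of 1.0, but only three quarters of it at a DPR of 2.0, which is the kind of difference a developer on a 1:1 monitor would never see.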

Presumably we want the UI to follow the logical scale, but for game rendering it's less clear. Like, the 2d rendering should default to rendering to the physical size, but 'as if' the screen were the logical size. Maybe that even makes sense for 3d stuff as well, but I don't think PerspectiveProjection cares about the window size.

Yeah, so just to be clear, my comment pertains to the UI system only, not the other parts of the problem. Maybe this needs to be dealt with in a more integrated way and not just for UI.

Regarding units, I am convinced that pixels are an unreliable measurement of length. The pixel density of our screens increases every year. This makes the pixel size a variable that changes constantly and cannot be relied upon.

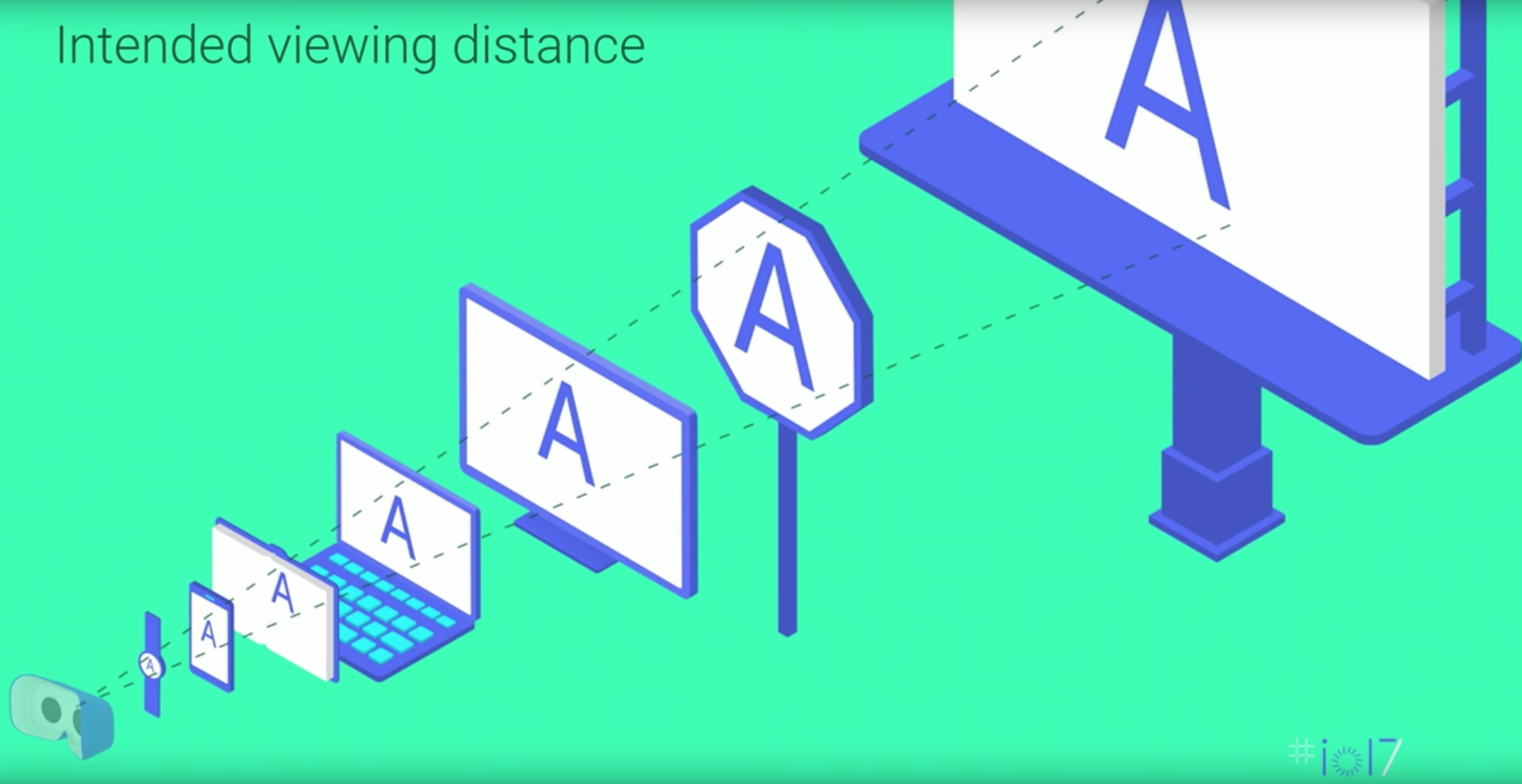

Instead, we should consider adopting a device-independent measurement, such as the dmm measurement proposed by Google. This measurement is independent of any past, current, or future pixel density of the screen. It focuses on what humans perceive instead of being based only on our current technical limitations. Furthermore, using this metric also would allow us to use commonly approved device-independent default sizes for text and graphics, as recommended by Google: Headline 40dmm, body 24dmm.

Although this unit was developed for virtual reality, it is in fact just based on the physical world, especially the properties of the human eye. This makes it suitable for any application, including games.

To implement the dmm metric though, the software will have to know the size of the screen and the distance to the user. This could be done by heuristically estimating the expected distance, e.g. using about 50cm for desktop applications and 30cm for mobile phone applications. The size of the screen can potentially be computed by querying the operating system for the pixel density and screen resolution. In the future, the distance to the user might be dynamically detected by mobile phones or other screens. Even when only estimated, the dmm metric will be much more reliable than a pixel-based metric.
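As a rough sketch of that conversion, assuming the usual reading of 1 dmm as 1 mm viewed from 1 m away: the function name and parameters below are hypothetical, and the pixel density would have to come from the operating system.

```rust
/// Millimetres per inch, used to convert pixel density (pixels per inch).
const MM_PER_INCH: f64 = 25.4;

/// Convert a size in dmm to physical pixels.
///
/// Assumption: 1 dmm means 1 mm of size viewed from 1 m away, so the
/// on-screen size in millimetres is `dmm * viewing_distance_m`.
pub fn dmm_to_physical_px(dmm: f64, viewing_distance_m: f64, pixels_per_inch: f64) -> f64 {
    let size_mm = dmm * viewing_distance_m;
    size_mm / MM_PER_INCH * pixels_per_inch
}
```

With the estimated desktop distance of 0.5 m and an assumed density of 96 pixels per inch, 24 dmm comes out at roughly 45 physical pixels. Halving the viewing distance halves the pixel size, and doubling the density doubles it.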

Even for pixel-art games, the actual screen pixels usually don't matter, as larger virtual pixels are usually displayed. Using a new specialized metric instead of tampering with the "px" unit behind the developer's back seems like a more obvious approach to me. The whole topic of logical versus physical pixels should really be an implementation detail that the developer should not need to worry about.

My previous post was working on the assumption that we will need to provide a physical pixel unit in the API as well as a DPR-aware unit and/or a DMM unit.

So with this in mind, we are really just talking about how we name these different units in the API.

Talking a while back with @ncallaway, we thought that if we were to provide a Px unit in the API that was not DPR-aware, this would lead some developers to build UI that is not DPR-aware by default, without realising it. Perhaps they are used to CSS and they are using a monitor with a DPR of 1.0, so it appears to be working as expected. This was why we said we should probably just make the Px unit DPR-aware and provide a PhysicalPx escape hatch, because then the relationship between these units is immediately clear.

@johannesvollmer Specifically on the subject of DMM, it's unclear to me why the DMM unit would be desirable for most games outside of a VR/AR context. I think for UI elements that are "non-diegetic" from the user's point of view, I would ideally want to scale to the device rather than to the viewing distance because I want to be able to account for screen real-estate and also the user's preferred UI size.

When setting the size of text in a user interface, what you really want is that text is readable by your users. If the text is too small, the user won't be able to read it.

If you specify the size of the text in pixels, the actual perceived text size will depend on the specific screen the user happens to use and the distance of the user to that screen.

Let's say you develop a game on a rather large 1080p screen. You adjust the text size to be readable on your monitor, which makes sense. Maybe you determined that a text height of 24 pixels looks fairly readable. Now, when someone plays your game on a rather small laptop with a 4k screen, a text with a height of 24 pixels will most certainly be too small. Screen resolutions increase over time, which means that soon a majority of your users might have even more pixels on their screens.
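To put rough numbers on this, here is a small sketch. The screen diagonals used in the note below (27 inches for the monitor, 15.6 inches for the laptop) are assumptions for illustration, not figures from the scenario above.

```rust
/// Pixels per inch of a display, from its resolution and diagonal size in inches.
pub fn pixels_per_inch(width_px: f64, height_px: f64, diagonal_in: f64) -> f64 {
    // Diagonal length in pixels divided by diagonal length in inches.
    width_px.hypot(height_px) / diagonal_in
}

/// Physical height in millimetres of something `px` pixels tall.
pub fn px_to_mm(px: f64, ppi: f64) -> f64 {
    px / ppi * 25.4
}
```

Under those assumptions, 24 pixel text is about 7.5 mm tall on the 1080p monitor (`px_to_mm(24.0, pixels_per_inch(1920.0, 1080.0, 27.0))`) but only about 2.2 mm tall on the 4k laptop (`px_to_mm(24.0, pixels_per_inch(3840.0, 2160.0, 15.6))`).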

The human eye has a certain resolution, just like a camera. If the text size is specified in an angular unit, the eye will always perceive it as the same size, no matter which display the user happens to use.

Of course, dmm units are only for absolute sizes. I'd still like to have the possibility of specifying relative sizes, like percentages or fractions, too. This way, I can build a layout that adapts to the current device used. When specifying text size only in terms of screen size, for example by using percentages, small screens will result in an inadequate text size. So there's a need for both relative and absolute sizing. In essence, just like we currently do in CSS already, but with dmm instead of pixels.

Re: unit semantics — I don't think there's any reason to reinvent the wheel here. Google has been using dp (density-independent pixels, pronounced "dips") as the standard unit of measurement in their Material Design specs for a long time, so it should be relatively familiar to UI designers. It's also not hard to reason about — it's the exact same unit as CSS 'px' on the web, and 1:1 with physical pixels on most contemporary desktop displays, which saves users the trouble of having to convert to some bespoke abstraction that's specific to Bevy. In practice, the 'dp' unit serves the same purpose as the 'dmm', except that the dmm measures apparent size at variable distances in three dimensions (hence the relevance to VR) while dp measures apparent size at variable resolution density (which is the specific problem we're trying to solve here).

But to be honest, I'm not really seeing a use case to even support literal hardware pixels as a usable unit, so I would personally be comfortable following in CSS's footsteps and continuing to use "Px" as a shorthand for density-independent pixels. I don't really feel strongly about that either way though.

What I would like to see, as a UI designer/developer, is support for additional units that are relative and qualitatively different, like container-relative percentage, viewport-relative percentage, ems, etc. But I'm not 100% clear on whether that's in scope for this issue, or if this is just about pixel density.

ETA: To push past the bikeshedding — what is the actual actionable item/task here? Is this up for grabs, or is someone already working on it? And does it need further discussion/research, or is it ready to be worked on?

With the new release (https://bevyengine.org/news/bevy-0-4/#hidpi and https://github.com/bevyengine/bevy/pull/1061), Bevy's Val::Px, used for pixel sizes in UI nodes, now uses logical pixels.

Yup I think we can close this. Feel free to open new issues for more specific requests!

A window created using the following code has the correct size in physical pixels; however, it doesn't respect UI scaling, so it looks small on devices with a scale factor different from 1.0:

Changing this line: https://github.com/bevyengine/bevy/blob/f539b6e7f631c48287bee445a3b969e07269d222/crates/bevy_winit/src/winit_windows.rs#L37 to

seems to help. See the screenshot below: both windows were created on a MacBook Pro 13'' (with physical resolution 2560×1600 scaled to 1440×900) by running the code above, but the window on the left was created before the change, and the window on the right after the change:

I am not sure which behavior is “correct”, but it seems reasonable that it should be at least configurable.
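The difference between the two behaviors can be sketched with plain arithmetic. The helper names below are hypothetical and do not reproduce the bevy_winit code; they only mirror the physical versus logical size distinction that winit draws. The 1280×720 size and the scale factor of 2.0 in the note are assumed values.

```rust
/// Size a window occupies in logical units when its size was requested in physical pixels.
pub fn physical_to_logical(physical: (f64, f64), scale_factor: f64) -> (f64, f64) {
    (physical.0 / scale_factor, physical.1 / scale_factor)
}

/// Physical pixels needed so that the window occupies the requested logical size.
pub fn logical_to_physical(logical: (f64, f64), scale_factor: f64) -> (f64, f64) {
    (logical.0 * scale_factor, logical.1 * scale_factor)
}
```

At a scale factor of 2.0, a window requested as 1280×720 physical pixels covers only 640×360 logical units and so looks half as large as intended, whereas requesting 1280×720 as a logical size yields 2560×1440 physical pixels.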