I'm measuring approximately 300K requests per second with the http_server_async example running on 4 threads (as stated in the provided link). However, if you create one io_context per core, each with its own acceptor (using the SO_REUSEPORT option), you can expect around 150K additional requests per second for each core you add, because the cores work independently.

Regardless, I doubt this benchmark reflects how these libraries are actually used in real-world scenarios:

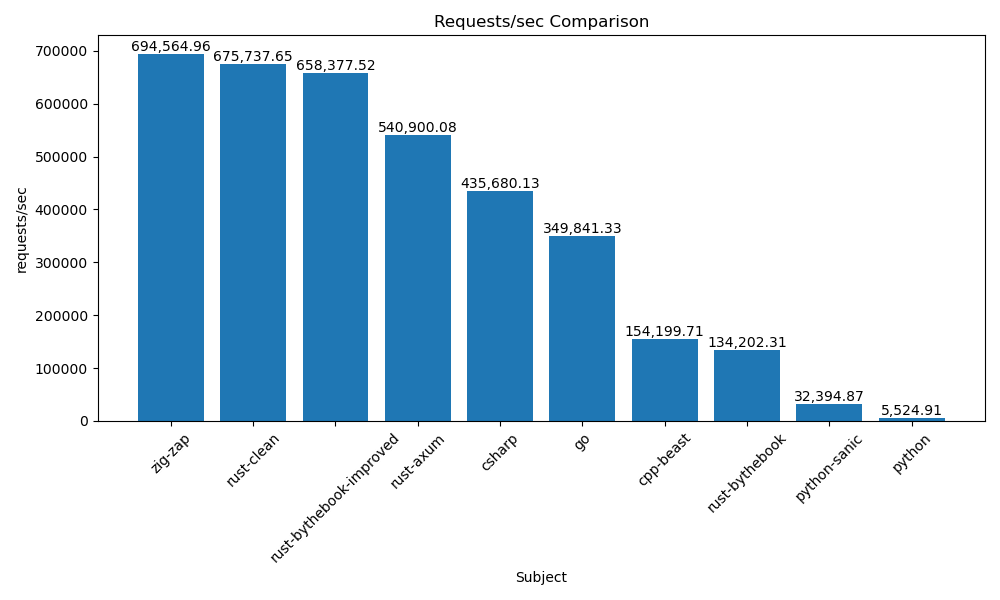

So, what exactly are these benchmarks testing? Simple HTTP servers that respond to GET requests with a constant, 17-byte-long message.
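To make concrete how little work each request involves, the sketch below (plain C++, not Beast) builds the raw HTTP/1.1 response for the 17-byte body. The header set is an assumption chosen for illustration; the benchmarked servers may send different or additional headers such as `Date` or `Server`.

```cpp
#include <cassert>
#include <string>

// Builds a minimal HTTP/1.1 200 response for a fixed body.
// The headers here are illustrative, not what any specific server sends.
std::string make_fixed_response(const std::string& body)
{
    std::string r = "HTTP/1.1 200 OK\r\n";
    r += "Content-Type: text/plain\r\n";
    r += "Content-Length: " + std::to_string(body.size()) + "\r\n";
    r += "\r\n";  // blank line terminates the header block
    r += body;
    return r;
}
```

With the body `"Hello from C#1234"` this produces 82 bytes, 65 of which are headers, so each request exercises little beyond request parsing, dispatch, and the socket round trip.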

Version of Beast

347

Steps necessary to reproduce the problem

1. `sudo snap install zig --beta --classic`
2. `zig version` (it should report 0.12 now)
3. `git clone https://github.com/zigzap/zap.git`
4. `cd zap`
5. `git checkout -b perf tags/v0.7.0`
6. In `wrk/csharp/Program.cs`, change line 6 to `app.MapGet("/", () => "Hello from C#1234");`. This makes the response body size (17 bytes) the same in the C# and C++ test code (it has little impact on the result).
7. `./wrk/measure_all.sh "csharp cpp-beast"`

For more details, see https://github.com/zigzap/zap/blob/master/blazingly-fast.md#how

All relevant compiler information

Result

The perf test result: https://github.com/zigzap/zap/blob/master/blazingly-fast.md#the-computer-makes-the-difference

Beast's test code

https://github.com/zigzap/zap/blob/master/wrk/cpp/main.cpp

I like Beast, and it's hard to believe its performance is this poor. Can anyone point out what's wrong, or improve Beast's test code? I tried modifying Beast's HTTP server example to return a `string_body` response for the perf test, but the result got worse. 😭