On Sun, Sep 2, 2018 at 3:58 AM Todor Arnaudov notifications@github.com wrote:

BTW, do you know about the numpy "fancy indexing" and conditional indices? They are supposed to be more efficient than normal iteration and shorter, too...

Maybe, but I don't think it makes much difference in speed, at this point consistent notation and easy debugging are much more important. Long term, I may switch to Julia: https://docs.julialang.org/en/v1/ , but I don't think it has good debugging tools yet.

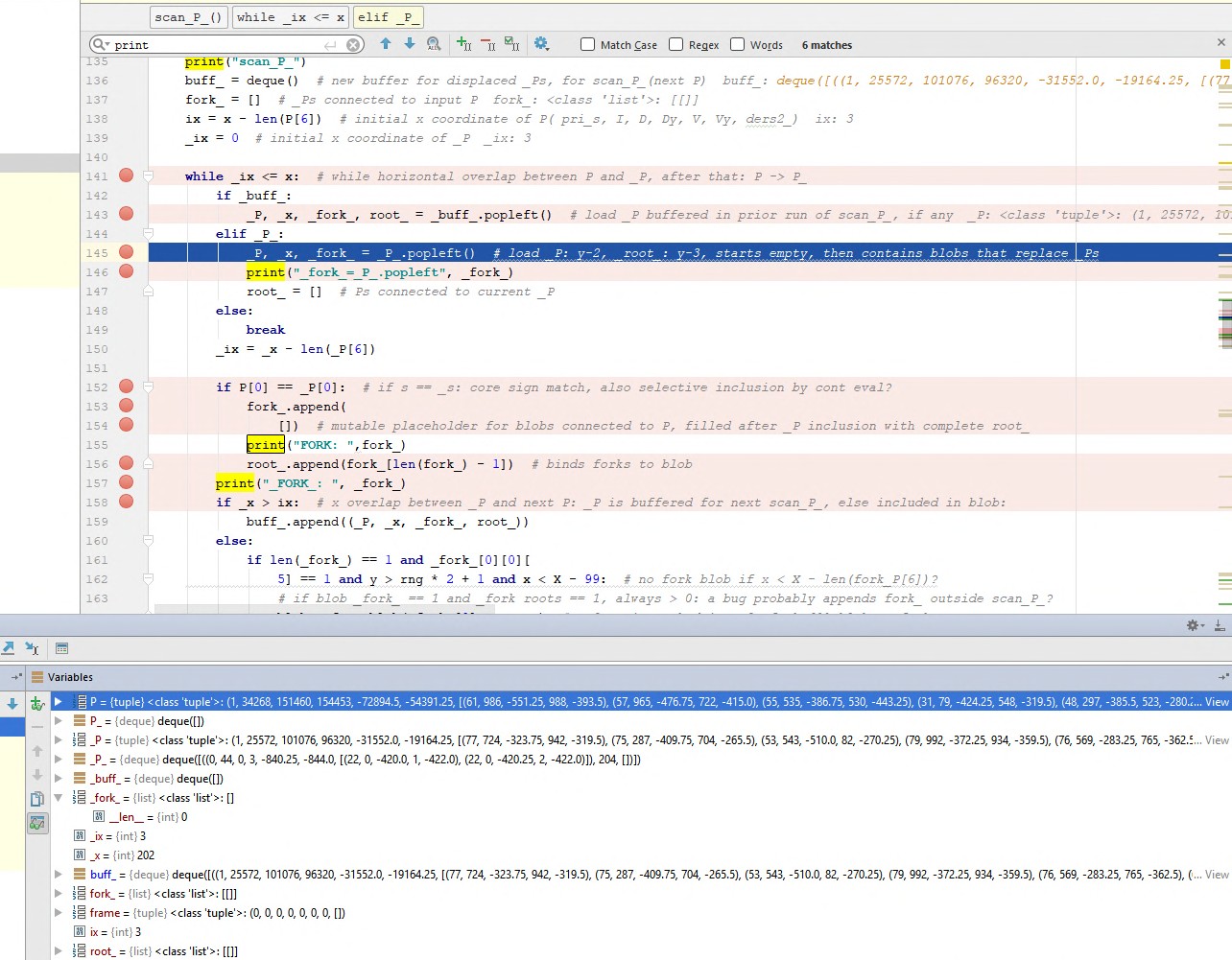

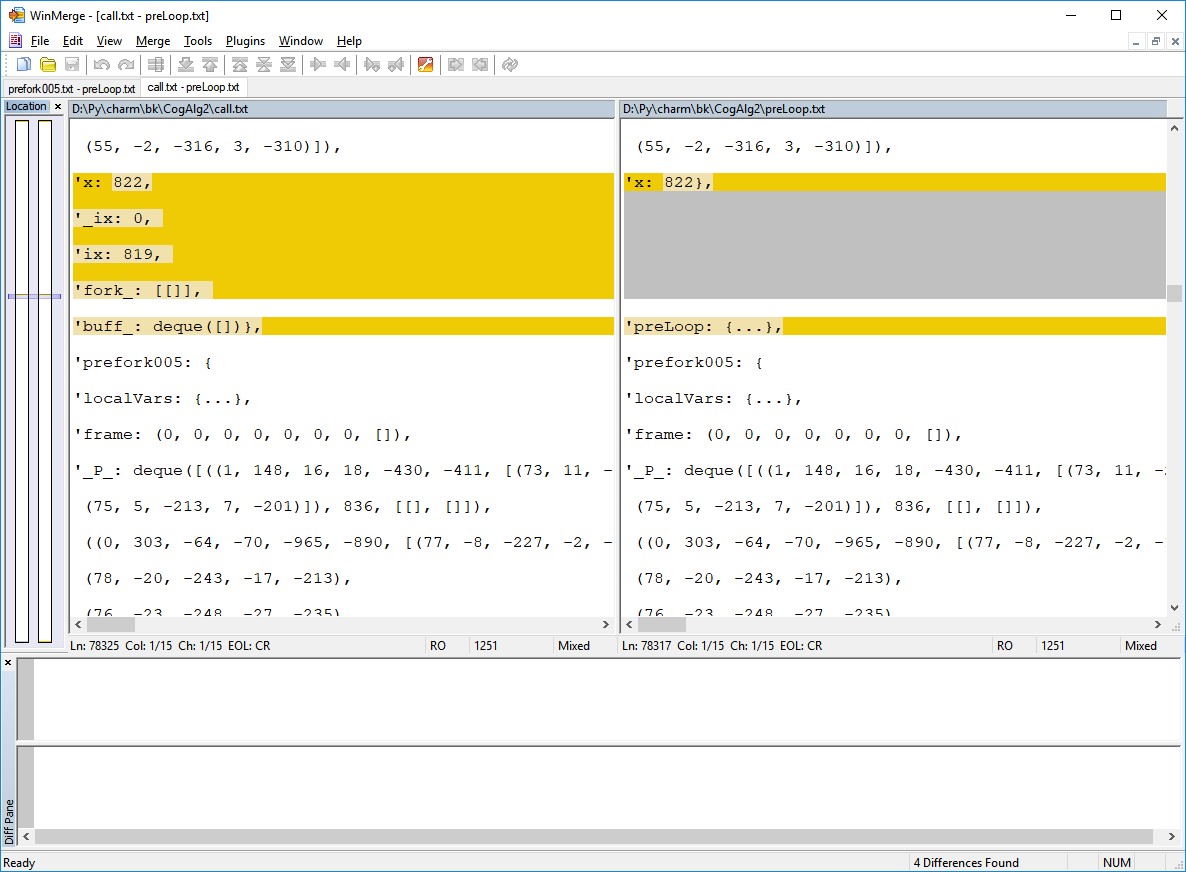

For example, there is a bug in frame_dblobs, scanP function: lines 148 and 155 are always false. Basically, "root" and "fork" length is always > 0, even though they are renamed "root" and "fork", which do have length == 0 instances. So, they probably get appended somewhere during transfers, for no good reason. I figured the way to track individual arrays would be to put a breakpoint on their object ID, but don't know how to do it.

But this is not a top priority either, I am still redefining core comparison by division. It seems to have at least three incrementally refining steps:

1: max / min -> ratio (div miss), new clean match = min / ratio: this is intuitively more accurate than basic clean match = min - |diff|. 2: max - ratio -> div match, clean match = div match / ratio: this represents additional magnitude compression but should also have much higher "ave" filter. 3: min multiple (integer part of ratio) -> minimally cleaned match, down-shifted (up-shifted fraction multiple) -> clean miss, (min * multiple) / clean miss -> refined clean match

Also, I am editing my comparison to CNN, their edge detection kernels are more like lateral rather than vertical comparison.

BTW, do you know about the numpy "fancy indexing" and conditional indices? They are supposed to be more efficient than normal iteration and shorter, too.

p[p>0] = p*1.6

https://www.numpy.org/devdocs/reference/generated/numpy.where.html#numpy.where

numpy.where(condition[, x, y]) Return elements chosen from x or y depending on condition.

"If all the arrays are 1-D, where is equivalent to:

[xv if c else yv for c, xv, yv in zip(condition, x, y)]"

np.where(x < y, x, 10 + y) # both x and 10+y are broadcast array([[10, 0, 0, 0], [10, 11, 1, 1], [10, 11, 12, 2]])

...

putmask(a, mask, values) Changes elements of an array based on conditional and input values.

Examples

...

A more complex one which sends functions as parameters:

class numpy.nditer[source] Efficient multi-dimensional iterator object to iterate over arrays.

https://www.numpy.org/devdocs/reference/generated/numpy.nditer.html#numpy.nditer

...

https://www.numpy.org/devdocs/reference/routines.indexing.html

Of course, for more meaningful transformations, some kind of nesting of such "fancy" operations or lambda functions and perhaps different data layout would be required.

E.g. more parallel process of pattern competion in pre-allocated slots in numpy arrays, and then wrapping them up, rather than sequentially one by one and doing allocations of new variables for each call. Where that's algorithmically possible; it may require intermediate steps and transformations.

https://github.com/boris-kz/CogAlg/blob/7ab8bf6e0d293e5695805c5def39d8998ecbfb0c/frame_draft.py#L482