@andir thank you for reporting this. does it show the same performance when using the parameter --framework terraform ?

Closed andir closed 3 years ago

@andir thank you for reporting this. does it show the same performance when using the parameter --framework terraform ?

@andir thank you for reporting this. does it show the same performance when using the parameter

--framework terraform?

Yeah, I must have forgotten that detail. It behaves the same from what I can tell.

I did some hacking on the code base on the parts that were highlighted in the last profiling runs. One of the bigger changes was getting rid of jmespath in the Terraform parser as it was consuming unreasonable amounts of time for the tasks it was given.

My current branch can be found here: https://github.com/bridgecrewio/checkov/compare/master...andir:random-hacking

The commits should detail a bit of why I did them and/or what the improvement was. This isn't really in a state where I would like this to get merged into checkov as I haven't verified that there was no functional change.

One of the takeaways from this journey is that checkov should probably be more strict on the types it is passing along and use more type annotations. Having to sprinkle isinstance everywhere isn't just slow (because of the amount of checks that are being done) but also kind of bad style as the code always contains one or more additional logical branches given on the runtime type. The burden of passing in the right types should be on the side of the caller not the called.

Update: Using the above linked branch and running it against the same TF repository I mentioned in the original post the runtime is now down to 30m from the 1h and 50min I observed previously. This still requires a ~5-10x improvement before it is remotely acceptable for our CI runners but I am confident that we might be able to get there.

@robeden wdyt on the jmepath changes?

@andir - Thanks for the report and apologies for the issues. I knew the current parser implementation isn't very efficient, but that's a good order of magnitude worse than I would have expected.

Avoidance of jmespath and usage of the lru_cache makes sense, but we can probably do better.

The problem is going to stem from the "looping parser" approach. The ultimate fix is to process as a graph, and fortunately that work is ongoing. That should make performance as efficient as possible. For the current implementation, I think we should be able to cut out some whole branches of work.

@andir, can I assume that in your repository you have modules loading other modules? We're probably hitting some M*N loop scenarios that we might be able to cut out.

@andir - Thanks for the report and apologies for the issues. I knew the current parser implementation isn't very efficient, but that's a good order of magnitude worse than I would have expected.

Avoidance of jmespath and usage of the lru_cache makes sense, but we can probably do better.

:+1:

The problem is going to stem from the "looping parser" approach. The ultimate fix is to process as a graph, and fortunately that work is ongoing. That should make performance as efficient as possible. For the current implementation, I think we should be able to cut out some whole branches of work.

Yeah, the graph library would be my next stop at seeing what can be optimized there. It didn't look particular efficient in execution. It seems to be doing a lot of type checking yet again (and probably other stuff that isn't great either).

@andir, can I assume that in your repository you have modules loading other modules? We're probably hitting some M*N loop scenarios that we might be able to cut out.

Yes, we have a lot of internal modules that are basically just instantiating other modules to deduplicate most of our logic.

Feel free to loop me in on any ideas you have towards improving the code. I am happy to spent some of my (evening) time on this :-)

I just pushed a few more changes to the mentioned branch. The changes I did was reducing the duplicate dpath lookups and replace one of them with a simple dictionary get() call. I am now down to 23min of execution time. We can probably make this faster by ditching dpath in general. It doesn't seem like the graph is the primary problem but the way the lookups are made.

I tested the lru_cache on find_var_blocks and I'm seeing unit test failures when that's added. Still trying to figure out if they're real problems or not...

I tested the lru_cache on

find_var_blocksand I'm seeing unit test failures when that's added. Still trying to figure out if they're real problems or not...

I could imagine there being failures in tests if the returned objects are being mutated somewhere. That would likely yield to the cache returning "dirty" results instead of what you would expect.

I could imagine there being failures in tests if the returned objects are being mutated somewhere. That would likely yield to the cache returning "dirty" results instead of what you would expect.

I don't think that should be the case... but it's possible. Sorry, haven't had time to dig into it more yet.

@andir @robeden please wait with new commits on this. @nimrodkor's team is leading a big performance change. The PR should be opened soon. I hope we will see different results after those changes. We did see a very big change on our own repos.

@andir - Nimrod reports run time reduction of about 60%, but I expect it to be much higher in your case. The iterative approach has a loop on variables and then a loop on modules (possibly at multiple levels) so I expect you’re seeing an “N * M” (at least) scenario.

Thanks for the work! I'll give this a try in a bit. It is a massive PR that must have been cooking for a while! Will have a look at it.

I just finished running this against the same repository as mentioned initially. The entire process now only takes about 4 minutes instead of the initial 1h 50min! I did not apply any patches to the code.

Not sure if it is new but I didn't managed to run this code without network access (anymore?). I had to set an invalid http proxy to get it going without network:

$ docker run -e https_proxy=http://localhost --network none --rm -v /my-code:/my-code-it checkov:local --no-guide -d /my-codehi @andir can you share the results with latest checkov version? did it improve the duration?

hi @andir can you share the results with latest checkov version? did it improve the duration?

I just ran the latest version from the master branch (99707fd10a5052b798314342f012ef4e139f92eb) against my repo.

Testing just our modules subdir is now taking only 19 seconds. Running it against all the workspaces at the same time takes about 2 minutes and 22 seconds.

A huge improvement from the initial results! Thanks for your work on this! This is already much closer to what I would consider production ready :-)

great @andir - i'm closing the issue for now. If you'd like to make further improvements, we would accept a PR.

Describe the bug

I've just tried checkov on a medium sized repository that contains ~70 modules and around 20 Terraform workspaces. Running checkov against the entire repository takes about 1 hour and 50 minutes on current gen desktop CPU. In contrast something like

tfseconly takes in the order of ~20s for the same code. My understanding is that both tools are primarily busy evaluating the logic of the Terraform code (substituting variables, instantiating modules, ...) and applying each rule set is the cheaper part of the execution. Correct me if I am wrong.clocsays the following about my Terraform folder:Running checkov against individual modules takes somewhere between a ~5 to 30s which is totally fine when looking at their complexity.

Having done some light profiling of the application it appears as if the (costly?) parsing of each HCL block in the Terraform files is done over and over again for each instance of a module.

I slapped some

@lru_cache(max_size=1024)annotation on to theparse_var_blocksfunction which resulted in a 13% reduction of execution time. Increasing the cache size to an unbounded limit had more influence on the performance but not to the point where the runtime is anywhere near what I would feel comfortable with in CI.Anything going from here seems to require more knowledge about the code base.

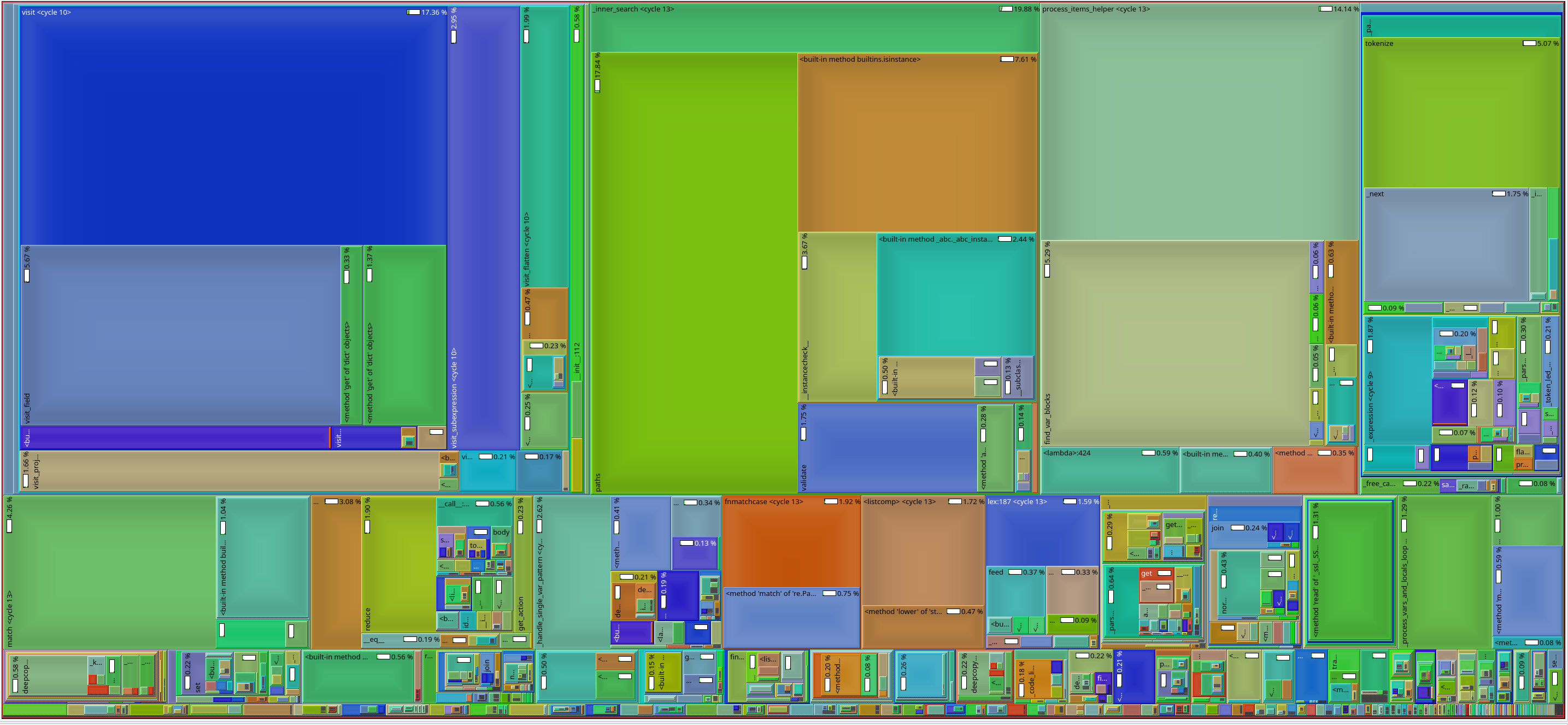

Profile results after applying the above caching patch:

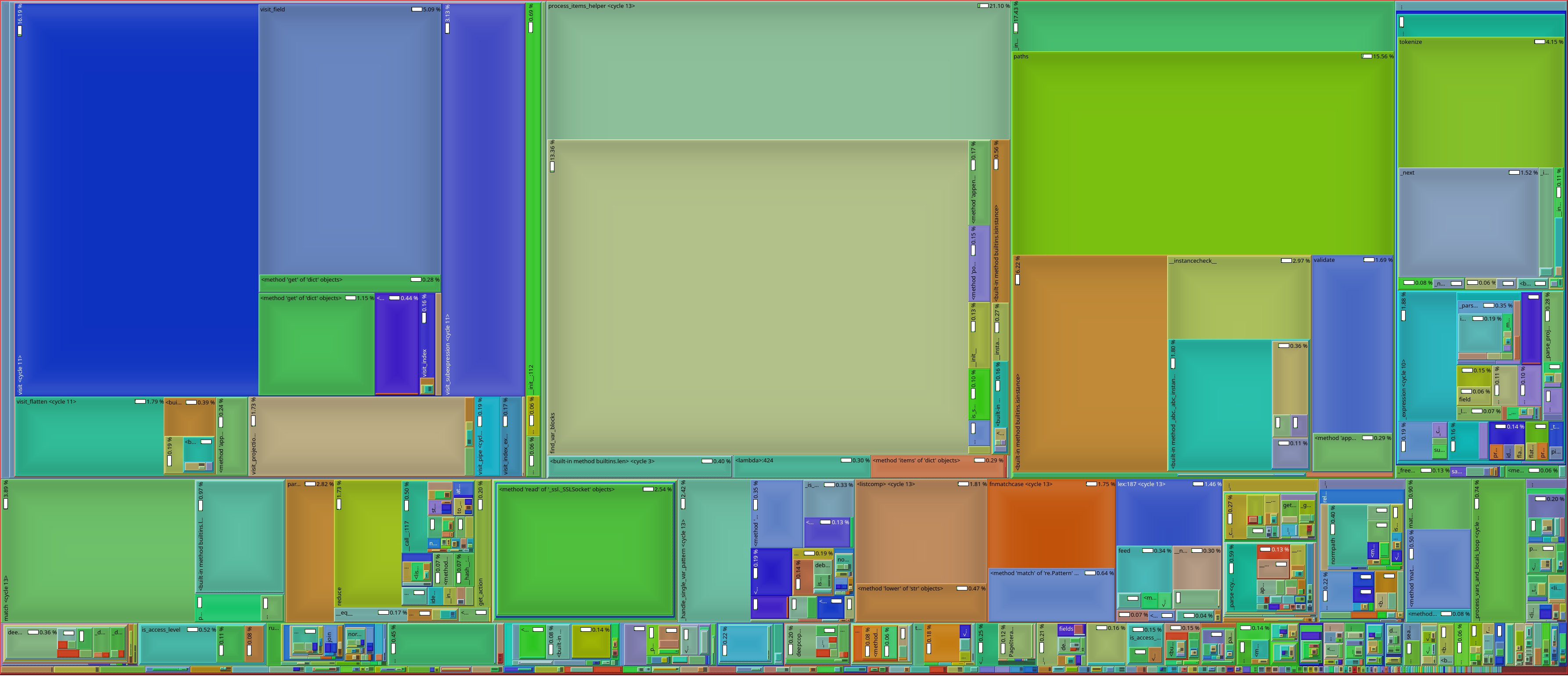

Profile results before applying the above caching patch:

Looking at the output of

strace -e openat bin/checkov ....I can see that checkov is reading some files as often as four times within one run.To Reproduce Steps to reproduce the behavior:

Expected behavior I would expect a runtime in the order of seconds or minutes but not tens of minutes or almost two hours. .

Desktop (please complete the following information):