Good morning :)

This package implements a generic point cloud registration system whose pipeline can be reconfigured at runtime through yaml files (loaded into the ROS parameter server). This way, end users can configure the pipeline to their specific needs without having to write application-specific code.

You can see the list of available algorithms and parameters in the drl_configs.yaml file. To make the package easier to use, I included links at the beginning of the readme to repositories and launch files for the main use cases, along with videos.

To fit a large set of use cases, many types of algorithms can be added to each stage of the pipeline (shown in the diagrams at the beginning of the readme). For point cloud registration, several variants of Iterative Closest Point (ICP) and Normal Distributions Transform (NDT) can be used, along with Principal Component Analysis (PCA).

The operation of the pipeline is very different from AMCL, because it is not particle based. Instead, it uses feature matching for finding the initial alignment (in the case of robot localization it corresponds to the approximate robot position in the map) and then uses accurate point cloud registration algorithms for estimating the 3 DoF (x, y, yaw) or 6 DoF (x, y, z, roll, pitch, yaw) pose of the robot (or an object in the scene, for 3D perception).

As such, unlike AMCL, this approach does not require the robot to move around at startup so that the particles can converge to a single location. Moreover, it can be much more accurate and efficient at tracking, since it uses point cloud registration algorithms, which are better at finding the local optimum when aligning the sensor data to the map. You can find a comparison between this approach and AMCL in the links at the bottom of the readme, which point to my dissertation and associated publications.
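To make the refinement stage more concrete, here is a minimal sketch of 2D point-to-point ICP in plain Python. It is not the code used in this package (which relies on PCL and supports several ICP and NDT variants); the function names are hypothetical, the neighbor search is brute force, and it assumes the initial pose guess is already close to the true pose, which is the job of the feature-matching stage.

```python
import math

def nearest(point, targets):
    """Brute-force nearest neighbor (a real system would use a k-d tree or lookup table)."""
    return min(targets, key=lambda t: (t[0] - point[0]) ** 2 + (t[1] - point[1]) ** 2)

def best_rigid_transform_2d(src, dst):
    """Closed-form least-squares (yaw, tx, ty) that maps paired src points onto dst."""
    n = len(src)
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(p[0] for p in dst) / n
    dy = sum(p[1] for p in dst) / n
    dot = cross = 0.0
    for (ax, ay), (bx, by) in zip(src, dst):
        ax, ay, bx, by = ax - sx, ay - sy, bx - dx, by - dy
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    yaw = math.atan2(cross, dot)
    c, s = math.cos(yaw), math.sin(yaw)
    return yaw, dx - (c * sx - s * sy), dy - (s * sx + c * sy)

def apply_transform(points, yaw, tx, ty):
    """Rotate points by yaw, then translate by (tx, ty)."""
    c, s = math.cos(yaw), math.sin(yaw)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]

def icp_2d(scan, map_points, initial_pose=(0.0, 0.0, 0.0), iterations=20):
    """Refine an initial (x, y, yaw) guess; returns the estimated (x, y, yaw)."""
    x, y, yaw = initial_pose
    for _ in range(iterations):
        moved = apply_transform(scan, yaw, x, y)
        matches = [nearest(p, map_points) for p in moved]
        # Re-estimate the full transform from the original scan to the matched map points.
        yaw, x, y = best_rigid_transform_2d(scan, matches)
    return x, y, yaw
```

Calling `icp_2d(scan, map_points, initial_pose)` returns a 3 DoF pose. Like any local method, it only converges to the correct pose when the initial guess puts most scan points closest to their true counterparts in the map.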

For 3 DoF localization using 2D lidars, I developed algorithms to deal with that particular use case, such as:

- Correct estimation of normals, which are critical for the initial alignment stage, since they are used in the keypoint detection and description phase

- Parsing of 2D maps

- Usage of point cloud registration algorithms tuned to 2D

- Implementation of lookup tables to significantly decrease the time spent searching for neighbors (when compared to k-d trees, comparison described in this paper)

- Among others, which I implemented either in this package or in my fork of the Point Cloud Library
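As an illustration of the lookup table idea from the list above, here is a minimal sketch in plain Python. It is only an assumption of how such a table can work, not the implementation in this package or in my PCL fork: the class name, the `margin` parameter and the grid layout are hypothetical. Each cell stores the map point closest to the cell center, so a query becomes an array access instead of a tree traversal, at the cost of memory and of an approximation bounded by the cell size.

```python
import math

class NearestNeighborLookupTable:
    """Grid that stores, for each cell, the map point closest to the cell center."""

    def __init__(self, map_points, resolution, margin=1.0):
        self.resolution = resolution
        self.min_x = min(p[0] for p in map_points) - margin
        self.min_y = min(p[1] for p in map_points) - margin
        max_x = max(p[0] for p in map_points) + margin
        max_y = max(p[1] for p in map_points) + margin
        self.cols = int(math.ceil((max_x - self.min_x) / resolution))
        self.rows = int(math.ceil((max_y - self.min_y) / resolution))
        # One-off brute-force pass over the map; afterwards each query is O(1).
        self.cells = []
        for row in range(self.rows):
            cy = self.min_y + (row + 0.5) * resolution
            for col in range(self.cols):
                cx = self.min_x + (col + 0.5) * resolution
                self.cells.append(
                    min(map_points, key=lambda p: (p[0] - cx) ** 2 + (p[1] - cy) ** 2)
                )

    def query(self, x, y):
        """Return the stored neighbor for the cell containing (x, y), or None if outside."""
        col = int(math.floor((x - self.min_x) / self.resolution))
        row = int(math.floor((y - self.min_y) / self.resolution))
        if not (0 <= col < self.cols and 0 <= row < self.rows):
            return None
        return self.cells[row * self.cols + col]
```

Since the map is static during localization, the table only needs to be built once, which is why the constant-time query pays off when the registration algorithm performs many neighbor searches per scan.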

The main reasons why this package expects point clouds as input instead of laserscan data are:

- A unified interface for both 2D and 3D data

- Most of our mobile robots are equipped with two lidars (one in the front and another in the back). For that reason, I developed the laserscan_to_pointcloud package, which receives data from both lasers and creates a single point cloud with 360° laser data. The laserscan_to_pointcloud package can be used with one or many lidars, and I implemented spherical linear interpolation to reduce the impact of the scan deformation caused by the robot moving while the laser sweeps to retrieve the measurements.

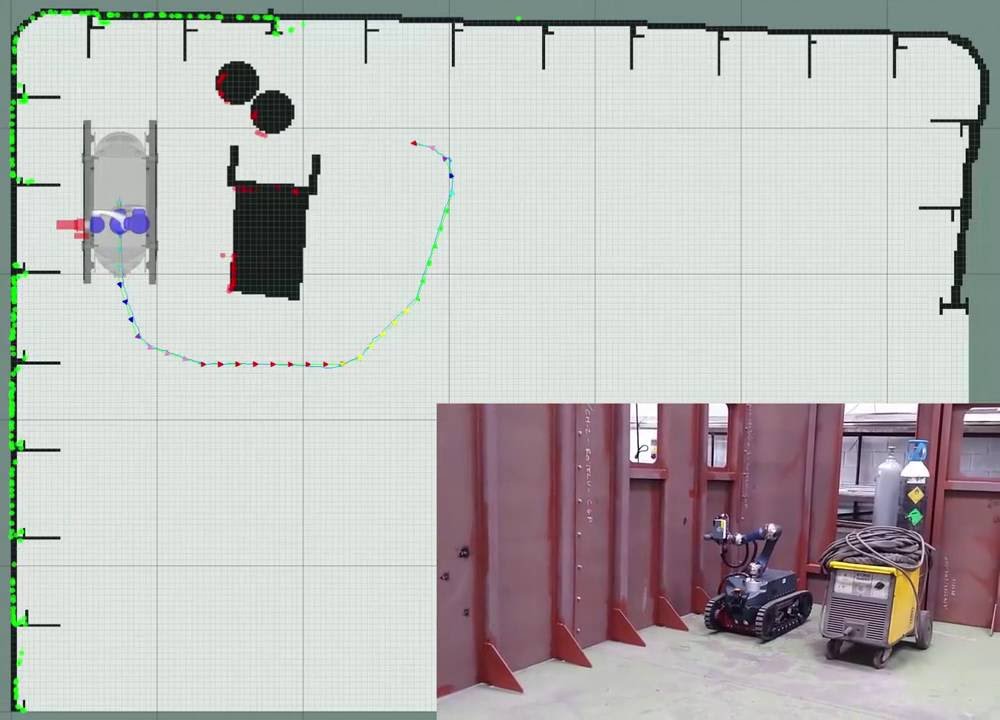

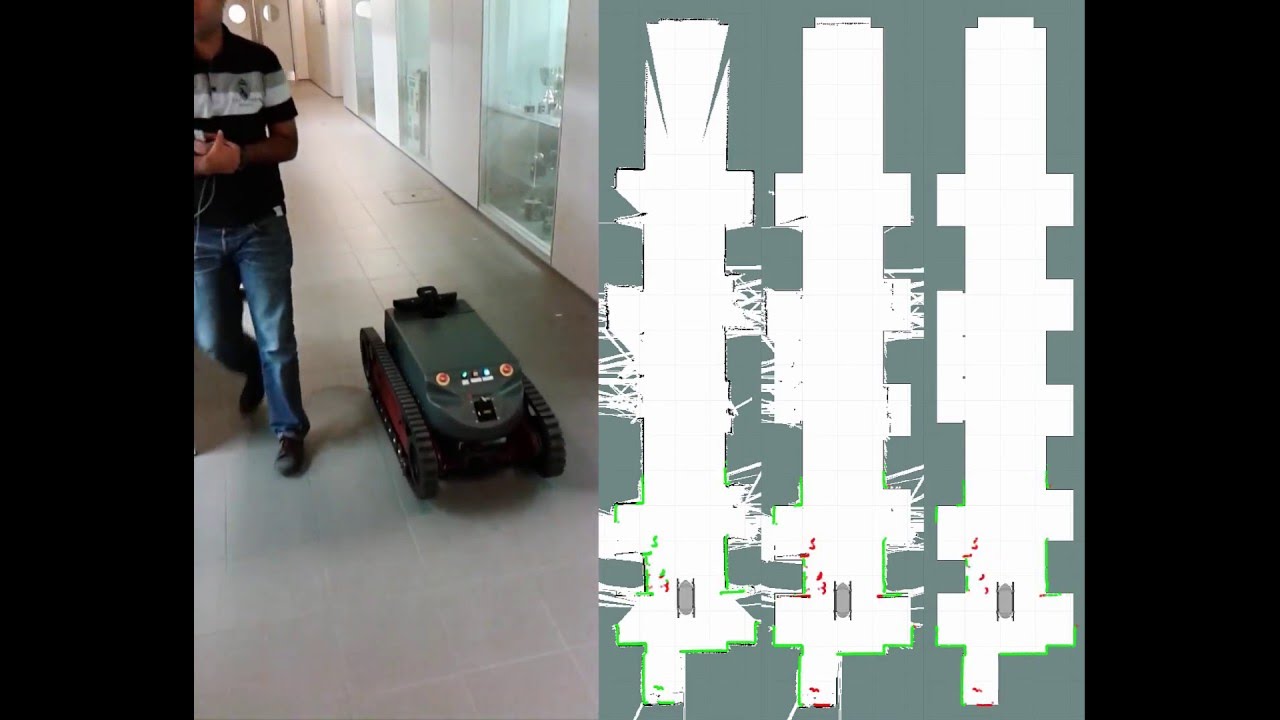

In this video you can see the merged lidar data and its overlay on top of the 2D map after point cloud registration.
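To show what the spherical linear interpolation mentioned above does to a scan, here is a minimal sketch in plain Python. It is not the laserscan_to_pointcloud code: the function names are hypothetical, and it assumes the sensor poses at the start and end of the sweep are known, that the beams are fired at evenly spaced times, and that the translation can be interpolated linearly.

```python
import math

def slerp(q0, q1, t):
    """Spherical linear interpolation between unit quaternions (w, x, y, z)."""
    dot = sum(a * b for a, b in zip(q0, q1))
    if dot < 0.0:  # flip one quaternion to take the shorter arc
        q1 = tuple(-c for c in q1)
        dot = -dot
    if dot > 0.9995:  # nearly parallel: fall back to normalized linear interpolation
        q = tuple(a + t * (b - a) for a, b in zip(q0, q1))
    else:
        theta = math.acos(dot)
        w0 = math.sin((1.0 - t) * theta) / math.sin(theta)
        w1 = math.sin(t * theta) / math.sin(theta)
        q = tuple(w0 * a + w1 * b for a, b in zip(q0, q1))
    norm = math.sqrt(sum(c * c for c in q))
    return tuple(c / norm for c in q)

def yaw_to_quaternion(yaw):
    return (math.cos(yaw / 2.0), 0.0, 0.0, math.sin(yaw / 2.0))

def quaternion_to_yaw(q):
    w, x, y, z = q
    return math.atan2(2.0 * (w * z + x * y), 1.0 - 2.0 * (y * y + z * z))

def deskew_scan(ranges, angle_min, angle_increment, pose_start, pose_end):
    """Project each beam using the sensor pose interpolated at its firing time.

    Poses are (x, y, yaw) of the sensor at the start and end of the sweep.
    """
    q_start = yaw_to_quaternion(pose_start[2])
    q_end = yaw_to_quaternion(pose_end[2])
    last = max(len(ranges) - 1, 1)
    points = []
    for i, r in enumerate(ranges):
        t = i / last  # fraction of the sweep elapsed when beam i was fired
        x = pose_start[0] + t * (pose_end[0] - pose_start[0])
        y = pose_start[1] + t * (pose_end[1] - pose_start[1])
        yaw = quaternion_to_yaw(slerp(q_start, q_end, t))
        beam = yaw + angle_min + i * angle_increment
        points.append((x + r * math.cos(beam), y + r * math.sin(beam)))
    return points
```

For a planar robot the quaternion interpolation reduces to interpolating the yaw along the shortest arc, but writing it with quaternions keeps the same code path valid for 6 DoF poses.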

As far as I understood, this localization uses NDT-MCL. How does it compare with a traditional AMCL package?

Also, as I can see that the package focuses on 3D lidars, does it make sense to use this package with only a 2D lidar?

And what is the reason for using PCL instead of laserscan data for 2D measurements?