danil-z closed 7 years ago

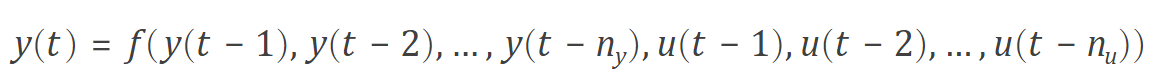

I had never heard of it before, so I did some research. It basically works like this:

So to predict the new value of y, previous inputs and outputs are used as input, right? I'm also seeing some different examples that use only previous outputs as input. I think it is possible in this library, but you'd have to use recurrent connections.
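Written out, the idea is roughly the following. This is only a sketch of the usual notation: `f` stands for whatever nonlinear function the network learns, and using the same delay count `n` for inputs and outputs is an assumption.

```latex
% NARX: current and previous inputs plus previous outputs
y_t = f\left(u_t, u_{t-1}, \dots, u_{t-n},\; y_{t-1}, \dots, y_{t-n}\right)

% Variant that uses only previous outputs
y_t = f\left(y_{t-1}, \dots, y_{t-n}\right)
```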

Curious what you find here

So I worked it out: assuming the network takes the inputs u_t to u_{t-n} and the outputs y_{t-1} to y_{t-n} as input, it's definitely possible. Schematic:

So basically, to copy the previous inputs to the next node, we need connections with a constant weight (= 1) and nodes with a bias of 0 and the IDENTITY function as activation. On every activation, each node's previous activation is moved to the next node. The same is done for the outputs. Edit: I just figured out that the nodes holding the previous inputs/outputs should probably be activated in reverse order.
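To see why the activation order matters, here is a minimal plain-JavaScript sketch of such a delay chain. It does not use synaptic; `createDelayLine`, `shiftReversed` and `shiftForward` are hypothetical names, and each array slot stands in for one identity node with weight 1 and bias 0.

```javascript
// Hypothetical delay line: taps[0] holds the newest value, the last tap the oldest.
function createDelayLine(size) {
  return new Array(size).fill(0);
}

// Activate the taps from last to first: each tap copies the OLD value of its
// predecessor (identity activation, weight 1, bias 0), then the new value enters.
function shiftReversed(taps, value) {
  for (let i = taps.length - 1; i > 0; i--) {
    taps[i] = taps[i - 1];
  }
  taps[0] = value;
  return taps;
}

// Activate the taps from first to last, for comparison: the new value is
// copied through the whole chain in a single step, so the history is lost.
function shiftForward(taps, value) {
  taps[0] = value;
  for (let i = 1; i < taps.length; i++) {
    taps[i] = taps[i - 1];
  }
  return taps;
}

const reversedLine = createDelayLine(3);
const forwardLine = createDelayLine(3);
[1, 2, 3].forEach(function (v) {
  shiftReversed(reversedLine, v);
  shiftForward(forwardLine, v);
});
console.log(reversedLine); // [ 3, 2, 1 ]
console.log(forwardLine);  // [ 3, 3, 3 ]
```

With reverse activation the chain keeps the last three values; with forward activation every node ends up holding the current value.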

The previous outputs are fed into the hidden layer, together with the current input and previous inputs.
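As a rough illustration of what the hidden layer sees at each step, the sketch below assembles that vector by hand. `narxInputVector` is a hypothetical helper, not part of synaptic; it assumes scalar signals, the same delay count `n` for inputs and outputs, and zero-padding before the start of the sequence.

```javascript
// Hypothetical helper: build the vector fed into the hidden layer at time t.
// inputs[t] is u_t, outputs[t] is y_t, n is the number of delays (assumption:
// the same n for inputs and outputs).
function narxInputVector(inputs, outputs, t, n) {
  const vector = [inputs[t]]; // current input u_t
  for (let k = 1; k <= n; k++) {
    // previous inputs u_{t-1} .. u_{t-n}, zero-padded before the sequence starts
    vector.push(t - k >= 0 ? inputs[t - k] : 0);
  }
  for (let k = 1; k <= n; k++) {
    // previous outputs y_{t-1} .. y_{t-n}, zero-padded before the sequence starts
    vector.push(t - k >= 0 ? outputs[t - k] : 0);
  }
  return vector;
}

console.log(narxInputVector([1, 2, 3, 4], [10, 20, 30], 3, 2)); // [ 4, 3, 2, 30, 20 ]
```

In the network itself the delay nodes would supply these values, so nothing has to be assembled manually; the helper only shows the layout.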

The only change this requires in synaptic's code is to make some nodes non-propagatable, i.e. they should not modify their bias or the weights of their incoming connections. This seems doable.
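Conceptually, the change could look something like the sketch below. This is not synaptic's internal code: `makeNode`, `applyGradients` and the `trainable` flag are hypothetical, and the update is plain gradient descent on a simple object.

```javascript
// Hypothetical plain-object node; NOT synaptic's internal representation.
function makeNode(bias, weights, trainable) {
  return { bias: bias, weights: weights.slice(), trainable: trainable };
}

// Plain gradient-descent step that skips nodes marked as non-trainable,
// so delay nodes keep their bias of 0 and incoming weight of 1.
function applyGradients(node, biasGradient, weightGradients, rate) {
  if (!node.trainable) {
    return node; // frozen: leave bias and incoming weights untouched
  }
  node.bias -= rate * biasGradient;
  node.weights = node.weights.map(function (w, i) {
    return w - rate * weightGradients[i];
  });
  return node;
}

const delayNode = makeNode(0, [1], false);
const hiddenNode = makeNode(0.2, [0.5, -0.5], true);
applyGradients(delayNode, 0.5, [0.5], 0.1);      // stays at bias 0, weight 1
applyGradients(hiddenNode, 1.0, [1.0, -1.0], 0.1); // bias and weights are updated
```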

TL;DR this can be implemented. I'll start working on NARX networks, all I need is some dataset that NARX networks should be able to achieve low error on (versus regular networks).

Update: I managed to build a NARX network. However, I have doubts about the backpropagation algorithm. Does anyone have a training set?

Edit: I tested it on a sequence task, and it did really well. I'll be posting a commit with the NARX network soon :)

This sounds like the context neurons in Elman networks (neurons that hold previous activations through a connection with a fixed weight of 1). Those types of networks were superseded by RNNs, which are covered by the LSTM-g algorithm. When a neuron is self-connected, the self-connection has a fixed weight W_jj = 1. You can create self-connections in synaptic like this:

    var synaptic = require('synaptic');
    var Layer = synaptic.Layer;
    var Network = synaptic.Network;

    var inputLayer = new Layer(3);
    var hiddenLayer = new Layer(10);
    var outputLayer = new Layer(2);

    inputLayer.project(hiddenLayer);
    hiddenLayer.project(outputLayer);
    hiddenLayer.project(hiddenLayer); // recurrent connection

    var RNN = new Network({
      input: inputLayer,
      hidden: [hiddenLayer],
      output: outputLayer
    });