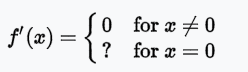

Judging from Wikipedia (see: Binary Step), that assumption is definitely correct: the derivative of the binary step function is 0 for every x != 0 and undefined at x = 0.

But if the derivative is 0 for all x != 0, the error computed for any neuron that uses Neuron.squash.HLIM will also be 0. In other words, the neuron's weights and bias will never change, because every update is scaled by that zero derivative.
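To make this concrete, here is a minimal Python sketch, assuming a single neuron `y = hlim(w*x + b)` trained with plain gradient descent on squared error. The names `hlim`, `hlim_derivative_exact` and `train_step` are hypothetical and not part of synaptic, and the update rule is a generic textbook one, not synaptic's actual propagation code.

```python
def hlim(z: float) -> float:
    """Hard limit (binary step): 1 for z > 0, else 0."""
    return 1.0 if z > 0 else 0.0


def hlim_derivative_exact(z: float) -> float:
    """Mathematical derivative of the step for z != 0 (undefined at z == 0; 0 used here)."""
    return 0.0


def train_step(w, b, x, target, derivative, lr=0.5):
    """One gradient-descent update of a hypothetical neuron y = hlim(w*x + b)
    under squared error E = 0.5 * (y - target)**2."""
    z = w * x + b
    y = hlim(z)
    # error signal (delta) = dE/dy * dy/dz; it is 0 whenever derivative(z) is 0
    delta = (y - target) * derivative(z)
    # dE/dw = delta * x and dE/db = delta
    return w - lr * delta * x, b - lr * delta


w, b = -0.3, 0.1
# The neuron outputs 0 but should output 1; the zero derivative still blocks any update.
new_w, new_b = train_step(w, b, x=1.0, target=1.0, derivative=hlim_derivative_exact)
print(new_w, new_b)
```

However wrong the output is, `delta` is multiplied by 0, so `w` and `b` come back unchanged.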

So my only guess is that the derivative for x != 0 was deliberately set to 1 so that values can still propagate back through the neuron.
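For comparison, here is the same hypothetical neuron with the derivative replaced by 1 everywhere. This is a swapped-in technique, commonly called a straight-through estimator; whether synaptic chose 1 for this reason is only the guess above. All names are hypothetical, as before.

```python
def hlim(z: float) -> float:
    """Hard limit (binary step): 1 for z > 0, else 0."""
    return 1.0 if z > 0 else 0.0


def hlim_derivative_pass_through(z: float) -> float:
    """Surrogate derivative: pretend dy/dz == 1 so the error passes through unchanged."""
    return 1.0


def train_step(w, b, x, target, derivative, lr=0.5):
    """One gradient-descent update of a hypothetical neuron y = hlim(w*x + b)
    under squared error E = 0.5 * (y - target)**2."""
    z = w * x + b
    y = hlim(z)
    # with the surrogate derivative, delta equals the raw output error (y - target)
    delta = (y - target) * derivative(z)
    return w - lr * delta * x, b - lr * delta


w, b = -0.3, 0.1
# Same starting point as the zero-derivative case: output 0, target 1.
w, b = train_step(w, b, x=1.0, target=1.0, derivative=hlim_derivative_pass_through)
print(w, b, hlim(w * 1.0 + b))
```

Here the first step moves `w` and `b` enough that the neuron now outputs 1. Once the output matches the target, `delta` becomes 0 and updates stop on their own, so the surrogate only drives learning while there is an error.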

Maybe I misunderstood something, but it seems to me that HLIM's derivative should be equal to 0 when x != 0. Am I wrong?