Some applications:

- Head pose estimation (e.g. which direction the person is looking in)

- Extra features for face verification/identification

- Face morphing

- Face replacement/swap

- Detect activity of mouth, eyes, head, etc. (e.g. detect if someone is talking)

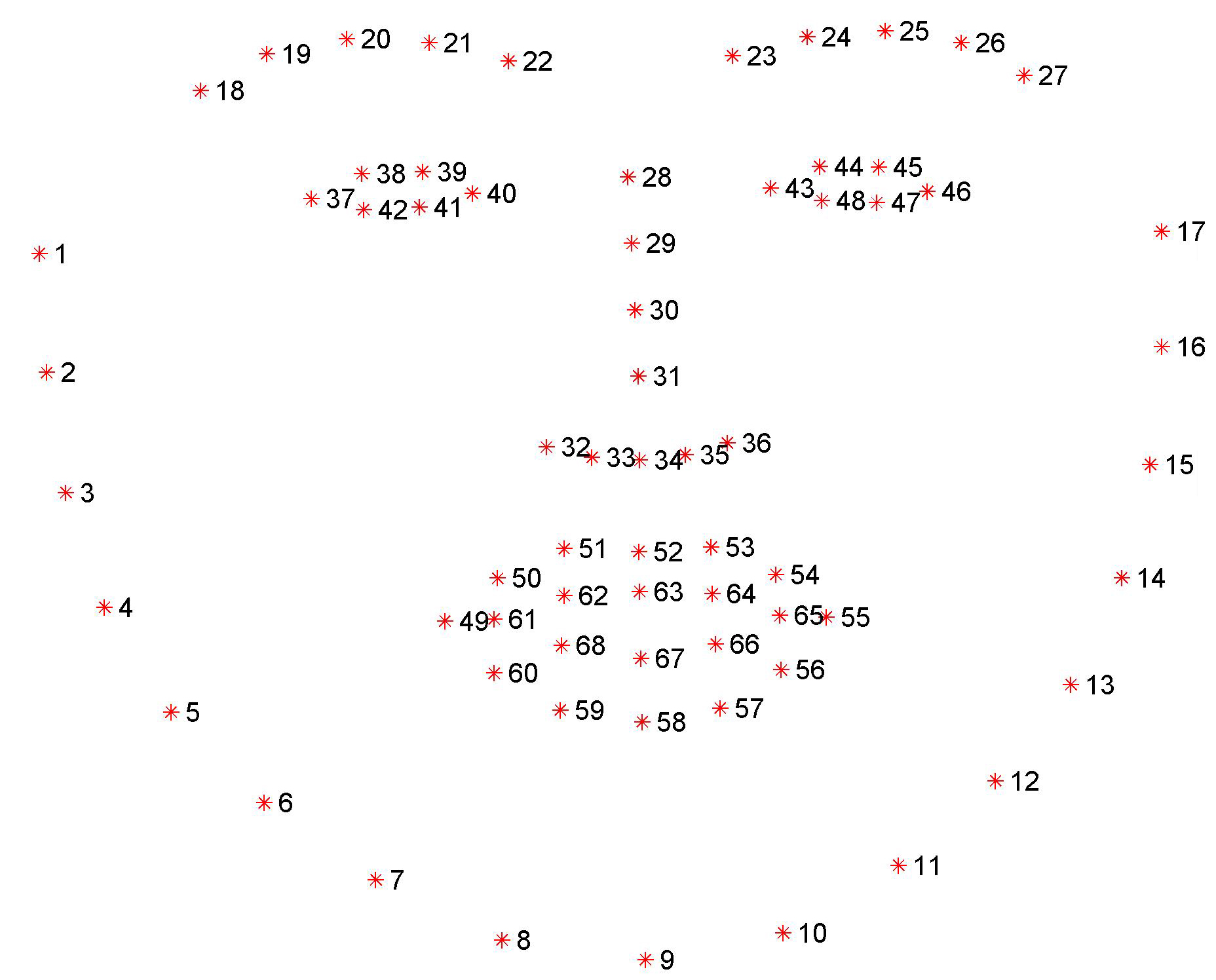

- Detect position of individual parts of the face (e.g. position of the eyes)

- Face frontalization

- Detect parts of the face under occlusion (some techniques provide this information)
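As a rough illustration of two of the items above (locating the eyes and detecting mouth activity), here is a minimal sketch in plain Python. It assumes a landmark detector has already returned 2D points. The landmark names (`mouth_left`, `lip_top`, and so on), the helper functions, and the `0.2` threshold are all hypothetical and do not correspond to any particular library or landmark layout.

```python
import math

def distance(p, q):
    """Euclidean distance between two (x, y) points."""
    return math.hypot(p[0] - q[0], p[1] - q[1])

def eye_center(eye_points):
    """Estimate the eye position as the mean of the landmarks around it."""
    xs = [p[0] for p in eye_points]
    ys = [p[1] for p in eye_points]
    return (sum(xs) / len(xs), sum(ys) / len(ys))

def mouth_open_ratio(landmarks):
    """Lip gap divided by mouth width, so the value is scale independent.

    `landmarks` is a dict with the hypothetical keys used below.
    """
    width = distance(landmarks["mouth_left"], landmarks["mouth_right"])
    if width == 0:
        return 0.0
    gap = distance(landmarks["lip_top"], landmarks["lip_bottom"])
    return gap / width

def is_mouth_open(landmarks, threshold=0.2):
    """Assumed threshold; it would need tuning on real data."""
    return mouth_open_ratio(landmarks) > threshold

# Made-up coordinates standing in for a detector's output.
mouth = {
    "mouth_left": (40, 80),
    "mouth_right": (80, 80),
    "lip_top": (60, 75),
    "lip_bottom": (60, 85),
}
left_eye = [(30, 40), (34, 38), (38, 40), (34, 42)]

print(eye_center(left_eye))
print(mouth_open_ratio(mouth))
print(is_mouth_open(mouth))
```

To detect talking rather than a single open mouth, one option would be to track how this ratio changes over consecutive video frames.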

What could I use landmarks for?