Hi @hcbwiz, I read your issues (#538 and #533) and see that you have done some work on testing SMB Direct with Windows clients. Would you be willing to share more details with me? I couldn't find any contact information on your homepage. If you would like to talk, here's my email address: zw.xie@high-flyer.cn. Thank you.

But when I check out to

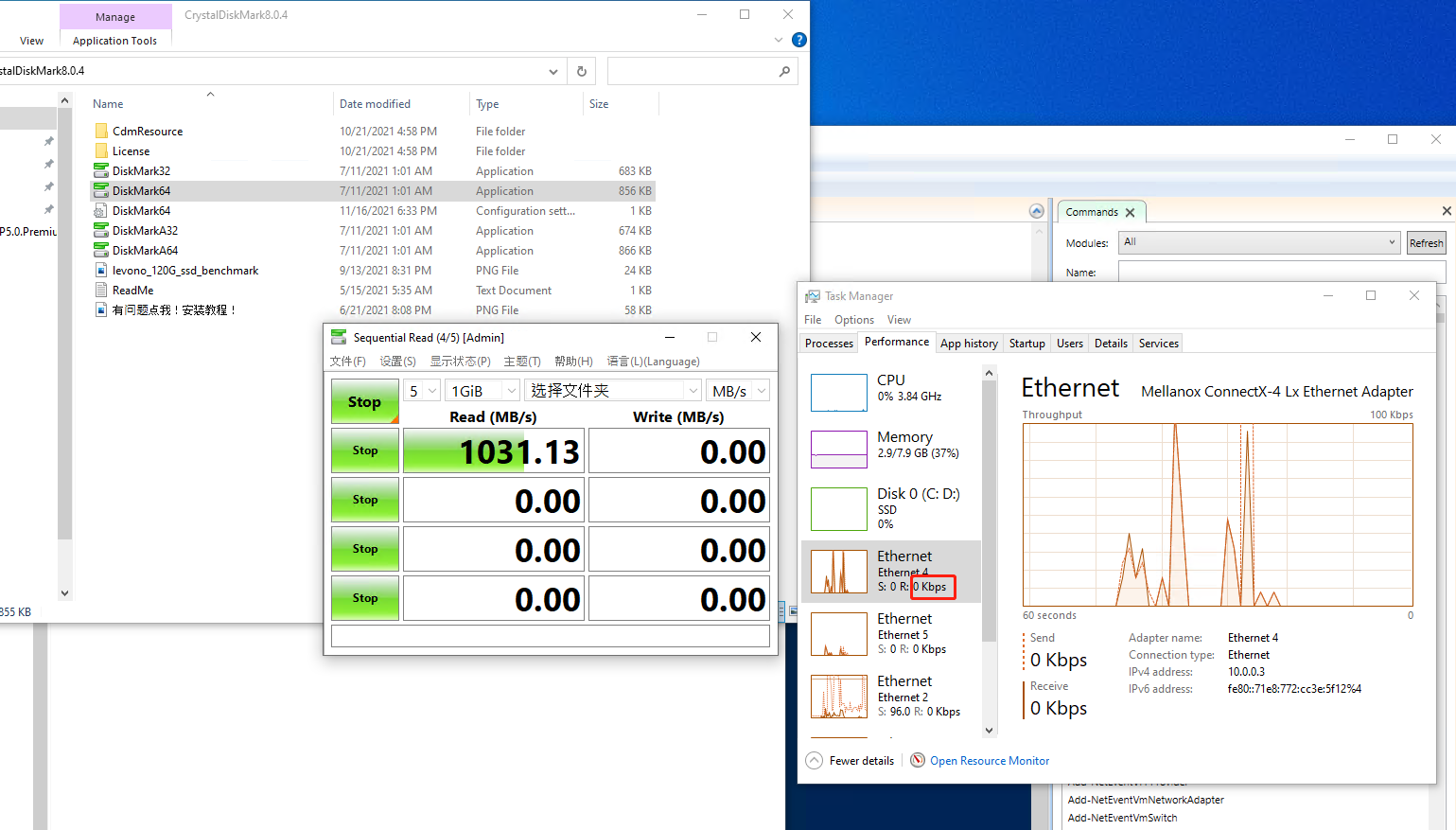

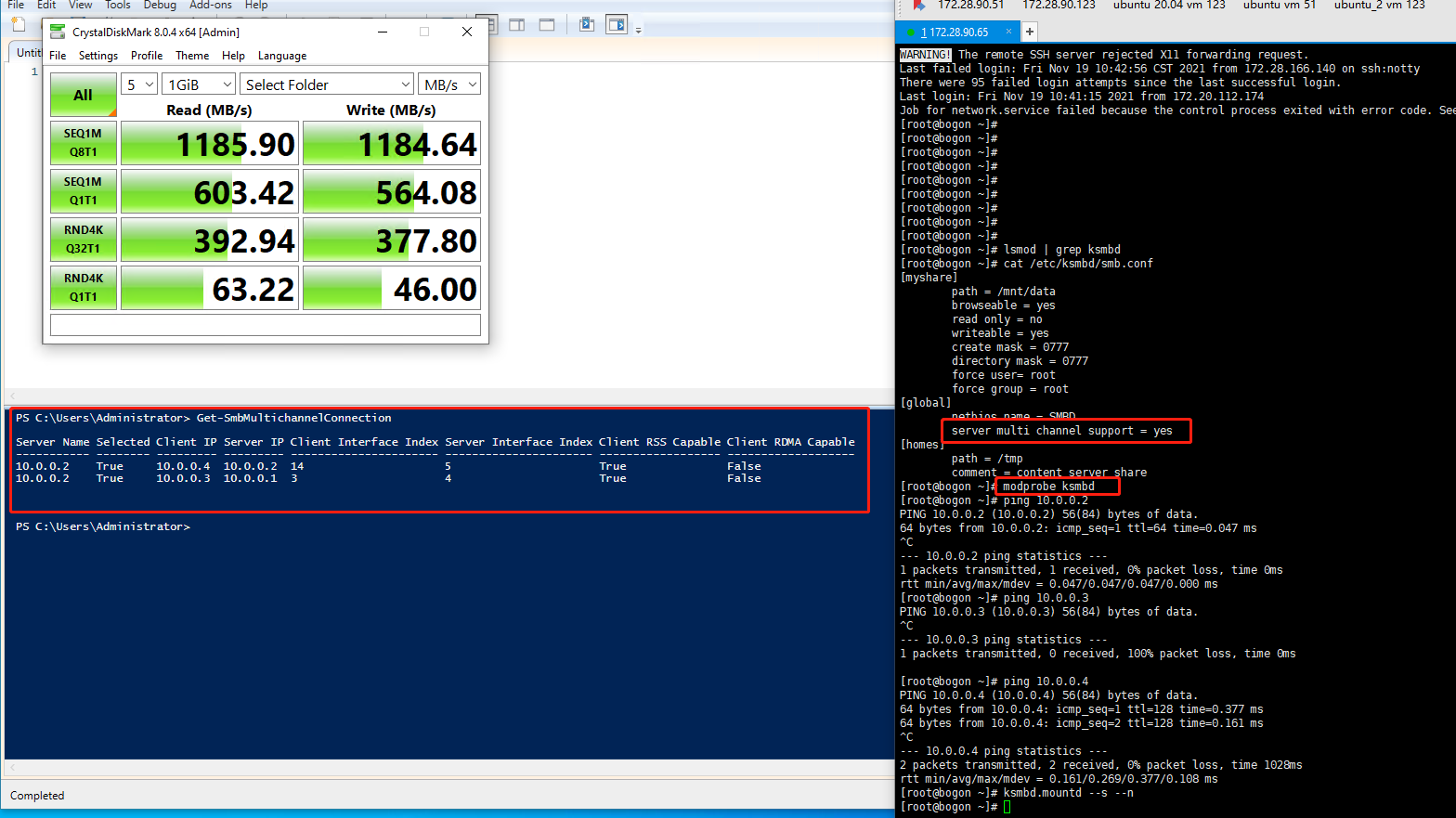

Two quick tests on the same SSD array: iSCSI and SMB Direct with RDMA perform about the same (ignore the slower write in this image). It also shows up nicely in the performance counters. The client is Windows 10 Pro for Workstations build 19042. The server is a Debian build with a 5.14 kernel, @hcbwiz's ksmbd-rdma branch, and a patch to force

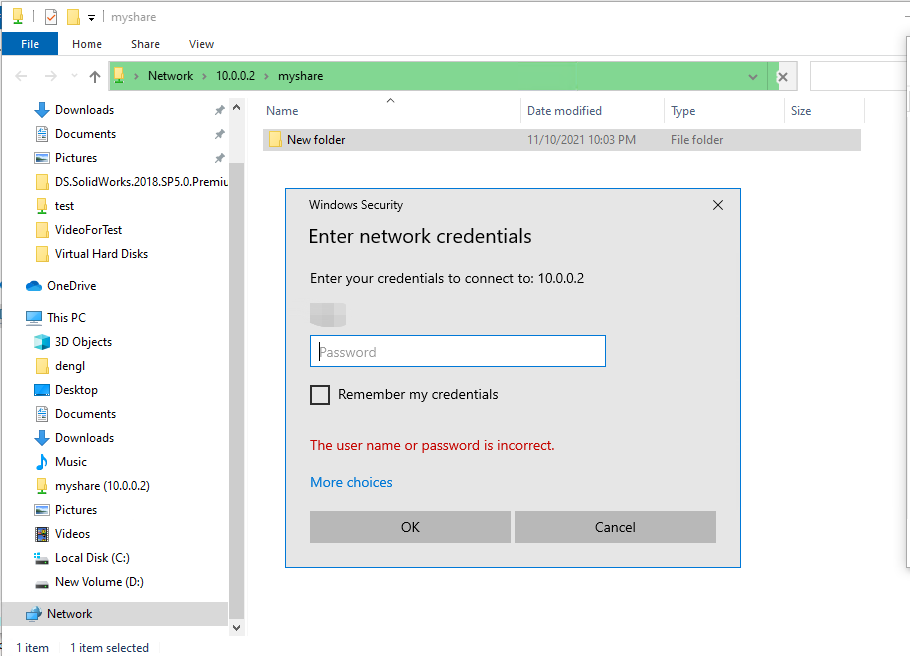

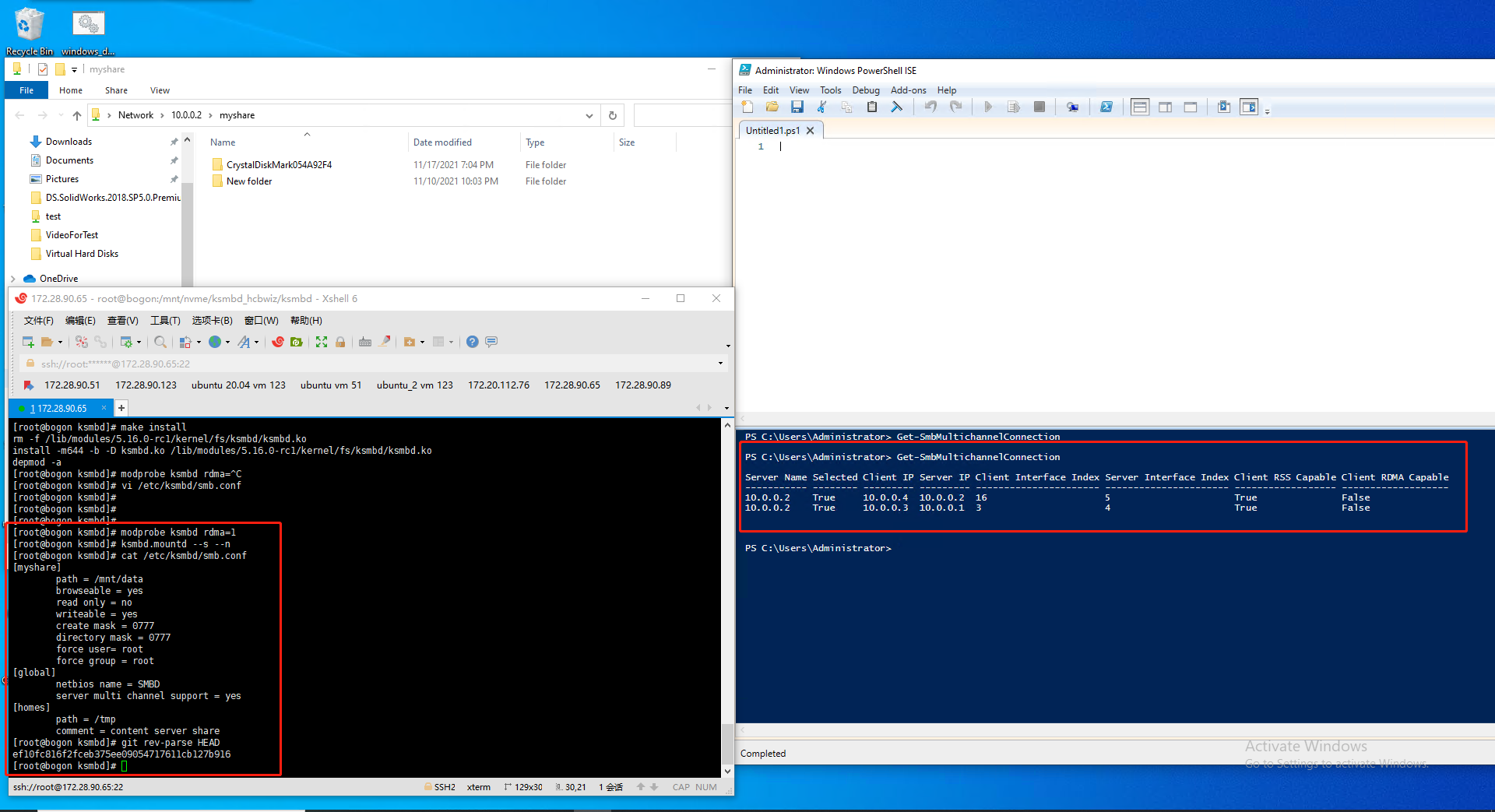

Hi, I've recently been trying to test SMB Direct with Windows clients (using Mellanox ConnectX-6 InfiniBand adapters), without success. I can build ksmbd and mount a share on Windows Server 2016 clients, but RDMA does not seem to be enabled. Is this feature already implemented? If so, what configuration is needed to enable it (`server multi channel support = yes` and anything else)?

The currently available information is a bit confusing. The mail "[PATCH v8 00/13] ksmbd: introduce new SMB3 kernel server" describes SMB Direct as "Partially Supported. SMB3 Multi-channel is required to connect to Windows client", and lists SMB3 Multi-channel as "partially supported" too. The same mail says 'SMB Direct is only currently possible with ksmbd (among Linux servers)'. So I guess Windows clients are not supported yet. However, Readme.md lists SMB Direct (RDMA) and Multi-channel under "Features implemented", and successful cases seem to have been reported in other issues already (#538, #529).

I would appreciate it if you could clarify this and share any progress on this feature.