One more thing: my dataset contains text in a non-Latin language. Do I need to make any modifications to train.py or any other files?

Closed by xxxpsyduck 5 years ago.


Hello,

1. For your own dataset, use --select_data / and --batch_ratio 1, i.e.:

parser.add_argument('--select_data', type=str, default='/',

help='select training data (default is MJ-ST, which means MJ and ST used as training data)')

parser.add_argument('--batch_ratio', type=str, default='1',

help='assign ratio for each selected data in the batch')

2. You should change opt.character into your own character list: https://github.com/clovaai/deep-text-recognition-benchmark/blob/6dc16df598d986d0ee872816a3fc8ef5f6c11183/train.py#L239

(2020.8.20 updated) Please read the data filtering part carefully. As a default setting, we filter out:

- length filtering: the images whose len(label) > batch_max_length (default is 25)

- character filtering: the images containing characters which are not in opt.character

Furthermore, as a default, we make the label lowercase here. To train the model for uppercase, comment out these lines. (Do not use the opt.sensitive option.)

So, for your own dataset, you should modify opt.character and comment out these lines.

Another way is simply to turn off the filtering part (use the --data_filtering_off option), or to modify this part and comment out these lines.

Instead of the character filtering part, you can simply use an [UNK] token with the modification below (for the attention decoder case).

To add the [UNK] token, change

https://github.com/clovaai/deep-text-recognition-benchmark/blob/3c2c89a88ad4f88690dce170225dfe05a0234dd5/utils.py#L108

to

list_token = ['[GO]', '[s]', '[UNK]']

and change the character lookup in AttnLabelConverter.encode to

text = [self.dict[char] if char in self.dict else self.dict['[UNK]'] for char in text]

If you do not use the character filtering part and use the [UNK] token, you should also comment out these lines.

Hope it helps. Best
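To make the [UNK] change concrete, here is a minimal sketch of an attention-style label converter with the extra token. UnkAttnLabelConverter is a hypothetical, simplified stand-in for AttnLabelConverter in utils.py. It uses plain Python lists instead of batched tensors and is not the repository's code.

```python
class UnkAttnLabelConverter:
    """Hypothetical, simplified converter: characters <-> class indices."""

    def __init__(self, character):
        # [GO]: start token, [s]: end-of-sentence token, [UNK]: any unknown character
        list_token = ['[GO]', '[s]', '[UNK]']
        self.character = list_token + list(character)
        self.dict = {char: i for i, char in enumerate(self.character)}

    def encode(self, label):
        # Append the end token, then look up each character.
        # Characters missing from the set map to [UNK] instead of raising KeyError.
        text = list(label) + ['[s]']
        return [self.dict[char] if char in self.dict else self.dict['[UNK]'] for char in text]

    def decode(self, indices):
        # Stop at the first [s]; unknown characters come back as the literal '[UNK]'.
        out = []
        for i in indices:
            token = self.character[i]
            if token == '[s]':
                break
            out.append(token)
        return ''.join(out)


converter = UnkAttnLabelConverter('abc')
print(converter.encode('ab?'))         # [3, 4, 2, 1]
print(converter.decode([3, 4, 2, 1]))  # ab[UNK]
```

Note that the extra token also adds one output class. A checkpoint trained without [UNK] will therefore not load into a model built with it.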

Thanks for the reply. I'm working with Japanese in particular, which has thousands of characters. So I have to copy all of them into the character list, am I correct?

@boy977 I recommend:

1. Create a char_list.txt file that contains those thousands of characters.

2. Load char_list.txt in train.py, then set opt.character = char_loaded, as in https://github.com/clovaai/deep-text-recognition-benchmark/blob/6dc16df598d986d0ee872816a3fc8ef5f6c11183/train.py#L266

Best
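A small sketch of the "load char_list.txt" step follows. load_char_list and chars_from_text are hypothetical helpers. The file layout is an assumption: either one character per line or all characters on one line both work, because every character of every line is collected and duplicates are dropped.

```python
from pathlib import Path


def chars_from_text(text):
    """Return the unique characters of `text` in first-seen order, ignoring line breaks."""
    seen = set()
    chars = []
    for line in text.splitlines():
        for ch in line:  # works for one character per line or one long line
            if ch not in seen:
                seen.add(ch)
                chars.append(ch)
    return ''.join(chars)


def load_char_list(path):
    """Hypothetical helper: read char_list.txt and return a string for opt.character."""
    # 'utf-8-sig' also strips a leading byte-order mark if an editor added one.
    return chars_from_text(Path(path).read_text(encoding='utf-8-sig'))


# Possible use in train.py after the arguments are parsed (placement is an assumption):
# opt.character = load_char_list('char_list.txt')
```

Spaces inside a line are kept on purpose, since a space can be a real label character. Make sure the file has no stray trailing spaces you don't want in the set.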

@ku21fan Hello. I tried to use the --PAD option along with --rgb, but I got this error:

RuntimeError: The expanded size of the tensor (1) must match the existing size (3) at non-singleton dimension 0. Target sizes: [1, 100, 100]. Tensor sizes: [3, 100, 100]

It seems like the PAD option only works with grayscale images. Or did I do something wrong?

@boy977 Yes, you will need to change some code to use the --PAD option along with --rgb. For example, see https://github.com/clovaai/deep-text-recognition-benchmark/blob/master/dataset.py#L277

@boy977 Yes, it was. I just fixed it. Please check the recent commit 9a6f6678b1ec3343fc6891ff964ac9af1b1d04c7. Thank you for the report :)

@ku21fan One more dumb question: what does "norm_ED" stand for?

@boy977 norm_ED is the normalized edit distance; it's another metric used to evaluate STR models.

@rahzaazhar About the norm_ED value: currently in the source code, it is the sum of the edit distances over all test cases (it is not "normalized" yet). I think we should divide it by the number of test cases so that performance can be compared across different datasets. What do you think?

@dviettu134 Thank you for the comment. You are right. I just updated norm_ED to the ICDAR2019 version of the normalized edit distance, which divides it by the number of test cases. Please check here: https://github.com/clovaai/deep-text-recognition-benchmark/blob/master/test.py#L139-L165

Best
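Here is a sketch of an ICDAR2019-style normalized edit distance. Each sample scores 1 minus its edit distance divided by the length of the longer string, and the scores are averaged over all test cases. This is my reading of the ICDAR2019 metric rather than a copy of test.py, so check the linked lines for the exact code. A plain Levenshtein dynamic program is used to keep the example dependency-free.

```python
def edit_distance(a, b):
    """Levenshtein distance via the classic two-row dynamic program."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        cur = [i]
        for j, cb in enumerate(b, start=1):
            cur.append(min(prev[j] + 1,                # deletion
                           cur[j - 1] + 1,             # insertion
                           prev[j - 1] + (ca != cb)))  # substitution (0 if equal)
        prev = cur
    return prev[-1]


def norm_ed(preds, gts):
    """Average of 1 - ED / max(len) over all test cases (higher is better)."""
    total = 0.0
    for pred, gt in zip(preds, gts):
        longest = max(len(pred), len(gt))
        if longest == 0:
            total += 1.0  # assumption: two empty strings count as a perfect match
        else:
            total += 1 - edit_distance(pred, gt) / longest
    return total / len(gts)  # divide by the number of test cases


print(edit_distance('kitten', 'sitting'))       # 3
print(norm_ed(['abd', 'xyz'], ['abc', 'xyz']))  # about 0.833
```

Without the final division, the sum grows with the size of the test set, which is why the raw sum cannot be compared across datasets.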

Hi ku21fan, I'm using an intermediate (Latin) representation of Arabic characters, e.g. "Miim_B" for "م". I've prepared the dataset and modified the character set in train.py as you recommended. When I ran the training, I realized that each character had been split into a set of sub-characters: Miim_B -> 'm', 'i', 'i', 'm', '_', 'b'. I've tried to use .split(" ") in utils.py/AttnLabelConverter/encode and I got this output:

['miim_b', 'saad_m', 'raa_e', '']

Traceback (most recent call last):

File "train.py", line 317, in

Please Help!

@ooza Hello,

In my opinion, there are two easy ways:

1) Just add 'م' to the character set and use it instead of 'miim_b'.

Or, if you can't do this for some reason,

2) work around it with a substitute character for each token. For example, add ☆★○ to the character set and treat ☆ as 'miim_b', ★ as 'saad_m', and ○ as 'raa_e'.

Hope it helps.

Best
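The substitute-character workaround can be applied to the labels before running create_lmdb_dataset.py, and reversed on the model's predictions. Here is a minimal sketch. TOKEN_TO_CHAR and the helper names are hypothetical, and the labels are assumed to be space-separated tokens. Using str.split() with no argument also avoids the trailing empty string '' that split(" ") produced above.

```python
# Hypothetical mapping from multi-letter transliteration tokens to
# single placeholder characters that are added to opt.character.
TOKEN_TO_CHAR = {'miim_b': '☆', 'saad_m': '★', 'raa_e': '○'}
CHAR_TO_TOKEN = {v: k for k, v in TOKEN_TO_CHAR.items()}


def encode_label(tokens_str):
    """'miim_b saad_m raa_e' -> '☆★○' (raises KeyError on an unmapped token)."""
    return ''.join(TOKEN_TO_CHAR[tok] for tok in tokens_str.split())


def decode_prediction(pred):
    """'☆★○' -> 'miim_b saad_m raa_e', applied to the model's output string."""
    return ' '.join(CHAR_TO_TOKEN[ch] for ch in pred)


extra_characters = ''.join(TOKEN_TO_CHAR.values())  # append these to opt.character

print(encode_label('miim_b saad_m raa_e'))  # ☆★○
print(decode_prediction('☆★○'))             # miim_b saad_m raa_e
```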

@ku21fan Hello, my test set is made up of variable-length Chinese text. To build a more effective training set, I decided to generate some data using the ImageDraw module in Python. Which one should I choose: a variable-length generated dataset or a fixed-length generated dataset (e.g., 32*100)?


I have run into this problem too. How do I generate a training set?

I had a problem with my alphabet: the model predicted only digits. The reason was that the characters were in uppercase.

@ooza @ku21fan Could you please help me solve the problem of combining two Arabic characters into a new character, like شك = ش + ك? Here is part of my character text file: ء ۽ م ݥ ࢧ ݦ ه ھ ة ۀ ۂ ݳ ݴ إ ٳ ل ڶ ڷ ئ ٸ ێ ݵ ݶ ي ٕ ٖ ٜ ٠ ١ ٢ ٣ ٤ ۴ ۵ ٥ ٦ ۶ ٧ ٨ ٩ ؍ ؛ . ، ؟ ٪ ؉ ؊ ؆ ؇ ٭ ٬ ؞ « » ‹ › ( ) ؏ ۞ ۩ ۔ ـ ؎

@rm2886 I don't have enough time to test my solution, but I want to help you. I would try changing the --character argument from a string to a list. For example, if you have the alphabet "abc", you should use ["a", "b", "c"]. This allows adding combinations of symbols to the alphabet. You should probably also change the data format from {imagepath}\t{label}\n to {imagepath}\t{l a be l} or something like that, to get a pair (imagepath - ["l", "a", "be", "l"]). When the data are prepared, labels are processed by iterating over a string. You should iterate over a list instead, because you want the model to treat a group of symbols as one symbol.
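The list-based idea can be sketched as follows. The helper names are hypothetical, and the gt line format {imagepath}\t{l a be l} follows the suggestion above. The offset of 2 assumes an attention converter that reserves ids 0 and 1 for [GO] and [s]. One consequence of space-separated tokens is that a literal space can no longer be a token in this format.

```python
def parse_gt_line(line):
    """'{imagepath}\\t{l a be l}' -> ('imagepath', ['l', 'a', 'be', 'l'])."""
    path, label = line.rstrip('\n').split('\t', 1)
    return path, label.split()  # assumed: tokens separated by spaces


def build_vocab(token_lists):
    """Unique tokens in first-seen order; a multi-letter token stays one entry."""
    return list(dict.fromkeys(tok for tokens in token_lists for tok in tokens))


def encode_tokens(tokens, vocab, offset=2):
    """Map tokens to class ids, reserving ids 0 and 1 for [GO] and [s] (assumption)."""
    index = {tok: i + offset for i, tok in enumerate(vocab)}
    return [index[tok] for tok in tokens]


path, tokens = parse_gt_line('img/word_1.png\tl a be l\n')
vocab = build_vocab([tokens])
print(tokens)                        # ['l', 'a', 'be', 'l']
print(encode_tokens(tokens, vocab))  # [2, 3, 4, 2]
```

With Arabic, a combined form such as شك is then a single vocabulary entry, e.g. parse_gt_line('a.png\tشك ش\n') yields the tokens ['شك', 'ش'].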

Something similar to what @2113vm described happened to me. My dataset contained only digits and uppercase letters, so, as @ku21fan suggested, I skipped these lines:

and set --character to "0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZ". However, if you don't use the --data_filtering_off option and your dataset has uppercase labels, you also have to change

if re.search(out_of_char, label.lower()):

to

if re.search(out_of_char, label):

Otherwise, every sample that contains a letter will be filtered out. This happened to me because I didn't use --data_filtering_off. In my case it might have been easier to use it and forget about the filtering part, because I had already filtered my dataset beforehand, but I didn't notice. Anyway, I have found @ku21fan's training code very comfortable to use. The way it prints and logs the loss, accuracy, and ground truth vs. predictions during training is really useful and makes the process much easier. Thank you!

Hope it helps someone!
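To see why an uppercase-only character set drops every labelled sample, here is an approximation of the default character check. passes_char_filter is a hypothetical helper, not the code in dataset.py. The re.escape call is my addition: it keeps characters such as '-' or ']' in the character set from being read as regex syntax inside [...].

```python
import re


def passes_char_filter(label, character, lowercase=True):
    """Approximation of the default out-of-character check.

    With lowercase=True the label is lowered first (like label.lower() in the
    original check), so uppercase letters never match an uppercase-only set.
    """
    out_of_char = f'[^{re.escape(character)}]'
    checked = label.lower() if lowercase else label
    return re.search(out_of_char, checked) is None


charset = '0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZ'
print(passes_char_filter('AB12', charset))                   # False: 'ab12' contains 'a' and 'b'
print(passes_char_filter('AB12', charset, lowercase=False))  # True
```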

@ku21fan Hi ku21fan, I'm using this model to train on Chinese datasets with 6,000 characters in the character set. If I generate a million image-label pairs to train it, do you think the model can converge?

Hi

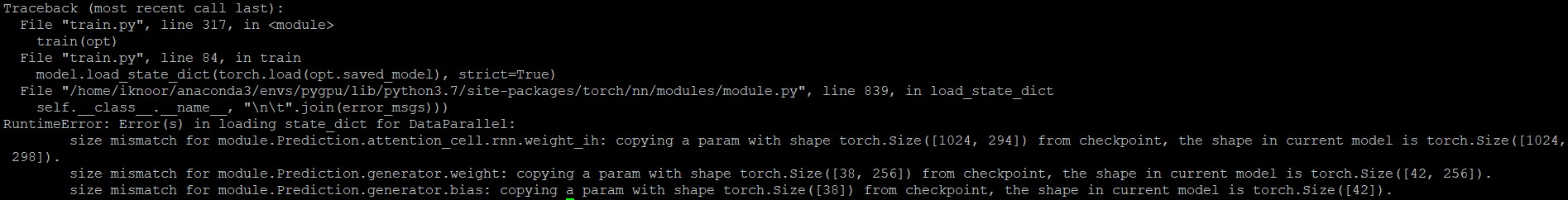

I am trying to fine-tune the normal case insensitive model (TPS-ResNet-BiLSTM-Attn) by running the following command. I have also added 4 additional characters to opt.character

CUDA_VISIBLE_DEVICES=0 python3 train.py --train_data result/train --valid_data result/valid --select_data / --batch_ratio 1 --Transformation TPS --FeatureExtraction ResNet --SequenceModeling BiLSTM --Prediction Attn --FT --saved_model TPS-ResNet-BiLSTM-Attn_15000.pth

It's still showing the following error. Am I missing something?

Thanks for help.

Iknoor


The reason is that your config is not the same as the one used for TPS-ResNet-BiLSTM-Attn_15000.pth. You should not change the alphabet (opt.character).

I see that your dataset code is already inconsistent with TRBA. If you change it to non-Arabic characters, is it then possible to make the changes without data filtering or using [UNK] tokens?

Hello, everyone,

I have been trying for a long time to solve the error 'AssertionError: datasets should not be an empty iterable'. Here is my solution:

After creating the dataset with the "create_lmdb_dataset.py" file, two files (data.mdb and lock.mdb) will be created in the "result" folder.

I then created two new folders: the first called 'train', the second 'validation'.

Into each of these two folders, I copied the "result" folder produced by create_lmdb_dataset.py.

In the train script, modify --select_data by setting it to "train" instead of "/" as recommended here: https://github.com/clovaai/deep-text-recognition-benchmark/issues/85 (but keep batch_ratio=1).

Now use the command: 'py train.py --train_data train/result --valid_data validation/result --Transformation None --FeatureExtraction VGG --SequenceModeling BiLSTM --Prediction CTC --data_filtering_off --workers 0'.

This worked for me. As a rule of thumb, I would recommend trying different ways of pointing to the folder where your dataset was created. If the "empty iterable" error turns into something related to pickle, and the error details show your number of samples, you are on the right track.

Best
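Several "datasets should not be an empty iterable" reports come down to how --train_data and --select_data interact. Here is an approximation of the folder scan. select_lmdb_dirs is a hypothetical name, and the rule is my reading of dataset.py, not a copy of it: every leaf folder under the root is used when its path contains one of the --select_data entries (which train.py splits on '-').

```python
import os


def select_lmdb_dirs(root, select_data):
    """Approximate which folders under `root` would be opened as LMDB datasets."""
    selected = []
    for dirpath, dirnames, _filenames in os.walk(root + '/'):
        if dirnames:  # only leaf folders are expected to hold data.mdb / lock.mdb
            continue
        if any(sel in dirpath for sel in select_data):
            selected.append(dirpath)
    return selected


# e.g. select_lmdb_dirs('train', ['/'])        -> ['train/result']
#      select_lmdb_dirs('train', ['MJ', 'ST'])  -> [] unless a path contains 'MJ' or 'ST'
```

With the default select_data ['MJ', 'ST'], a path like result/train matches neither entry, so nothing is selected. This matches logs that print opt.select_data: ['MJ', 'ST'] and then fail with the AssertionError. '/' matches every leaf folder, and 'train' works only when it appears in the folder path.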

Unfortunately, I have tried this, but I got another error in the data loader. It reads the data with the LmdbDataset class, but the data loader finds the data empty. I followed the steps that @Bagginses mentioned,

using this command:

!CUDA_VISIBLE_DEVICES=0 python3 train.py --train_data train/results --valid_data val/results --select_data / --batch_ratio 1 --Transformation None --FeatureExtraction None --SequenceModeling None --Prediction None --Transformer --TransformerModel=vitstr_tiny_patch16_224 --imgH 224 --imgW 224 --sensitive

or this:

!CUDA_VISIBLE_DEVICES=0 python3 train.py --train_data train/results --valid_data val/results --select_data train --batch_ratio 1 --Transformation None --FeatureExtraction None --SequenceModeling None --Prediction None --Transformer --TransformerModel=vitstr_tiny_patch16_224 --imgH 224 --imgW 224 --sensitive

I got this error:

Filtering the images containing characters which are not in opt.character

Filtering the images whose label is longer than opt.batch_max_length

--------------------------------------------------------------------------------

dataset_root: train/results

opt.select_data: ['/']

opt.batch_ratio: ['1']

--------------------------------------------------------------------------------

dataset_root: train/results dataset: /

sub-directory: /. num samples: 0

dataset list: [<dataset.LmdbDataset object at 0x7f51fd150ca0>]

num total samples of /: 0 x 1.0 (total_data_usage_ratio) = 0

num samples of / per batch: 192 x 1.0 (batch_ratio) = 192

Traceback (most recent call last):

File "train.py", line 310, in <module>

train(opt)

File "train.py", line 39, in train

train_dataset = Batch_Balanced_Dataset(opt)

File "/content/deep-text-recognition-benchmark/dataset.py", line 75, in __init__

_data_loader = torch.utils.data.DataLoader(

File "/usr/local/lib/python3.8/dist-packages/torch/utils/data/dataloader.py", line 344, in __init__

sampler = RandomSampler(dataset, generator=generator) # type: ignore[arg-type]

File "/usr/local/lib/python3.8/dist-packages/torch/utils/data/sampler.py", line 107, in __init__

raise ValueError("num_samples should be a positive integer "

ValueError: num_samples should be a positive integer value, but got num_samples=0

Has anyone faced this issue?

Solved by adding --data_filtering_off to the command!


I tried all the steps to resolve this error while training on the IAM dataset, but I still get the same error again and again: AssertionError: datasets should not be an empty iterable. I am stuck on my project, so kindly reply as soon as possible.

@pchalotra Getting the same error. Did you solve this?

Any idea?

Traceback (most recent call last):

File "/mnt/c/Users/jjovan/Desktop/cnn/deep-text-recognition-benchmark/train.py", line 316, in

I had the num_samples = 0 error and found an easier solution; I hope it helps someone else as well. There are two main checks when samples are filtered:

1. if len(label) > self.opt.batch_max_length:

2. out_of_char = f'[^{self.opt.character}]'

   if re.search(out_of_char, label.lower()):

By default, batch_max_length is 25, so any label longer than 25 characters is skipped. In my case, all the labels were longer than 25 characters, so I got 0 samples. However, there is a --batch_max_length option that you can set to whatever value you need; in my case, --batch_max_length 60 was enough.

The second check fails if a label contains any character that is not in opt.character. After debugging for an hour or two, I realized that my labels contained spaces (' '). Since a space was not in opt.character, this check was skipping all the samples :P. Adding a space ' ' to the opt.character option solved the issue.
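Before training, it can help to count how many labels each of the two checks would drop. diagnose_labels is a hypothetical helper that mirrors the checks quoted above using set membership, without importing the repository code. The lowercase flag is an assumption matching the default (non-sensitive) behaviour.

```python
from collections import Counter


def diagnose_labels(labels, character, batch_max_length=25, lowercase=True):
    """Count how many labels each default check would drop (hypothetical helper)."""
    allowed = set(character)
    report = {'total': 0, 'too_long': 0, 'bad_chars': 0, 'kept': 0, 'longest_label': 0}
    missing = Counter()
    for label in labels:
        report['total'] += 1
        report['longest_label'] = max(report['longest_label'], len(label))
        checked = label.lower() if lowercase else label
        unknown = [ch for ch in checked if ch not in allowed]
        missing.update(unknown)
        # Same order as the two checks above: length first, then characters.
        if len(label) > batch_max_length:
            report['too_long'] += 1
        elif unknown:
            report['bad_chars'] += 1
        else:
            report['kept'] += 1
    report['missing_chars'] = missing
    return report


# Labels could come from the gt file given to create_lmdb_dataset.py, e.g.:
# labels = [line.rstrip('\n').split('\t', 1)[1] for line in open('gt.txt', encoding='utf-8')]
labels = ['hello world', 'abc', 'x' * 30]
print(diagnose_labels(labels, 'abcdefghijklmnopqrstuvwxyz'))
```

If kept is 0, either raise --batch_max_length above longest_label or add the characters in missing_chars (a space, in the case above) to opt.character. Alternatively, use --data_filtering_off.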

RuntimeError: Error(s) in loading state_dict for DataParallel: size mismatch for module.Prediction.attention_cell.rnn.weight_ih: copying a param with shape torch.Size([1024, 294]) from checkpoint, the shape in current model is torch.Size([1024, 302]). size mismatch for module.Prediction.generator.weight: copying a param with shape torch.Size([38, 256]) from checkpoint, the shape in current model is torch.Size([46, 256]). size mismatch for module.Prediction.generator.bias: copying a param with shape torch.Size([38]) from checkpoint, the shape in current model is torch.Size([46]).

''' is not recognized as an internal or external command, operable program or batch file.

Why is this error occurring? My character list is [0123456789abcdefghijklmnopqrstuvwxyz./-$#₹:&]
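The numbers in this size-mismatch error follow from the size of the character set. Here is an arithmetic sketch under stated assumptions:

- the attention decoder adds 2 tokens ([GO] and [s]) to opt.character;
- hidden_size is 256;
- the LSTM cell's input is a 256-dim feature concatenated with a one-hot of the previous character.

attn_head_shapes is a hypothetical helper; the parameter names are copied from the error message.

```python
def attn_head_shapes(character, hidden_size=256, input_size=256):
    """Expected shapes of the character-dependent Attn parameters (assumed layout)."""
    num_class = len(character) + 2  # + [GO] and [s]
    return {
        'attention_cell.rnn.weight_ih': (4 * hidden_size, input_size + num_class),
        'generator.weight': (num_class, hidden_size),
        'generator.bias': (num_class,),
    }


pretrained = attn_head_shapes('0123456789abcdefghijklmnopqrstuvwxyz')
custom = attn_head_shapes('0123456789abcdefghijklmnopqrstuvwxyz./-$#₹:&')
print(pretrained['generator.weight'], custom['generator.weight'])  # (38, 256) (46, 256)
print(pretrained['attention_cell.rnn.weight_ih'], custom['attention_cell.rnn.weight_ih'])  # (1024, 294) (1024, 302)
```

The 44-character list gives 46 classes. The checkpoint's 38 classes are consistent with the 36-character set 0-9a-z. So these layers cannot be loaded from the checkpoint as-is once the alphabet changes.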

Hi. I created an LMDB dataset from my own data by running create_lmdb_dataset.py. Then I ran the train command on it and got the following output:

CUDA_VISIBLE_DEVICES=0 python3 train.py --train_data result/train --valid_data result/test --Transformation TPS --FeatureExtraction ResNet --SequenceModeling BiLSTM --Prediction Attn

dataset_root: result/train

opt.select_data: ['MJ', 'ST']

opt.batch_ratio: ['0.5', '0.5']

dataset_root: result/train dataset: MJ

Traceback (most recent call last):

File "train.py", line 283, in

train(opt)

File "train.py", line 26, in train

train_dataset = Batch_Balanced_Dataset(opt)

File "/home/mor-ai/Work/deep-text-recognition-benchmark/dataset.py", line 37, in __init__

_dataset = hierarchical_dataset(root=opt.train_data, opt=opt, select_data=[selected_d])

File "/home/mor-ai/Work/deep-text-recognition-benchmark/dataset.py", line 106, in hierarchical_dataset

concatenated_dataset = ConcatDataset(dataset_list)

File "/home/mor-ai/.local/lib/python3.6/site-packages/torch/utils/data/dataset.py", line 187, in __init__

assert len(datasets) > 0, 'datasets should not be an empty iterable'

AssertionError: datasets should not be an empty iterable

Can you help me resolve this?