Background: now that large-scale Google Surveys sampling has ended, we have to choose carefully which counties to sample with Google Surveys, and we need a principled way to select them.

The high-level idea is that because we can choose where to sample, we are able to "see" where other surveys cannot, or reduce uncertainty in regions where we already have data from other surveys.

Some possible paths:

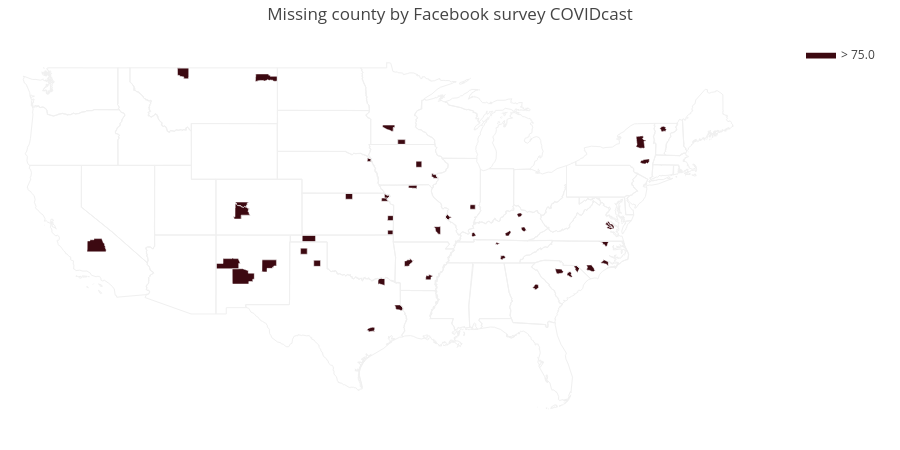

1) Reduce forecasting uncertainty: work with our forecasting team to look for places where both uncertainty and "risk" (e.g., expected COVID-19 incidence) are high in the coming weeks.
2) Reduce survey uncertainty: look at our other surveys (e.g., Facebook community question) to see where both uncertainty and "risk" are high. The problem with this approach is that uncertainty is highest where we have no samples at all, and we do not have an a priori way of prioritizing counties with no samples using survey data alone.
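To make the "high uncertainty and high risk" idea concrete, here is a minimal sketch. Everything in it is an assumption for illustration: the `County` record, the `rank_counties` helper, and the choice of score (expected incidence times standard error) are hypothetical, not something we have agreed on. It also shows the problem noted above: counties with no samples have no uncertainty estimate, so they fall out of the ranking and have to be handled separately.

```python
from typing import List, NamedTuple, Optional, Tuple


class County(NamedTuple):
    # Hypothetical record; field names are assumptions for this sketch.
    county_id: str
    expected_incidence: float      # stand-in for "risk"
    std_error: Optional[float]     # None when we have no survey samples


def rank_counties(
    counties: List[County], budget: int
) -> Tuple[List[County], List[County]]:
    """Return (top `budget` counties by risk * uncertainty, unsampled counties)."""
    scored = [c for c in counties if c.std_error is not None]
    unsampled = [c for c in counties if c.std_error is None]
    # Assumed score: product of risk and uncertainty, largest first.
    scored.sort(key=lambda c: c.expected_incidence * c.std_error, reverse=True)
    return scored[:budget], unsampled


# Made-up values, only to show the call shape.
example = [
    County("A", 0.02, 0.01),
    County("B", 0.05, 0.02),
    County("C", 0.03, None),   # no samples, so it cannot be scored
]
top, unsampled = rank_counties(example, budget=1)
```

A product is only one possible way to combine the two quantities; a weighted sum or a threshold on each would fit the same interface.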

Some ways of prioritizing regions where we have absolutely no survey data:

1) Use our Google Health Trends (GHT) sensor, which we believe measures latent COVID-19 activity. We could train a model on historical GHT to predict historical Google Surveys, and use future spikes in GHT to determine where to target Google Surveys samples.
2) Allow public health agencies to "nominate" themselves using an online form.
3) Allow the public (?) to "vote" on where we should sample; we can stratify the votes based on maximum population size to ensure that we have proper coverage of low-population counties. The idea is that the "crowd" would tell us where there may be high COVID-19 incidence based on word of mouth, news reports, etc.
4) If we are choosing counties "by hand" week-to-week, we could even build a Twitter "sensor" which sifts through headlines and finds regions reporting high COVID-19 incidence. We would then look through the "top locations" every week and decide ourselves where to sample.
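As a rough illustration of option 1, the sketch below fits a single pooled least-squares line from historical GHT values to historical Google Surveys estimates, then ranks counties by the survey level predicted from their latest GHT value. All names (`fit_line`, `target_counties`, the dictionary layout) are hypothetical, and a pooled one-variable linear fit is an assumed stand-in for whatever model we would actually train. Ranking by predicted level is also a simplification of "spikes"; a real version would probably compare against each county's own baseline.

```python
from statistics import mean
from typing import Dict, List, Tuple


def fit_line(x: List[float], y: List[float]) -> Tuple[float, float]:
    """Ordinary least squares for y = slope * x + intercept."""
    mx, my = mean(x), mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, my - slope * mx


def target_counties(
    ght_history: Dict[str, List[float]],
    survey_history: Dict[str, List[float]],
    ght_latest: Dict[str, float],
    budget: int,
) -> List[str]:
    """Rank counties by survey level predicted from their latest GHT value."""
    xs: List[float] = []
    ys: List[float] = []
    # Train only on counties with both signals; series are assumed aligned in time.
    for county, ght in ght_history.items():
        if county in survey_history:
            for g, s in zip(ght, survey_history[county]):
                xs.append(g)
                ys.append(s)
    slope, intercept = fit_line(xs, ys)
    # Predict for every county with a current GHT value, including unsampled ones.
    predicted = {c: slope * g + intercept for c, g in ght_latest.items()}
    return sorted(predicted, key=predicted.get, reverse=True)[:budget]


# Made-up numbers, only to show the call shape.
ght_hist = {"A": [1.0, 2.0, 3.0], "B": [2.0, 4.0, 6.0]}
survey_hist = {"A": [0.1, 0.2, 0.3], "B": [0.2, 0.4, 0.6]}
latest = {"A": 1.0, "B": 2.0, "C": 5.0}   # "C" has no survey history
picks = target_counties(ght_hist, survey_hist, latest, budget=1)
```

The useful property here is that county "C" can be ranked even though it has no survey data, which is exactly the gap the survey-only approach leaves open.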

We're not going to be running the Google Surveys daily past Friday, May 15. But we can still run the surveys on a small scale (i.e., with a smaller budget) if we want; we have complete control over how the survey is geographically targeted, so we can pick a strategy.

We could use them to augment the Facebook surveys, but the signals do not seem comparable; see issue #2. Is there another, better use for these surveys that would improve forecasting or nowcasting?