How did you obtain the robot poses? Do you have an accurate calibration between the robot and the camera? Are the camera and robot poses synchronized, or could there be some time offset?

How did you measure the accuracy of the robot poses? Did you look at the trajectory or did you obtain 3D scene geometry from them?
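One way to check the trajectory itself, as a minimal sketch: since the `mapper` result is only defined up to scale, the two sets of camera centers can be aligned with a least-squares similarity transform (Umeyama alignment) before comparing them. The function names and the `(N, 3)` array layout below are my own assumptions for illustration, not part of COLMAP.

```python
import numpy as np

def umeyama_alignment(src, dst):
    """Least-squares similarity transform (s, R, t) with dst ~ s * R @ src + t.

    src, dst: (N, 3) arrays of corresponding camera centers.
    """
    mu_src, mu_dst = src.mean(axis=0), dst.mean(axis=0)
    src_c, dst_c = src - mu_src, dst - mu_dst
    # Cross-covariance between the centered point sets.
    cov = dst_c.T @ src_c / len(src)
    U, D, Vt = np.linalg.svd(cov)
    # Guard against a reflection in the estimated rotation.
    S = np.eye(3)
    if np.linalg.det(U) * np.linalg.det(Vt) < 0:
        S[2, 2] = -1.0
    R = U @ S @ Vt
    var_src = (src_c ** 2).sum() / len(src)
    s = np.trace(np.diag(D) @ S) / var_src
    t = mu_dst - s * R @ mu_src
    return s, R, t

def trajectory_rmse(src, dst):
    """RMSE between camera centers after similarity alignment of src onto dst."""
    s, R, t = umeyama_alignment(src, dst)
    aligned = s * (R @ src.T).T + t
    return float(np.sqrt(((aligned - dst) ** 2).sum(axis=1).mean()))
```

Calling `trajectory_rmse(sfm_centers, robot_centers)` gives a single number in the units of the robot poses. Plotting the per-image residuals over time would additionally show whether the error looks like drift, a constant offset, or a time shift.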

I have run into situations where camera trajectories estimated by visual-inertial odometry yield less accurate dense reconstructions than structure-from-motion poses, because the former oversmooths the trajectory, which leads to less accurate triangulations.

A dataset of 200 images was collected with a calibrated camera, along with the corresponding camera poses obtained from a robot.

First, features were detected and matched exhaustively to create the database for the model.

Steps taken:

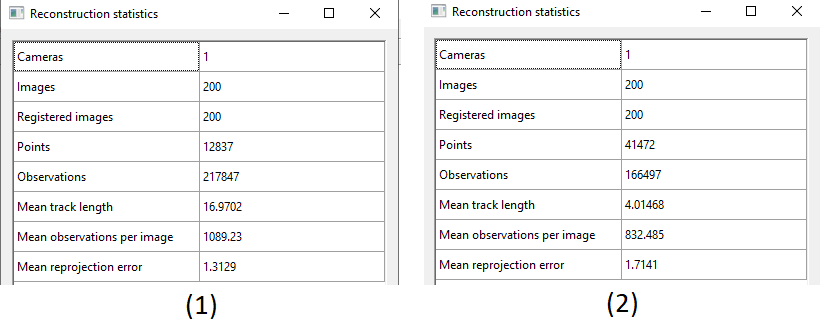

1. `mapper` for reconstruction of scene up to scale.
2. `point_triangulator` followed by `bundle_adjuster` for global bundle adjustment.

The sparse reconstruction from step 2 is significantly noisier compared to step 1, see fig. for statistics.

I can't quite figure out what is wrong here, since additional information about the scene should ideally improve the reconstruction, not worsen it.
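One thing worth ruling out is the pose convention: COLMAP's `images.txt` stores each pose as a world-to-camera transform, with the quaternion in `QW QX QY QZ` order followed by `TX TY TZ`. Below is a minimal sketch of the conversion, assuming the robot poses are camera-to-world (rotation `R_wc`, camera center `t_wc`) with any hand-eye calibration already applied. The helper names are hypothetical, and that assumption may not match the actual setup.

```python
import numpy as np

def rotmat_to_quat_wxyz(R):
    """Convert a 3x3 rotation matrix to a unit quaternion (w, x, y, z)."""
    tr = np.trace(R)
    q = [0.0, 0.0, 0.0, 0.0]
    if tr > 0:
        s = 2.0 * np.sqrt(tr + 1.0)  # s = 4 * w
        q[0] = 0.25 * s
        q[1] = (R[2, 1] - R[1, 2]) / s
        q[2] = (R[0, 2] - R[2, 0]) / s
        q[3] = (R[1, 0] - R[0, 1]) / s
    else:
        # Pivot on the largest diagonal element for numerical stability.
        i = int(np.argmax(np.diag(R)))
        j, k = (i + 1) % 3, (i + 2) % 3
        s = 2.0 * np.sqrt(1.0 + R[i, i] - R[j, j] - R[k, k])
        q[0] = (R[k, j] - R[j, k]) / s
        q[1 + i] = 0.25 * s
        q[1 + j] = (R[j, i] + R[i, j]) / s
        q[1 + k] = (R[k, i] + R[i, k]) / s
    q = np.array(q)
    return q if q[0] >= 0 else -q

def cam_to_world_to_colmap(R_wc, t_wc):
    """Invert a camera-to-world pose (assumed input) to world-to-camera."""
    R_cw = R_wc.T
    t_cw = -R_cw @ t_wc
    return rotmat_to_quat_wxyz(R_cw), t_cw

def images_txt_entry(image_id, R_wc, t_wc, camera_id, name):
    """Format one images.txt entry; the 2D-points line is left empty."""
    q, t = cam_to_world_to_colmap(R_wc, t_wc)
    vals = " ".join(f"{v:.9f}" for v in (*q, *t))
    return f"{image_id} {vals} {camera_id} {name}\n\n"
```

If the poses were written without this inversion, or with the quaternion in `x y z w` order, the triangulation in step 2 would start from inconsistent geometry, which could produce exactly this kind of noisy result.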

Checks completed:

- `point_triangulator`, returning the correct reconstruction. Therefore, the reconstruction converges for the trivial solution.
- `incremental_mapper`. This did not improve the results.

Expected behavior: The reconstruction from step 2 should ideally be better or at least not worse than step 1 with more unknowns.

Desktop:

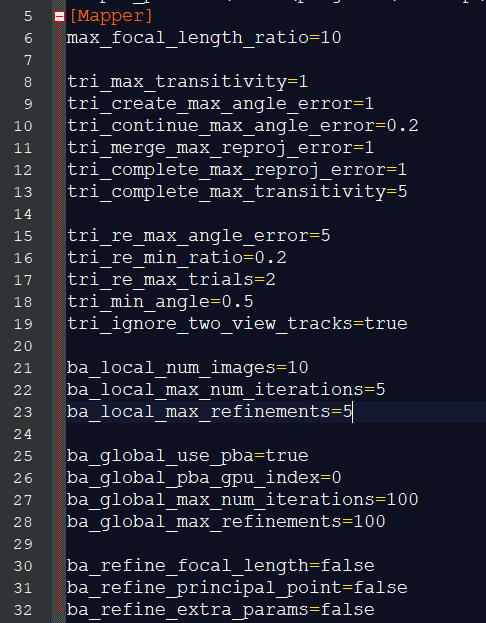

Settings: