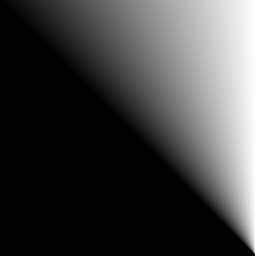

Continuing the research into how operators differ between backends, here are the color_burn variants used by Cairo and CoreGraphics:

    // Cairo:
    auto f = [](float a, float b){
        if( 1.f - b <= numeric_limits<float>::min() )
            return 1.f;
        return a > numeric_limits<float>::min() ? 1.f - min(1.f, (1.f - b) / a) : 0.f;
    };

    // CoreGraphics:
    auto f = [](float a, float b){
        if( 1.f - b <= numeric_limits<float>::min() )
            return 1.f;
        return a > numeric_limits<float>::min() ? 1.f - (1.f - b) / a : numeric_limits<float>::lowest();
    };
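To make the difference concrete, here is a minimal sketch that restates the two lambdas above as free functions so they can be compared side by side. The names `color_burn_cairo` and `color_burn_coregraphics` are hypothetical (both originals are simply called `f`), and the parameter names `a` and `b` are kept as-is. The sample inputs are chosen so that every intermediate value is exactly representable in `float`.

```cpp
#include <algorithm>
#include <limits>

// Hypothetical name: restates the "Cairo" lambda above.
constexpr float color_burn_cairo(float a, float b) {
    constexpr float eps = std::numeric_limits<float>::min();
    if (1.f - b <= eps)
        return 1.f;
    // The ratio is clamped to 1, so the result never drops below 0.
    return a > eps ? 1.f - std::min(1.f, (1.f - b) / a) : 0.f;
}

// Hypothetical name: restates the "CoreGraphics" lambda above.
constexpr float color_burn_coregraphics(float a, float b) {
    constexpr float eps = std::numeric_limits<float>::min();
    if (1.f - b <= eps)
        return 1.f;
    // No clamp: the result goes negative once (1 - b) / a exceeds 1.
    return a > eps ? 1.f - (1.f - b) / a : std::numeric_limits<float>::lowest();
}

// Both variants agree while (1 - b) / a <= 1 ...
static_assert(color_burn_cairo(0.5f, 0.75f) == 0.5f, "cairo, in range");
static_assert(color_burn_coregraphics(0.5f, 0.75f) == 0.5f, "cg, in range");

// ... and diverge once the ratio exceeds 1 or a is (near) zero.
static_assert(color_burn_cairo(0.25f, 0.5f) == 0.f, "cairo clamps to 0");
static_assert(color_burn_coregraphics(0.25f, 0.5f) == -1.f, "cg goes negative");
static_assert(color_burn_cairo(0.f, 0.5f) == 0.f, "cairo, a == 0");
static_assert(color_burn_coregraphics(0.f, 0.5f) == std::numeric_limits<float>::lowest(), "cg, a == 0");
```

So the two variants return the same value only while `(1 - b) / a <= 1`. Outside that range the Cairo form stays at 0, while the CoreGraphics form produces a negative value; whether that value is clamped later in the pipeline is not something this sketch covers.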

Continue discussion of ISOCPP-2D/io2dts#4 here.