An interesting finding! I am not aware of it.

Is it happening in most of images? And if you crop out the foreground and run the model, does axial model classify it incorrectly?

Open DesertsP opened 3 years ago

An interesting finding! I am not aware of it.

Is it happening in most of images? And if you crop out the foreground and run the model, does axial model classify it incorrectly?

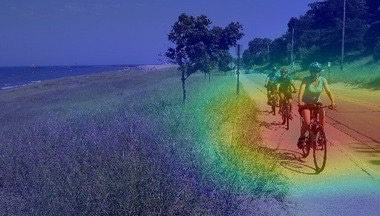

Another sample of mountain bike.

Axial26s:

ResNet18

ResNet18

I visualized some samples, and most of them have same problem. The classification network trained by AxialAttention tends to have larger and inaccurate activation regions. Although this has no effect on the classification task, I think this feature map is not better than CNN. I guess that other non-local networks for classification have same problem.

Thanks for sharing another example. It looks reasonable that attention models have larger activation regions, because of their long range modeling ability.

In order to evaluate the feature quality, one could evaluate on detection if you care about the localization ability of the feature, e.g. with fixed feature representation as done in unsupervised learning. This might be a more direct way of evaluating if a feature is better for downstream tasks. If we find out that attention model does transfer to detection without training, then probably we need a method other than CAM to explain and visualize attention models. (I'm not convinced that local activations in CAM indicate better features.)

I tried to use CAM to observe the feature map of Axial-Attention, but found that the network did not learn a good feature representation.

I use script from Bolei Zhou to generate CAM: https://github.com/zhoubolei/CAM/blob/master/pytorch_CAM.py

Pretrained AxialAttentionNet axial26s from this repo:

ResNet-18