Estimating an Optimal Learning Rate For a Deep Neural Network

Pavel Surmenok

How does learning rate impact training?

Deep learning models are typically trained by a stochastic gradient descent optimizer. There are many variations of stochastic gradient descent: Adam, RMSProp, Adagrad, etc. All of them let you set the learning rate. This parameter tells the optimizer how far to move the weights in the direction opposite to the gradient for a mini-batch.

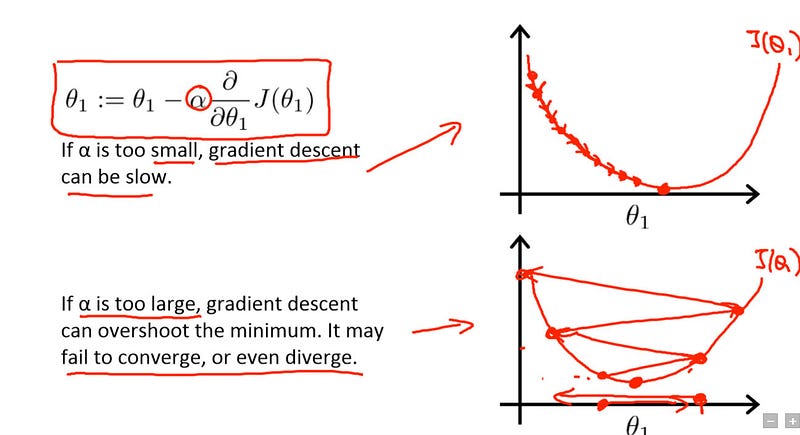
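To make the role of the learning rate concrete, here is a minimal sketch of a single plain SGD update. The function name `sgd_step` and the numbers are illustrative assumptions, not taken from any library. Optimizers such as Adam rescale the step, but they still multiply it by the learning rate.

```python
def sgd_step(weights, grads, lr):
    """Move each weight against its gradient, scaled by the learning rate."""
    return [w - lr * g for w, g in zip(weights, grads)]


weights = [0.5, -1.0]
grads = [0.2, -0.4]  # made-up gradient of the loss for one mini-batch

small = sgd_step(weights, grads, lr=0.01)  # tiny move
large = sgd_step(weights, grads, lr=1.0)   # move 100 times larger
print(small, large)
```

With the same gradient, the only thing that changes between the two calls is how far the weights travel.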

If the learning rate is low, then training is more reliable, but optimization will take a lot of time because steps towards the minimum of the loss function are tiny.

If the learning rate is high, then training may not converge or even diverge. Weight changes can be so big that the optimizer overshoots the minimum and makes the loss worse.

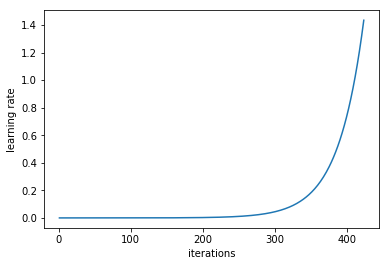
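Both failure modes are easy to reproduce on a toy problem. The sketch below runs plain gradient descent on f(w) = w², a stand-in chosen for illustration (not a neural network), with three assumed learning rates.

```python
def descend(lr, steps=20, w=1.0):
    """Run gradient descent on f(w) = w**2 and return the loss after each step."""
    losses = []
    for _ in range(steps):
        grad = 2.0 * w      # derivative of w**2
        w = w - lr * grad   # gradient descent update
        losses.append(w ** 2)
    return losses


slow = descend(lr=0.01)      # reliable, but far from the minimum after 20 steps
good = descend(lr=0.4)       # reaches the minimum quickly
diverging = descend(lr=1.1)  # every step overshoots and the loss grows

print(slow[-1], good[-1], diverging[-1])
```

On this function each update multiplies w by (1 - 2·lr), so any learning rate above 1.0 makes the magnitude of w grow on every step.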

The trick to finding a good learning rate is to train a network starting from a low learning rate and to increase the learning rate exponentially with every batch.

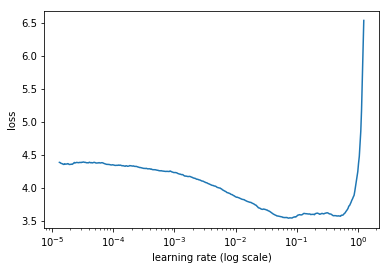

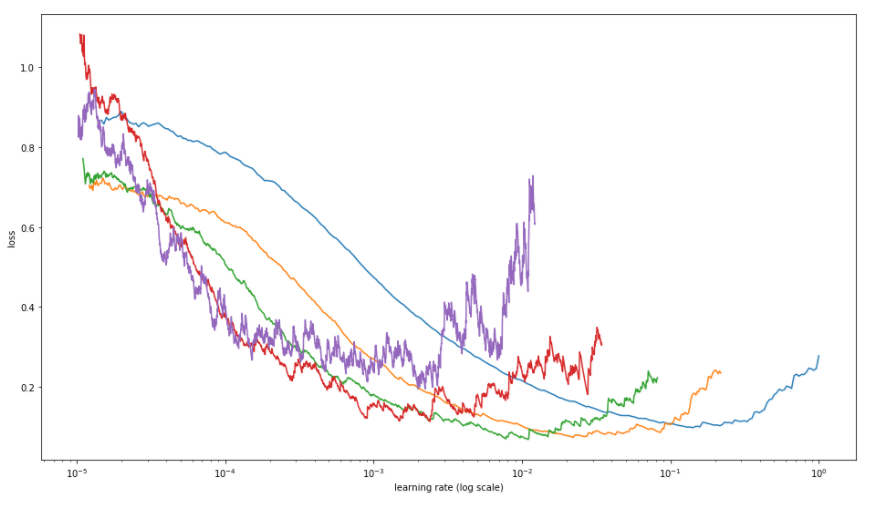
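An exponential increase means that the learning rate is multiplied by the same factor after every batch. Here is a minimal sketch of such a schedule; the function name, the start and end values, and the number of batches are assumptions chosen for illustration.

```python
def exponential_lrs(lr_min, lr_max, n_batches):
    """Learning rates that grow by a constant factor from lr_min to lr_max."""
    if n_batches < 2:
        return [lr_min]
    # Constant multiplier that reaches lr_max on the last batch.
    ratio = (lr_max / lr_min) ** (1.0 / (n_batches - 1))
    return [lr_min * ratio ** i for i in range(n_batches)]


lrs = exponential_lrs(1e-5, 10.0, 100)
print(lrs[0], lrs[50], lrs[-1])
```

Because the growth is multiplicative, the values are evenly spaced on a log-scale axis, which is why these plots are usually drawn with a logarithmic x-axis.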

Record the learning rate and training loss for every batch. Then, plot the loss against the learning rate. Typically, it looks like this:

First, with low learning rates, the loss improves slowly, then training accelerates until the learning rate becomes too large and loss goes up: the training process diverges. We need to select a point on the graph with the fastest decrease in the loss. In this example, the loss function decreases fast when the learning rate is between 0.001 and 0.01.

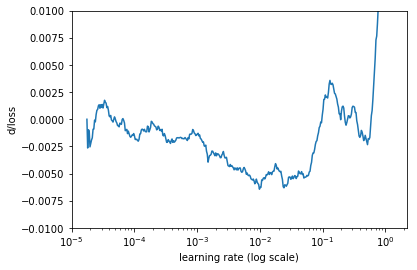
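The whole procedure fits in a few lines when the model is small. The sketch below is a toy version under stated assumptions: it fits a one-parameter linear regression (y = 3x plus noise) with plain SGD rather than a deep network, and it stops once the loss exceeds four times the best loss seen so far, which is an assumed heuristic for detecting divergence. All names and default values are hypothetical.

```python
import random


def lr_range_test(lr_min=1e-4, lr_max=10.0, n_batches=100, batch_size=64, seed=0):
    """Toy learning rate range test: record (lr, loss) for every mini-batch."""
    rng = random.Random(seed)
    ratio = (lr_max / lr_min) ** (1.0 / (n_batches - 1))
    w, lr = 0.0, lr_min
    lrs, losses = [], []
    best = float("inf")
    for _ in range(n_batches):
        # Draw a fresh mini-batch from y = 3x + noise.
        xs = [rng.uniform(-1.0, 1.0) for _ in range(batch_size)]
        ys = [3.0 * x + rng.gauss(0.0, 0.1) for x in xs]
        errors = [w * x - y for x, y in zip(xs, ys)]
        loss = sum(e * e for e in errors) / batch_size  # mean squared error
        lrs.append(lr)
        losses.append(loss)
        best = min(best, loss)
        if loss > 4.0 * best:  # assumed stop rule: training has diverged
            break
        grad = 2.0 * sum(e * x for e, x in zip(errors, xs)) / batch_size
        w -= lr * grad  # SGD step with the current learning rate
        lr *= ratio     # exponential increase for the next batch
    return lrs, losses


lrs, losses = lr_range_test()
print(len(lrs), min(losses), losses[0])
```

Plotting `losses` against `lrs` on a log-scale x-axis should give a curve with the same three phases: a flat start, a steep drop, and a blow-up at the end.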

Another way to look at these numbers is to calculate the rate of change of the loss (the derivative of the loss with respect to the iteration number), then plot that rate of change on the y-axis against the learning rate on the x-axis, using a simple moving average to smooth the curve and reduce noise.
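Without any plotting, the same idea can be sketched in plain Python. The helper names and the five data points below are made up for illustration. Dividing the loss difference over a window of `sma` batches by `sma` is the same as averaging the `sma` one-batch changes inside that window.

```python
def smoothed_loss_change(losses, sma=1):
    """Average change in loss per batch over a window of `sma` batches."""
    changes = [0.0] * sma  # padding keeps indexes aligned with the losses
    for i in range(sma, len(losses)):
        changes.append((losses[i] - losses[i - sma]) / sma)
    return changes


def steepest_lr(lrs, losses, sma=1):
    """Learning rate at which the smoothed loss falls fastest."""
    changes = smoothed_loss_change(losses, sma)
    best_index = min(range(len(changes)), key=changes.__getitem__)
    return lrs[best_index]


lrs = [1e-4, 1e-3, 1e-2, 1e-1, 1.0]
losses = [2.30, 2.25, 1.80, 1.60, 3.00]  # made-up values
print(steepest_lr(lrs, losses))
```

The most negative value of the change marks the fastest decrease in the loss, so its position on the x-axis is a candidate learning rate.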

The fastai library provides an implementation of the learning rate finder. You need just two lines of code to plot the loss over learning rates for your model:

    learn.lr_find()
    learn.sched.plot_lr()

The code to plot the rate of change of the loss function is as follows:

    import matplotlib.pyplot as plt

    def plot_loss_change(sched, sma=1, n_skip=20, y_lim=(-0.01, 0.01)):
        """
        Plots rate of change of the loss function.
        Parameters:
            sched - learning rate scheduler, an instance of LR_Finder class.
            sma - number of batches for simple moving average to smooth out the curve.
            n_skip - number of batches to skip on the left.
            y_lim - limits for the y axis.
        """
        # Pad the start so that derivatives lines up index-for-index with sched.lrs.
        derivatives = [0] * (sma + 1)
        for i in range(1 + sma, len(sched.lrs)):
            # Average change in loss per batch over the last `sma` batches.
            derivative = (sched.losses[i] - sched.losses[i - sma]) / sma
            derivatives.append(derivative)

        plt.ylabel("d/loss")
        plt.xlabel("learning rate (log scale)")
        plt.plot(sched.lrs[n_skip:], derivatives[n_skip:])
        plt.xscale('log')
        plt.ylim(y_lim)

    plot_loss_change(learn.sched, sma=20)

Note that selecting a learning rate once, before training, is not enough. The optimal learning rate decreases while training.

There is more to it

The conventional wisdom is that the learning rate should decrease over time, and there are multiple ways to set this up: step-wise learning rate annealing when the loss stops improving, exponential learning rate decay, cosine annealing, etc.
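As a rough illustration, each of these schedules can be written as a function of the epoch number. The function names, decay constants and intervals below are assumptions. Note that `step_decay` drops the rate at fixed intervals, which stands in for the loss-triggered variant, since that one needs a validation loss to react to.

```python
import math


def step_decay(lr0, epoch, drop=0.5, every=10):
    """Multiply the learning rate by `drop` once every `every` epochs."""
    return lr0 * drop ** (epoch // every)


def exponential_decay(lr0, epoch, k=0.1):
    """Shrink the learning rate smoothly by a factor of exp(-k) per epoch."""
    return lr0 * math.exp(-k * epoch)


def cosine_annealing(lr0, epoch, n_epochs, lr_min=0.0):
    """Follow half a cosine wave from lr0 down to lr_min over n_epochs."""
    cosine = math.cos(math.pi * epoch / n_epochs)
    return lr_min + 0.5 * (lr0 - lr_min) * (1.0 + cosine)


for epoch in (0, 10, 50, 100):
    print(epoch,
          step_decay(0.1, epoch),
          exponential_decay(0.1, epoch),
          cosine_annealing(0.1, epoch, n_epochs=100))
```

All three start at the same value and only differ in how quickly they let go of it.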

Cyclical Learning Rates for Training Neural Networks by Leslie N. Smith describes a novel way to change the learning rate cyclically.
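The simplest policy of this kind is a triangular one: the learning rate climbs linearly from a lower bound to an upper bound and back again, over and over. The sketch below follows that idea; the parameter names and the example bounds are assumptions chosen for illustration.

```python
import math


def triangular_lr(iteration, base_lr, max_lr, step_size):
    """Triangular cyclical learning rate; step_size is half a cycle, in iterations."""
    cycle = math.floor(1 + iteration / (2 * step_size))
    # x runs 1 -> 0 -> 1 within each cycle, so (1 - x) traces a triangle.
    x = abs(iteration / step_size - 2 * cycle + 1)
    return base_lr + (max_lr - base_lr) * max(0.0, 1.0 - x)


for it in (0, 1000, 2000, 3000, 4000):
    print(it, triangular_lr(it, base_lr=0.001, max_lr=0.006, step_size=2000))
```

The bounds themselves can be read off the loss-versus-learning-rate plot from the search described earlier in this article.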

Papers to read

learning rates:

https://towardsdatascience.com/estimating-optimal-learning-rate-for-a-deep-neural-network-ce32f2556ce0

https://miguel-data-sc.github.io/2017-11-05-first/

pytorch:

https://towardsdatascience.com/a-practitioners-guide-to-pytorch-1d0f6a238040

advanced:

http://teleported.in/posts/decoding-resnet-architecture/