Introduction (0mins - 10mins)

See fastai homepage for production hints: Render, AWS BeanStalk, Google App Engine. Building an App is an excellent way to see how the model will work.

Planet dataset (10mins - 56mins)

Step 1: get data

Get data using Kaggle API, get a JSON file and add it to .kaggle.

Unzip the data: ! 7za -bd -y -so x {path}/train-jpg.tar.7z | tar xf - -C {path}

Step 2: bring data in for ML

check out the csv with labels

transform the data with get_transforms to flip, rotate, zoom, warp, lighting transforms. This function returns a tuple of two list of transforms.

The data block api is really cool.

Let's understand more: class Dataset in PyTorch. Define two things, getitem and len which are special magical methods which do some type of behaviour.

A dataset is not enough to train a model, we need a few items/images at a time so we can do a mini-batch so that we can train a model in parallel.

So to create a minibatch we use another PyTorch class called DataLoader. This is will use a dataset in it's constructor and grab items at random and create a batch of whatever size asked for and returns it to the GPU for modelling.

Next we need to hold out some data for validation, and for this we use a fastai class called DataBunch which will bind together a training data loader and valid data loader. train_dl:DataLoader, valid_dl:DataLoader - basically giving the DataLoader the data bunches wanted for the model.

src is creating the image set, where do the images come from, labels, split, and src.datasets turns them into PyTorch datasets, then the dataset is transformed, and dataloader/databunch created.

@ 25min there is a good example with the MNIST dataset.

show_batch is great, because data object knows how to draw itself.

The notebook of all data block API example is located in [GitHub fastai docs_src(https://github.com/fastai/fastai/blob/master/docs_src/data_block.ipynb) and in docs on fastai When transforming data warping is an option in fastai - because photos can be taken at an angle. No need to warp satellite images.

Step 3: train the model

ResNet50 helped make the model perform better.

In learn, set the metrics. When you have a classifier you will have false positives and false negatives and there are lots of ways of calculating this. Threshold required for predictions, there are lots of labels in a in multi label classification so fbeta is used with threshold=0.2 rather than using np.argmax as we used in the black/grizzly/teddy predictions.

We use a partial function here that take one function and changes some of the parameters. This is a really common thing to do! Customisation.

Questions:

- If the model makes an incorrect prediction in a deployed app is there a way to record that error and then improve the model? The user will need to tell you it is wrong, collect feedback. Record in a log. How do you fine tune: unfreeze, create a new data bunch with the mis-classified examples, and pass in the correct classification and train again.

- How do data blocks get used, is there any other examples? Yes they do have to be in a certain order. Quite often used in ETL.

- What resources do you recommend for video, to pull frames and submit to model? Use web APIs. Open cv is popular. A video resource folder will be prepared. MoviePy recommended by Rachel in the resources.

Unfreeze and find a new learning rate. The plot of the learning rate is a little more difficult. Where is shoots up go back about 10. Using discriminative learning rates.

Step 4: Improve model

I want to improve my model! How can I retrain with overfitting.....change the size of the imagery in the data bunch. Transfer learning: start a model with 128x128 images and change the image sizes. Freeze, unfreeze retrain repeat.

Camvid dataset (56mins - 1hr34mins)

Each pixel has a unique number. The training data is created by labelling every pixel in the image of a dataset. This is very common in medical datasets, natural sciences etc.

Datasets for fastai are stored in URLs, details are on the fastai website

Questions:

Can I use lr_find to return an exact number? No, it's still very artisanal, because it requires a certain amount of experimentation.

open_mask is a function used on the segmented image because it is an array of 32 classes so with open_mask fastai will know what to do....apply colours!

Let's load in a databunch

So the CamVid dataset comes with a list of validation images. The reason is that the dataset is created from stills of a video, and if we randomly mixed things up some of the validation data would be almost identical to the training data so we use .split_by_fname_file('../valid.txt')

With transform, because the x and the y datasets are aligned and must have the same extents, alignment etc, then if we do transfor the x, we also need to transfor the y so tfm_y=True

fastai is really great and it combines the two images, x and y, so that they are colour coded imagery.

Let's train a model and find a learner

lr_find and train the model

We are using a u-net architecture. Originally designed for medical imaging.

loss and learning rate

using goes up and then down again. Why is that a good idea? Look at a really cool project by José Fernández Portal plotting the loss function and the path followed by SGD

Loss is often bumpy, so you want a learning rate that is high enough to jump over the bumps and small enough at the end. Learning rate annealing. Leslie Smith -----> find low loss and non-bumpy areas and faster!

learn.reorder.plot_losses() really useful > to see the training and validation losses.

Amazing segmentation results in the The One Hundred Layers Tiramisu: Fully Convolutional DenseNets for Semantic Segmentation. But the fastai and u-net segmentation model is now beating this.

GPU problems, there are some helpers/solutions:

watch -n 1 nvidia-smito view GPU logstorch.cuda.empty_cache()to empty the jupyter cache- mixed precision - means using 16-bit instead of 32-bit floating points. This is relatively new (2years). Add

to_fp16()at the end of the learn object. This will only work on the newest NVIDIA CUDA tech. Sometimes making sometimes less precise it will generalise a little better!

BIWI head pose dataset (1hr34mins - 1hr41mins)

A dot in the middle of the face, so try to create a model to find the centre of the face. Depth sensing camera like x-box connect. Get coordinates to end up with actual coordinates on the face. It's about finding xy coordinates, so we need a CNN that spits out two numbers, it's not a classification model it is a regression (not linear). Regression is any kind of model when you output is continuous numbers or set of numbers.

Pick one folder, a different persons video, as the validation set. Important that the validation is done on a person the model has never see before.

Create model

Create a cnn, then find loss function. In classification the loss model is cross entropy loss, instead for a regression problem we use mean squared error (mse). Confident it is a good model based on the coordinates and the mse value.

NLP with IMDB (1hr41mins-1hr48mins)

The process involves the usual Data Block API to create a data bunch. Start with tokenisation, take words and convert them into standard form of tokens. Each token represents a word. Next do Numericalisation, replacing all tokens with numbers.

Now we can start to create a model. When we do NLP we actually create two models: one is a language model, train it, unfreeze, train more. Then we fine tune and create a classifier, train is etc.

Questions (1hr48mins):

- Why do you need to use stats that are the same as the pre-trained model? Use the stats that the model was trained with, otherwise you will normalise them out.

Back to theory (1hr50mins)

We are using SGD to fit a model. No amount of matrix multiplications will solve the problem, every matrix multiplication is simply a linear model.

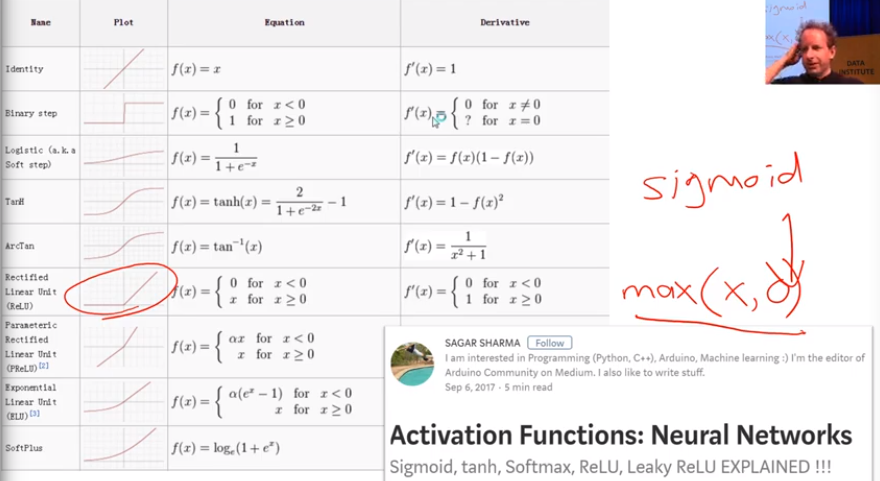

We are doing matrix multiplication or convolution (learn about later) - after each matrix multiplication we do a non-linearity or an activation function.

We used to use sigmoid. Now we almost always use a rectified linear unit aka ReLU. Defined as max(x, 0)

- look at Michael Nielson's book on Neural Networks and Deep Learning for some cool javascript. To find the values of parameters we use gradient descent.

Questions (1hr58mins):

- tokenisation? the model will figure it out

- Satellite imagery with more or less channels? There are several solutions for 2 channel. For 4 channel, you will need to modify the model itself, the initial weight tensors will need to include an extra slice. We will learn more over the next few lessons.

We start lesson 3 looking at an interesting dataset: Planet's Understanding the Amazon from Space. In order to get this data in to the shape we need it for modeling, we'll use one of fastai's most powerful (and unique!) tools: the data block API.

One important feature of the Planet dataset is that it is a multi-label dataset. That is: each satellite image can contain multiple labels, whereas previous datasets we've looked at have had exactly one label per image. We'll look at what changes we need to make to work with multi-label datasets.

Next, we will look at image segmentation, which is the process of labeling every pixel in an image with a category that shows what kind of object is portrayed by that pixel. We will use similar techniques to the earlier image classification models, with a few tweaks. fastai makes image segmentation modeling and interpretation just as easy as image classification, so there won't be too many tweaks required.

We will be using the popular Camvid dataset for this part of the lesson. In future lessons, we will come back to it and show a few extra tricks. Our final Camvid model will have dramatically lower error than an model we've been able to find in the academic literature!

What if your dependent variable is a continuous value, instead of a category? We answer that question next, looking at a keypoint dataset, and building a model that predicts face keypoints with high accuracy.