#coding=utf-8

from sklearn.feature_extraction.text import TfidfVectorizer

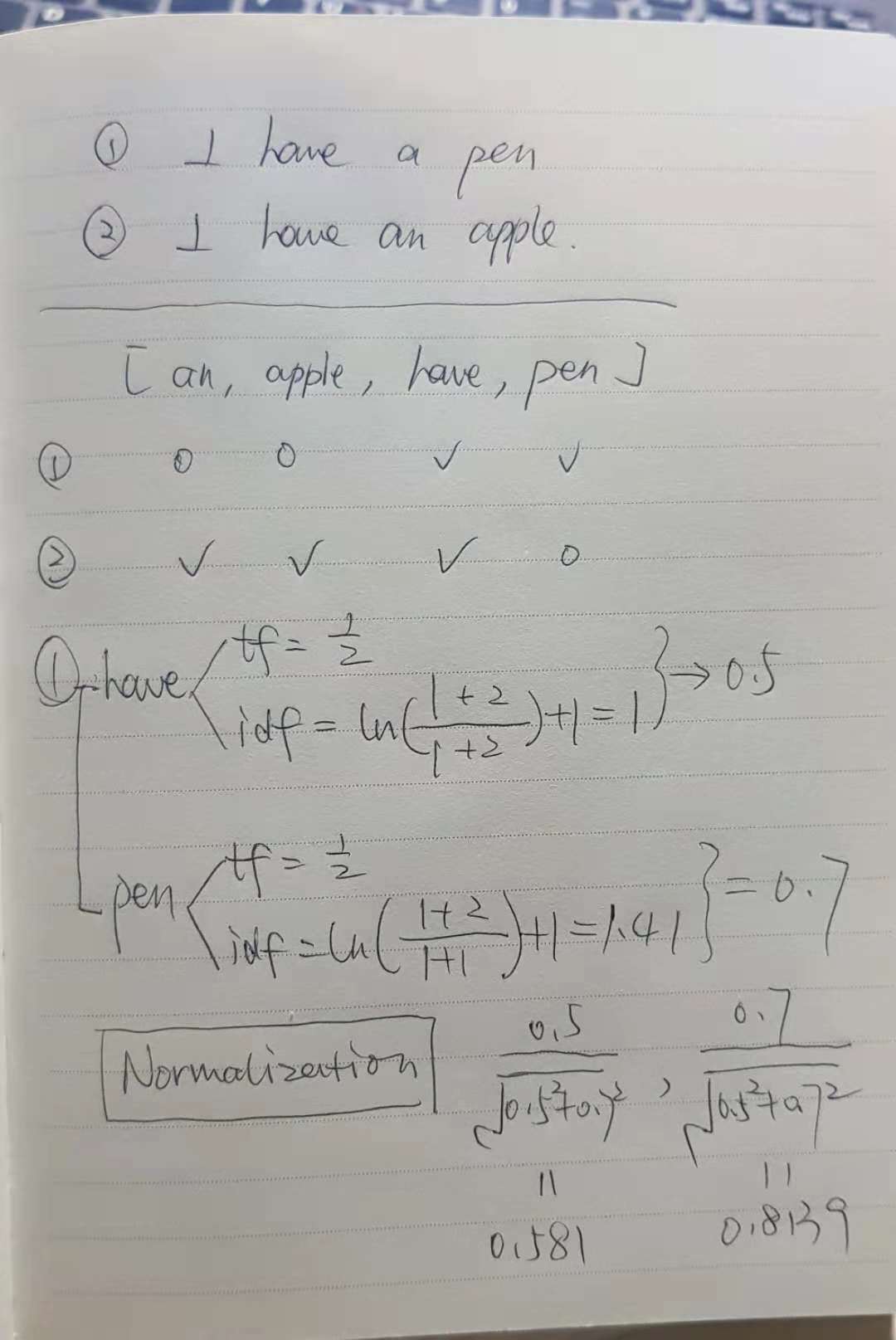

document = ["I have a pen.",

"I have an apple."]

tfidf_model = TfidfVectorizer().fit(document)

sparse_result = tfidf_model.transform(document) # 得到tf-idf矩阵,稀疏矩阵表示法

print(sparse_result)

# (0, 3) 0.814802474667

# (0, 2) 0.579738671538

# (1, 2) 0.449436416524

# (1, 1) 0.631667201738

# (1, 0) 0.631667201738

print(sparse_result.todense()) # 转化为更直观的一般矩阵

# [[ 0. 0. 0.57973867 0.81480247]

# [ 0.6316672 0.6316672 0.44943642 0. ]]

print(tfidf_model.vocabulary_) # 词语与列的对应关系

Word2vector

0. Preparation

jieba cut

1. Bag of words (BoW), word to vector by word frequency

from sklearn.feature_extraction.text import CountVectorizercountvector = CountVectorizer(token_pattern=None)model_fit = countvector.fit(document)print(model_fit)print(model_fit.vocabulary_), 여기 왜 싱클 글자 없을까?token_pattern='(?u)\\b\\w\\w+\\b'regex땜에 단어 길이 둘 넘어야 인식할수있음, 그리고 이렇게token_pattern=r"(?u)\\b\\w+\\b" # must be double \싱클 글자 인식할수있도록 바꿀수있음.model_transform = model_fit.transform(document)print(model_transform), sparse matrix稀疏矩阵,(row_inx, column_idx) valuemodel_transform.toarray(), dense matrix稠密矩阵, sorted_dict순서에 따라 vector를 생성하고, 해당 위치에 단어 frequency를 채우고All in one

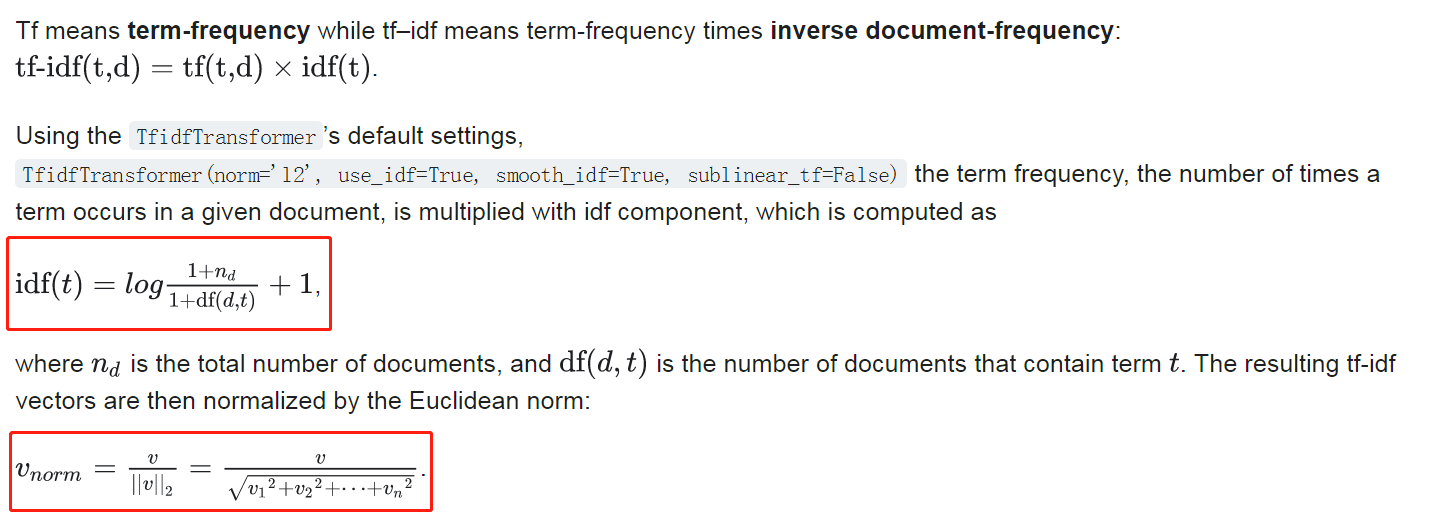

2. Word to vector by TF-IDF

from sklearn.feature_extraction.text import TfidfVectorizertfidf = TfidfVectorizer()model_fit = tfidf.fit(document)print(model_fit.vocabulary_)model_transform = model_fit.transform(document)print(model_transform)model_transform.toarray()Reference: