The same issue in this comment / related to #1103

Closed bobrippling closed 1 year ago

@bobrippling how do you run your Spark application? We never ship the jars in the Python delta-spark package. We ask users to either pass --packages io.delta:delta-core_2.12:1.2.1 or call configure_spark_with_delta_pip to start the Spark application.
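For readers following along, the second option might look roughly like the sketch below. The function name build_delta_session and the app name are made up for illustration; configure_spark_with_delta_pip comes from the delta-spark pip package, and the two config values are the ones used elsewhere in this thread. The imports are done lazily so the snippet can be loaded without pyspark installed.

```python
# Delta-related session settings quoted in this thread.
DELTA_SESSION_CONF = {
    "spark.sql.extensions": "io.delta.sql.DeltaSparkSessionExtension",
    "spark.sql.catalog.spark_catalog": "org.apache.spark.sql.delta.catalog.DeltaCatalog",
}


def build_delta_session(app_name="delta-example"):
    """Create a SparkSession whose Delta jars are resolved from Maven.

    build_delta_session and app_name are illustrative names, not part of
    any library.
    """
    # Lazy imports: pyspark and delta-spark are only needed when called.
    from pyspark.sql import SparkSession
    from delta import configure_spark_with_delta_pip

    builder = SparkSession.builder.appName(app_name)
    for key, value in DELTA_SESSION_CONF.items():
        builder = builder.config(key, value)

    # configure_spark_with_delta_pip sets spark.jars.packages to the Delta
    # artifact that matches the installed delta-spark version.
    return configure_spark_with_delta_pip(builder).getOrCreate()
```

Calling build_delta_session() in a plain Python process should then pull the matching jars from Maven on first start, assuming the machine has network access to a Maven repository.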

Thanks @zsxwing - bringing down delta-storage from maven does the trick.

For future reference, is this the expected approach from a python standpoint, or do we expect the jar to be available as part of pip or similar?

Thanks!

Hi @bobrippling - just to clarify, how did you run your spark application? I'd like to be able to reproduce this to write a test for it.

Hi @scottsand-db - it's fired up as

spark-submit \
    --deploy-mode cluster \
    --conf spark.sql.extensions=io.delta.sql.DeltaSparkSessionExtension \
    --conf spark.sql.catalog.spark_catalog=org.apache.spark.sql.delta.catalog.DeltaCatalog \
    --conf spark.delta.logStore.class=org.apache.spark.sql.delta.storage.S3SingleDriverLogStore \
    [--conf ...]

if that's helpful to you?

Hi @bobrippling - that helps.

Quick question - are you purposefully trying to use the old S3SingleDriverLogStore LogStore implementation? We have reimplemented it in our new delta-storage artifact, with class name io.delta.storage.S3SingleDriverLogStore here.

And by the way, for paths with S3 scheme, we will automatically use this io.delta.storage.S3SingleDriverLogStore implementation. There's no need to specify spark.delta.logStore.class.
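As a small illustration of the advice above, a configuration dictionary could be cleaned of the legacy override before it is handed to Spark. This is only a sketch: drop_legacy_s3_logstore is a hypothetical helper, and it relies solely on the statement in this thread that S3 paths pick up io.delta.storage.S3SingleDriverLogStore without any explicit setting.

```python
LOGSTORE_KEY = "spark.delta.logStore.class"
LEGACY_S3_LOGSTORE = "org.apache.spark.sql.delta.storage.S3SingleDriverLogStore"


def drop_legacy_s3_logstore(conf):
    """Return a copy of conf without the legacy S3 LogStore override.

    Hypothetical helper: other LogStore settings are left untouched.
    """
    cleaned = dict(conf)
    if cleaned.get(LOGSTORE_KEY) == LEGACY_S3_LOGSTORE:
        del cleaned[LOGSTORE_KEY]
    return cleaned


conf = {
    "spark.sql.extensions": "io.delta.sql.DeltaSparkSessionExtension",
    LOGSTORE_KEY: LEGACY_S3_LOGSTORE,
}
cleaned_conf = drop_legacy_s3_logstore(conf)
```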

Btw, zsxwing has already provided a solution here: https://github.com/delta-io/delta/issues/1152#issuecomment-1140082770. You need to include --packages io.delta:delta-core_2.12:1.2.1. If you don't have any more questions, I'll close this issue.

Great, thanks @scottsand-db. I'm happy to move onto the new LogStore implementation (i.e. drop S3SingleDriverLogStore), and to confirm - zsxwing's solution fixes it for me. And thanks for the tip about the path too - that's useful.

No more questions from me - thanks again both

hi @bobrippling @scottsand-db I am using spark 3.2.0 in EMR, I still have this issue after specifying the packages. Do you have any idea on it? Thanks

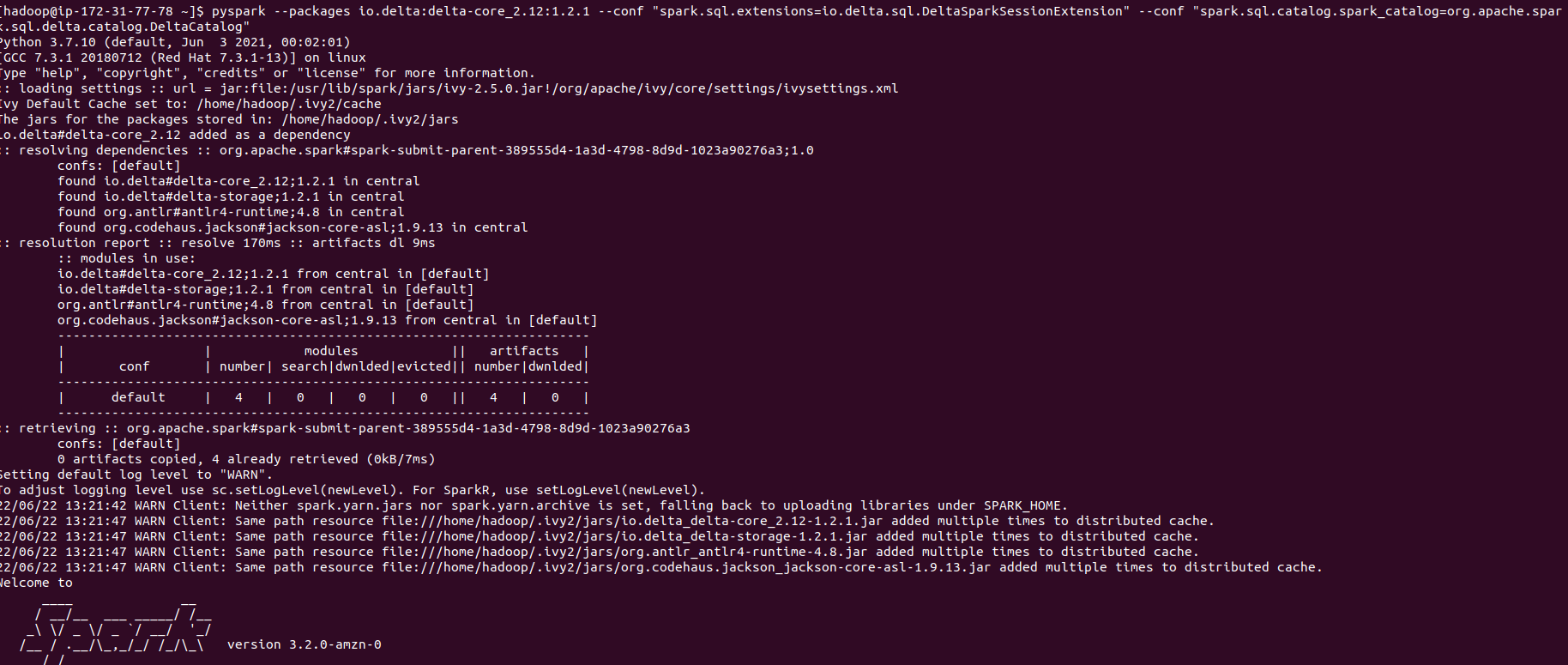

pyspark --packages io.delta:delta-core_2.12:1.2.1 --conf "spark.sql.extensions=io.delta.sql.DeltaSparkSessionExtension" --conf "spark.sql.catalog.spark_catalog=org.apache.spark.sql.delta.catalog.DeltaCatalog"

The error is:

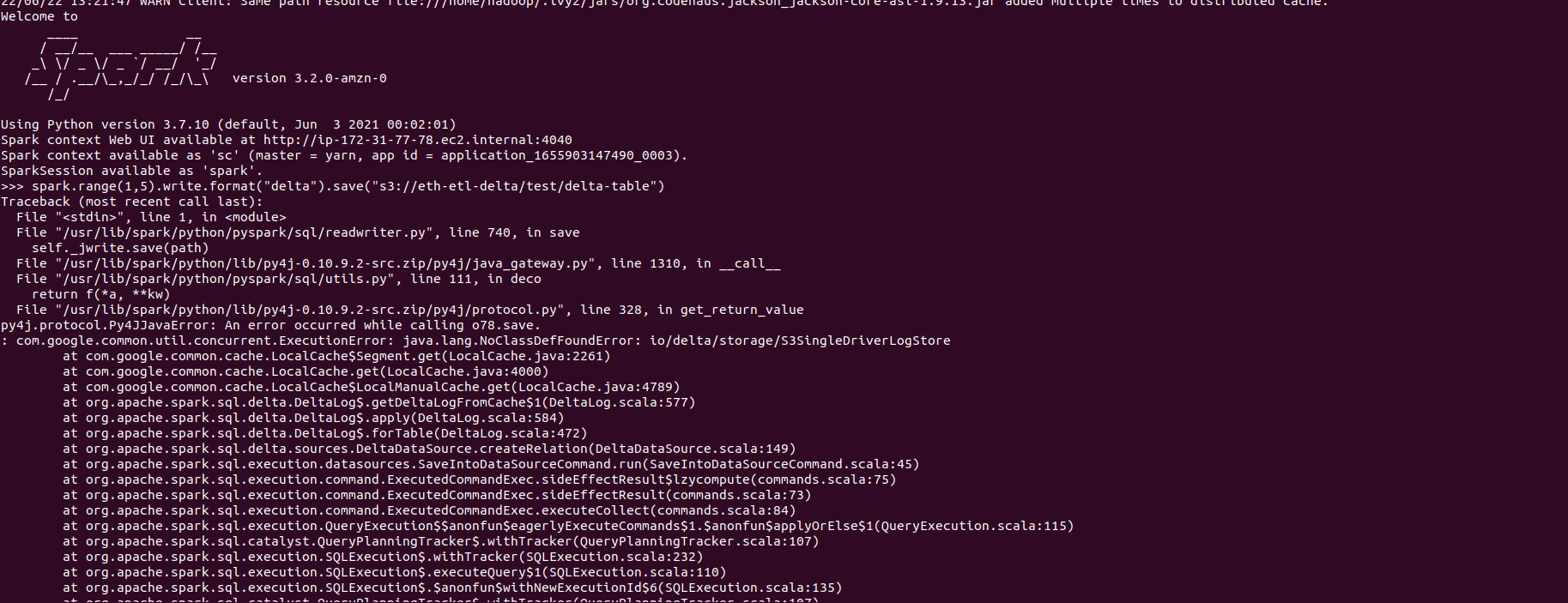

>>> spark.range(1,5).write.format("delta").save("s3://eth-etl-delta/test/delta-table") Traceback (most recent call last): File "<stdin>", line 1, in <module> File "/usr/lib/spark/python/pyspark/sql/readwriter.py", line 740, in save self._jwrite.save(path) File "/usr/lib/spark/python/lib/py4j-0.10.9.2-src.zip/py4j/java_gateway.py", line 1310, in __call__ File "/usr/lib/spark/python/pyspark/sql/utils.py", line 111, in deco return f(*a, **kw) File "/usr/lib/spark/python/lib/py4j-0.10.9.2-src.zip/py4j/protocol.py", line 328, in get_return_value py4j.protocol.Py4JJavaError: An error occurred while calling o78.save. : com.google.common.util.concurrent.ExecutionError: java.lang.NoClassDefFoundError: io/delta/storage/S3SingleDriverLogStore at com.google.common.cache.LocalCache$Segment.get(LocalCache.java:2261) at com.google.common.cache.LocalCache.get(LocalCache.java:4000) at com.google.common.cache.LocalCache$LocalManualCache.get(LocalCache.java:4789) at org.apache.spark.sql.delta.DeltaLog$.getDeltaLogFromCache$1(DeltaLog.scala:577) at org.apache.spark.sql.delta.DeltaLog$.apply(DeltaLog.scala:584) at org.apache.spark.sql.delta.DeltaLog$.forTable(DeltaLog.scala:472) at org.apache.spark.sql.delta.sources.DeltaDataSource.createRelation(DeltaDataSource.scala:149)

The full command:

Hi @hustnn, that first screenshot shows that delta-storage is, in fact, being downloaded. This seems to be some other issue, perhaps related to the .getDeltaLogCache call.

@hustnn could you provide the entire stack trace?

@zsxwing @bobrippling I managed to make it work after copying the delta-storage jar to spark lib.

@hustnn so you have a delta-core jar in the Spark jars directory? If so, this makes sense. This line https://github.com/delta-io/delta/blob/v1.2.1/core/src/main/scala/org/apache/spark/sql/delta/storage/DelegatingLogStore.scala#L159 requires that delta-core and delta-storage be loaded the same way: either both exist in the jars directory, or both are loaded through --packages.

Yes, I see. Thanks @zsxwing
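The condition described above (delta-core in the jars directory without delta-storage next to it) can be checked with a short script. This is a rough sketch using only the standard library; the function names and the file name pattern are assumptions based on the jar names that appear in this thread (delta-core_2.12-1.2.1.jar, delta-storage-1.2.1.jar).

```python
import re
from pathlib import Path

# Matches e.g. delta-core_2.12-1.2.1.jar and delta-storage-1.2.1.jar.
# The pattern is an assumption based on the jar names seen in this thread.
_DELTA_JAR = re.compile(r"^(delta-core|delta-storage)(?:_[\d.]+)?-(\d[\w.]*)\.jar$")


def find_delta_jars(jars_dir):
    """Map artifact name -> list of versions found in jars_dir."""
    found = {}
    for jar in sorted(Path(jars_dir).glob("delta-*.jar")):
        match = _DELTA_JAR.match(jar.name)
        if match:
            found.setdefault(match.group(1), []).append(match.group(2))
    return found


def check_delta_jars(jars_dir):
    """Return human-readable problems; an empty list means nothing suspicious."""
    found = find_delta_jars(jars_dir)
    problems = []
    if "delta-core" in found and "delta-storage" not in found:
        problems.append("delta-core is in the jars directory but delta-storage is not")
    if "delta-storage" in found and "delta-core" not in found:
        problems.append("delta-storage is in the jars directory but delta-core is not")
    versions = {v for vs in found.values() for v in vs}
    if len(versions) > 1:
        problems.append("mixed Delta versions found: " + ", ".join(sorted(versions)))
    return problems
```

On a typical installation this would be called as check_delta_jars(os.path.join(os.environ["SPARK_HOME"], "jars")), assuming SPARK_HOME points at the Spark distribution in use.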

I am using: Pyspark 3.2.1 io.delta 1.2.1

com.google.common.util.concurrent.ExecutionError: java.lang.NoClassDefFoundError: io/delta/storage/S3SingleDriverLogStore

...

22/08/29 14:13:38 INFO SparkContext: Added JAR file:/tmp/spark-5d4cffaa-b1e1-4eeb-9f44-fe3dd4cf3340/delta-core_2.12-1.2.1.jar at spark://py-stats-0dd6da82e9f3422a-driver-svc.streaming.svc:7078/jars/delta-core_2.12-1.2.1.jar with timestamp 1661782417538

22/08/29 14:13:38 INFO SparkContext: Added JAR file:/tmp/spark-5d4cffaa-b1e1-4eeb-9f44-fe3dd4cf3340/delta-storage-1.2.1.jar at spark://py-stats-0dd6da82e9f3422a-driver-svc.streaming.svc:7078/jars/delta-storage-1.2.1.jar with timestamp 1661782417538

22/08/29 14:13:38 INFO SparkContext: Added JAR file:/tmp/spark-5d4cffaa-b1e1-4eeb-9f44-fe3dd4cf3340/delta-contribs_2.12-1.2.1.jar at spark://py-stats-0dd6da82e9f3422a-driver-svc.streaming.svc:7078/jars/delta-contribs_2.12-1.2.1.jar with timestamp 1661782417538

...

I do also:

spark = SparkSession\
    .builder\
    .config("spark.sql.extensions", "io.delta.sql.DeltaSparkSessionExtension")\
    .config("spark.sql.catalog.spark_catalog", "org.apache.spark.sql.delta.catalog.DeltaCatalog")\
    .appName("py-stats")\
    .getOrCreate()

@0xdarkman could you check your Spark's jars directory and see if there are any delta jars there?

yes, this resolves the problem. thank you.

I am not able to write anything. I see _delta_log and I see the data, but it seems I have some problem with writing. Would you mind looking at the question I have just opened on SO?

https://stackoverflow.com/questions/73542462/pyspark-write-stream-to-delta-no-data

Seems like that stackoverflow question has an answer that has resolved the issue. @0xdarkman do you need any more help from us?

I'm also running into same error, posted the question here - https://stackoverflow.com/questions/76858804/deltalake-setup-error-java-lang-noclassdeffounderror-io-delta-storage-logst. Looking at the above comments, I need to copy delta-storage jar. Can you point me to the correct jar that I need to download?

Kernel: /opt/conda/miniconda3/bin/python

Python version: 3.10.8 | packaged by conda-forge | (main, Nov 22 2022, 08:23:14) [GCC 10.4.0]

PySpark version: 3.4.1

spark version: 3.3.0

@theroadbacktonature - which delta version are you using? You just need to use the delta-storage jar at the same version. https://mvnrepository.com/artifact/io.delta/delta-storage
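To make "the same version" concrete, the Maven coordinates for both artifacts can be derived from a single version string. The sketch below is illustrative only: the helper names are made up, the Scala suffix 2.12 is taken from the coordinates quoted in this thread, and the delta-core artifact name applies to the Delta releases discussed here. The coordinates are joined with a bare comma because PYSPARK_SUBMIT_ARGS is split on whitespace, so a space after the comma would likely be parsed as a separate argument.

```python
def delta_packages(delta_version, scala_version="2.12"):
    """Comma-separated Maven coordinates for delta-core and delta-storage.

    Both artifacts use the same delta_version. No whitespace is inserted,
    since the result is meant to be a single --packages argument.
    """
    coords = [
        f"io.delta:delta-core_{scala_version}:{delta_version}",
        f"io.delta:delta-storage:{delta_version}",
    ]
    return ",".join(coords)


def pyspark_submit_args(delta_version):
    """Build a PYSPARK_SUBMIT_ARGS value with the Delta settings from this thread."""
    parts = [
        "--packages", delta_packages(delta_version),
        "--conf", "spark.sql.extensions=io.delta.sql.DeltaSparkSessionExtension",
        "--conf", "spark.sql.catalog.spark_catalog=org.apache.spark.sql.delta.catalog.DeltaCatalog",
        "pyspark-shell",
    ]
    return " ".join(parts)
```

The result could be assigned to os.environ["PYSPARK_SUBMIT_ARGS"] before the first SparkSession is created.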

I'm using Delta 2.3. I downloaded the delta-storage jar and am also passing it as a dependency when creating the Spark session. Now I get a different error during the write emp_details.write.format("delta").mode("overwrite").save(delta_path):

os.environ['PYSPARK_SUBMIT_ARGS'] = '--packages io.delta:delta-core_2.12:2.3.0, io.delta:delta-storage:2.3.0 --conf spark.sql.extensions=io.delta.sql.DeltaSparkSessionExtension --conf spark.sql.catalog.spark_catalog=org.apache.spark.sql.delta.catalog.DeltaCatalog pyspark-shell'

Error:

23/08/08 16:33:43 WARN TaskSetManager: Lost task 3.0 in stage 1.0 (TID 4) (cluster-b6b8-w-1.c.test-382806.internal executor 2): java.lang.ClassCastException: cannot assign instance of java.lang.invoke.SerializedLambda to field org.apache.spark.sql.catalyst.expressions.ScalaUDF.f of type scala.Function1 in instance of org.apache.spark.sql.catalyst.expressions.ScalaUDF
    at java.base/java.io.ObjectStreamClass$FieldReflector.setObjFieldValues(ObjectStreamClass.java:2076)
    at java.base/java.io.ObjectStreamClass$FieldReflector.checkObjectFieldValueTypes(ObjectStreamClass.java:2039)
    at java.base/java.io.ObjectStreamClass.checkObjFieldValueTypes(ObjectStreamClass.java:1293)
    at java.base/java.io.ObjectInputStream.defaultCheckFieldValues(ObjectInputStream.java:2512)
    at java.base/java.io.ObjectInputStream.readSerialData(ObjectInputStream.java:2419)

@theroadbacktonature - why are you using PySpark version: 3.4.1 and spark version: 3.3.0?

This is an existing Dataproc cluster used by the ML team for ad hoc exploration. I'm just setting up Delta. I'm currently setting up Delta 2.3, which is compatible with Spark 3.3.0. Should I try Delta 2.4? Any recommendations for possible solutions would be appreciated.
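The mismatch asked about above (PySpark 3.4.1 on the Python side, Spark 3.3.0 on the cluster) can be detected up front by comparing the two version strings. A minimal sketch follows, with hypothetical helper names; it only compares major and minor numbers and says nothing about which Delta release fits.

```python
def major_minor(version):
    """Return (major, minor) from a version string such as '3.3.0'."""
    parts = version.split(".")
    return int(parts[0]), int(parts[1])


def versions_match(pyspark_version, spark_version):
    """True when the Python package and the cluster agree on major.minor."""
    return major_minor(pyspark_version) == major_minor(spark_version)


# The two combinations reported in this thread.
first_report = versions_match("3.4.1", "3.3.0")
second_report = versions_match("3.3.2", "3.3.0")
```

In a notebook the inputs would come from pyspark.__version__ and spark.version, the same two values quoted in the comments here.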

PySpark version (pyspark.version) : 3.4.1 and spark version (spark.version): 3.3.0 is from Jupyter notebook attached to Dataproc cluster. On Dataproc shell, below is the version.

I tried Delta 2.4, and also tried Delta 2.3 directly in the Spark shell instead of Jupyter. In both cases I run into the same error: "cannot assign instance of java.lang.invoke.SerializedLambda..."

Earlier I have executed pip install delta-spark==2.3.0, looking at the logs it brought pyspark 3.4, hence uninstalled it. Have below configuration now. But still running into same error "java.lang.ClassCastException: cannot assign instance of java.lang.invoke.SerializedLambda to..."

Current config:

Kernel: /opt/conda/miniconda3/bin/python

Python version: 3.10.8 | packaged by conda-forge | (main, Nov 22 2022, 08:23:14) [GCC 10.4.0]

PySpark version: 3.3.2

spark version: 3.3.0

no, all good. thanks

Bug

Describe the problem

I've upgraded python delta-spark to 1.2.0 (and pyspark to 3.3.0). When I run pyspark, I see the below error (in observed results).

I believe as part of #954, the LogStore class was changed to now use io.delta.storage.S3SingleDriverLogStore.

The problem appears to be that the LogStore class isn't present in the python artefact leading to the below error. Is this an oversight in the packaging, or should I be specifying an extra dependency to bring in this class?

I also see errors about the S3SingleDriverLogStore, which I assume are consequences of this.

Steps to reproduce

Upgrade to delta-spark 1.2.0, and run via python (with the default logger).

Observed results

Expected results

A successful run, using the LogStore class to log to S3.

Further details

n/a

Environment information

Willingness to contribute

The Delta Lake Community encourages bug fix contributions. Would you or another member of your organization be willing to contribute a fix for this bug to the Delta Lake code base?