Thank you for taking the time to write such a thoughtful review! We've responded to your points individually below.

I do wonder if some warnings should be given in the article on that visualization can give a false sense of understanding

As of 73e80b2561a64a92a1b8182c65c48fb7a3ee1df8, we have a section discussing the trustworthiness of these interfaces, and forward reference it from the introduction. We think this is an important direction for future work.

Is it really necessary to probe into the internals? … Is it actually useful?

Over the last few days, we’ve added a lot of interactive captions to expose the kind of insights one can gain from our interfaces.

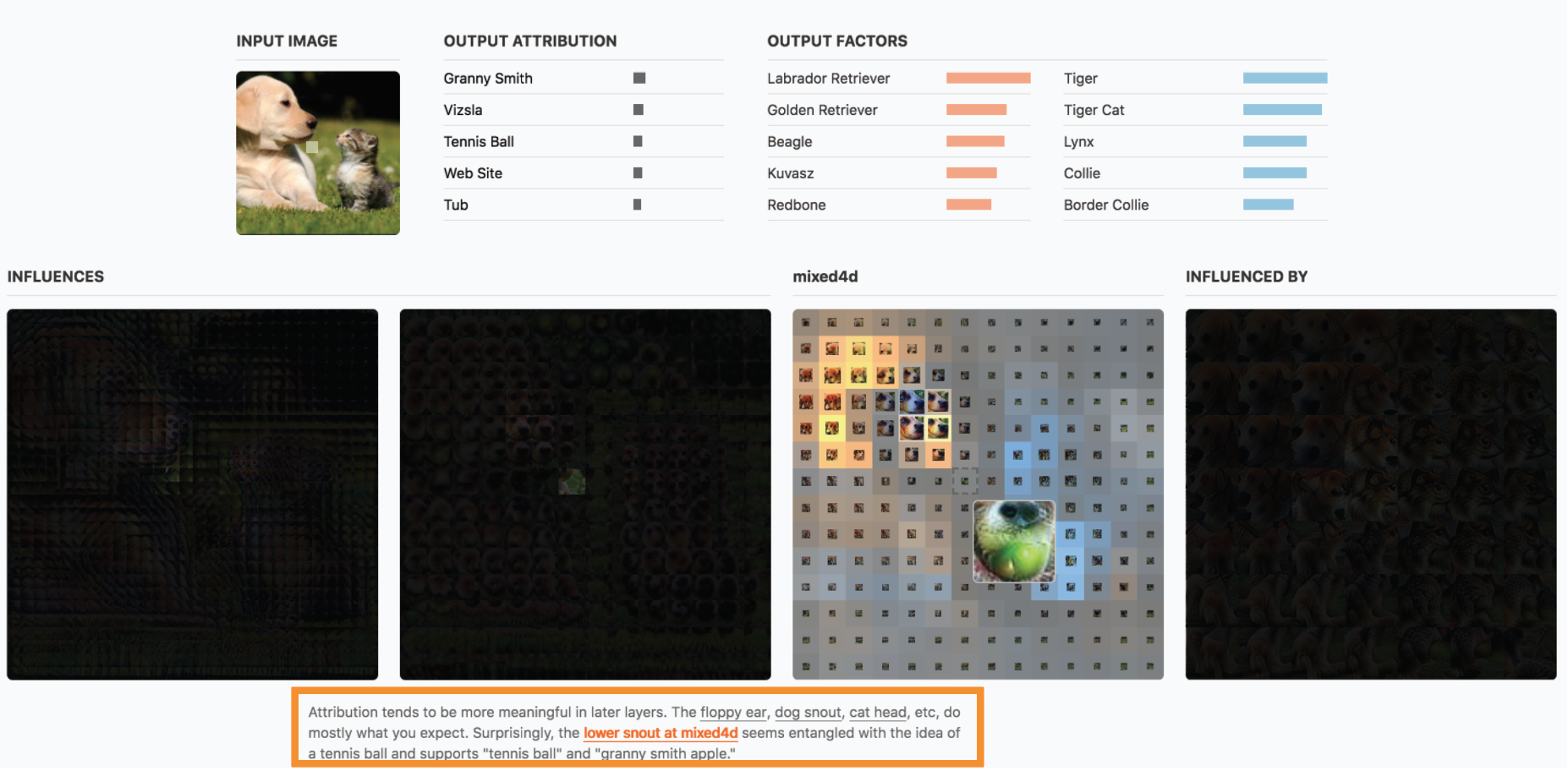

One example we find exciting is the how the model “hallucinates” a tennis ball into the mouth of the Labrador retriever. At first glance, it seems surprising that the dog cat image has the third most likely classification be a tennis ball. Digging deeper with the spatial attribution interface, however, we see that the lower snout along with background at “mixed4d” get perceived as a snout holding a tennis ball. This in turn leads to an increased probability of “tennis ball” and also “granny smith apple”. The interesting thing about this is that it seems to reveal a kind of entanglement of features: the model has entangled snouts with tennis balls, and then tennis balls with apples.

We don’t see a way to describe something like this without reference to the model’s internal abstractions. In some sense, one might ultimately care about the input to output mapping the network represents, but the space of mappings from images to labels is very large. Without reference to details of the procedure, it’s unclear to me how one could describe things like this.

Is it consistent or stable? For two functionally equivalent networks with different architectures, would the interfaces give similar view?...

The interface is stable in the sense that the same interface can be used for multiple models. The grammar of how different building blocks compose is independent of the particular use case. Of course, since the interface is describing decisions in terms of the model’s internal abstractions, those abstractions can vary greatly between models. This is what we want: if the model is making decisions in a different way, our interface should expose that. In practice, we find that some particularly important features recur in many models, while others are unique.

(There is an interesting research direction related to this, however, about how to highlight the similarities and differences.)

“Interpretability” to me means the degree to which a model is interpretable. It should be an attribute of a model. (common use: linear models are more interpretable than deep ones) But the article is mostly about for given a model, how to probe into its internals.

This is an important use of the term, but "interpretability" is increasingly the name for the field of techniques for understanding models. ("Transparency" seems to have died due to legal connotations.) When we use "interpretability", we mean this broader sense -- either the field of study, the techniques of that field, or the questions of interest ("how can we understand different aspects of what ML models do?").

The article only deals with image models. Should mention this somewhere. I actually think there is the potential of doing the whole thing for text models too.

We totally agree! As of 73e80b2561a64a92a1b8182c65c48fb7a3ee1df8, there is a sentence about this in the introduction.

When you say you use Googlenet, I prefer it immediately mentioned that the techniques don’t work well for e.g. ResNet (yet), as part of main text, not just a footnote. Otherwise I worry that an unfamiliar reader can walk away with the wrong impression that this works well for all popular image networks.

Done as of 7f7b4ee80b5f5b2fe1421071a7d9be750dbecff1.

There are many types of feature visualizations, .. There are people (like me) who have doubts about how good deepdream is as a feature visualization method, as optimization procedure could introduce artifacts.

As of 90979c453314, we've added an additional clarification that we're using a specific technique for all examples in this article and why chose to use optimization-based feature visualization (to separate things that caused a feature to activate vs merely correlations).

More generally, when we introduce feature visualization, we both link to our previous Distill article that conducts a systematic review, and cite a wide variety of techniques. We also explicitly call out using alternate feature vis techniques as a direction for future work.

Should briefly describe what mixed3a is.

Done as of 8043789d6c0.

For the visualization with varying box sizes, describe which correspond to activation level? The length of edge, or area of the box?

We’re using area, which has been clarified as b343adc5.

What does “linearly approximating” mean? Either give a reference, or describe it more precisely.

Done as of 3c4312b6ec3. We also provide notebooks with reference implementations.

Footnote 6 seems to be in some functional PL syntax? Doesn’t look like it compiles. (I read Haskell only.) Seems an overly syntactic way of describing the space.

In 3a6af47eba0, we clarify that this is a technique used in some HCI communities. We think it’s a powerful technique and wanted to provide an initial exploration of how it applies to interpretability interfaces.

The following peer review was solicited as part of the Distill review process. The review was formatted by the editor to help with readability.

The reviewer chose to waive anonymity. Distill offers reviewers a choice between anonymous review and offering reviews under their name. Non-anonymous review allows reviewers to get credit for the service them offer to the community.

Distill is grateful to the reviewer, Qiqi Yan, for taking the time to write such a thorough review.

Summary:

There have been tons of techniques in the literature developed for probing into image networks to try to interpret how they operate. This article nicely categorizes those techniques into (1) feature visualization (2) feature attribution (3) feature grouping, and shows that these can be integrated into an interactive interface on top to let users get a sense of the internals of an image network.

Overall I definitely recommend accepting the article. The community would love this article, partially because it’s a nice integration of techniques, partially because it is visually appealing (I do wonder if some warnings should be given in the article on that visualization can give a false sense of understanding).

One challenging topic that I wish gets more discussion is, for these efforts of probing / visualizing internals of image networks: Is it really necessary to probe into the internals? The network is trained to have some reasonable human-understandable i/o behavior. Maybe doing analyses also on i/o level is human-friendly, usually good enough, and seemingly safer as internals are often too complex / misleading. Is it actually useful? If yes, any concrete or anecdotal example of how the interfaces to internals have informed people to take some actions, like fixing a type of misclassification? Is it consistent or stable? For two functionally equivalent networks with different architectures, would the interfaces give similar view? For two identical networks, with different parameter initialization, would the interfaces give similar view? Addressing these questions may not be easy or necessary for this article. But currently the article seems to at least lack discussions on motivations and utilities. The main sentence on motivation I can find is: by reference 1 (another long article), “we need to both construct deep abstractions and reify (or instantiate) them in rich interfaces”. Honestly I’m not sure how to interpret this deep sounding but actually super vague sentence.

Some comments:

“Interpretability” to me means the degree to which a model is interpretable. It should be an attribute of a model. (common use: linear models are more interpretable than deep ones) But the article is mostly about for given a model, how to probe into its internals.

The article only deals with image models. Should mention this somewhere. I actually think there is the potential of doing the whole thing for text models too.

When you say you use Googlenet, I prefer it immediately mentioned that the techniques don’t work well for e.g. ResNet (yet), as part of main text, not just a footnote. Otherwise I worry that an unfamiliar reader can walk away with the wrong impression that this works well for all popular image networks.

There are many types of feature visualizations, using image patches that maximize activations, deepdream style optimization, deconvolution, etc. I think there are pros and cons for each of them. Somewhere the article switches to using “feature visualization” to refer to only the deep dream style visualization, without explanations. There are people (like me) who have doubts about how good deepdream is as a feature visualization method, as optimization procedure could introduce artifacts.

Should briefly describe what mixed3a is.

For the visualization with varying box sizes, describe which correspond to activation level? The length of edge, or area of the box?

What does “linearly approximating” mean? Either give a reference, or describe it more precisely.

Footnote 6 seems to be in some functional PL syntax? Doesn’t look like it compiles. (I read Haskell only.) Seems an overly syntactic way of describing the space.

Overall on the writing style, it’s a long article, I’d wish there were more highlighted keywords / bulleted sentences so that the experts can skim through more easily.