@antondachauer thanks for your report.

I tried to reproduce the build, but I think the config files are missing:

    Removing intermediate container 0b9e67707d26
     ---> 9915b175f1aa
    Step 4/12 : ADD nginx.conf /etc/nginx/
    ERROR: Service 'nginx' failed to build: ADD failed: stat /var/lib/docker/tmp/docker-builder232539285/nginx.conf: no such file or directory

Issues without logs and details cannot be investigated and will be closed.

Expected behavior

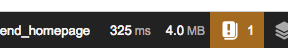

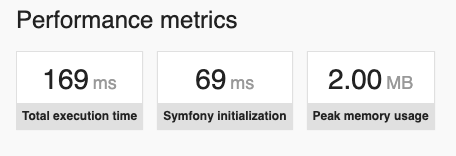

Page loading time < 10sec (Symfony dev env)

Page loading time < 3sec (Symfony prod env)

Actual behavior

Page loading time > 40sec (dev)

Information

Steps to reproduce the behavior

docker-compose.yml

php7-fpm Dockerfile

See https://github.com/docker-library/php/blob/master/7.1/fpm/Dockerfile

nginx Dockerfile