Did someone try to mount the volume as delegated or cached? https://docs.docker.com/docker-for-mac/osxfs-caching/

Closed dimasnake closed 2 years ago

@trickreich @raupie @er1z There is a possible solution for both Windows and OSX: Vagrant. It lets you configure shared folders using rsync, which has far better performance.

Docker for Windows pretty much didn't detect file changes at all, or took ~30 seconds to reload. Using rsync, it dropped to below 6 seconds, and I am pretty sure I can optimize this further in Webpack (Node.js build tool). This is with 5 containers and 3 different volumes.

In my case I work with Unity and use Docker for my server side stack, so it's pretty important to be able to run both on Windows.

Edit: It's necessary to cache the vendor folders though. In my case I exclude node_modules/ from rsync, so only a few MB of files are left, and instead create a symlink to a local node_modules/ in the container. Every container installs its own dependencies, and you have to rebuild the images every time you add new packages.

Edit 2: With further optimization of the Vagrant (using rsync) + Docker configuration, primarily avoiding any unnecessary I/O, and after updating to the newest Webpack, I was able to lower the build times to 200ms. I am actually surprised how well it runs right now. So if you are on Windows, Vagrant is a viable option, but you have to be very careful: keep all of your vendor files/cache in your containers and only rsync your source code.

rsync is just a workaround, not a real solution. The only environment where Docker works flawlessly is Linux. The Windows version is just a „dummy” thing because of I/O. This won't change unless someone creates a way to expose Windows-host data to the VM. And as time shows, it's an almost impossible task to complete.

To really speed up you have to avoid using shared volumes. With a shared volume I have to wait more than 10 seconds, sometimes more than 30, to load the page. Without shared volume the page is delivered in less than 200 milliseconds.

My setup in a Symfony project looks like this: there is a web container and a tools container. The tools container is used as a shell for command line tools like the Symfony console or composer. For this I use a shared volume to sync changes (especially the vendor folder) back to the Windows filesystem. But the web container doesn't use a shared volume. Instead, any changes are copied into the container with "docker cp". This is a job for the IDE. I use IntelliJ, which has a "File Watchers" feature. Every change in the IDE is copied into the container instantly. If you change a file in the tools container, the File Watcher notices the change when you activate the IDE and copies it into the web container. For the logs and cache directories I still use a Docker volume which isn't synchronized with Windows. Maybe that's not necessary.

I have set these windows environment variables:

DOCKER_TLS_VERIFY=1

DOCKER_CERT_PATH=C:\Users\<username>\.docker\machine\machines\default

DOCKER_HOST=tcp://192.168.99.100:2376

The file watcher config looks like this:

Program: C:\Program Files\Docker Toolbox\docker.exe

Arguments: cp $FilePath$ [containername]:/project/$FilePathRelativeToProjectRoot$

But first you have to copy the whole project folder into the container. Initialize the container with:

docker cp <projectfolder>/. [containername]:/project

You also have to do this if the filesystem is out of sync. Usually it's only needed once.
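For readers who want to try this without an IDE, here is a minimal sketch of the same two steps as shell functions. It assumes a POSIX shell (for example Git Bash on Windows) with `docker` on the PATH. The function names `init_sync` and `sync_file` are hypothetical, and the `docker exec ... mkdir -p` step is an addition that is not part of the file watcher config above; it assumes the container is running and has `mkdir`.

```shell
#!/bin/sh
# Sketch of the "docker cp" workflow described above.
# CONTAINER_ROOT mirrors the /project path used in the comment.
CONTAINER_ROOT=/project

# One-time (or whenever the copies drift apart): copy the whole project folder.
init_sync() {
  project_dir=$1
  container=$2
  docker cp "$project_dir/." "$container:$CONTAINER_ROOT"
}

# Per change: copy one file, keeping its path relative to the project root.
sync_file() {
  project_dir=$1
  container=$2
  file=$3
  rel=${file#"$project_dir"/}
  # docker cp does not create missing parent directories in the container,
  # so create them first (assumption: container is running and has mkdir).
  docker exec "$container" mkdir -p "$CONTAINER_ROOT/$(dirname "$rel")"
  docker cp "$file" "$container:$CONTAINER_ROOT/$rel"
}
```

A file watcher could then call something like `sync_file /path/to/project <containername> /path/to/project/src/Foo.php` on every save.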

@jarkt great advice! I am experimenting with this option right now, but what I am missing in your file watcher config is the container name in your docker cp command. Shouldn't this be more like

Arguments: cp $FilePath$ <containerName>:/project/$FilePathRelativeToProjectRoot$

? Or how does the docker cp command know where to copy it?

Without syncing the cache back, the Symfony plugin cannot operate as effectively as it could with access to the fresh container configuration.

@tomasbruckner Yes, you're right. It was a display issue. GitHub seems to filter the content between < and > in comments.

By the way, what I described is not my current config anymore. I now use SFTP sync. Here is an example, note the comments:

docker network create symfony

docker volume create symfony_tmp

docker volume create symfony_npm

docker volume create symfony_project

# /tmp for symfony cache and log files - not synced with host system:

volume_tmp="-v symfony_tmp:/tmp/"

# /project/node_modules for npm/yarn - not synced with host system:

volume_npm="-v symfony_npm:/project/node_modules/"

# /project for tools container - synced with host system:

volume_project_shared="-v $(cd "$(dirname "$0")/.." || exit; pwd):/project/"

# /project for web and sftp containers - not synced with host system:

volume_project_web="-v symfony_project:/project/"

volume_project_sftp="-v symfony_project:/home/project/upload/"

docker_api="-v /var/run/docker.sock:/var/run/docker.sock"

network="--net symfony"

db_params="\
-e MYSQL_ROOT_PASSWORD=secret123 \
-e MYSQL_DATABASE=symfony \
-e MYSQL_USER=symfony \
-e MYSQL_PASSWORD=secret123 \
"

sftp_users="-e SFTP_USERS=project:secret123:::upload"

docker create $network --name symfony_db $db_params -p 3306:3306 mariadb:10

docker create $network --name symfony_dbadmin -e PMA_HOST=symfony_db -p 8080:80 phpmyadmin/phpmyadmin

docker create $network --name symfony_web $volume_npm $volume_tmp $volume_project_web -p 80:8080 symfony/web

docker create $network -it --name symfony_tools $volume_npm $volume_tmp $volume_project_shared $docker_api symfony/tools

# Build container for file watching in /project/assets for automatic rebuild:

docker create $network -it --name symfony_builder --volumes-from=symfony_web symfony/tools \
/bin/bash -c "yarn install && yarn encore dev --watch --watch-poll=500"

docker create --name symfony_sftp $sftp_users $volume_project_sftp -p 2222:22 --entrypoint "" atmoz/sftp \
/bin/bash -c "/entrypoint chown project /home/project/upload && /entrypoint"

This is hard to describe, but maybe good for inspiration. What matters is which folder is shared with whom. "symfony" is the project name, and the images "web" and "tools" are not public. You have to create them with everything you need, like a web server, nodejs and yarn. The relevant part is the symfony_sftp container. Connect via SFTP to synchronize the project folder. I'm not sure if you can do it in both directions with WinSCP, but you can use IntelliJ for it. Hope it helps someone.

I see this issue when running git commands inside the container on a reasonably large git repository (12k commits, 4k files).

Running git status on a Windows 10 host takes ~0.08 seconds, while running it in a mounted volume in a windowsservercore:1803 container takes around 40s. It also massively increases CPU usage while running.

Issues go stale after 90d of inactivity.

Mark the issue as fresh with /remove-lifecycle stale comment.

Stale issues will be closed after an additional 30d of inactivity.

Prevent issues from auto-closing with an /lifecycle frozen comment.

If this issue is safe to close now please do so.

Send feedback to Docker Community Slack channels #docker-for-mac or #docker-for-windows. /lifecycle stale

/remove-lifecycle stale

This is still a current issue.

same issue, so frustrated!!!!!!!!!!

windows colleagues in my team reported this issue recently too!

same issue, is there any chance it will be fixed for windows

I had bad performance because my Windows user is on a corporate network. I found a solution by configuring Docker for Windows volumes, and I get better performance now. The idea is to create a local user and share the C drive with that user.

Hope it helps

I reinstalled Docker completely (including deleting the VM), and I still have the same problems with slow volumes.

Just to add to the discussion and possible solutions:

I am fine with files being copied slowly, or not being available at once in the container. However, file access from within the container should not be slowed down (at least not that much), and it should not get even slower when more files are mounted in the volume (files which are not changing most of the time).

So if there is some midpoint solution possible, I'd go for faster file access. It is especially a problem for dev environments when using stuff like npm (node_modules) and composer (vendor). I hoped that this would be achieved with the :cached volume mode, but tbh it does not resolve the issue; it just makes it a bit faster (which is not enough on larger projects).

Yes, you can prepare projects not to mount everything, but that's not always an option.

Cached mounts solved most of the problems on Mac, hopefully it will land in DfW as well. I have high hopes this will improve with Microsoft pushing FOSS and embracing linux for developer stuff. Their own developers are also using Docker and do feel the pain IMHO. They did some impressive work making MSSQL server work on linux with their kernel proxy layer.

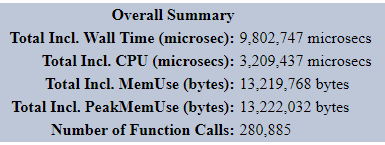

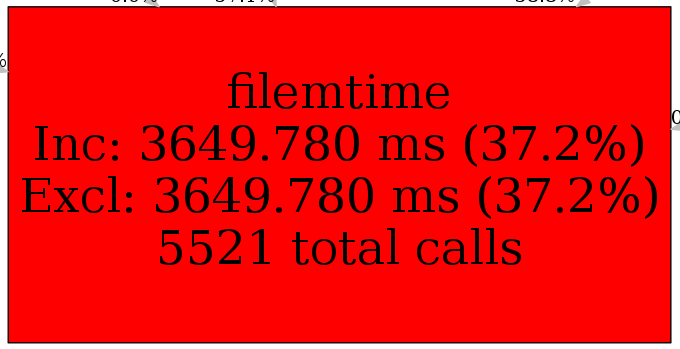

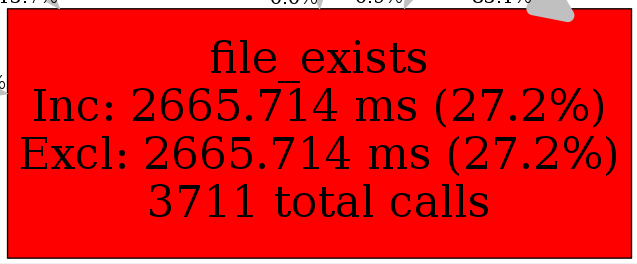

Example of concrete numbers, if anyone is interested

My use case is similar to most people's here: large git project for an Apache PHP web application. The file access speeds make docker almost unusable. All git operations take upwards of 1 minute if run inside the container. composer and npm updates take long enough that you might as well go and make a cup of tea. And many web pages in the project take up to 1 minute to load.

Running git operations on the host is obviously much quicker, but unfortunately the makeup of the project means that helper scripts need to run many git operations, plus composer and npm inside the container - otherwise there are too many other issues (including permission issues) if run directly on the windows host.

I may have a look at creating a volume using the cifs driver and see if manually adjusting the r/wsize is possible and makes a difference.

This really is a fundamental problem with Docker for Windows though.

P.S. I have also tried LCOW mode, but that was even slower!

> I may have a look at creating a volume using the cifs driver

AFAIR DfW already does. :)

Changing r/wsize changes almost nothing; that is not the way. I wonder if my life will be long enough to see an I/O bridge with fair performance. :D

https://devblogs.microsoft.com/commandline/announcing-wsl-2/

Microsoft announced WSL 2. This means Docker can run natively on Windows without Hyper-V or VirtualBox. I think this will fix the shared volumes issue.

@jva91 Where does it say that Hyper-V will not be required?

From Wikipedia:

In 2019, Microsoft announced a completely redesigned WSL architecture (WSL 2) to use lightweight Hyper-V VMs hosting actual (customized) Linux kernel images, claiming full syscall compatibility.

Windows Subsystem for Linux 2 (WSL 2) is the next version of WSL and is based on a Linux 4.19 kernel shipping in Windows. This same kernel is technology built used for Azure and in both cases helps to reduce Linux boot time and streamline memory use. WSL 2 also improves filesystem I/O performance, Linux compatibility, and can run Docker containers natively so that a VM is no longer needed for containers on Windows. The first WSL 2 preview will be available later this year.

A separate VM for containers is not needed, but if I understand correctly, WSL 2 itself will run inside a "lightweight utility virtual machine" (which is probably Hyper-V).

More info from Microsoft devs about Docker in WSL 2 - https://youtu.be/lwhMThePdIo?t=757

All git operations should be done on the host machine.

There is a difference between volumes that are managed by the host, and volumes that are managed by Docker. Docker-managed volumes do not have this issue.

You can also mount another volume inside of a directory that is already inside of a volume.

This is my development environment, so my source code needs to be mounted from the host. This is something we really can't get around. But the libraries I use (/node_modules) and my generated code (/dist) can be completely managed by the container.

First I mount my local directory:

.:/app

Since I don't really care about the build results here, I can mount an anonymous volume to /dist:

/app/dist

But /node_modules is a different story. 1: I might not want to run yarn install every time I restart. 2: I might need to sync the content back to the host so that my IDE is able to see the imported libraries and can provide IntelliSense etc.

So I create a named volume (my_node_modules):

docker volume create my_node_modules

and I mount it:

my_node_modules:/app/node_modules

Now everything runs at least 5x faster.
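As a rough sketch, the three mounts described above could be combined into a single `docker run` call like this. The image name `node:lts`, the working directory flag, and the `run_dev` helper name are assumptions for illustration; the comment does not say which image or command is actually used.

```shell
#!/bin/sh
# Sketch: host source at /app, anonymous volume for /app/dist,
# named Docker-managed volume for /app/node_modules.
# IMAGE is an assumption; override it with your own development image.
IMAGE=${IMAGE:-node:lts}

run_dev() {
  # Creating a volume that already exists is harmless, so this is safe to repeat.
  docker volume create my_node_modules >/dev/null
  docker run --rm -it \
    -v "$PWD":/app \
    -v /app/dist \
    -v my_node_modules:/app/node_modules \
    -w /app \
    "$IMAGE" "$@"
}
```

Called from the project directory, for example as `run_dev yarn install`, only the source files go through the slow host mount, while the dependency and build directories stay on Docker-managed storage.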

I ran the benchmark mentioned above:

# in node_modules: (docker-managed volume)

root@325fa235749d:/app/node_modules# dd if=/dev/zero of=test.dat bs=1024 count=100000

100000+0 records in

100000+0 records out

102400000 bytes (102 MB) copied, 0.267618 s, 383 MB/s

# in the app directory (host-managed volume)

root@325fa235749d:/app# dd if=/dev/zero of=test.dat bs=1024 count=100000

100000+0 records in

100000+0 records out

102400000 bytes (102 MB) copied, 75.9278 s, 1.3 MB/s

Oh, so about 300x.
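To repeat this comparison in other directories, the same `dd` call can be wrapped in a small function. This is only a sketch: `bench_write` is a hypothetical helper name, and the optional block count parameter is an addition for quicker runs.

```shell
#!/bin/sh
# Sketch: run the dd write test from the comment above in a given directory
# and print only dd's summary line, then remove the test file.
bench_write() {
  dir=$1
  count=${2:-100000}   # number of 1 KiB blocks, defaulting to the value used above
  dd if=/dev/zero of="$dir/test.dat" bs=1024 count="$count" 2>&1 | tail -n 1
  rm -f "$dir/test.dat"
}
```

Inside the container, `bench_write /app/node_modules` followed by `bench_write /app` would reproduce the two measurements. Note that this measures sequential write throughput only.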

In order to sync /node_modules back to the host, I created another container that mounts my named volume and /app/node_modules. Then I run rsync to copy the latest libraries back to the host.
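One possible shape for such a helper container is sketched below. The comment does not say which image is used, so the `alpine` image with `rsync` installed on the fly, the `/from` and `/to` mount points, and the function name are all assumptions.

```shell
#!/bin/sh
# Sketch: copy the contents of the named volume back to a host directory
# using a throwaway container. Assumes network access to install rsync.
sync_node_modules_to_host() {
  host_dir=${1:-$PWD/node_modules}
  mkdir -p "$host_dir"
  docker run --rm \
    -v my_node_modules:/from:ro \
    -v "$host_dir":/to \
    alpine sh -c 'apk add --no-cache rsync >/dev/null && rsync -a --delete /from/ /to/'
}
```

The copy still goes through the slow host mount, but it runs out of band, so the application container keeps working against the fast named volume.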

Running Unison between the host and the VM with Docker solved the issue. I don't care that I have all files twice; the performance is worth it.

Has anyone tested the new WSL 2? Is the volume slowness mitigated? Cheers

Initial benchmarking link here: https://github.com/Microsoft/WSL/issues/873#issuecomment-502302818 It seems there is an improvement in I/O, but it is still a beta and needs improvement for heavy CPU operations.

My benchmark WSL 2 container:

root@8018b0f62183:/# dd if=/dev/zero of=test.dat bs=1024 count=100000

100000+0 records in

100000+0 records out

102400000 bytes (102 MB, 98 MiB) copied, 0.468344 s, 219 MB/s

mount a volume:

root@8018b0f62183:/var/www# dd if=/dev/zero of=test.dat bs=1024 count=100000

100000+0 records in

100000+0 records out

102400000 bytes (102 MB, 98 MiB) copied, 21.4534 s, 4.8 MB/s

Still unusable

@er1z - could you share some more info on your setup for Unison? I'm interested in trying this. Did you use a ready-made Unison Docker image? Or are there a lot of manual steps to run?

Any pointers appreciated. Thanks

@biskyt: I have the following setup: a Windows host and an Ubuntu VM on Hyper-V with host-only networking. The IDE runs on Windows and Docker on Ubuntu.

I've downloaded and installed Unison on both. On Windows, I start unison -socket 1234 within the desired directory; on Ubuntu, unison myprofile:

myprofile.prf

root = /workspace

root = socket://192.168.123.1:1234

auto = true

batch = true

group = false

owner = false

silent = true

repeat = watch

Sometimes Unison crashes (especially when updating many small files at once, e.g. on a node_modules install), so it's worth running both under some kind of supervisor/systemd: https://github.com/er1z/oneliners/blob/master/systemd_unison_service

There is some software for Windows which allows you to set up an executable as a service, but I cannot remember the name right now.
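Where systemd or a service wrapper is not available, a plain restart loop can serve as a very small supervisor. The following is only a sketch and is not the linked systemd unit: the `supervise` function, its retry limit, and the `RESTART_DELAY` variable are hypothetical names introduced here.

```shell
#!/bin/sh
# Sketch: rerun a command whenever it fails, pausing between attempts.
# First argument is the maximum number of failed runs (0 = retry forever).
supervise() {
  max_restarts=$1
  shift
  attempts=0
  while :; do
    "$@" && return 0   # a clean exit ends supervision
    attempts=$((attempts + 1))
    if [ "$max_restarts" -gt 0 ] && [ "$attempts" -ge "$max_restarts" ]; then
      echo "giving up after $attempts failed runs" >&2
      return 1
    fi
    sleep "${RESTART_DELAY:-2}"
  done
}
```

On the Ubuntu side this could be started as `supervise 0 unison myprofile`, so that a crash during a large node_modules update simply triggers a new run.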

Thanks @er1z - I'll give that a try.

Also, I have recently tried using the Remote development features of Visual Studio Code, specifically the Remote - SSH option. This has worked very well. From the IDE perspective, there is no noticeable difference from having the code natively on the host. It "just works". And because all git operations are run remotely inside the container, the response is instant.

So as long as you're happy to use VS code as your IDE, then this is a great option.

Note that VS Code also has a "Remote - Containers" option, but this (I assume) would still suffer from the same file access issues we're complaining about, as it mounts the code from a host folder into the container.

I've noticed that Unison approach is faster.

@biskyt @er1z maybe another solution would be to store all files in linux only and access them via explorer.exe . (see https://devblogs.microsoft.com/commandline/wsl-2-is-now-available-in-windows-insiders/). Then you shouldn't need to use unison at all.

@weberdominik how do you edit files in an IDE like JetBrains? The IDE doesn't support the path \\wsl$\Ubuntu\home\

Finally it works fast.

Put files in \\wsl$\Ubuntu\home\user\project

Create symlink mklink /D C:\project \\wsl$\Ubuntu\home\user\project

In container:

root@d8187af6bc11:/var/www# dd if=/dev/zero of=test.dat bs=1024 count=100000

100000+0 records in

100000+0 records out

102400000 bytes (102 MB, 98 MiB) copied, 0.363478 s, 282 MB/s

@dimasnake, but how about IOPS? Bps is not accurate here; it may be even about 10 MiB/s and git checkout will be faster than you posted above.

/remove-lifecycle stale

Sweet, that worked! Yeah, definitely not stale. One of the most annoying issues I've been dealing with from the beginning.

/remove-lifecycle stale

So did anyone find a solution to fix the problem on Windows 10? I've been annoyed by it for a few days.

I'm afraid that this problem won't be solved for at least a few years. Only workarounds are available, unfortunately. I've ended up with a Linux VM and Unison; I tried many ways, but this one is the least painful.

@er1z how about the upcoming WSL2, which comes with Windows 10 version 2004 (year 20, month 04) and should arrive this April?

WSL2 ships an actual Linux kernel and its Linux file system is 5x-10x faster than WSL

@dimasnake mentioned that it works nicely on his end with WSL2.

Shared volumes are still slow in WSL 2, but it's fast when you put the files on WSL itself. It's the best solution for me right now.

> it's fast when you put files on wsl itself.

Yes, it's fast, but indexing files in the IDE is very slow, with high CPU usage while indexing.

I was having similar issues, and binding all volumes from within WSL itself instead of through the Windows mount solved it for me. This is the recommended best practice: https://docs.docker.com/docker-for-windows/wsl/#best-practices .

I'm not experiencing any significant performance differences between a real linux machine and docker for windows anymore.

@Piepongwong, but that assumes you don't need performance on the host. Good luck with IntelliJ re-indexing the project every time you focus the main window…

Expected behavior

File access in volumes should be comparable to access times in non-volumes, similarly to Linux installations of docker

Actual behavior

File access in volumes is many times slower than on non-volumes.

Information

Version: 1.12.3-beta29.2 (8280) Channel: Beta Sha1: 902414df0cea7fdc85b87f0077b0106c3af9f64c Started on: 2016/11/01 21:19:46.408 Resources: C:\Program Files\Docker\Docker\Resources OS: Windows 10 Pro Edition: Professional Id: 1607 Build: 14393 BuildLabName: 14393.351.amd64fre.rs1_release_inmarket.161014-1755

Steps to reproduce the behavior

Get on the commandline of a lightweight docker container

and mount a volume:

It is 9 times slower.