I filed an issue with osxphotos about this here: https://github.com/RhetTbull/osxphotos/issues/121

Open simonw opened 4 years ago

I filed an issue with osxphotos about this here: https://github.com/RhetTbull/osxphotos/issues/121

In https://github.com/RhetTbull/osxphotos/issues/121#issuecomment-623249263 Rhet Turnbull spotted a table called ZSCENEIDENTIFIER which looked like it might have the right data, but the columns in it aren't particularly helpful:

Z_PK,Z_ENT,Z_OPT,ZSCENEIDENTIFIER,ZASSETATTRIBUTES,ZCONFIDENCE

8,49,1,731,5,0.11834716796875

9,49,1,684,6,0.0233648251742125

10,49,1,1702,1,0.026153564453125I love the look of those confidence scores, but what do the numbers mean?

I figured there must be a separate database that Photos uses to store the text of the identified labels.

I used "Open Files and Ports" in Activity Monitor against the Photos app to try and spot candidates... and found /Users/simon/Pictures/Photos Library.photoslibrary/database/search/psi.sqlite - a 53MB SQLite database file.

Here's the schema of that file:

$ sqlite3 psi.sqlite .schema

CREATE TABLE word_embedding(word TEXT, extended_word TEXT, score DOUBLE);

CREATE INDEX word_embedding_index ON word_embedding(word);

CREATE VIRTUAL TABLE word_embedding_prefix USING fts5(extended_word)

/* word_embedding_prefix(extended_word) */;

CREATE TABLE IF NOT EXISTS 'word_embedding_prefix_data'(id INTEGER PRIMARY KEY, block BLOB);

CREATE TABLE IF NOT EXISTS 'word_embedding_prefix_idx'(segid, term, pgno, PRIMARY KEY(segid, term)) WITHOUT ROWID;

CREATE TABLE IF NOT EXISTS 'word_embedding_prefix_content'(id INTEGER PRIMARY KEY, c0);

CREATE TABLE IF NOT EXISTS 'word_embedding_prefix_docsize'(id INTEGER PRIMARY KEY, sz BLOB);

CREATE TABLE IF NOT EXISTS 'word_embedding_prefix_config'(k PRIMARY KEY, v) WITHOUT ROWID;

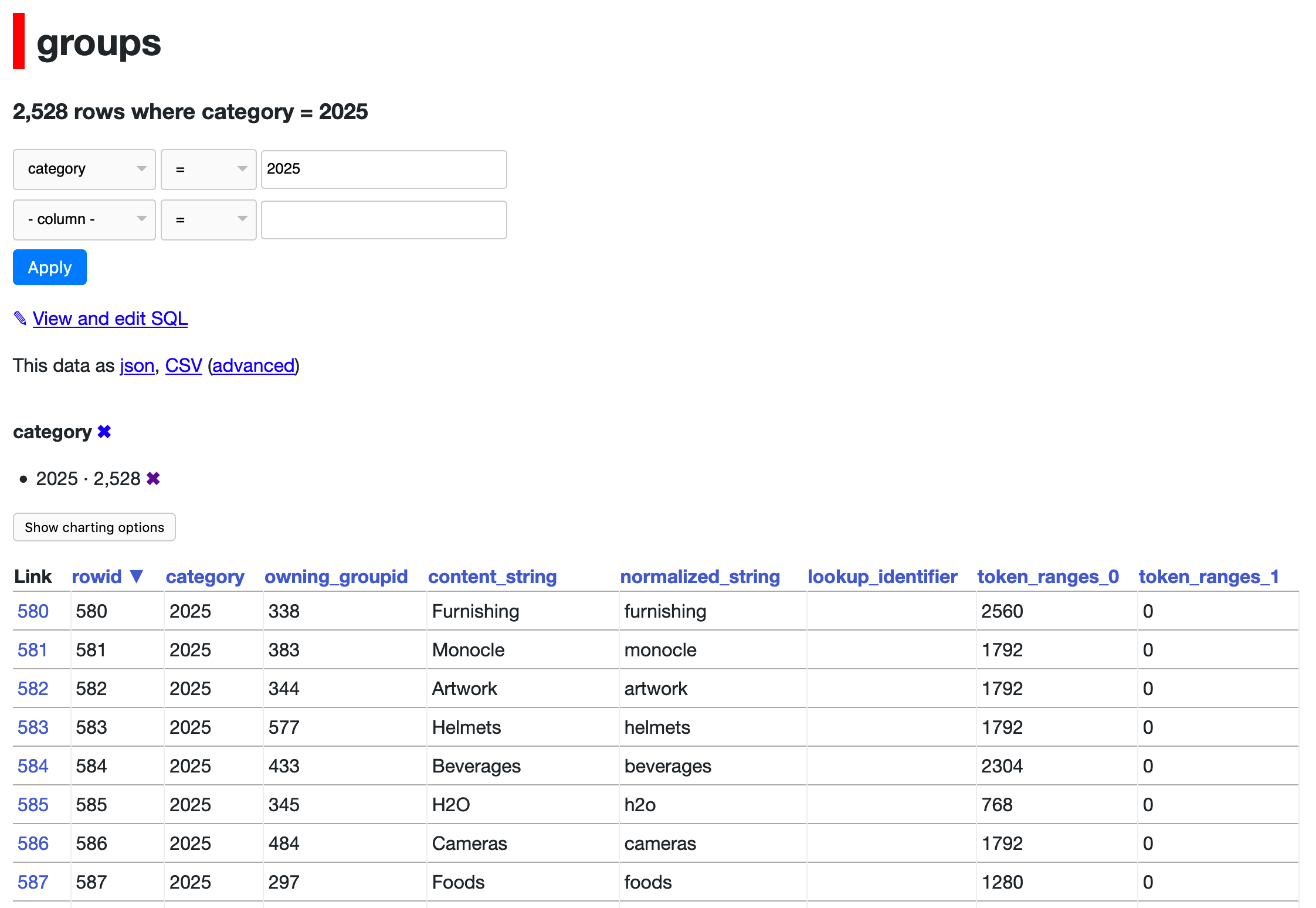

CREATE TABLE groups(category INT2, owning_groupid INT, content_string TEXT, normalized_string TEXT, lookup_identifier TEXT, token_ranges_0 INT8, token_ranges_1 INT8, UNIQUE(category, owning_groupid, content_string, lookup_identifier, token_ranges_0, token_ranges_1));

CREATE TABLE assets(uuid_0 INT, uuid_1 INT, creationDate INT, UNIQUE(uuid_0, uuid_1));

CREATE TABLE ga(groupid INT, assetid INT, PRIMARY KEY(groupid, assetid));

CREATE TABLE collections(uuid_0 INT, uuid_1 INT, startDate INT, endDate INT, title TEXT, subtitle TEXT, keyAssetUUID_0 INT, keyAssetUUID_1 INT, typeAndNumberOfAssets INT32, sortDate DOUBLE, UNIQUE(uuid_0, uuid_1));

CREATE TABLE gc(groupid INT, collectionid INT, PRIMARY KEY(groupid, collectionid));

CREATE VIRTUAL TABLE prefix USING fts5(content='groups', normalized_string, category UNINDEXED, tokenize = 'PSITokenizer');

CREATE TABLE IF NOT EXISTS 'prefix_data'(id INTEGER PRIMARY KEY, block BLOB);

CREATE TABLE IF NOT EXISTS 'prefix_idx'(segid, term, pgno, PRIMARY KEY(segid, term)) WITHOUT ROWID;

CREATE TABLE IF NOT EXISTS 'prefix_docsize'(id INTEGER PRIMARY KEY, sz BLOB);

CREATE TABLE IF NOT EXISTS 'prefix_config'(k PRIMARY KEY, v) WITHOUT ROWID;

CREATE TABLE lookup(identifier TEXT PRIMARY KEY, category INT2);

CREATE TRIGGER trigger_groups_insert AFTER INSERT ON groups BEGIN INSERT INTO prefix(rowid, normalized_string, category) VALUES (new.rowid, new.normalized_string, new.category); END;

CREATE TRIGGER trigger_groups_delete AFTER DELETE ON groups BEGIN INSERT INTO prefix(prefix, rowid, normalized_string, category) VALUES('delete', old.rowid, old.normalized_string, old.category); END;

CREATE INDEX group_pk ON groups(category, content_string, normalized_string, lookup_identifier);

CREATE INDEX asset_pk ON assets(uuid_0, uuid_1);

CREATE INDEX ga_assetid ON ga(assetid, groupid);

CREATE INDEX collection_pk ON collections(uuid_0, uuid_1);

CREATE INDEX gc_collectionid ON gc(collectionid);Running datasette against it directly doesn't work:

simon@Simons-MacBook-Pro search % datasette psi.sqlite

Serve! files=('psi.sqlite',) (immutables=()) on port 8001

Usage: datasette serve [OPTIONS] [FILES]...

Error: Connection to psi.sqlite failed check: no such tokenizer: PSITokenizerInstead, I created a new SQLite database with a copy of some of the key tables, like this:

sqlite-utils rows psi.sqlite groups | sqlite-utils insert /tmp/search.db groups -

sqlite-utils rows psi.sqlite assets | sqlite-utils insert /tmp/search.db assets -

sqlite-utils rows psi.sqlite ga | sqlite-utils insert /tmp/search.db ga -

sqlite-utils rows psi.sqlite collections | sqlite-utils insert /tmp/search.db collections -

sqlite-utils rows psi.sqlite gc | sqlite-utils insert /tmp/search.db gc -

sqlite-utils rows psi.sqlite lookup | sqlite-utils insert /tmp/search.db lookup -I'm pretty sure this is what I'm after. The groups table has what looks like identified labels in the rows with category = 2025:

Then there's a ga table that maps groups to assets:

And an assets table which looks like it has one row for every one of my photos:

One major challenge: these UUIDs are split into two integer numbers, uuid_0 and uuid_1 - but the main photos database uses regular UUIDs like this:

I need to figure out how to match up these two different UUID representations. I asked on Twitter if anyone has any ideas: https://twitter.com/simonw/status/1257500689019703296

Here's how to convert two integers unto a UUID using Java. Not sure if it's the solution I need though (or how to do the same thing in Python):

https://repl.it/repls/EuphoricSomberClasslibrary

import java.util.UUID;

class Main {

public static void main(String[] args) {

java.util.UUID uuid = new java.util.UUID(

2544182952487526660L,

-3640314103732024685L

);

System.out.println(

uuid

);

}

}I'm traveling w/o access to my Mac so can't help with any code right now. I suspected ZSCENEIDENTIFIER was a foreign key into one of these psi.sqlite tables. But looks like you're on to something connecting groups to assets. As for the UUID, I think there's two ints because each is 64-bits but UUIDs are 128-bits. Thus they need to be combined to get the 128 bit UUID. You might be able to use Apple's NSUUID, for example, by wrapping with pyObjC. Here's one example of using this in PyObjC's test suite. Interesting it's stored this way instead of a UUIDString as in Photos.sqlite. Perhaps it for faster indexing.

This function seems to convert them into UUIDs that match my photos:

def to_uuid(uuid_0, uuid_1):

b = uuid_0.to_bytes(8, 'little', signed=True) + uuid_1.to_bytes(8, 'little', signed=True)

return str(uuid.UUID(bytes=b)).upper()Things were not matching up for me correctly:

I think that's because my import script didn't correctly import the existing rowid values.

Trying this import mechanism instead:

sqlite3 /Users/simon/Pictures/Photos\ Library.photoslibrary/database/search/psi.sqlite .dump | grep -v 'CREATE INDEX' | grep -v 'CREATE TRIGGER' | grep -v 'CREATE VIRTUAL TABLE' | sqlite3 search.db

Even that didn't work - it didn't copy across the rowid values. I'm pretty sure that's what's wrong here:

sqlite3 /Users/simon/Pictures/Photos\ Library.photoslibrary/database/search/psi.sqlite 'select rowid, uuid_0, uuid_1 from assets limit 10'

1619605|-9205353363298198838|4814875488794983828

1641378|-9205348195631362269|390804289838822030

1634974|-9205331524553603243|-3834026796261633148

1619083|-9205326176986145401|7563404215614709654

22131|-9205315724827218763|8370531509591906734

1645633|-9205247376092758131|-1311540150497601346Yes! Turning those rowid values into id with this script did the job:

import sqlite3

import sqlite_utils

conn = sqlite3.connect(

"/Users/simon/Pictures/Photos Library.photoslibrary/database/search/psi.sqlite"

)

def all_rows(table):

result = conn.execute("select rowid as id, * from {}".format(table))

cols = [c[0] for c in result.description]

for row in result.fetchall():

yield dict(zip(cols, row))

if __name__ == "__main__":

db = sqlite_utils.Database("psi_copy.db")

for table in ("assets", "collections", "ga", "gc", "groups"):

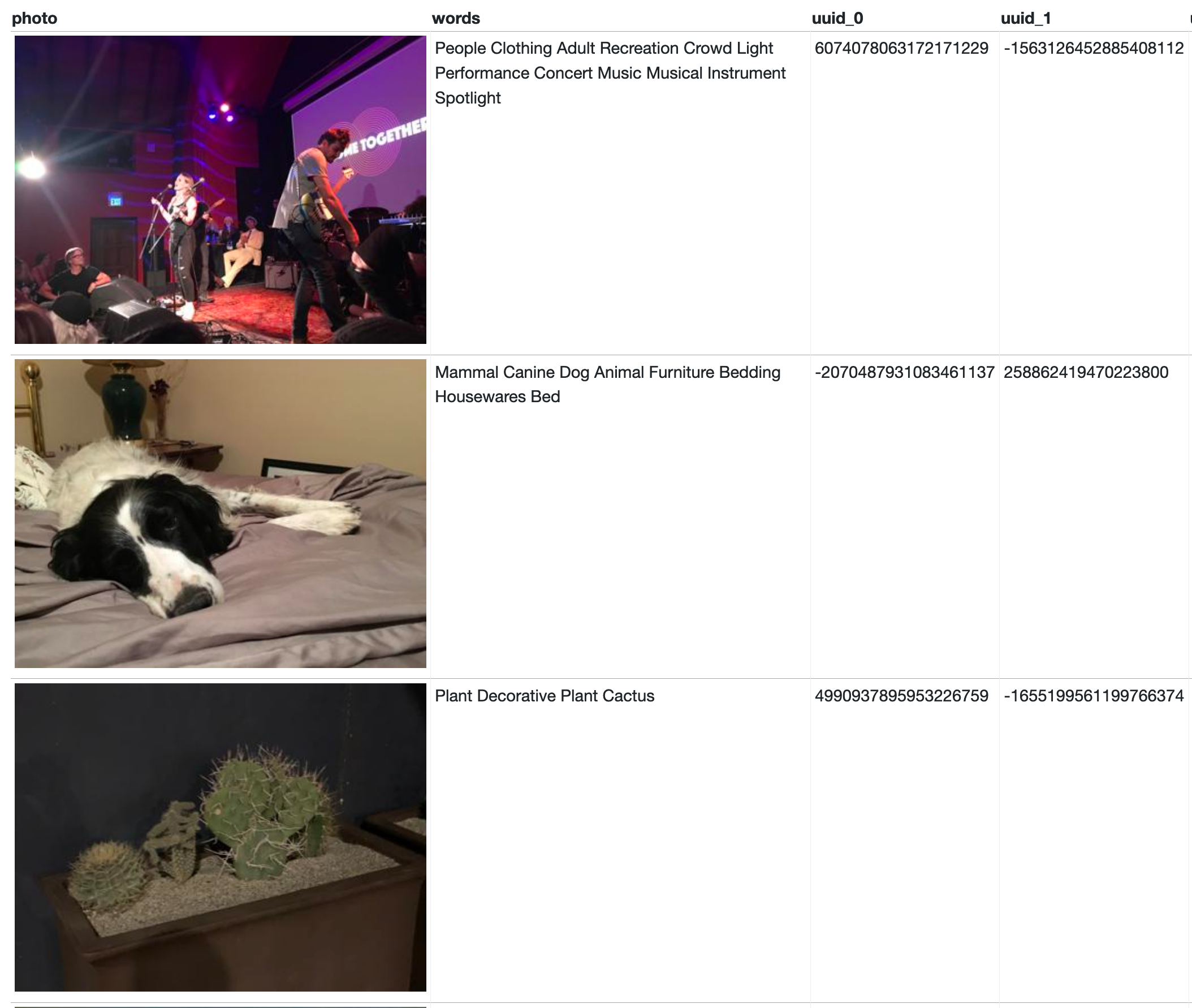

db[table].upsert_all(all_rows(table), pk="id", alter=True)Then I ran this query:

select

json_object('img_src', 'https://photos.simonwillison.net/i/' || photos.sha256 || '.' || photos.ext || '?w=400') as photo,

group_concat(strip_null_chars(groups.content_string), ' ') as words, assets.uuid_0, assets.uuid_1, to_uuid(assets.uuid_0, assets.uuid_1) as uuid

from assets join ga on assets.id = ga.assetid

join groups on ga.groupid = groups.id

join photos on photos.uuid = to_uuid(assets.uuid_0, assets.uuid_1)

where groups.category = 2024

group by assets.id

order by random() limit 10And got these results!

It looks like groups.content_string often has a null byte in it. I should clean this up as part of the import.

Follow-on from #1. Apple Photos runs some very sophisticated machine learning on-device to figure out if photos are of dogs, llamas and so on. I really want to extract those labels out into my own database.