@YamamotoSayaka did you manage to solve the issue? I am facing the same problem. @dontLoveBugs

Closed YamamotoSayaka closed 5 years ago

@YamamotoSayaka did you manage to solve the issue? I am facing the same problem. @dontLoveBugs

Hi @VasilisGks, I think the problem here is that the whole dataset from nyuv2 is required for training. the mat file only provides part of the training samples ( around 600 samples? ) and is not enough. In the original paper, the author uses around 12k unique frames, it's much larger than the mat file.

And btw, this is one ReLU missing in the final step, it might lead to negative values during training.

Yes, nyu data is difficult to get. You can use kitti dataset.

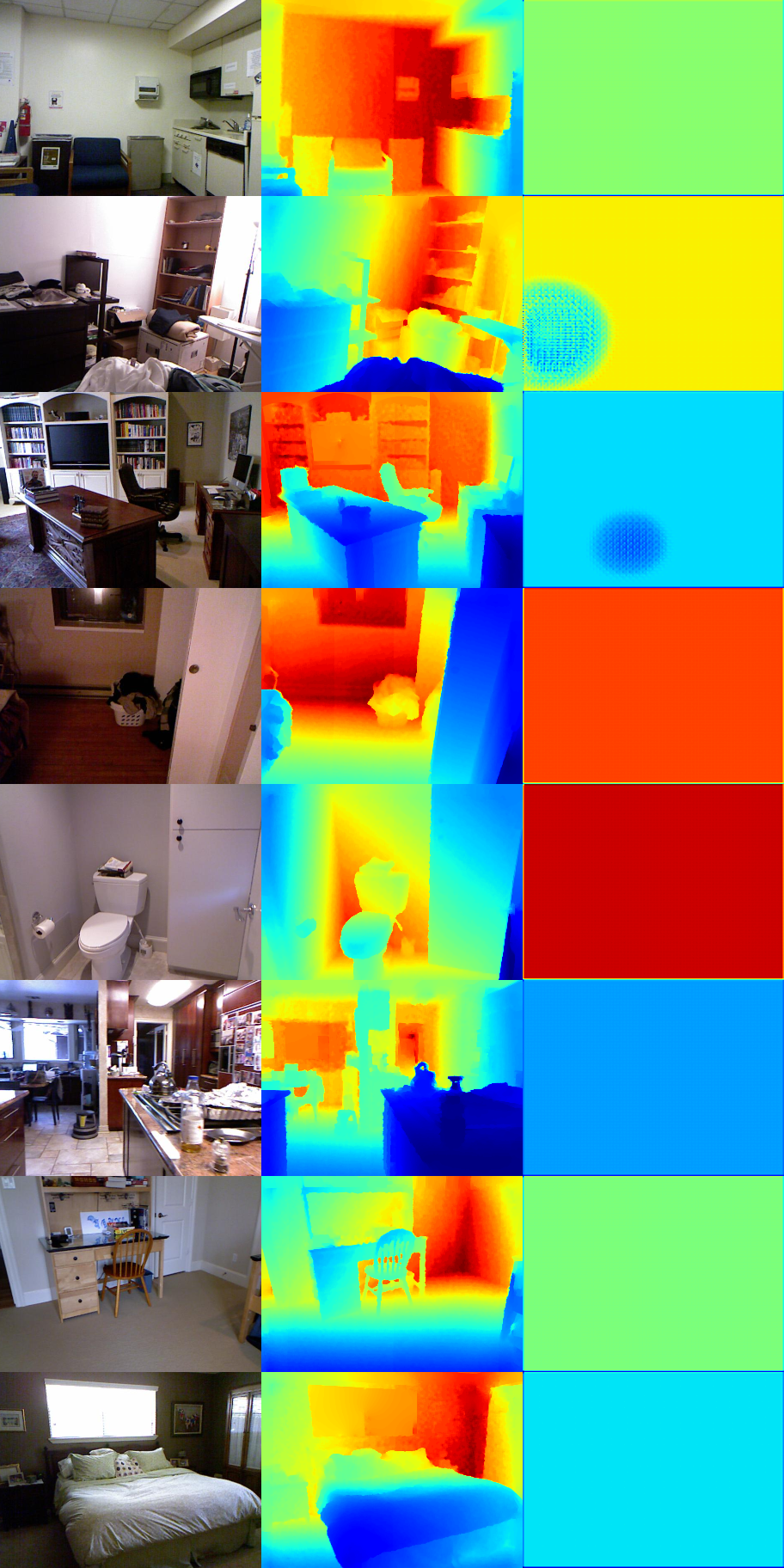

Hi, I followed #2 and prepared the nyu dataset, but my perfomance was bad and the loss never went down. Here's my train log and config file. Basically, I just followed your settings and change num of epochs to 100. This is my final result

I tried to load your pretrained model and evaluate the result, I got a similar perfomance with yours.

So I thought the problem might be with the optimizer in training? However, in your pretrained model, the optimizer is SGD ( Parameter Group 0 dampening: 0 lr: 1.0000000000000006e-14 momentum: 0.9 nesterov: False weight_decay: 0.0001 ) However, in the code you provided, the optimizer at epoch 60 is

SGD ( Parameter Group 0 dampening: 0 lr: 1.0000000000000004e-08 momentum: 0.9 nesterov: False weight_decay: 0.0001

Parameter Group 1 dampening: 0 lr: 1.0000000000000005e-08 momentum: 0.9 nesterov: False weight_decay: 0.0001 ) Can this be the problem?

I'm using python3.6 with pytorch0.4.1 with one single M40 GPU. Any ideas? @dontLoveBugs