We're having similar issues. It happened out of the blue last week and then again this morning. Suddenly these timeouts start piling up, and our DTUs spike to 100% and stay there.

- .NET Core 2.2
- Linux instances inside AKS
- Azure SQL
- Our timeouts are set to 90 seconds
- We use Dapper
- We were on Microsoft.Data.SqlClient 2.1.1 this morning. While the problem was happening we upgraded to 2.1.2, but nothing changed.
- Last week we tried disabling MARS, but that broke everything, so we rolled it back immediately.

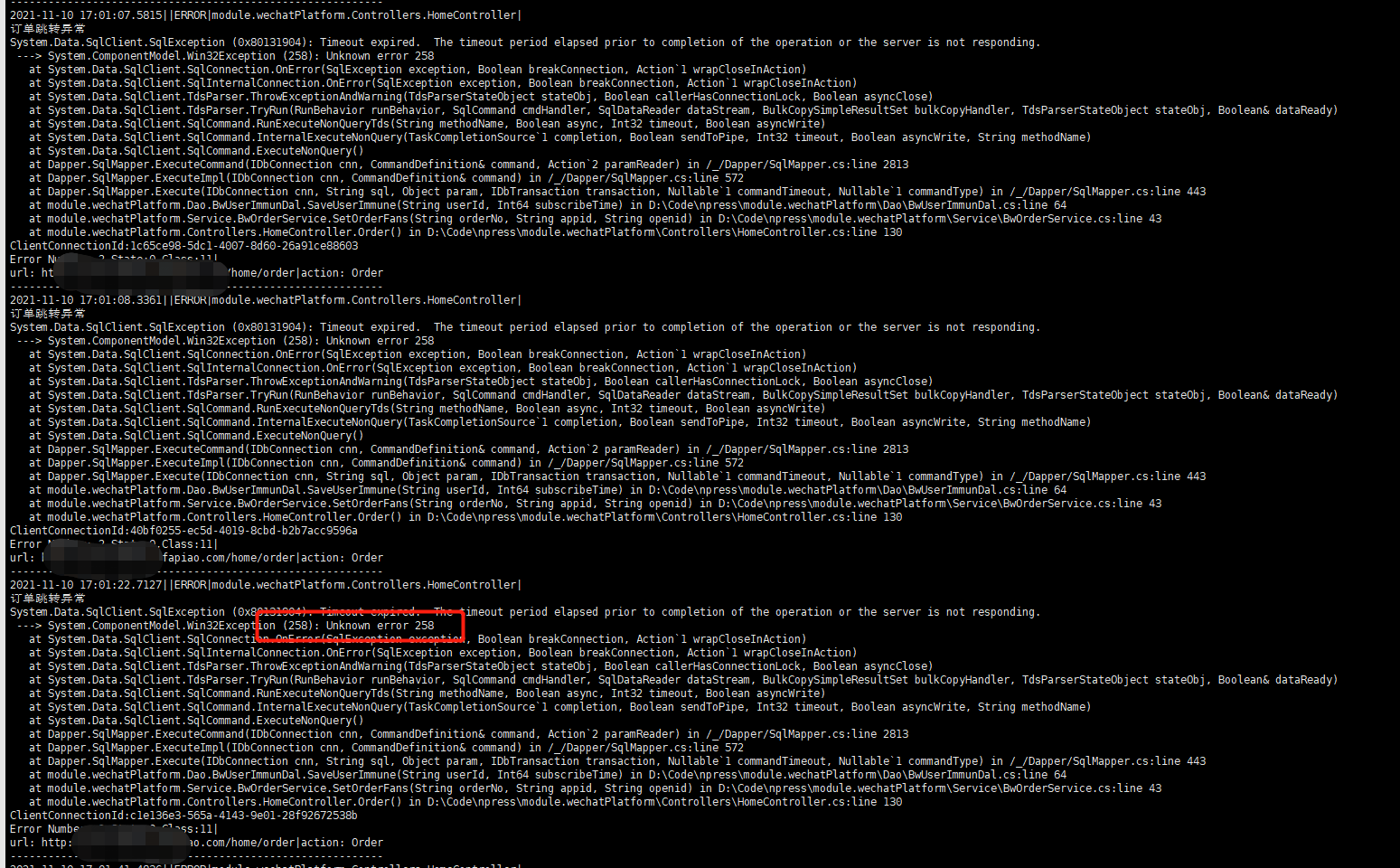

Unfortunately, the only thing that seems to fix this for us is manually scaling up our tier in Azure SQL. We normally run at P4, so we bump it to the next tier (P6) and then it's fine, even if we immediately scale back down to P4. It's as if something just gets "stuck" and there is no way to remedy it without scaling the database. That's painful, because there's no telling when it will happen again. Here's a sample stack trace:

```
Execution Timeout Expired. The timeout period elapsed prior to completion of the operation or the server is not responding.
   at Microsoft.Data.SqlClient.SqlCommand.<>c.b__188_0(Task`1 result)
   at System.Threading.Tasks.ContinuationResultTaskFromResultTask`2.InnerInvoke()
   at System.Threading.ExecutionContext.RunInternal(ExecutionContext executionContext, ContextCallback callback, Object state)
--- End of stack trace from previous location where exception was thrown ---
   at System.Threading.Tasks.Task.ExecuteWithThreadLocal(Task& currentTaskSlot)
--- End of stack trace from previous location where exception was thrown ---
   at Dapper.SqlMapper.QueryMultipleAsync(IDbConnection cnn, CommandDefinition command) in /_/Dapper/SqlMapper.Async.cs:line 1013
   at Data.BaseDB.QueryMultipleAsync(String sql, Object param, Boolean readReplica, Nullable`1 commandTimeout) in /src/src/Data/BaseDB.cs:line 77
   at Data.ReportsDB.PaymentMethodBreakdownAsync(String clientKey, DateTime startDate, DateTime endDate, List`1 events, List`1 paymentMethods) in /src/src/Data/ReportsDB.cs:line 728

Execution Timeout Expired. The timeout period elapsed prior to completion of the operation or the server is not responding.
Unknown error 258
```

Here's an example of the DTUs: everything is trucking along normally, then it starts to go nuts, and around the time in the morning when business starts picking up we're at 100% until we finally bounce the tier. After that it more or less goes back to normal 😖
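In case it helps anyone compare graphs: Azure's documentation derives the DTU percentage from `sys.dm_db_resource_stats` as the maximum of `avg_cpu_percent`, `avg_data_io_percent` and `avg_log_write_percent`. Below is a rough sketch, assuming you've exported those rows (one per 15-second sample) into a list. The `ResourceSample` type, the function names and the 99% threshold are made up for illustration and are not part of any Azure API. The sketch reports which resource is pinning the DTU in each sample and how long the longest saturated stretch lasts.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class ResourceSample:
    """One row exported from sys.dm_db_resource_stats (hypothetical export format)."""
    end_time: str
    avg_cpu_percent: float
    avg_data_io_percent: float
    avg_log_write_percent: float


def dtu_percent(sample: ResourceSample) -> float:
    # DTU % is the highest of the three resource percentages.
    return max(sample.avg_cpu_percent,
               sample.avg_data_io_percent,
               sample.avg_log_write_percent)


def dominant_resource(sample: ResourceSample) -> str:
    # Name of the resource that sets the DTU % for this sample
    # (ties resolve to the first name in the dict order below).
    values = {
        "cpu": sample.avg_cpu_percent,
        "data_io": sample.avg_data_io_percent,
        "log_write": sample.avg_log_write_percent,
    }
    return max(values, key=values.get)


def longest_saturated_run(samples: List[ResourceSample],
                          threshold: float = 99.0) -> Tuple[int, int]:
    """Return (start_index, length) of the longest consecutive run of samples
    whose DTU % is at or above `threshold`; (-1, 0) if there is none."""
    best_start, best_len = -1, 0
    cur_start, cur_len = -1, 0
    for i, sample in enumerate(samples):
        if dtu_percent(sample) >= threshold:
            if cur_len == 0:
                cur_start = i  # a new saturated run begins here
            cur_len += 1
            if cur_len > best_len:
                best_start, best_len = cur_start, cur_len
        else:
            cur_len = 0  # run broken by a non-saturated sample
    return best_start, best_len


if __name__ == "__main__":
    samples = [
        ResourceSample("08:00:00", 20.0, 30.0, 10.0),
        ResourceSample("08:00:15", 100.0, 40.0, 5.0),
        ResourceSample("08:00:30", 60.0, 100.0, 5.0),
        ResourceSample("08:00:45", 50.0, 20.0, 10.0),
    ]
    start, length = longest_saturated_run(samples)
    print(samples[start].end_time, length)  # 08:00:15 2
    print([dominant_resource(s) for s in samples])
```

Knowing whether CPU, data IO or log write is the component stuck at 100% might help narrow things down when talking to support.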

We've also opened a ticket with Azure support to see whether they can help diagnose it from their end.

Describe the bug

When executing SQL such as

```sql
SELECT FieldA, FieldB FROM A INNER JOIN C ON A.FieldId = C.FieldId
UNION
SELECT FieldA, FieldD FROM A INNER JOIN D ON A.FieldId = D.FieldId
```

it throws an error like the one below. It doesn't happen every time; only a small fraction of queries hit this issue.

To reproduce

Sorry, I currently can't reproduce this in a local environment, so I can't provide more detailed reproduction steps.

Expected behavior

SQL should execute successfully every time.

Further technical details

- Microsoft.Data.SqlClient version: 1.1.3
- .NET target: .NET Core 3.1
- Operating system: Docker container

What I found/tried

https://stackoverflow.com/questions/57270245/sql-server-dbcommand-timeout-with-net-core-container-under-load

https://github.com/StackExchange/Dapper/issues/1435