Open andrasfuchs opened 2 years ago

I suspect it's because the trial is still running even after that AutoML cell has finished. Somehow AutoMLExperiment doesn't always succeed in cancelling the last running trial.

We probably also need to clean up some things in our NotebookMonitor; it could be holding references to a lot of things.

@andrasfuchs if you "restart kernel", does it free up the memory for you?

I'll dig more to see if I can find anything.

@JakeRadMSFT How can I restart the kernel?

@andrasfuchs if you're using the latest Notebook Editor extension, there is a restart button in the notebook toolbar.

I tried it again today, but after a "Run All", it got crazy again, eating up the memory and closing other running processes.

The critical part got terminated with an exception.

The memory was not freed up after the exception, so I had to close the Visual Studio process manually. I had no chance to test the kernel restart.

@LittleLittleCloud thoughts here?

I was thinking there are some places where we forget to clear trial results and release memory (like holding all models in memory), but I didn't see the memory go up while training. So now I suspect the crazy memory usage is caused by the LightGbm trainer, which can allocate memory badly, especially when the search space gets big.

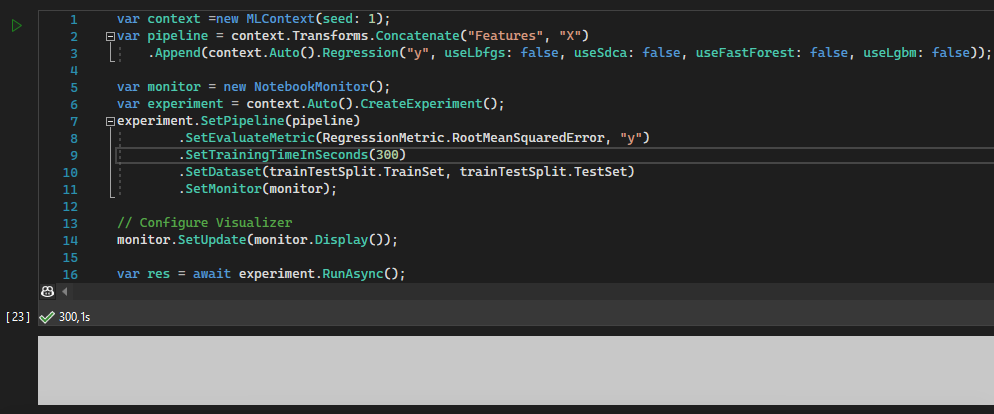

@andrasfuchs Can you try disabling the LightGbm trainer by setting `useLgbm: false` next to `useSdca: false` in the following code snippet, and then try the notebook again?

And @JakeRadMSFT, maybe it would be helpful to add a system monitor section alongside the trial monitor?

I got the gray rectangles instead of the results, but the memory problem seems to be better if I use `useLgbm: false`.

10+ GBs of RAM usage is still a lot, I think...

...and this memory is not freed up after the notebook run was completed.

The Training and AutoML notebook can consume a lot of memory, causing other processes to hang or crash.

Strangely enough, it usually works fine if you run the notebook only once. So to reproduce the problem, you should: