Does something like rewriting LINQ as loops fit into a process like this?

Open benaadams opened 7 years ago

Does something like rewriting LINQ as loops fit into a process like this?

Speaking of optimizations,

    int square(int num) {
        int a = 0;
        for (int x = 0; x < num; x += 2) {
            if (x % 2) {
                a += x;
            }
        }
        return a;
    }

gcc changes the above function to return zero. 😄

And while we're at it, maybe constexpr can be taken into account, when and if it ever gets implemented. 😄

I don't know, but maybe this is the right phase for handling constexpr. Just a thought.

I think IL rewrite and constexpr are two separate things.

@fanoI They are two different things, but what led me to write this is really this part:

a "publish" compile?

Maybe during debug compilation the compiler won't evaluate functions as constexpr, to reduce compilation time, and then when you do a publish or release build it evaluates them at compile-time.

Just because they are two different things doesn't mean it's not the right phase to handle it.

Part of the challenge in building an IL-IL optimizer is that in general the scope of optimizations is limited to a method or perhaps a single assembly. One can do some cool things at this scope but quite often the code one would like to optimize is scattered across many methods and many assemblies.

To broaden the scope, you can gather all the relevant assemblies together sometime well before execution and optimize them all together. But by doing this you inevitably build in strong dependence on the exact versions of the assemblies involved, and so the resulting set of optimized IL assemblies must now be deployed and versioned and serviced together. For some app models this is a natural fit; for others it is not.

Engineering challenges abound. @mikedn touched on some of them over in #15644, but there are many more. Just to touch on a few:

I'm not saying that building an IL-IL optimizer is a bad idea, mind you -- but building one that is broadly applicable is a major undertaking.

@AndyAyersMS

To broaden the scope, you can gather all the relevant assemblies together sometime well before execution and optimize them all together. But by doing this you inevitably build in strong dependence on the exact versions of the assemblies involved, and so the resulting set of optimized IL assemblies must now be deployed and versioned and serviced together. For some app models this is a natural fit; for others it is not.

In C++ we face the exact same problem, don't we? static linking vs dynamic linking? there's trade-offs and yet many people choose to have static linking for their applications.

All this can be part of a compiler flag, I guess it needs to be an opt-in feature.

In C++ we face the exact same problem, don't we? static linking vs dynamic linking? there's trade-offs and yet many people choose to have static linking for their applications.

What problem? C++ has headers and many C++ libraries are header only, starting with the C++ standard library (with a few exceptions). Besides, many used static linking for convenience, not for performance reasons. And static linking is certainly not the norm, for example you can find many games where the code is spread across many dlls.

All this can be part of a compiler flag, I guess it needs to be an opt-in feature.

Such a feature is being worked on. It's called corert.

@mikedn

What problem? C++ has headers and many C++ libraries are header only, starting with the C++ standard library (with a few exceptions). Besides, many used static linking for convenience, not for performance reasons. And static linking is certainly not the norm, for example you can find many games where the code is spread across many dlls.

I think you missed my point... my point is that people who do choose static linking face the following problems, which he mentions in his post:

strong dependence on the exact versions of the assemblies involved

deployed and versioned and serviced together

It certainly isn't the norm for games but for many other applications static linking is fairly common.

I wasn't speaking about performance at all, and it wasn't my intention to imply that the analogy has anything to do with the discussed feature itself. I merely wanted to point out that in the C++ world some people accept these trade-offs, and if people do that out of convenience then they would surely do it if it can increase performance, especially in the .NET world. They might pay the price for bundling everything together, but they will get optimized code, which might be worth it for them.

Such a feature is being worked on. It's called corert.

As far as I understand CoreRT is mostly designed for AOT scenarios and the emitted code isn't really IL, this isn't part of Roslyn and there's no compiler flag so how is it related? or as you said being worked on? maybe I'm missing something.

I think you missed my point...

but merely to point out that in the C++ world some people would accept these trade-offs and if people do that out of convenience

Could be. It's somewhat unavoidable when apples and oranges comparisons are used. The C++ world is rather different from the .NET world and attempts to make decisions in one world based on what happens in the other may very well result in failure.

then they would surely do it if it can increase performance especially in the .NET world so they might pay the price for bundling everything together but they will get optimized code which might be worth it for them

Why do you think that bundling everything together would increase performance?

Do you even know that you have all the code after bundling? No, there's always Assembly.Load & friends.

Can you transform a virtual call into a direct call because you can see that no class has overridden the called method? No, you can't because someone may create a class at runtime and override that method.

Can you look at all the List<T> code, conclude that count <= array.Length is always true and eliminate a range check? No, you can't because someone might use reflection to set the count field and break the invariant.
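To make the reflection point concrete, here is a minimal sketch. `TinyList` and `InvariantDemo` are hypothetical types invented for illustration (not the real `List<T>`). Every method of `TinyList` keeps `count <= items.Length`, yet a caller using reflection can still break that invariant, which is why an optimizer cannot rely on it by reading the class alone.

```csharp
using System;
using System.Reflection;

// Hypothetical stand-in for List<T>: all of its own code keeps count <= items.Length.
public sealed class TinyList
{
    private int[] items = new int[4];
    private int count;

    public int Count => count;
    public int Capacity => items.Length;

    public void Add(int value)
    {
        // Grow the backing array before it overflows, preserving the invariant.
        if (count == items.Length)
            Array.Resize(ref items, items.Length * 2);
        items[count++] = value;
    }
}

public static class InvariantDemo
{
    // Overwrites the private "count" field from outside the class via reflection.
    public static void ForceCount(TinyList list, int newCount)
    {
        FieldInfo field = typeof(TinyList).GetField(
            "count", BindingFlags.Instance | BindingFlags.NonPublic);
        if (field == null)
            throw new InvalidOperationException("Field 'count' not found.");
        field.SetValue(list, newCount);
    }
}
```

After `InvariantDemo.ForceCount(list, 100)`, `list.Count` is larger than `list.Capacity`, so a range check removed on the strength of the invariant would no longer be safe.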

As far as I understand CoreRT is mostly designed for AOT scenarios and the emitted code isn't really IL, this isn't part of Roslyn and there's no compiler flag so how is it related? or as you said being worked on? maybe I'm missing something.

Well, do you want better compiler optimizations? Are you willing to merge all the code into a single binary and give up runtime code loading? Are you willing to give up runtime code generation? Are you willing to give up full reflection functionality? Then it sounds to me that CoreRT is exactly what you're looking for.

Do you want it to emit IL? Why would you want that? I thought you wanted performance. Do you want it to be part of Roslyn as a compiler flag? Again, I thought you wanted performance.

The problem with this proposal is that it is extremely nebulous. Most people just want better performance and don't care how they get it. Some propose that we might get better performance by doing certain things but nobody knows exactly what and how much performance we can get by doing that.

And then we have the Roslyn team, who had to cut a lot of stuff from C# 7 to deliver it. And the JIT team, who also have a lot of work to do. And the CoreRT team, which for whatever reason moves rather slowly. And the proposition is to embark on this new nebulous project that overlaps existing projects and delivers unknowns. Hmm...

@mikedn

Could be. It's somewhat unavoidable when apples and oranges comparisons are used. The C++ world is rather different from the .NET world and attempts to make decisions in one world based on what happens in the other may very well result in failure.

Okay, first of all I didn't just compare two worlds. I made an analogy between two worlds, and I merely pointed out that people choose one thing over the other regardless of the trade-offs in one world, so they might do the same in a different world where the impact might be greater...

Why do you think that bundling everything together would increase performance?

I never said it will increase performance! I said that if it can increase performance then people will use it just for this fact alone and will accept the trade-offs he pointed out in his post.

I'm not an expert on the subject so I'm not going to pretend like my words are made of steel but based on what @AndyAyersMS wrote it seems like bundling assembly can eliminate some challenges.

By everything I only refer to the assemblies you own not 3rd-party ones.

Do you even know that you have all the code after bundling? No, there's always Assembly.Load & friends.

No, I don't know.

Well, do you want better compiler optimizations? Are you willing to merge all the code into a single binary and give up runtime code loading? Are you willing to give up runtime code generation? Are you willing to give up full reflection functionality?

ATM I don't know what the trade-offs would be. There's no proposal about it or design work that delves into limitations, so how did you decide what the limitations for such a feature would be?

Then it sounds to me that CoreRT is exactly what you're looking for.

Maybe... but I don't think so.

Do you want it to emit IL? Why would you want that? I thought you wanted performance. Do you want it to be part of Roslyn as a compiler flag? Again, I thought you wanted performance.

Just because I want to optimize existing IL for performance to reduce run-time overheads, does it mean that I now want to compile it to native code?

The problem with this proposal is that it is extremely nebulous. Most people just want better performance and don't care how they get it. Some propose that we might get better performance by doing certain things but nobody knows exactly what and how much performance we can get by doing that.

I agree but how would you get to something without discussing it first? don't you think we need to have an open discussion about it? and what we really want to get out of it?

This isn't really a proposal, this is the reason it was marked as Discussion.

And then we have the Roslyn team, who had to cut a lot of stuff from C# 7 to deliver it. And the JIT team, who also have a lot of work to do. And the CoreRT team, which for whatever reason moves rather slowly. And the proposition is to embark on this new nebulous project that overlaps existing projects and delivers unknowns. Hmm...

You're right but I don't think it's our job as a community to get into their schedule, we can still discuss things and make proposals without stepping into their shoes, when and if these things will see the light of day, that's a different story so to that I'd say patience is a virtue.

@eyalsk we're always happy to take in feedback and consider new ideas. And yes, we have a lot of work to do, but we also have the responsibility to continually evaluate whether we're working on the right things and adapt and adjust. So please continue to raise issues and make proposals. We do read and think about these things.

I personally find it easier to kickstart a healthy discussion by working from the specific towards the general. When someone brings concrete ideas for areas where we can change or improve, or points out examples from existing code, we are more readily able to brainstorm about how to best approach making things better. When we see something broad and general we can end up talking past one another as broad proposals often create more questions than answers.

The discussion here has perhaps generalized a bit too quickly and might benefit from turning back to discussing specifics for a while. So I'm curious what you have in mind for a constexpr-type optimization, and if you see examples today where you think we should improve.

Likewise for some of the other things mentioned above: linq rewriting, things too expensive to do at jit time, etc....

@AndyAyersMS

The discussion here has perhaps generalized a bit too quickly and might benefit from turning back to discussing specifics for a while. So I'm curious what you have in mind for a constexpr-type optimization, and if you see examples today where you think we should improve.

I'm still thinking about constexpr and specific scenarios that would make sense in C#. I started a discussion about it in #15079, but the discussion hasn't yielded anything specific just yet.

Personally, I want constexpr mostly for some mathematical functions, or more generally pure functions that can be evaluated at compile-time. I already made a proposal for raising a number to a power, so maybe it's worth adding constexpr rather than adding language-specific operators for it like **.

I never said it will increase performance! I said that if it can increase performance then people will use it just for this fact alone and will accept the trade-offs he pointed out in his post.

Well, it was a rhetorical question.

I'm not an expert on the subject so I'm not going to pretend like my words are made of steel but based on what @AndyAyersMS wrote it seems like bundling assembly can eliminate some challenges. By everything I only refer to the assemblies you own not 3rd-party ones.

Some but not all. As already mentioned, even if you have all the code there are problems with reflection and runtime code generation. And if you limit yourself only to your own code then the optimization possibilities will likely be even more limited.

I agree but how would you get to something without discussing it first?

The trouble is that I have no idea what we are discussing. As I already said, this whole thing is nebulous.

Personally, I want constexpr mostly for some mathematical functions or more generally pure functions that can be evaluated at compile-time,

Dragging constexpr into this discussion only serves to muddy the waters. Constexpr, as defined in C++, has little to do with performance. C++ got constexpr because it has certain contexts where compile time evaluation is required - e.g. array sizes, attributes, template non-type arguments etc. C# too has such contexts (e.g. attributes, const) but fewer and in particular it lacks template non-type arguments and more generally meta-programming.

It may be useful to be able to write [DefaultValue(Math.Cos(42.0))] but what is being discussed here has nothing to do with that. C++ like constexpr needs to be implemented by the C# compiler, it cannot be done by some sort of IL rewriter.
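As a small illustration of the "contexts where compile time evaluation is required" point, the sketch below shows what a C# attribute argument accepts today. `Settings` and `ConstDemo` are hypothetical names used only for this example. A folded constant expression is accepted, while the `Math.Cos` call from the comment above is rejected by the compiler, so it is left commented out.

```csharp
using System;
using System.ComponentModel;
using System.Reflection;

public class Settings
{
    // Constant folding: the compiler evaluates 2.0 * 21.0 itself, so this is a valid const.
    public const double Folded = 2.0 * 21.0;

    // A const is allowed as an attribute argument.
    [DefaultValue(Folded)]
    public double Angle { get; set; }

    // Not allowed today: a method call is not a constant expression (compiler error CS0182).
    // [DefaultValue(Math.Cos(42.0))]
    // public double Cosine { get; set; }
}

public static class ConstDemo
{
    // Reads the value the compiler baked into the attribute metadata.
    public static object ReadDefault()
    {
        PropertyInfo property = typeof(Settings).GetProperty(nameof(Settings.Angle));
        DefaultValueAttribute attribute = property.GetCustomAttribute<DefaultValueAttribute>();
        return attribute.Value;
    }
}
```

The value has to exist when the metadata is written, which is why this kind of evaluation belongs in the C# compiler rather than in a later IL rewriting step.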

The scenario I'm thinking of in particular

ASP.NET app publish

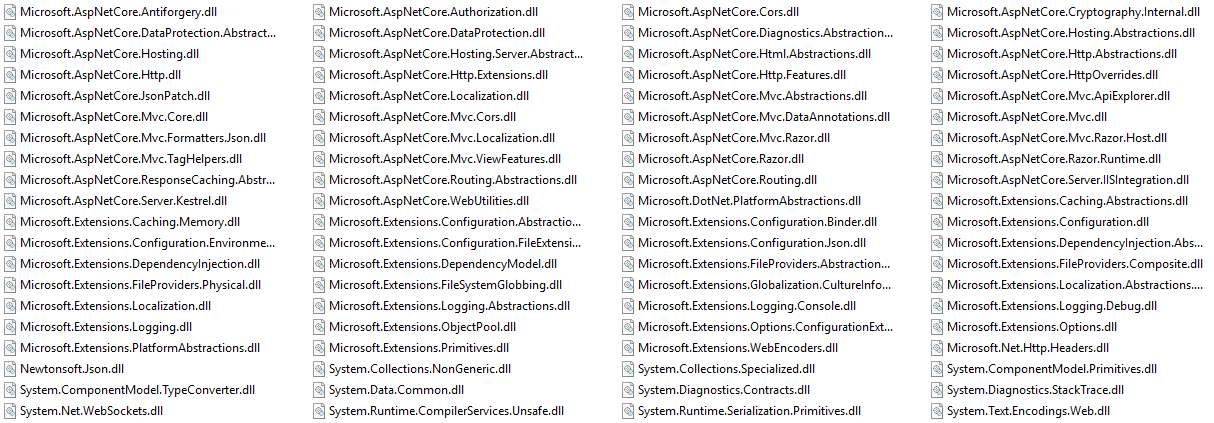

- publish (e.g. pre-compiling MVC views; rather than runtime compile to il).
- publish (as per current publish step)
- publish <- this step

It would be a partial link step of everything above .NET Standard/Platform Runtime; e.g. app+dependencies, which is still quite a lot of things e.g. these are the ASP.NET dependencies brought into the publish directory:

So it would still use platform/global versioned .NET Standard dlls (mscorlib and friends) with PGO compiled System.Private.CoreLib (as now when not doing standalone app); and allow for emergency patching at that level; but link and optimize your local libraries.

Sort of the inverse of what Xamarin does for Android and iOS; where it focuses on mscorlib (discarding unused code), then optionally your own libraries.

So you'd end up with a single dll + pdb for deployment of your app; that would remain platform independent and portable; and be jitted at runtime. With windows or linux, 32 bit or 64 bit remaining a deferred choice and operational decision rather than a pre-baked developer decision.

Then it sounds to me that CoreRT is exactly what you're looking for.

No, because I want the benefits the runtime Jit provides. I want Vector acceleration enabled for the specific CPU architecture it's deployed on, I want runtime readonly statics converted to consts and branch elimination because of it; etc... I want a portable cross platform executable etc...

So you'd end up with a single dll + pdb for deployment of your app

So far this sounds just like an ordinary IL merge tool.

and be jitted at runtime

Why at runtime? What's wrong with crossgen?

No, because I want the benefits the runtime Jit provides. I want Vector acceleration enabled for the specific CPU architecture it's deployed on, I want runtime readonly statics converted to consts and branch elimination because of it; etc... I want a portable cross platform executable etc...

Why not add such missing features to CoreRT/Crossgen?

So far this sounds just like an ordinary IL merge tool.

And this is the point: I'm suggesting that optimizations can be made that Roslyn won't (or can't) do and the jit can't do. Either due to lack of whole program view or time.

Why at runtime? What's wrong with crossgen?

Crossgen doesn't work for VM deployed apps which can be resized and moved between physical hardware yet remain the same fully deployed O/S; unless the first start up step is to recheck every assumption made and potentially re-crossgen.

e.g. on Azure if I switch between an A series VM and an H series; if it's compiled for the H series it will fail on the A series; if it's compiled for the A series it won't take advantage of the H series improved CPUs.

if it's compiled for the H series it will fail on the A series

This is not correct. crossgen will either not generate the native code at all, leaving a hole in the native image so that the method gets JITed at runtime from IL; or it will generate code that works for all supported CPUs. System.Runtime.BypassNGenAttribute or System.Runtime.BypassReadyToRunAttribute can be used to force specific methods to not be crossgened and be JITed at runtime.

We are actually too conservative in some cases and leave too many holes in the native images, for example https://github.com/dotnet/coreclr/issues/7780

crossgen will either not generate the native code at all, leave hole in the native image and the method gets JITed at runtime from IL; or it will generate code that works for all supported CPUs.

Good to know; I take that back 😁 Will the code it generates be more conservative the other way also, e.g. take less advantage of newer procs, or will it only generate the code that will be the same?

Maybe a better example then would be ifs based on readonly statics read from a config file or based on IntPtr.Size or ProcessorCount where the Jit would eliminate entire branches of code; whereas crossgen would have to leave them in. Might be getting a little esoteric now...
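A rough sketch of the kind of code meant here. `Platform` and the `DEMO_VERBOSE` environment variable are hypothetical names chosen for illustration. Whether a given JIT version actually folds these branches is an assumption that depends on the runtime. The point is only that the values are known on the target machine and not at publish time.

```csharp
using System;

public static class Platform
{
    // Initialized once when the class is first used; the value is only known on the
    // target machine. DEMO_VERBOSE is a made-up variable name for this sketch.
    private static readonly bool Verbose =
        Environment.GetEnvironmentVariable("DEMO_VERBOSE") == "1";

    public static string PointerWidth()
    {
        // IntPtr.Size is fixed for the running process, so a runtime JIT can keep
        // just one of these branches. An ahead-of-time tool targeting several
        // platforms has to keep both.
        if (IntPtr.Size == 8)
            return "64-bit";
        return "32-bit";
    }

    public static string Format(string message)
    {
        // Once Verbose has been initialized, a JIT may treat it like a constant
        // and drop the branch that can never run.
        return Verbose ? "[verbose] " + message : message;
    }
}
```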

And this is the point: I'm suggesting that optimizations can be made that Roslyn won't (or can't) do and the jit can't do. Either due to lack of whole program view or time.

The whole program view has been mentioned before and it is problematic. The lack of time is a bit of a red herring. Crossgen doesn't lack time. And one can imagine adding more JIT optimizations that are opt-in if they turn out to be slow. And the fact that you do some IL optimizations doesn't mean that the JIT won't benefit from having more time.

unless the first start up step is to recheck every assumption made and potentially re-crossgen.

That sounds like a reasonable solution unless the crossgen time is problematic.

This is not correct. crossgen will either not generate the native code at all, leave hole in the native image and the method gets JITed at runtime from IL; or it will generate code that works for all supported CPUs.

Yes, but sometimes that isn't exactly useful. If I know that all the CPUs the program is going to run on have AVX2 then I see no reason why crossgen can't be convinced to generate AVX2 code.

Well, it was a rhetorical question.

I wasn't trying to answer any question but to clarify what I wrote.

Some but not all. As already mentioned, even if you have all the code there are problems with reflection and runtime code generation. And if you limit yourself only to your own code then the optimization possibilities will likely be even more limited.

Sometimes, some optimization is better than nothing. Again, we're not speaking about details here so I don't know whether it makes sense or not.

I know it's going to be limited but this limit is undefined and maybe, just maybe it would be enough to warrant this feature, I really don't know.

Dragging constexpr into this discussion only serves to muddy the waters.

I guess I can respect that but I wasn't speaking about constexpr per se I said that maybe it would fit into the same phase that is after the compiler compiled the code.

Constexpr, as defined in C++, has little to do with performance.

That's your own interpretation and opinion not a fact because many people would tell you a different story.

However, I did not imply that constexpr has anything to do with performance, like I said above I just thought that if a tool would be developed to optimize IL after the code is compiled then again maybe this would be the right phase to take constexpr into account and instead of evaluating it at compile-time, it would be evaluated at post-compile time.

C++ got constexpr because it has certain contexts where compile time evaluation is required - e.g. array sizes, attributes, template non-type arguments etc.

Yeah? The moment you start asking questions like "why is it required?", you soon realize that it has a lot to do with performance, and not as little as you think. But really, performance is a vague word without context, so it's useless to speak about it.

It may be useful to be able to write [DefaultValue(Math.Cos(42.0))] but what is being discussed here has nothing to do with that. C++ like constexpr needs to be implemented by the C# compiler, it cannot be done by some sort of IL rewriter.

Yeah, okay I won't derail the discussion any further about it.

Maybe a better example then would be if based on readonly statics read from a config file or based on IntPtr.Size or ProcessorCount where the Jit would eliminate entire branches of code; whereas crossgen would have to leave them in. Might be getting a little esoteric now...

LOL, it is esoteric. You want some kind of IL optimizer but at the same time you want CPU dependent optimizations 😄

LOL, it is esoteric. You want some kind of IL optimizer but at the same time you want CPU dependent optimizations

I was saying why I wanted an il optimizer and also runtime Jitted code.

@AndyAyersMS for linq optimization I assume the kind of things done by LinqOptimizer or roslyn-linq-rewrite, which essentially convert LINQ to procedural code; dropping interfaces for concrete types etc.
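For readers who have not seen such a rewrite, here is a hand-written sketch of the transformation. It is not the output of either tool, and `LinqRewriteDemo` is a hypothetical name. The LINQ pipeline allocates delegates and enumerators and goes through interface calls. The loop version works directly on the array.

```csharp
using System;
using System.Linq;

public static class LinqRewriteDemo
{
    // What the developer writes: readable, but built from iterators and delegates.
    public static int SumOfEvenSquaresLinq(int[] values)
    {
        return values.Where(v => v % 2 == 0).Select(v => v * v).Sum();
    }

    // Roughly what a rewriter could emit instead: one loop over the concrete array type.
    public static int SumOfEvenSquaresLoop(int[] values)
    {
        int sum = 0;
        for (int i = 0; i < values.Length; i++)
        {
            int v = values[i];
            if (v % 2 == 0)
            {
                int square = v * v;          // unchecked, like the lambda above
                sum = checked(sum + square); // Enumerable.Sum uses checked addition
            }
        }
        return sum;
    }
}
```

For `{ 1, 2, 3, 4 }` both methods return 20. The `checked` addition is there because a faithful rewrite has to keep the overflow behavior of `Sum`, which is the sort of detail that makes these rewrites harder than they first look.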

I was saying why I wanted an il optimizer and also runtime Jitted code.

Yeah, I know. But it's funny because the IL optimizer is supposed to do some optimizations without knowing the context and then the JIT has to sort things out.

The CoreRT compiler and crossgen are focused on transparent non-breaking optimizations. They have limited opportunities to change the shape of the program because such changes are potentially breaking for the reasons mentioned here - anything can potentially be inspected and accessed via reflection, etc.

I do like the idea of linking or optimizing a set of assemblies (not the entire app) together at IL level. It may be interesting to look at certain optimizations discussed here as plugins for https://github.com/mono/linker. The linker is changing the shape of the program already, so it is a potentially breaking, non-transparent optimization. If your program depends on reflection, you have to give hints to the linker about what it can or cannot assume.

If the IL optimizer can see a set of assemblies and it can be assured that nothing (or only a subset) is accessed externally or via reflection, it opens opportunities for devirtualization and more aggressive tree shaking at IL level. The promised assumptions can be enforced at runtime - DisablePrivateReflectionAttribute is prior art in this space.

Similarly, if you give the optimizer hints that your LINQ expressions are reasonable (functional and do not depend on the exact side-effects), it opens opportunities for optimizing LINQ expressions - like what is done by the LINQ optimizers mentioned by @benaadams.

Or if you are interested in optimizations for size, you can instruct the linker that you do not care about error messages in ArgumentExceptions and it can strip all the error messages. This optimization is actually done in a custom way in the .NET Native for UWP toolchain.

So optimize step would be applied at application publish time; rather than when publishing a library; as that would be the "bake" time.

Some more concrete ideas of optimizations I'd like that it doesn't look like roslyn, jit or even Generators will solve without code bloat (by creating an entire side by side function for a one line code change)

- throw new Exception -> void ThrowException
- throw new Exception(nameof(...)) -> void ThrowException(Enum...)
- throw new Exception(nameof(...), string) -> void ThrowException(Enum..., Enum...)
- foreach to for loops for arrays/IList/List - devirtualize if poss

Mostly they are all things you can do by writing different code; but easier for the "pit of success" if a compiler does it.
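The throw-helper items can be sketched by hand as follows. `ThrowHelperDemo`, `ArgumentName` and the method names are hypothetical, invented for this example. The idea is that the method keeps only a cheap call on its error path, while the exception construction and the argument name string live in one shared helper.

```csharp
using System;

public static class ThrowHelperDemo
{
    // Before: exception construction and the name string sit inside the method.
    public static int LengthInline(string text)
    {
        if (text == null)
            throw new ArgumentNullException(nameof(text));
        return text.Length;
    }

    // After: the error path is a single call that passes a small enum value.
    public static int LengthWithHelper(string text)
    {
        if (text == null)
            ThrowArgumentNull(ArgumentName.Text);
        return text.Length;
    }

    // Stands in for the "Enum..." in the list above: one value per argument name.
    private enum ArgumentName { Text }

    // Shared, rarely executed helper; it never returns normally.
    private static void ThrowArgumentNull(ArgumentName name)
    {
        throw new ArgumentNullException(GetName(name));
    }

    // Maps the enum back to the original parameter name.
    private static string GetName(ArgumentName name)
    {
        switch (name)
        {
            case ArgumentName.Text: return "text";
            default: return name.ToString();
        }
    }
}
```

Both versions throw an `ArgumentNullException` whose `ParamName` is `"text"`, so callers should not be able to tell them apart apart from the extra stack frame.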

[1] I used to use DotNetOpenAuth.dll which is about half the size of System.Private.CoreLib.dll or x4.5 the size of Microsoft.AspNetCore.Server.Kestrel.dll. All I used it for was essentially 3 redirects with some parameters - didn't really need the rest of it...

Or if you are interested in optimizations for size, you can instruct the linker

There are a lot of things I'd like to tell the compiler/linker. For example I'd like to tell them I don't care about reflection. I keep hearing about fears of code bloat with regard to optimization, yet we keep carrying around the reflection anchor in all cases, whether it's needed/used or not.

Some more concrete ideas of optimizations I'd like that it doesn't look like roslyn, jit or even Generators will solve without code bloat (by creating an entire side by side function for a one line code change)

Finally, we have something for discussion. A few questions/observations:

Enum.ToString -> switch statement call dotnet/coreclr#6713 (comment)

What kind of code benefits from that?

Upfront fast-path String.Equals overloads, hard to do at runtime dotnet/coreclr#8579

What fast-path are you talking about? As already mentioned in that issue it's not clear what each change improves. Besides, this appears to be the same thing as "Function splits to Inlinable (fast-path) + Non-inlinable (slow-path); rather than all non-inlinable"

Remove bounds checks for instance readonly arrays dotnet/coreclr#5371

Presumably by introducing a local variable for the array field? There's not a lot else that you can do at IL level to remove range checks, unless you want to produce unverifiable code.
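The local-variable approach mentioned here looks like this when done by hand. `Histogram` is a hypothetical type for illustration. Whether a particular JIT removes the range check in either form depends on the runtime version, so treat the comments as describing the intent and not as a guarantee.

```csharp
public sealed class Histogram
{
    private readonly int[] buckets;

    public Histogram(int[] buckets)
    {
        this.buckets = buckets;
    }

    // The array is reached through the field on every access, so the loop bound
    // and the indexed array are harder to prove identical.
    public int SumViaField()
    {
        int sum = 0;
        for (int i = 0; i < buckets.Length; i++)
            sum += buckets[i];
        return sum;
    }

    // The rewrite: copy the field into a local once. The bound (local.Length) and
    // the indexed array (local) are now obviously the same object.
    public int SumViaLocal()
    {
        int[] local = buckets;
        int sum = 0;
        for (int i = 0; i < local.Length; i++)
            sum += local[i];
        return sum;
    }
}
```

The rewrite stays verifiable IL because it adds a local and changes nothing else.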

Convert enumeration/foreach to for loops for arrays

Why would you do that? Are you talking about the case of an array accessed via an interface?

Convert enumeration/foreach to for loops for List

That may make sense since the list enumerator is unfortunately rather fat. But it can alter the meaning of the code and I'm not sure if the optimizer can figure that out. It certainly won't be able to catch the case of a list modified accidentally by another thread but perhaps that's not a problem.

Enum.ToString -> switch statement call dotnet/coreclr#6713 (comment)

What kind of code benefits from that?

Wordy serialization, like json

Upfront fast-path String.Equals overloads, hard to do at runtime dotnet/coreclr#8579

What fast-path are you talking about?

Currently the calling overhead on String.Equals(...,StringComparison.OrdinalIgnoreCase) is larger than monomorphic dispatch via interface. So

- String.Equals(...,StringComparison.Ordinal) -> String.Equals(...)
- String.Equals(...,StringComparison.OrdinalIgnoreCase) -> Something better...
- String.Equals(...,StringComparison.InvariantCulture) -> ref checks + CompareInfo:Compare
- String.Equals(...,StringComparison.InvariantCultureIgnoreCase) -> ref + CompareInfo:Compare
- String.Equals(...,StringComparison.CurrentCulture) -> ref + CompareInfo:Compare
- String.Equals(...,StringComparison.CurrentCultureIgnoreCase) -> ref + CompareInfo:Compare

Remove bounds checks for instance readonly arrays dotnet/coreclr#5371

Presumably by introducing a local variable for the array field?

Yep, fine with that.

Convert enumeration/foreach to for loops for arrays

Why would you do that? Are you talking about the case of an array accessed via an interface?

Yes, it is x 10 slower

Convert enumeration/foreach to for loops for List

That may make sense since the list enumerator is unfortunately rather fat. But it can alter the meaning of the code and I'm not sure if the optimizer can figure that out.

x 2 slower for regular foreach and x 6 slower for IList foreach; R# by default happily suggests converting a List for to foreach and provides a one click refactor.

If you are modifying it inside the enumerator you should hopefully see the bug in debug and release. If you are using the exception throw for flow control then you don't care about performance and won't use a publish step. Could be opt in flag if need be.
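To show where the meaning can change, here is a small sketch with hypothetical names. It modifies a `List<int>` while iterating. The `foreach` version throws `InvalidOperationException` because the enumerator checks the list's version on every step. The `for` version silently keeps going and sees the new element.

```csharp
using System;
using System.Collections.Generic;

public static class EnumerationDemo
{
    // foreach: the enumerator notices the Add and throws on the next MoveNext.
    public static int SumWithForeach(List<int> list)
    {
        int sum = 0;
        foreach (int item in list)
        {
            sum += item;
            if (item == 1)
                list.Add(4); // modifies the list during enumeration
        }
        return sum;
    }

    // for: no version check, so the loop simply also visits the appended element.
    public static int SumWithFor(List<int> list)
    {
        int sum = 0;
        for (int i = 0; i < list.Count; i++)
        {
            int item = list[i];
            sum += item;
            if (item == 1)
                list.Add(4);
        }
        return sum;
    }
}
```

Starting from `{ 1, 2, 3 }`, `SumWithFor` returns 10 (1 + 2 + 3 + 4), while `SumWithForeach` throws. An optimizer doing this rewrite automatically would need either a proof that the body does not modify the list or an opt-in from the developer.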

It certainly won't be able to catch the case of a list modified accidentally by another thread but perhaps that's not a problem.

Current implementation has many race conditions on _version anyway so don't think that's a problem

Wordy serialization, like json

My thought as well. But how is that supposed to work?

A very simple serializer would use reflection, end up with a boxed enum and call ToString on it. Where does the generated switch fit in?

A more complex serializer would generate serialization code and then it could generate the switch itself. Besides, in many cases serialization code is generated at runtime so an IL optimizer wouldn't even see that code.
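For reference, the Enum.ToString -> switch idea amounts to something like the following when written by hand. `Color` and `ColorNames` are hypothetical names for this sketch. As noted above, it only helps when the call site has the concrete enum type in hand and not a boxed value.

```csharp
using System;

public enum Color { Red, Green, Blue }

public static class ColorNames
{
    // Hand-written equivalent of what a rewriter might generate for Color.ToString():
    // known values come from a switch, anything else falls back to Enum.ToString().
    public static string ToName(Color value)
    {
        switch (value)
        {
            case Color.Red: return nameof(Color.Red);
            case Color.Green: return nameof(Color.Green);
            case Color.Blue: return nameof(Color.Blue);
            default: return value.ToString();
        }
    }
}
```

`ColorNames.ToName(Color.Green)` returns `"Green"`, and an undefined value such as `(Color)42` still produces `"42"` through the fallback, so the observable result matches `ToString()`.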

Currently the calling overhead on String.Equals(...,StringComparison.OrdinalIgnoreCase) is larger than monomorphic dispatch via interface. So...

That seems like something that can be solved by tweaking that particular overload, you have already done something like that in that PR. Another solution would be to add EqualsOrdinalIgnoreCase since this is needed quite often.

Anyway, this is in the same bucket as "Function splits to Inlinable (fast-path) + Non-inlinable (slow-path); rather than all non-inlinable" - IL inlining. Not impossible but not easy to get right, ask @AndyAyersMS 😄

If you are modifying it inside the enumerator you should hopefully see the bug in debug and release. If you are using the exception throw for flow control then you don't care about performance and won't use a publish step.

Yeah, I'm not too worried about that. As long as the array range checks are still in place (or correctly eliminated) it should be fine because no matter what happens you can't end up with garbage values and/or corrupted memory.

A very simple serializer would use reflection

Maybe would add to list; reflection in a generic being converted to a concrete function and reflection converted to direct calls. Don't have any examples of this; probably a new use case/pattern?

reflection in a generic being converted to a concrete function and reflection converted to direct calls

Direct call? That would be possible only in some very basic (and mostly useless) scenarios. The best one can hope for here is a delegate call (perhaps without a delegate allocation). In which case maybe you should simply use dynamic.

Is it too much to ask for my pure-math function written in C# to execute as fast as the exact same code written in C/C++? Honestly, it's frustrating. I'm a strong .Net advocate but when a colleagues points to code that has no garbage collection, no boundary checks, no function calls, and asks, "Why is it so slow in C#?" The only logical answer, after looking at the "optimized" assembly, I can think of is, "The JIT'er is a crap compiler." and I hate saying it. BTW: this was prompted by a block of performance/business critical code that runs about 60x faster as a C function.

Also, to address the proposal...today we have a policy, "Don't use LINQ" and the reason is it is always slower than the procedural equivalent. If we had an IL optimizer that could convert it to something more performant then we could benefit from more readable code in many cases...increasing productivity.
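To make the LINQ-versus-loop gap concrete, here is a minimal sketch of the kind of rewrite being asked for. The class and method names are made up for illustration, and this is not the output of roslyn-linq-rewrite or any existing tool. Note one subtlety a rewriter would have to preserve: `Enumerable.Sum` over `int` throws on overflow, so the loop uses a checked addition to match.

```csharp
using System;
using System.Linq;

// Hypothetical example type; names are illustrative only.
public static class LinqVersusLoop
{
    // Readable LINQ form: each element flows through iterator objects
    // and delegate invocations.
    public static int SumOfEvenSquaresLinq(int[] values) =>
        values.Where(v => v % 2 == 0).Select(v => v * v).Sum();

    // Procedural equivalent that an optimizer could, in principle, produce.
    public static int SumOfEvenSquaresLoop(int[] values)
    {
        int sum = 0;
        for (int i = 0; i < values.Length; i++)
        {
            int v = values[i];
            if (v % 2 == 0)
            {
                int square = unchecked(v * v); // the lambda multiplies unchecked by default
                sum = checked(sum + square);   // Enumerable.Sum throws OverflowException on overflow
            }
        }
        return sum;
    }
}
```

Both methods return the same value for the same input; the difference is only in how much work the runtime has to see through to get there.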

@SunnyWar

this was prompted by a block of performance/business critical code that runs about 60x faster as a C function.

If you have specific performance critical code that is slow on the CoreCLR, you might want to create an issue about it on the coreclr repo. I believe they like to see those kinds of issues, to decide which optimizations the JIT should make.

today we have a policy, "Don't use LINQ" and the reason is it is always slower than the procedural equivalent.

As already referenced earlier in this thread: roslyn-linq-rewrite.

@AndyAyersMS

I personally find it easier to kickstart a healthy discussion by working from the specific towards the general. When someone brings concrete ideas for areas where we can change or improve, or points out examples from existing code, we are more readily able to brainstorm about how to best approach making things better.

Ok, here is a concrete idea: enhance NGEN, make it work for non-WinStore applications, enable auto-vectorization and other cool stuff. When you are running NGEN you already know whether the processor supports SSE/AVX, the amount of memory, and so on. When the user changes these values (for example by buying some additional memory) the application may be silently recompiled (or recompiled when the user explicitly runs it and it hasn't been recompiled yet). But that's not expected to occur often (I'm not even sure if the amount of installed memory is considered by the JIT).

Another option is to implement something like Java HotSpot. It makes crazy speculative optimizations, but it's worth it, as we can see.

Thanks.

Dynamic/adaptive optimization is on our radar (I'd like to say "on our roadmap" but we're not at that stage quite yet). We have a bunch of groundwork to do first...

Are you actually referring to .NetNative and not NGEN above?

@AndyAyersMS .Net Native sounded incredibly cool when it was announced, but after several years Microsoft stopped pushing this technology and I'm not even sure it is still alive. For the last, I think, 5 years .Net Native has been in the state of "hmmm, please wait a bit longer, we are almost done, we just need some fixes to make reflection work, and we're ready to conquer the world", but I just don't see any progress anywhere. No blogs, posts, or interviews, just nothing. So I'm not talking about .Net Native. However, there is LLILC which is in active development, and sounds very similar to .Net Native except it seems to be alive. It's another cool project, but it relies on LLVM optimizers, which are out of scope for Roslyn.

So I am talking about NGEN.

I just don't see any progress anywhere

Take a look at https://github.com/dotnet/corert. It is the open sourced version of .NET Native and it is pretty active: https://github.com/dotnet/corert/graphs/contributors .

LLILC which is in active development

LLILC has been paused. Check https://github.com/dotnet/llilc/graphs/contributors ...

@Pzixel Here's where you threw me off: NGEN already works for desktop, and the equivalent (crossgen) works for CoreCLR. However, these tools are intended more for optimizing startup time and reducing working set than for producing the best code quality.

So I surmised you might be talking about something else, perhaps a tool that (likely ahead of time) does extensive program analysis and wide-scale aggressive optimization and binds in knowledge of the exact assemblies and machine target and such. Something like this is more or less in alignment with .NetNative / CoreRT.

LLILC never got far enough to show promise, though I and others were optimistic that it would get there in time. How much time, though, that was a big question...

Rather than this very limited form of optimization we should have a tiered JIT whose effects are a superset of this proposal. It optimizes more and should make jitting even faster than before (because we don't need to spend so much time on cold code).

A question: it is not clear to me whether this "IL Optimizer" will be a separate project from Roslyn or not?

If I understand correctly, this will also make it simpler to generate assembly code for the various architectures, as a lot of optimizations will be done at the IL stage where there is more context on what is going on.

A question: it is not clear to me whether this "IL Optimizer" will be a separate project from Roslyn or not?

I can imagine it'll be a separate thing during the experimental stages, but eventually gets integrated and becomes an extra step in the build process.

One thing I would like to see is for this optimizer to be highly configurable as to which optimizations one would like to apply. Preferably in a way that can be set per-project, and checked into source control (piggyback on MSBuild perhaps? 🤔 ) Some optimizations should also be tweakable by themselves, for example the tree-shaking:

Let's say Alice wants to remove all internal types that are not referenced in the compilation unit, so she turns it on and it'll just do its thing for the entire assembly. Bob exposes some internal things to a different assembly via [InternalsVisibleTo] or Reflection, so he should be able to specify the types that the optimizer should not remove. Charlie wants to be in full control, and would rather specify the only types that the optimizer is allowed to remove. Wildcards when specifying would also be useful (MyNamespace.Stuff.*).

Removing unused internal/private members would have the same Blacklist/Whitelist options, with methods also being able to specify a blanket for All/Static (inc. extension)/Extension only/None and properties/fields All (but never in structs)/Static/None.
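The Alice/Bob/Charlie scenarios above could be modelled roughly like this. It is only a sketch under assumptions: `FilterMode`, `TreeShakingFilter`, and `MayRemove` are hypothetical names, not part of any existing tool, and the only wildcard supported is a trailing `.*` on a namespace.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical configuration model; none of these names exist in a real optimizer.
public enum FilterMode
{
    RemoveAllUnreferenced, // Alice: remove everything that is unreferenced
    KeepListed,            // Bob: listed types must never be removed
    RemoveOnlyListed       // Charlie: only listed types may be removed
}

public sealed class TreeShakingFilter
{
    private readonly FilterMode _mode;
    private readonly IReadOnlyList<string> _patterns;

    public TreeShakingFilter(FilterMode mode, params string[] patterns)
    {
        _mode = mode;
        _patterns = patterns;
    }

    // A pattern is an exact full type name, or a namespace prefix ending in ".*".
    private static bool Matches(string pattern, string typeName) =>
        pattern.EndsWith(".*", StringComparison.Ordinal)
            ? typeName.StartsWith(pattern.Substring(0, pattern.Length - 1), StringComparison.Ordinal)
            : string.Equals(pattern, typeName, StringComparison.Ordinal);

    // Decides whether a type already known to be unreferenced may be removed.
    public bool MayRemove(string unreferencedTypeName)
    {
        bool listed = _patterns.Any(p => Matches(p, unreferencedTypeName));
        switch (_mode)
        {
            case FilterMode.RemoveAllUnreferenced: return true;
            case FilterMode.KeepListed: return !listed;
            case FilterMode.RemoveOnlyListed: return listed;
            default: throw new InvalidOperationException("Unknown filter mode.");
        }
    }
}
```

For example, `new TreeShakingFilter(FilterMode.KeepListed, "MyNamespace.Stuff.*")` would protect every type under `MyNamespace.Stuff` while letting other unreferenced internal types go. Settings like these could live in a per-project file checked into source control.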

Several places here mention, as @benaadams put it, "the kind of things done by LinqOptimizer or roslyn-linq-rewrite". I'm curious: with the referenced tools already available, what's the benefit of doing similar things in an IL rewrite step? I realize that comparing an IL rewrite step to LinqOptimizer would have most of the same tradeoffs as comparing roslyn-linq-rewrite to LinqOptimizer, but comparing an IL rewrite step to roslyn-linq-rewrite, I'm not following what the benefit would be (aside from, as noted at that link, applying to languages other than C#). What am I missing?

I think the benefit is that a general purpose tool applies to all IL based languages and can include all optimizations.

Things like stripping the init flag from the locals directive so that locals don't have to be zeroed first also apply (this is a big perf boost for some stackalloc scenarios and also applies to methods with a lot of locals).

Other optimizations like replacing multiple comparisons with a single compare and such work as well:

if (x < 0 || x > 15) can be transformed to be if ((uint)(x) > 15).
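A small sketch of why that rewrite is valid (the class and method names are illustrative): reinterpreting a negative `int` as `uint` yields a value of at least 2^31, so a single unsigned comparison covers both bounds.

```csharp
// Hypothetical helper type used only to show the equivalence.
public static class RangeCheck
{
    // Original form: two signed comparisons.
    public static bool OutOfRangeTwoCompares(int x) => x < 0 || x > 15;

    // Rewritten form: one unsigned comparison. The explicit unchecked keeps
    // the cast from throwing if the project is compiled with overflow checking.
    public static bool OutOfRangeOneCompare(int x) => unchecked((uint)x) > 15;
}
```

The two methods agree for every `int` input, including `int.MinValue` and `int.MaxValue`.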

There are tens (if not hundreds) of these micro-optimizations that native compilers do, and things like the AOT could do, but which the JIT may not be able to do (due to time constraints).

Other optimizations like replacing multiple comparisons with a single compare and such work as well: if (x < 0 || x > 15) can be transformed to be if ((uint)(x) > 15)

That happens to work but another similar one - if (x == 2 || x == 4 || x == 8 || x == 33) doesn't work so well because it depends on the target bitness. Sure, you could tell the IL optimizer to assume a particular target bitness but then things start to become a bit blurry, we have IL so we don't need to bother (too much) with that kind of stuff.
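To illustrate the bitness point, here is a sketch of the bit-test form a native compiler might pick for that condition. The names are illustrative, and reading the comment this way is an assumption: because 33 does not fit in a 32-bit mask, the test needs a 64-bit mask and shift, which is a single cheap operation on a 64-bit target but typically has to be emulated with several instructions on a 32-bit one.

```csharp
// Hypothetical helper type used only to show the two forms.
public static class SetMembership
{
    // Original form: a chain of equality comparisons.
    public static bool IsMemberCompares(int x) =>
        x == 2 || x == 4 || x == 8 || x == 33;

    // One bit per accepted value; bit 33 forces a 64-bit mask.
    private const ulong Mask = (1UL << 2) | (1UL << 4) | (1UL << 8) | (1UL << 33);

    // Bit-test form: a range check followed by a 64-bit shift and mask.
    // The range check is required because C# only uses the low 6 bits of
    // the shift count for ulong operands.
    public static bool IsMemberBitTest(int x) =>
        unchecked((uint)x) < 64 && ((Mask >> x) & 1UL) != 0;
}
```

Both forms are expressible in IL; what an IL-level tool cannot know is which one will be faster on the machine that eventually runs the code.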

There are tens (if not hundreds) of these micro-optimizations that native compilers do

And like the one above, many won't be possible in IL because they require knowledge about the target hw. Or the optimization result is simply not expressible in IL. You end up with a tool that can't do its job properly but needs a lot of effort to be written. And it's duplicated effort, since you still need to optimize the code.

but which the JIT may not be able to do (due to time constraints).

Eh, the eternal story of time constraints. Except that nobody attempted to implement such optimizations in the JIT to see how much time they consume. For example, in some cases the cost of recognizing a pattern might be amortized by generating smaller IR, fewer basic blocks, less work in the register allocator and so on.

Besides, some people might very well be willing to put up with a slower JIT if it produces faster code.

Ultimately what people want is for code to run faster. Nobody really cares how that happens (unless it happens in such a cumbersome manner that it gives headaches). That MS never bothered too much with the JIT doesn't mean that there's no room for improvement or that IL optimizers need to be created.

Not to say that IL rewriters are completely pointless. But they're not a panacea for CQ problems.

Thanks, @tannergooding, I realize that roslyn-linq-rewrite's scope is narrower than "everything". What I'm trying to ask is, for the sort of transforms that are in its scope, is there a benefit/desire to have an IL rewrite step perform those same ones? If so, why, and if not, what similar transforms have people had in mind when pointing to it as an example?

I would think that a general purpose IL rewriter should be scoped to whatever optimizations are valid and would likely duplicate some of the ones the JIT/AOT already cover. As @mikedn pointed out, there are plenty that can't be done (or can only be partially done) based on the target bitness/endianness/etc.

However, I would think (at least from a primitive perspective) this could be made easier by building on top of what the JIT/AOT compilers already have.

That is, today the JIT/AOT compilers support reading and parsing IL, as well as generating and transforming the various trees they create from the IL.

If that code was generalized slightly, I would imagine it would be possible to perform machine-independent transformations and save them back to the assembly outside of the JIT itself.

This would mean that the JIT can effectively skip most of the machine-independent transformations (or prioritize them lower) for code that has gone through this pass and it can instead focus on the machine-dependent transformations/code-gen.

Not that it would be easy to do so, but I think it would be beneficial in the long run (and having the JIT/AOT code shared/extensible would also, theoretically, allow other better tools to be written as well).

The jit can only apply so many optimizations as it is time constrained at runtime.

AOT/NGen which has more time ends up with asm but loses some optimizations the jit can do at runtime as it needs to be conservative as to cpu architecture; static readonly to consts etc

The compile-to-IL compilers (C#/VB.NET/etc.) aren't as time constrained, but they consider a lot of optimizations out of scope.

Do we need a 3rd compiler between roslyn and jit that optimizes the il as part of the regular compile or a "publish" compile?

This could be a collaboration between the jit and roslyn teams?

I'm sure there are lots of low hanging fruit between the two; that the jit would like to do but are too expensive.

There is also the whole program optimization or linker + tree shaking which is also a component in this picture. (e.g. Mono/Xamarin linker). Likely also partial linking (e.g. nugets/non-platform runtime libs)

From https://github.com/dotnet/roslyn/issues/15644#issuecomment-267370590

/cc @migueldeicaza @gafter @jkotas @AndyAyersMS