Anything's possible... if you're willing to invest months and years of work into it.

Porting JIT or GC to C# would cost man-years for sure. What would we get in return? Efforts like these need to have a positive return on investment.

Note that we are porting more CoreCLR code from C++ to C# in places where we get a clear positive ROI. For example, the majority of the number parsing/formatting code was ported from C++ to C# for the 2.1 release. This port both improved performance and made the implementation of Span overloads much easier.
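As a rough illustration of what the Span overloads enable (the class and method names below are made up for this sketch and are not part of CoreLib): `int.TryParse(ReadOnlySpan<char>, out int)` lets a caller parse slices of a larger string without allocating a substring per field.

```csharp
using System;

public static class SpanParsingSketch
{
    // Sums comma-separated integers without allocating a substring per field.
    // Returns false as soon as a field is not a valid integer.
    public static bool TrySumCsv(ReadOnlySpan<char> text, out int sum)
    {
        sum = 0;
        while (!text.IsEmpty)
        {
            int comma = text.IndexOf(',');
            ReadOnlySpan<char> field = comma < 0 ? text : text.Slice(0, comma);

            // Span-based overload: parses the slice in place.
            if (!int.TryParse(field, out int value))
                return false;

            sum += value;
            text = comma < 0 ? ReadOnlySpan<char>.Empty : text.Slice(comma + 1);
        }
        return true;
    }
}
```

Calling `SpanParsingSketch.TrySumCsv("1,2,3".AsSpan(), out int sum)` walks the input once and never creates intermediate strings.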

Out of interest, is it technically possible to port all of the JIT and GC to C#? (leaving aside the question of whether it's a good idea or not). Are there any parts that would still have to drop down to assembly/C/C++ because it's not possible to write them in C#, or is C# a broad enough language that it gives you enough low-level functionality?

As a follow-up, does the same apply to the entire .NET runtime, I've always assumed that you'd have to use assembly for some parts, i.e. method dispatch, stubs, etc or is that only needed for performance gains and you could actually write a slow (but functional) version in C#?

@vectorix On the wider point of porting parts of the runtime to C#, take a look at the work being done in CoreRT, https://github.com/dotnet/corert/blob/master/Documentation/intro-to-corert.md#architecture (although currently they use the same C/C++ JIT and GC as CoreCLR, most/all other parts of the runtime have been re-written in C#)

Out of interest, is it technically possible to port all of the JIT and GC to C#?

I don't see any technical reasons that would prevent writing a JIT entirely in managed code. It has to read IL and spit out machine code, you can do that in any language you want, even in JavaScript. You'll likely run into performance issues but that's another story.
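To make the "read IL, spit out machine code" point concrete, here is a deliberately tiny sketch in C#. It is not how RyuJIT works; it is a toy that handles only three real CIL opcodes (`ldc.i4`, `add`, `ret`) and maps the IL evaluation stack directly onto the x86-64 hardware stack. The class name `ToyJitSketch` is made up for this example, and the emitted bytes are only returned, never executed.

```csharp
using System;
using System.Collections.Generic;

public static class ToyJitSketch
{
    // CIL opcode bytes for the three instructions this toy understands.
    private const byte IlLdcI4 = 0x20, IlAdd = 0x58, IlRet = 0x2A;

    // Translates a tiny subset of IL into x86-64 machine code bytes.
    public static byte[] Compile(byte[] il)
    {
        var code = new List<byte>();
        int pc = 0;
        while (pc < il.Length)
        {
            byte op = il[pc++];
            switch (op)
            {
                case IlLdcI4:
                    code.Add(0x68);                 // push imm32
                    for (int i = 0; i < 4; i++)     // operand is little-endian in both encodings
                        code.Add(il[pc++]);
                    break;
                case IlAdd:
                    code.Add(0x59);                 // pop rcx
                    code.Add(0x58);                 // pop rax
                    code.Add(0x01); code.Add(0xC8); // add eax, ecx
                    code.Add(0x50);                 // push rax
                    break;
                case IlRet:
                    code.Add(0x58);                 // pop rax (return value)
                    code.Add(0xC3);                 // ret
                    break;
                default:
                    throw new NotSupportedException($"IL opcode 0x{op:X2} is not handled by this toy");
            }
        }
        return code.ToArray();
    }
}
```

A real JIT would add verification, register allocation, GC info, exception handling tables and much more; the point here is only that nothing in the core translation step requires a native language.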

Of course, you'll inevitably run into a chicken & egg problem - who's JITing the JIT? There are various options - including using CoreRT to compile the JIT into a native dll that can replace the existing clrjit.dll.

If I had lots of spare time available I'd do just that. But I don't so I prefer to invest my spare time into making the existing JIT better. It's there, it works, it gets the job done today and not in 5 years from now.

I've always assumed that you'd have to use assembly for some parts, i.e. method dispatch, stubs

The usual way to cheat on these is compiler/JIT intrinsics. You make the compiler produce the code that you need, so that you avoid having to write assembly directly. It is often harder to maintain such code than direct assembly, but it keeps you pure.

@mikedn and @jkotas thanks for the info

Porting JIT or GC to C# would cost man-years for sure. What would we get in return?

It would likely improve iteration times in research environments, similar to Jikes RVM. I would worry about it suffering the same fate in the end though (obsolete due to progressive mismatch in functionality relative to the primary supported runtime).

Thanks for all the responses.

Clearly rewriting the JIT is a large effort, implying that it has to be financially backed by one or more large organizations. On this point, I would like to refer to Oracle and Java. There is the GraalVM, which is a VM implementation of the JVM which is 97.5% written in Java. Version 1.0 was released on April 17, 2018.

The top three contributors on Github (chumer, thomaswue, dougxc) are all affiliated with Oracle Labs. So roughly, the reasoning on this point goes, if Oracle can pull it off, so could Microsoft. Perhaps it is possible to learn from their experience and avoid pitfalls.

The claimed benefits for Twitter are that they can save costs by improving the VM performance. One claim in a talk from GeeCON was 2.5% less GC and 11% less user CPU time by using the VM written almost entirely in Java, apparently related to escape analysis.

They even mentioned that they can use it as an AOT compiler. I guess it could be interesting to figure out whether this is better than using LLVM as a back-end. JDK9 contains Graal which can be used for AOT, still undocumented but nevertheless, interesting. One of the problems in .NET Framework is that the desktop framework does not have proper AOT for applications in WinForms and WPF. Ideally the C# JIT could also do AOT for the desktop and WebAssembly. Mono is also duplicating effort for Unity for AOT. It seems strange that .NET can rally under a single MSIL compiler like Roslyn to avoid the fragmentation of compiler efforts in C++ (e.g. MSVC, Clang, GCC) but then end up with multiple and fragmented JIT implementations.

Still, doing something because Oracle does it is not necessarily a good reason in itself. GraalVM may well be a big mistake. So, why would anyone want to do this?

The first reason would be developer productivity and safety. All the benefits of using C# over C/C++ would apply, meaning that it would be possible to express more complex optimizations and evolve the JIT faster by an order of magnitude. This investment is still desirable, so it seems like a longer term economical thing to do. We should keep in mind that when the JVM and JIT were initially developed, there was no choice of using C# because C/C++ was needed to bootstrap the functionality. Now, C/C++ may only be needed as a glue layer, with all the major compiler bits written in managed code. Given the functional nature of "IL in, machine code out" and the desire for no side effects, perhaps F# would also be suitable.

The second reason would be dogfooding. If there are any reasons why C# would cause lower performance in terms of JIT throughput, these ought to be addressed as well anyway. Writing a compiler would likely encounter more situations than computational microbenchmarks and far more pathological workloads and corner cases.

The third reason would be bragging rights. C# and .NET are a mature platform with a 15-year legacy and all the tooling and support needed. Having the entire runtime stack in managed code will make .NET look even better. It is difficult to quantify the value of this in dollar terms.

The fourth reason is performance. There is always the claim that managed code is slower than native. But there is no reason for it to be this way. For example, background JIT can use multi-core CPUs better with C# async, which can offset any percentage differences in C# performance on a single threaded JIT.

The fifth reason would be synergies with Roslyn. Roslyn is already written in C#, and the only way the JIT communicates with Roslyn is the MSIL. Sometimes, compiler optimizations belong in Roslyn, and the upstream richer representation may contain important hints for the compiler that get erased in MSIL that the JIT needs to piece together again to infer certain things. These two components can work together and perhaps share richer representations, for example about structs. The approach used in Graal relies on AST rewriting. An AST has more structural information than a stack machine MSIL representation.

http://www.oracle.com/technetwork/java/jvmls2013wimmer-2014084.pdf

One way to start this process would be to write a highly optimizing JIT in C# that can take over optimization from the RyuJIT and apply more optimizations. This would be complementary to RyuJIT, and it would be acceptable to have lower throughput initially to optimize heavily called functions. Then, the work could move down the stack.

Another alternative is to develop a minimum viable product that does no optimizations whatsoever but at least has feature parity with MSIL and then run benchmarks against RyuJIT.

Just wanted to put these ideas out there and see what may be of relevance and feel free to close this issue if it is not feasible.

Graal is faster, more efficient, and it offers a revolutionary way to support different languages on the JVM. You are able to introduce more than a single language in a project, and be able to run programs faster. You can write using any language while not sacrificing the performance when running the compiled code of your language on the JVM.

https://zeroturnaround.com/rebellabs/graal-and-truffle-for-polyglot-languages-on-jvm/

There is the GraalVM, which is a VM implementation of the JVM which is 97.5% written in Java. Version 1.0 was released on April 17, 2018.

There's a bit of a difference between a VM and a JIT. The VM contains a JIT, a GC and some other stuff. Rewriting CLR's "other stuff" in C# is being done in the CoreRT project. I suppose you could claim it's not quite the same thing because CoreRT is focused on AOT. But hey, that seems like a pretty good reason to rewrite something - to bring up an entirely new feature. Rewriting for the sake of rewriting with the hope of gaining a few percentage points in performance is a bit of a risky proposition.

They even mentioned that they can use it as an AOT compiler.

And CoreRT is already using RyuJIT for AOT, there was no need to rewrite the JIT in C#.

All the benefits of using C# over C/C++ would apply, meaning that it would be possible to express more complex optimizations and evolve the JIT faster by an order of magnitude.

I like C# and I wrote tons of C#. Same for C++. And though I'm not what one would call a "compiler engineer" I happen to have written a bit of compiler code in both C# and C++. And I don't buy the argument that using C# would have some significant benefits in this space. It's a nice language but it's a long distance to "by an order of magnitude".

The third reason would be bragging rights.

That's not a reason.

The fourth reason is performance. There is always the claim that managed code is slower than native. But there is no reason for it to be this way. For example, background JIT can use multi-core CPUs better with C# async, which can offset any percentage differences in C# performance on a single threaded JIT.

Managed code is slower and it always will be; there's a price to pay for "managed". As for multi-threading, that's of little use. Multi-threaded compilation is usually done at method level, and at that level you don't need async and whatever else C# may have to offer in this space.
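A minimal sketch of what method-level parallelism can look like, assuming each method can be compiled independently. The `compileMethod` delegate is a hypothetical stand-in for a real per-method compiler, and `ParallelCompileSketch` is a made-up name; note that nothing here needs `async`.

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.Threading.Tasks;

public static class ParallelCompileSketch
{
    // Compiles every method on the thread pool, one work item per method.
    // `compileMethod` is a placeholder for a real per-method compiler.
    public static IDictionary<string, byte[]> CompileAll(
        IEnumerable<string> methodNames,
        Func<string, byte[]> compileMethod)
    {
        var results = new ConcurrentDictionary<string, byte[]>();

        // Methods do not share compiler state in this sketch, so a plain
        // data-parallel loop is enough.
        Parallel.ForEach(methodNames, name => results[name] = compileMethod(name));

        return results;
    }
}
```

The only shared state is the result table, which is why a concurrent dictionary is sufficient and no finer-grained coordination is needed.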

The fifth reason would be synergies with Roslyn. Roslyn is already written in C#, and the only way the JIT communicates with Roslyn is the MSIL. Sometimes, compiler optimizations belong in Roslyn, and the upstream richer representation may contain important hints for the compiler that get erased in MSIL that the JIT needs to piece together again to infer certain things.

That may be a valid reason but it's a bit more complicated than that. There may be interesting optimizations to be performed at a high level, on an AST. But then ASTs are a rather poor representation for more low level optimizations such as the ones JIT performs. So it's far from clear that rewriting the JIT in C# may provide some advantage in this area. You may end up sharing a bit of code but then maybe not.

Rewriting for the sake of rewriting with the hope of gaining a few percentage points in performance is a bit of a risky proposition.

@mikedn In your opinion having experience with compiler development, why did it make commercial sense for Oracle to rewrite the JIT in Java when they supposedly already have a mature C++ implementation of a JIT? The point that Chris Thalinger made in his talk was that even a few % is a big saving on a large scale like Twitter. Just wonder if you agreed with his premise.

Managed code is slower and it always will be; there's a price to pay for "managed".

I guess it is about trade-offs. Nothing comes for free, there is also a heavy price to pay for including endemic C++ buffer overflow bugs like Heartbleed affecting millions of users, which would not have been possible if OpenSSL was in managed code. How that cost is amortized is another question. I think the interesting point here was more about the performance of the resulting machine code from the JIT and whether amortizing this cost over repeated execution pays off. Or whether there are any other benefits, like multi-language support, AOT, hardware platform support, etc. In this case it was claimed that the Java-based compiler was able to output code that performs over 10% faster than what the C++ compiler could produce for the Twitter case. I think it is interesting that Graal can do this in 1.0 and this is against a mature C++ JIT compiler. They were also being selective about where to apply Graal so it would not be a one-size-fits-all solution.

That may be a valid reason but it's a bit more complicated than that.

Of course, could not agree more. Graal was a multi-year research project. For example, compiling JavaScript on .NET JIT may not be of interest. Just some food for thought.

why did it make commercial sense for Oracle to rewrite the JIT in Java when they supposedly already have a mature C++ implementation of a JIT?

You'll have to ask Oracle. The only thing I can say here is that a long time ago (when it was open sourced) I had a brief look at the JVM JIT source code and that 3 letter acronym that starts with W passed through my mind.

which would not have been possible if OpenSSL was in managed code

Until someone thinks that "Hey, this C# code is not fast enough. I know, I'll use unsafe code." Unfortunately it's very difficult to stop people from doing stupid things.

In this case it was claimed that the Java-based compiler was able to output code that performs over 10% faster than what the C++ compiler could produce for the Twitter case. I think it is interesting that Graal can do this in 1.0 and this is against a mature C++ JIT compiler.

Hmm, but it's very likely that's not because the new JIT was written in Java. It's because the new JIT was better designed, has additional optimizations etc.

You'll have to ask Oracle.

I asked if C#/.NET can be supported on Graal. https://github.com/oracle/graal/issues/349

I asked if C#/.NET can be supported on Graal.

LOL, asking the competition why they don't support C#/.NET :grin:

Our goal is to support "any language" with GraalVM and to make them easily interoperable. A joint project to support .NET code could be interesting.

I like C# and I wrote tons of C#. Same for C++. And though I'm not what one would call a "compiler engineer" I happen to have written a bit of compiler code in both C# and C++. And I don't buy the argument that using C# would have some significant benefits in this space.

I would like to bring a different view on this, especially on the productivity of C# vs C++. Whenever I have had to work on C# projects vs C++ projects, the most striking difference for me is the way you can refactor code and push changes in, even when building a project from scratch. C++ tends to make you a lot more lazy (some could argue that this constraint makes you choose something more optimal on the first shot) because changing a C++ code base is more tedious: compilation times that kill any agile iteration during development, duplication between headers and implementations, order dependencies that can discourage you from even touching the include headers because it is just too laborious to work out (especially on a "dirty" codebase), sneaky memory trashing bugs, etc.

In the end, you are less ambitious about the improvements that you can bring to a C++ codebase (or even during the prototyping/design phase), because it is more laborious to change it. In the JIT/AOT compiler space, this is quite important because you have the freedom to change anything, as the compiler internals/details won't be exposed as a public API. Being able to explore design opportunities more quickly is, in my opinion, the main benefit of moving this kind of work to a language like C#.

But as I said in my article - and suggested also in this thread by others, the short/medium term benefits would be quite limited. It would require a significant work for several people/years. And it is more appealing to see this endeavor being invested currently in improving the .NET Core JIT or bringing AOT to real life. But in a long term vision, definitely something that I would still bet on.

Porting JIT or GC to C# would cost man-years for sure.

It would require a significant work for several people/years.

@jkotas @xoofx

From data available in published literature it seems to be a much easier task: as a benchmark we could use the 9 man-months it took to port Clang to Java (without the LLVM backend, 2 million LOC). See details below.

I would assume that porting JIT to C# would be significantly easier - effort similar to implementing Hardware Intrinsics.

However, the important aspect is why we should think about porting / writing JIT in C#. IMO we need much more flexible JIT implementation which would be based on modern JIT/VM design principles similar but more advanced than Truffle and Sulong projects which are integrated with GraalVM. All arguments in favor of using C# instead of C++ are compelling and should prevail.

Essentially it means that in the worst case scenario we would write a new JIT from scratch, which would obviously cost multiple man-years, and in the best case scenario we could reuse large chunks of the current code base.

Personally I do not see any reason why .NET should avoid complete compiler bootstrapping, which should be a standard design goal for all maturing non-interpreted languages.

Definitely worth prototyping.

who's JITing the JIT?

I think this is an important aspect to consider for new platform bootstrapping. These include Solaris and BSD based operating systems as well as new architectures. C++ makes it comparatively easier to port the infrastructure. Java has been ported to most of the operating systems already, so the reliance on Java in that regard makes sense for projects like Clank.

If the "JIT+Runtime written in C#" option is to be entertained, we can probably create the bootstrapping-of-new-platform or stage0 based on Mono AOT, as Mono currently has vast platform reach due to:

The bootstrapping problem is not limited to the JIT. It exists today already: Who is compiling corefx? Roslyn. What does Roslyn depend on? corefx. If you want to get to a new platform, you need to cross-compile .NET Core first on a platform where it works. And once you are on the new platform, you use the previous version to compile the next version. It is how it works today already. You do not need to pull in new dependencies to solve it.

IMO we need much more flexible JIT implementation which would be based on modern JIT/VM design principles...

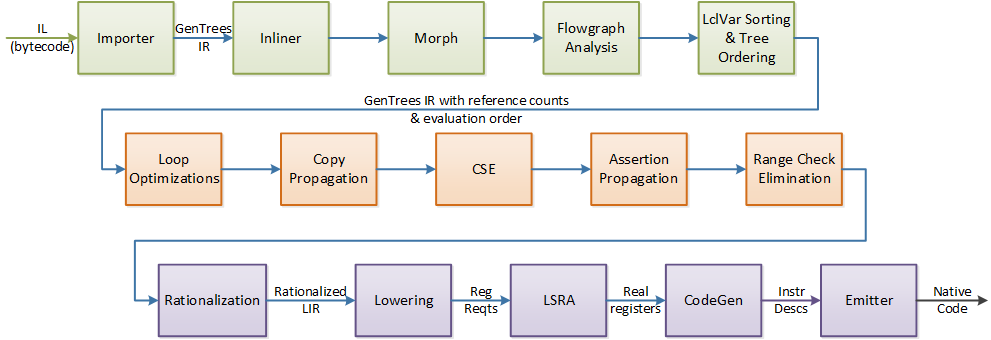

The current JIT (RyuJIT) isn't that old

It replaced JIT64 as the mainline JIT for x64 in .NET Framework 4.6/Core 1.0, replaced JIT32 for x86 last year (.NET Framework 4.7.1/Core 2.0), and is continually evolving.

As I see it, the main advantages of the JIT being in C# rather than C++ are that C# is a more productive language (easier to reason about/get correct, easier to refactor, and much faster to compile) and that contributions would be open to more people.

The JIT and GC live in similar spaces, being self-contained components with a well defined api (i.e. they can be changed to a different component); so a 3rd party could currently provide a different implementation.

The advantages are mainly about the language; being in C# (for both JIT and GC) would likely attract more contributors external to the teams that work on them. The disadvantages would be it would likely stop the teams advancing the JIT and GC while it was happening; a long "standing still" period; which is a huge disadvantage.

An endeavor with probably more immediate tangible benefits would be moving more of the VM/EE to C#.

That would allow for more platform portability and code sharing (e.g. corert, coreclr, mono?), and it can be done in smaller, more manageable chunks. The VM/EE code also generally behaves like managed code anyway (unlike the JIT/GC), so it has to worry about interacting safely with the GC, reporting correctly to it, etc. Because it is written in C++, this carries much more complexity and overhead from a developer perspective than if it were written in C#, where it would be handled automatically. i.e. the advantages are more tangible and the risks lower.

Though it looks like that's already happening to some degree?

@vectorix It's not that hard to build the basics in a way that is still competitive; take a look at this high-level virtual machine + generics + shadow stack GC in a few lines of code (~3700) written in F# + LLVM. You could write your own codegen in a few lines of code (e.g. MinCaml: https://esumii.github.io/min-caml/index-e.html). You can even create your own types/abstractions that could be specialized to GPU families (e.g. tensors) using the LLVM AMDGPU/NVPTX or libNVVM backend.

https://github.com/kp-tech/fshlvm

However, implementing every CLR detail even on top of a core higher-level virtual machine will require lots of effort (still multiple months or even multiple years), especially if there are no executable semantics for everything (e.g. the CLR memory model). Yes, the ECMA spec exists, but it is not always followed, and it is not executable.

Take a look at LLVM semantics https://github.com/kframework/llvm-semantics or JVM + Java 1.4 executable semantics developed by third parties: https://github.com/kframework/jvm-semantics and https://github.com/kframework/java-semantics http://fslweb.cs.illinois.edu/FSL/presentations/2015/2015-01-16-K-Java-POPL/2015-01-16-K-Java-POPL.pdf This executable semantics ("interpreter") could be even used for a really slow bootstrapping.

In the long run, a reimplementation (even with a huge amount of time and money invested in testing + QA) will never be competitive compared to a "simple" (not easy!) and "correct" implementation, something like what F* (a verification-oriented dialect of ML) + KreMLin (a compiler from a subset of F* to C) could already provide in Project Everest (https://project-everest.github.io/) or Coq in Project DeepSpec (https://deepspec.org).

The closest target that is similar to C# is Dafny (~ C# + Spec#) that could be usable for this. In fact, several verified systems are already written in Dafny:

"Our modular design limits the scope of verification down to the ExpressOS kernel. The ExpressOS kernel is implemented in C# and Dafny [22], both of which are type-safe languages. Dafny is a research programming language from Microsoft Research that researchers use to teach people about formal methods."

https://web.engr.illinois.edu/~madhu/asplos13.pdf

"We demonstrate the methodology on a complex implementation of a Paxos-based replicated state machine library and a lease-based sharded key-value store. We prove that each obeys a concise safety specification, as well as desirable liveness requirements. Each implementation achieves performance competitive with a reference system. With our methodology and lessons learned, we aim to raise the standard for distributed systems from “tested” to “correct.”"

http://research.microsoft.com/pubs/255833/IronFleet-twocol.pdf

Correct and fast code is hard, especially for crypto code. Do you really think that you could write fast and correct crypto code manually without introducing bugs? Think again! How about a provably correct by construction JIT/AOT/GC similar to these crypto implementations?

https://www.usenix.org/sites/default/files/conference/protected-files/usenixsecurity17_slides_rane.pdf https://project-everest.github.io/assets/vale2017.pdf

Sorry for being late to this discussion. Someone previously mentioned GPUs in this issue thread, which is how it caught my eye. I've been developing a compiler and runtime for the last two years to compile CIL to NVIDIA GPUs. One of the issues has been the runtime, in that a lot of any .NET framework (whichever one you choose) is written in C. To get C code to run on a GPU, all functions have to be tagged with "__device__" attributes because that's what CUDA requires. Based on this experience, I'd never recommend attributing a function statically using Attribute or a method modifier in order to denote what runs on a GPU. (Note, see https://github.com/dotnet/coreclr/issues/11869. I recommend you do not attribute GPU code. Let the compiler compute the closure of methods to compile for the GPU.)

The framework I chose, 13K lines of C and 26K lines of C#, was written for 32-bit targets, which NVIDIA no longer supports. Some of the C code uses varargs, which was not supported well until CUDA version 9. The meta system itself, also C code, was years out of date, requiring a lot of changes for any .NET Framework, Core, or Standard file, e.g., type forwarding. Remarkably, the compiler/runtime is starting to work.

However, looking forward, it isn't clear to me what to do with AMD GPUs, because the HCC compiler uses yet another syntax, something like C++/AMP, so more #ifdefs, macros, etc. Porting the runtime to OpenCL 1.2 isn't a great choice, likely requiring a lot of dumbing down to roll back the use of certain features in C. In all likelihood, when I get to that point in the road where I want to run C# on AMD GPUs, I plan on writing a runtime that will be mostly in C#. The compiler I'm writing is in C# but uses LLVM, which is C++ code. So it's not likely I will be able to get the JIT compiler itself to run on a GPU, though that is not necessarily a good thing to do anyway. Talk about thread divergence! Based on all this work, I really wish the runtime was mostly written in C#.

please open-source midori

Writing a JIT Compiler in C# https://www.mono-project.com/news/2018/09/11/csharp-jit/

Now that I have Campy starting to actually work, I'm beginning a slow, painful process of porting System.Private.CoreLib to a GPU context (https://github.com/dotnet/coreclr/issues/17576 https://github.com/dotnet/coreclr/issues/11869 https://github.com/dotnet/roslyn/issues/14495 https://github.com/dotnet/roslyn/issues/7621 etc etc etc). C# lock () {} statements all have to be redone into non-blocking algorithms (eg CAS). GPUs cannot handle mutex-based algorithms due to spin-lock deadlock of threads in the same warp block. In System.Private.CoreLib, "find . -name '*.cs' -exec grep -l -w -e 'lock' '{}' \; | wc" shows 35 files with lock (){}. Of course, not yet counting the [MethodImplAttribute(MethodImplOptions.InternalCall)] methods that have to be redone as well.
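As a rough CPU-side illustration of the kind of rewrite described above, here is a counter whose update would normally sit inside a `lock () {}` block, redone as a compare-and-swap retry loop. `LockFreeCounter` is a made-up name for this sketch and is not code from Campy or System.Private.CoreLib; on a GPU the same idea would map to the device's atomic CAS instruction rather than `Interlocked`.

```csharp
using System.Threading;

public sealed class LockFreeCounter
{
    private int _value;

    // Reads the current value without taking a lock.
    public int Value => Volatile.Read(ref _value);

    // Adds `delta` using a CAS retry loop instead of lock () {}.
    public int Add(int delta)
    {
        while (true)
        {
            int seen = Volatile.Read(ref _value);
            int next = seen + delta;

            // Publish `next` only if no other thread changed _value since
            // it was read; otherwise retry with the fresh value.
            if (Interlocked.CompareExchange(ref _value, next, seen) == seen)
                return next;
        }
    }
}
```

No thread ever waits while holding something another thread needs, which is the property that matters when threads in the same warp execute in lockstep. For a plain counter `Interlocked.Add` would do; the explicit loop is shown because it generalizes to updates that have no dedicated atomic primitive.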

Similar to @kaby76, my personal reason for being interested in a managed JIT or GC is to see if we can accelerate them with FPGAs, ultimately creating a "JIT processor".

To answer this question, we should think about why C++ was chosen to write the JIT and GC in the first place, rather than C# or another language of the .NET platform (hereinafter: CLR-Langs). This may be because CLR-Langs lack the low-level description and interaction capabilities of C++.

I believe that switching to CLR-Langs for GC and JIT development would go a long way toward making up for many of the deficiencies of CLR-Langs in this regard. Some of the IL-level hacks in use today could be upgraded and even become features of CLR-Langs.

Once the JIT and GC have been converted, many native-level functions and technologies will also become more flexible. For example, CoreRT (or even il2c/il2cpp) and native compilation in general would become more convenient.

As with the open-sourcing of .NET, we should deal with these problems earlier rather than always postponing them. This has to be done sooner or later, so why not now?

Maybe it's time for CLR-Langs to mature, and for C# to become a multi-paradigm, multi-purpose language with more low-level affinity.

Just wanted to mention that the MOSA-Project has one but I guess that would not work because the internals are too different. But it shows that it is possible in the end and also gives good results.

MOSA is an open source software project that natively executes .NET applications within a virtual hypervisor or on bare metal hardware!

The MOSA project consists of:

- Compiler - a high quality, multithreaded, cross-platform, optimizing .NET compiler

- Kernel - a small, micro-kernel operating system

- Device Drivers Framework - a modular, device drivers framework and device drivers

- Debugger - QEMU-based debugger

.NET 6 was released back in November, and added AOT compilation. I wonder if it'd be possible to have an AOT extension of sorts that would convert P/Invoke calls into native C calls. Then, the C# runtime could use calls to malloc instead of new.

@colejohnson66, currently there is a broader issue with inlining of {reverse} P/Invoke calls in the JIT: https://github.com/dotnet/runtime/issues/54107; the codegen is suboptimal around those edges for all modes (jit, aot, r2r). It is best to use them sparingly and avoid them in hot paths (especially reverse P/Invoke, i.e. methods marked with [UnmanagedCallersOnly]).

@am11 Well, we wouldn't be able to GC as we'd be the runtime. Hence, my suggestion of P/Invoke for (T*)malloc(...) and free(obj). Then the AOT code generator would see a P/Invoke to malloc and inline the invocation to a C-style call.

@am11 Obviously parts of the runtime are included. I'm wondering if it'd be possible to have an AOT compiler that wouldn't bundle the runtime, just startup code. More simply, is it even possible to write a C# application that requires no runtime (like Rust with nostd)?

Currently, (I think) all JIT optimizations are disabled for P/Invokes.

That's not correct. P/Invokes are optimized just like any other calls. The only difference is that P/Invokes have an extra "PInvoke transition" code around the call. This transition is by design and cannot be optimized out.

We have a few issues tracking potential optimizations of these transitions, like https://github.com/dotnet/runtime/issues/54107 that would kick in when there are multiple PInvokes next to each other. The situations when optimizations like this would kick in are likely to be rare and won't move the needle much.

The situations when optimizations like this would kick in are likely to be rare and won't move the needle much.

I think this is a bit dependent. While direct back-to-back P/Invokes are somewhat rare, there are many scenarios where there are back to back P/Invokes with only minimal checking or error handling around them. Even in WinForms/WPF and especially with the move away from the built-in marshaller there exists a lot of code in the form of the following or where there are other minimal checks or non GC interacting logic:

ThrowIfFailed(SomePInvoke1());
ThrowIfFailed(SomePInvoke2());

Today these transitions can only be minimized by creating your own C library that wraps the logic and invoking into that. However, creating your own C library comes with many complexities and issues, as does compiling it, shipping it, dealing with scenarios where the code can't be as "ideal" as what the JIT generates, etc. So ideally the JIT could be a bit smarter here and avoid the transitions when only blittable logic and handling is used. Things like new, fixed, and other code that interacts with the GC would prevent this, but if you're just checking a result and throwing in the cold path, it wouldn't be unreasonable for the JIT to elide the transition between PInvoke1 and PInvoke2 and for the cold path to have a transition "back" in the exceptional failure case. There are alternatives here as well, such as having a way for devs to write an UnmanagedCallersOnly function which is "purely unmanaged" and avoids the transition back.
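For readers unfamiliar with the pattern, here is a minimal sketch of what a `ThrowIfFailed` helper usually looks like. The two `SomePInvoke` methods are hypothetical managed stand-ins (a real version would be `[DllImport]` declarations returning an HRESULT), and the class name is made up; only `Marshal.ThrowExceptionForHR` is a real API.

```csharp
using System;
using System.Runtime.InteropServices;

public static class HResultSketch
{
    // Hot path: a single sign check with no GC interaction.
    // Cold path: convert the failing HRESULT into a managed exception.
    public static void ThrowIfFailed(int hr)
    {
        if (hr < 0)
            Marshal.ThrowExceptionForHR(hr);
    }

    // Hypothetical stand-ins for real P/Invokes that return an HRESULT.
    public static int SomePInvoke1() => 0;                           // S_OK
    public static int SomePInvoke2() => unchecked((int)0x80004005);  // E_FAIL
}
```

Between the two calls the managed code does nothing but compare an integer against zero, which is the situation where skipping the intermediate transition would be attractive.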

Is it possible?