The thought occurred to me that PerfView is just the tool for tracking down the heap allocations. Indeed, here are the relevant allocating stacks:

| Name | Inc % |
|---|---|
| \|\| + ntdll!RtlpAllocateHeapInternal | 98.6 |
| \|\| + coreclr!EEHeapAllocInProcessHeap | 98.6 |
| \|\| + coreclr!operator new[] | 50.2 |
| \|\| \|+ coreclr!EventPipeEvent::EventPipeEvent | 50.2 |
| \|\| \|\|+ coreclr!EventPipeProvider::AddEvent | 50.2 |
| \|\| \|\| + coreclr!EventPipeInternal::DefineEvent | 50.2 |
| \|\| \|\| + system.private.corelib!IEventProvider.DefineEventHandle | 50.2 |
| \|\| \|\| + system.private.corelib!NameInfo.GetOrCreateEventHandle | 50.2 |
| \|\| \|\| + system.private.corelib!EventSource.WriteImpl | 50.2 |
| \|\| \|\| + ?!? | 50.2 |
| \|\| \|+ coreclr!CStructArray::Grow | 0.0 |
| \|\| + coreclr!operator new | 48.4 |
| \|\| + coreclr!EventPipeProvider::AddEvent | 48.4 |
| \|\| + coreclr!EventPipeInternal::DefineEvent | 33.8 |
| \|\| \|+ system.private.corelib!IEventProvider.DefineEventHandle | 33.8 |
| \|\| \| + system.private.corelib!NameInfo.GetOrCreateEventHandle | 33.8 |
| \|\| \| + system.private.corelib!EventSource.WriteImpl | 33.8 |
| \|\| \| + ?!? | 33.8 |
| \|\| + coreclr!EventPipeProvider::AddEvent | 14.6 |
| \|\| + coreclr!EventPipeInternal::DefineEvent | 14.6 |
| \|\| + system.private.corelib!IEventProvider.DefineEventHandle | 14.6 |
| \|\| + system.private.corelib!NameInfo.GetOrCreateEventHandle | 14.6 |
| \|\| + system.private.corelib!EventSource.WriteImpl | 14.6 |
| \|\| + ?!? | 14.6 |

Reported originally at https://github.com/Microsoft/ApplicationInsights-dotnet/issues/1102

Repros on .NET Core 2.2, x86 and x64 on Windows.

Build the following application:
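The listing itself is missing at this point. As a stand-in, here is a minimal sketch of what such an app might look like. It is an assumption, not the original code: the provider name `PSH-LeakTest` comes from the PerfView provider string used in this report, and the self-describing `EventSource.Write` call is guessed from the `EventSource.WriteImpl` and `NameInfo.GetOrCreateEventHandle` frames in the allocation stacks. The event name `TestEvent` and the `Counter` payload are hypothetical.

```csharp
using System;
using System.Diagnostics.Tracing;

internal static class Program
{
    private static void Main()
    {
        // Assumption: a dynamic (self-describing) EventSource whose name matches
        // the "PSH-LeakTest" provider enabled from PerfView.
        using (var eventSource = new EventSource("PSH-LeakTest"))
        {
            // Informational level, matching the "PSH-LeakTest::Informational" provider spec.
            var options = new EventSourceOptions { Level = EventLevel.Informational };

            long counter = 0;
            while (true)
            {
                // Hypothetical event name and payload; any small anonymous type
                // goes through the same self-describing Write path.
                eventSource.Write("TestEvent", options, new { Counter = counter++ });
            }
        }
    }
}
```

The loop is deliberately tight and never exits, so the process has to be stopped manually (Ctrl+C) once the memory growth has been observed.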

Run it on Windows and watch the memory usage of dotnet.exe in Task Manager. It settles down on my machine around 10 MB.

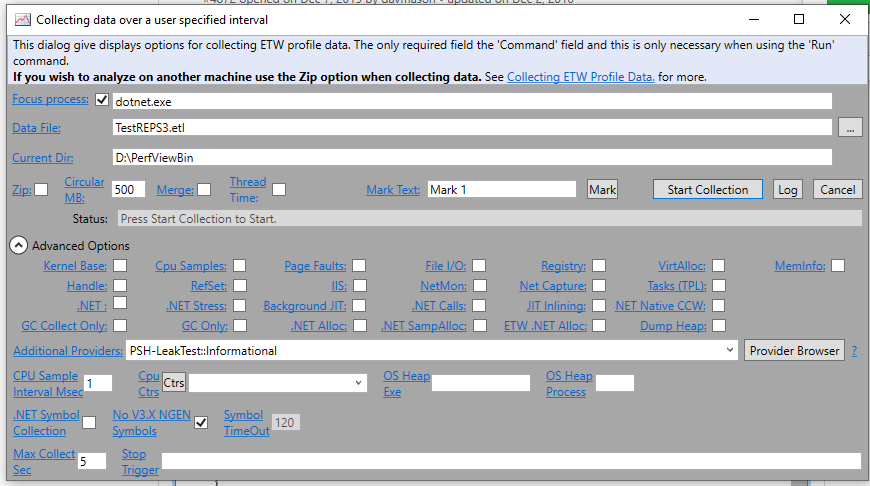

Now enable the ETW event listener for the event source. The easiest way to do that is to run PerfView. Press Alt+C to start a collection and make it look like this:

The important thing is to add this in Additional Providers:

PSH-LeakTest::Informational

You can turn off all the other options. Now, when you start logging, watch the memory usage in dotnet.exe climb like crazy (over 200 MB in 5 seconds). When you stop logging, the memory isn't freed. If you create a dump of the dotnet.exe process, the GC heap is fine. Most of the additional memory is in the native heap, and it seems to consist of the event manifests repeated over and over again.

This does not reproduce on .NET 4.7.2. I don't know about earlier .NET Core runtimes.

Please let me know if you need any more information, but I think this is a pretty minimal repro with clear instructions. One thing I could do is eliminate PerfView by writing a custom app based around TraceEvent or even a WPR profile.
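For reference, a PerfView-free listener based on TraceEvent could be sketched roughly as follows. This is an untested sketch under assumptions: it relies on the `Microsoft.Diagnostics.Tracing.TraceEvent` NuGet package, the session name `PSH-LeakTest-Session` is hypothetical, and the process must run elevated to create a real-time ETW session.

```csharp
using System;
using Microsoft.Diagnostics.Tracing;
using Microsoft.Diagnostics.Tracing.Session;

internal static class Listener
{
    private static void Main()
    {
        // Hypothetical session name; creating an ETW session requires elevation.
        using (var session = new TraceEventSession("PSH-LeakTest-Session"))
        {
            // Stop the session on Ctrl+C so that Process() below returns.
            Console.CancelKeyPress += (sender, e) =>
            {
                e.Cancel = true;
                session.Dispose();
            };

            // EventSource provider GUIDs are derived from the provider name.
            Guid providerGuid = TraceEventProviders.GetEventSourceGuidFromName("PSH-LeakTest");
            session.EnableProvider(providerGuid, TraceEventLevel.Informational);

            // Count events only; the leak is expected in the traced process,
            // so the payload does not need to be inspected here.
            long count = 0;
            session.Source.Dynamic.All += data => count++;

            Console.WriteLine("Listening to PSH-LeakTest. Press Ctrl+C to stop.");
            session.Source.Process(); // Blocks until the session is stopped.
            Console.WriteLine("Received " + count + " events.");
        }
    }
}
```

Enabling the provider this way should play the same role as the PSH-LeakTest::Informational entry in PerfView's Additional Providers, so the memory of the traced dotnet.exe can be watched in Task Manager as before.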

To be clear about how this affects Application Insights customers: the Application Insights Profiler runs in Azure App Services. It captures ETW sessions for 2 minutes at a time, about once an hour. One of the providers it enables (listens to) is the Application Insights "Rich Payload Event Source" (see the original bug in the Application Insights repo). Customers are now encountering these memory leaks under load while profiling is running, leading to application restarts.