Add option to provide custom start/stop hooks

👍

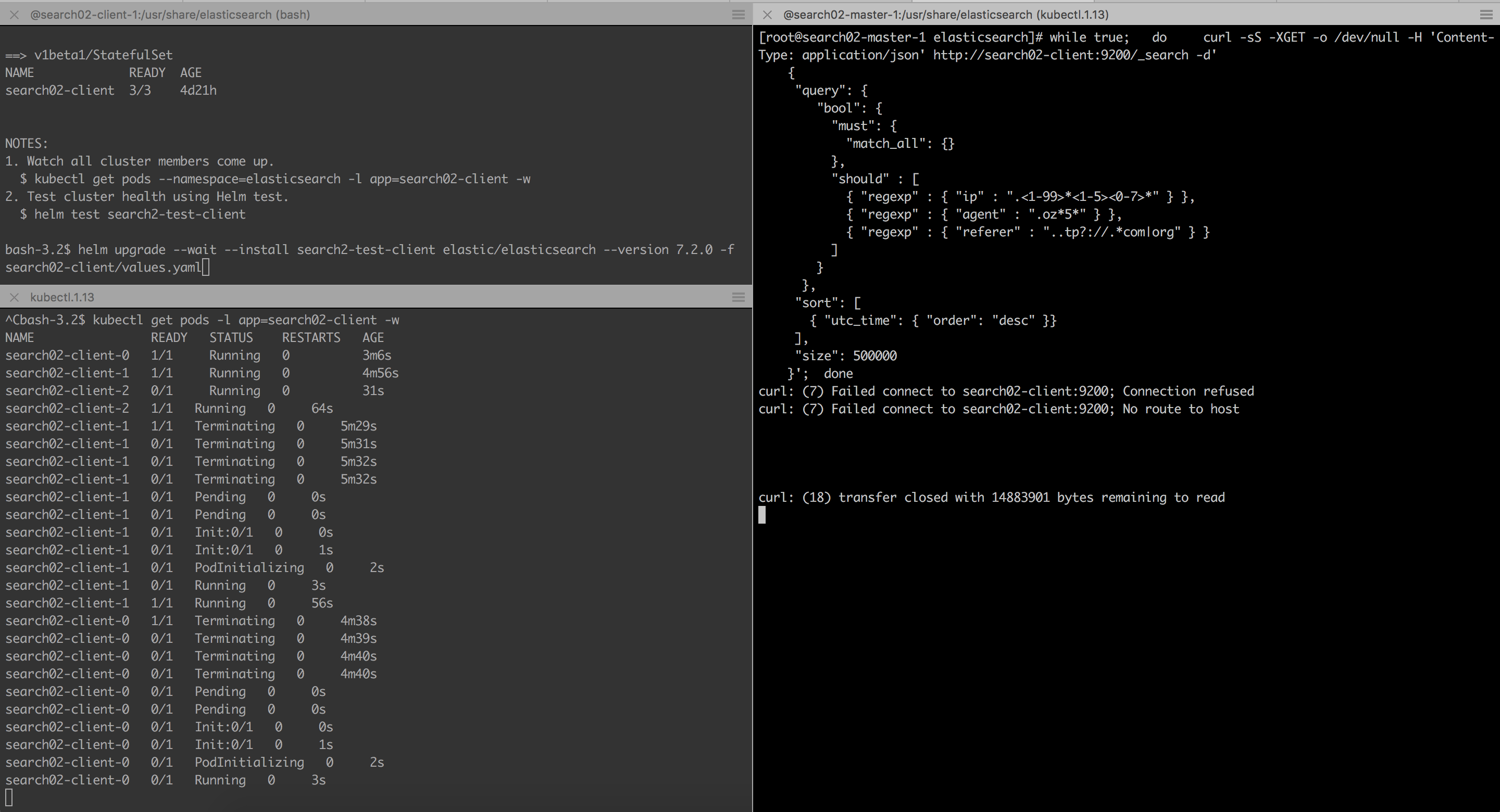

For coordination nodes, I want to add pre-stop hook to wait before sending SIGTERM to complete already open connections. Otherwise, during the rolling restart client pods will be killed and all active connection will be dropped.

Is Elasticsearch really not waiting for these connections to be completed? If the connections are really long running then it is possible you might need to increase the terminationGracePeriod to make sure that Kubernetes doesn't kill the pod too early.

What would you be adding as a preStop hook to prevent this behavior? I'm interested since it sounds like something that maybe should be added into the helm chart by default. Or that it needs to also be fixed in Elasticsearch itself too.

Describe the feature: Add option to provide custom start/stop hooks

Describe a specific use case for the feature: For coordination nodes, I want to add pre-stop hook to wait before sending SIGTERM to complete already open connections. Otherwise, during the rolling restart client pods will be killed and all active connection will be dropped.