Tyler, really nice, succinct discussion here. You all are much better at this than I am, but I have a couple of questions. Overall, this seems very helpful and clean. I'm thinking about it from an unusual vantage point, though.

Let me give you a little background:

I. A nested example

I've done a lot of work putting a LinedCodeNode together. One of the lessons is that building nested text layouts in Lexical is very hard. The Override API made this a palatable endeavor. I had the node working before the Override API was available, and you would have hated it: it required a lot of command overrides.

Even after all that work, I still have trouble conceptualizing nested Lexical layouts.

Take importJSON/exportJSON.

Say you have a top-level code node. Its immediate children are divs, which are lines, and its grandchildren are spans (text/code). Which node should control import and export?

- Should the code node handle all its children?
- Or should each div/line handle itself?

I settled on having the top-level node (the shadowRoot, or code) control everything. In the abstract, I really liked the notion of each nested node controlling its own destiny, but, unsurprisingly, in practice this caused one of my eyes to pulse.
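To make the "top-level node controls everything" arrangement concrete, here is a minimal sketch. It assumes plain stand-in classes (`LinedCode`, `CodeLine`, `CodeSpan`, and the `SerializedLinedCode` shape are all hypothetical, not Lexical classes) and shows the outer node serializing and rebuilding its whole subtree, so lines and spans never need their own import/export.

```typescript
// Hypothetical stand-ins for the nested nodes; these are NOT Lexical classes.
type SpanKind = "text" | "code";

// Hypothetical serialized shape owned entirely by the top-level node.
interface SerializedLinedCode {
  type: "lined-code";
  version: 1;
  lines: { text: string; kind: SpanKind }[][];
}

class CodeSpan {
  constructor(public text: string, public kind: SpanKind) {}
}

class CodeLine {
  constructor(public spans: CodeSpan[] = []) {}
}

class LinedCode {
  constructor(public lines: CodeLine[] = []) {}

  // The top-level node walks its entire subtree in one pass.
  exportJSON(): SerializedLinedCode {
    return {
      type: "lined-code",
      version: 1,
      lines: this.lines.map((line) =>
        line.spans.map((span) => ({ text: span.text, kind: span.kind })),
      ),
    };
  }

  // Likewise, it rebuilds every line and span itself.
  static importJSON(json: SerializedLinedCode): LinedCode {
    return new LinedCode(
      json.lines.map(
        (line) => new CodeLine(line.map((s) => new CodeSpan(s.text, s.kind))),
      ),
    );
  }
}

const original = new LinedCode([
  new CodeLine([new CodeSpan("const", "code"), new CodeSpan(" x = 1;", "text")]),
  new CodeLine([new CodeSpan("// done", "text")]),
]);
const roundTripped = LinedCode.importJSON(original.exportJSON());
console.log(
  JSON.stringify(roundTripped.exportJSON()) === JSON.stringify(original.exportJSON()),
); // prints true
```

The trade-off is the one described above: a single owner keeps the serialized shape in one place, at the cost of the children no longer "minding their own destiny."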

I bet you can imagine some of my questions now:

II. Whose destiny?

What kind of node gets a schema: ElementNode, TextNode, or both? And which node wins if the developer has created a nested structure? In some ways, your example shows how I, myself, can become instantly confused.

Your example is of a TableRowNode. What about the TableNode, though? What do I do with that? I see that one of the cited "relations" is parent, so am I checking the schema of the whole Table from the TableRow?

Or are you saying each node checks its parents and children against a schema because every node has to mind its own destiny? That's great, I guess... but then what do I do with normalization? Do I handle it on the parent or the child... or the sibling?

And what happens when two normalization functions produce results that conflict with each other in a nested structure?
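One way to picture the conflict: if normalizers are re-run until nothing changes (a fixed point), two rules that undo each other never settle. The sketch below is illustrative only; `normalizeUntilStable`, the string-based child model, and both rules are hypothetical, and the second rule is deliberately contrived to clash with the first. It detects the clash by noticing a repeated state.

```typescript
// A normalizer returns a fixed-up copy of the children, or null when it has nothing to change.
type Normalizer = (children: readonly string[]) => string[] | null;

interface NormalizeResult {
  children: string[];
  converged: boolean; // false means the rules kept undoing each other
}

// Re-run every normalizer until none changes anything, stopping early
// if a state repeats (a cycle) or the step budget runs out.
function normalizeUntilStable(
  initial: readonly string[],
  normalizers: readonly Normalizer[],
  maxSteps = 100,
): NormalizeResult {
  let children = [...initial];
  const seen = new Set<string>([children.join(",")]);
  let steps = 0;
  for (;;) {
    let changed = false;
    for (const normalize of normalizers) {
      const next = normalize(children);
      if (next === null) continue;
      children = next;
      changed = true;
      steps += 1;
      const key = children.join(",");
      if (seen.has(key) || steps >= maxSteps) return { children, converged: false };
      seen.add(key);
    }
    if (!changed) return { children, converged: true };
  }
}

// Parent-side rule (hypothetical): every child of a code node must be a line.
const parentWantsLines: Normalizer = (children) =>
  children.includes("span") ? children.map((c) => (c === "span" ? "line" : c)) : null;

// Child-side rule (hypothetical, deliberately contrived): a line unwraps itself back into a span.
const lineWantsToBeSpan: Normalizer = (children) =>
  children.includes("line") ? children.map((c) => (c === "line" ? "span" : c)) : null;

const alone = normalizeUntilStable(["span"], [parentWantsLines]);
const clash = normalizeUntilStable(["span"], [parentWantsLines, lineWantsToBeSpan]);
```

With only the parent rule, `alone` settles on `["line"]`; with both rules, `clash` reports `converged: false`. Cycle detection only reports the conflict; it does not decide which node's rule should win.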

III. A relative dash

When do the schemas run? On creation? Before or after .append(), .splice(), etc.?

This seems like a dangerously abstract question, so let me work through an example instead.

When creating the LinedCodeNode, I kick everything off with a $createLinedCodeNode() function. Then, later, I create lines via a $createLinedCodeLineNode() function.

They may be appended or spliced into place, depending. That's all fine.

In the context of a schema, though, I'm not sure what happens when I run either of these functions.

In the case of the top-level node (LinedCodeNode), the lines don't exist yet. Will the schema method run after I've assembled enough of the parts to make it happy? Or will it run too soon and object, even though I'm not done yet?

Same question with the lines. They start out on their own, without a parent. Then they're appended or spliced into place. Will the schema method run after they've been assembled? Or will it run before that happens and complain?
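The timing question can be made concrete with a toy model. This sketch assumes a hypothetical `ToyNode` tree and a hypothetical `validate` check (neither is Lexical API) and runs the same build sequence, create the code node, create a line, then append, under two policies: validating after every mutation ("eager") versus once at the end of the update ("deferred"). It says nothing about which policy the proposal intends.

```typescript
// Hypothetical toy tree; not Lexical's node classes.
class ToyNode {
  parent: ToyNode | null = null;
  children: ToyNode[] = [];
  constructor(public readonly type: string) {}
}

interface BuildContext {
  create(type: string): ToyNode;
  append(parent: ToyNode, child: ToyNode): void;
}

// Hypothetical schema check: returns the problems found on one node.
function validate(node: ToyNode): string[] {
  const problems: string[] = [];
  if (node.type === "lined-code" && node.children.length === 0) {
    problems.push("lined-code has no lines");
  }
  if (node.type === "line" && node.parent?.type !== "lined-code") {
    problems.push("line is not inside lined-code");
  }
  return problems;
}

// Runs `build`, validating after each mutation (eager) or once at the end (deferred).
function runUpdate(mode: "eager" | "deferred", build: (ctx: BuildContext) => void): string[] {
  const warnings: string[] = [];
  const touched = new Set<ToyNode>();
  const check = (nodes: Iterable<ToyNode>) => {
    for (const n of nodes) warnings.push(...validate(n));
  };
  const ctx: BuildContext = {
    create(type) {
      const node = new ToyNode(type);
      touched.add(node);
      if (mode === "eager") check([node]);
      return node;
    },
    append(parent, child) {
      child.parent = parent;
      parent.children.push(child);
      touched.add(parent);
      touched.add(child);
      if (mode === "eager") check([parent, child]);
    },
  };
  build(ctx);
  if (mode === "deferred") check(touched);
  return warnings;
}

// Mirrors $createLinedCodeNode(), then $createLinedCodeLineNode(), then append.
const buildLinedCode = (ctx: BuildContext) => {
  const code = ctx.create("lined-code");
  const line = ctx.create("line");
  ctx.append(code, line);
};

const eagerWarnings = runUpdate("eager", buildLinedCode); // 2 warnings on intermediate states
const deferredWarnings = runUpdate("deferred", buildLinedCode); // no warnings
```

Eager checking objects to both half-built states (an empty code node, a parentless line), while deferred checking only sees the finished tree.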

The answer may be plainly obvious and everything will be great. Probably best to ask now, though.

IV. Let's drive...

So my last thought is a rumination.

When I first heard you all mention schemas, I thought it might be a way to more easily assemble nested structures.

In this fantasy land, I imagined the schema would be somewhat akin to importJSON or importDOM. Basically, I thought it might contain a "node layout" (a "map") representing the final nested structure. You'd pass it the data needed to assemble that structure, and it would follow your map, build your nodes, and update the selection.
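A rough sketch of that "map" idea, assuming a hypothetical `LayoutNode` description and a hypothetical `buildFromLayout` builder (neither exists in Lexical). The layout declares each level's node type and how to derive its children's data; the builder follows it recursively. Selection handling, which the idea also mentions, is left out.

```typescript
// Hypothetical declarative "node layout": one entry per nesting level.
interface LayoutNode {
  type: string;
  // Derive the data for each child from this node's data.
  childrenFrom?: (data: unknown) => unknown[];
  child?: LayoutNode;
  // Leaf levels turn their data into text.
  textFrom?: (data: unknown) => string;
}

// Plain output tree standing in for real nodes.
interface BuiltNode {
  type: string;
  text?: string;
  children: BuiltNode[];
}

// Follow the layout top-down, building one node per piece of data.
function buildFromLayout(layout: LayoutNode, data: unknown): BuiltNode {
  const node: BuiltNode = { type: layout.type, children: [] };
  if (layout.textFrom) node.text = layout.textFrom(data);
  const { child, childrenFrom } = layout;
  if (child && childrenFrom) {
    node.children = childrenFrom(data).map((d) => buildFromLayout(child, d));
  }
  return node;
}

// Code > lines > text spans, described once.
const linedCodeLayout: LayoutNode = {
  type: "lined-code",
  childrenFrom: (source) => String(source).split("\n"),
  child: {
    type: "line",
    childrenFrom: (line) => [line],
    child: { type: "code-text", textFrom: (text) => String(text) },
  },
};

const tree = buildFromLayout(linedCodeLayout, "let a = 1;\nlet b = 2;");
```

Here `tree` is a `lined-code` node with two `line` children, each holding one `code-text` leaf. The layout doubles as a description of what a valid tree looks like, which is where it would overlap with a rule-enforcing schema.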

Ish. I'm not half as good a coder or designer of such things as you all are, but I do sorta wonder if the schema could be more of an active participant in the creation of nodes than an enforcer of rules.

If the former, developers could leverage it to make more predictable and complex nodes. If the latter, is it mostly a development aid, so we can always console.log our way through it? Maybe you all have other plans for this.

Again, I figured now was a good time to ask.

-j

Lexical Schemas

The Problem

A lot of mistakes with Lexical are caused by developers adding nodes where they shouldn’t.

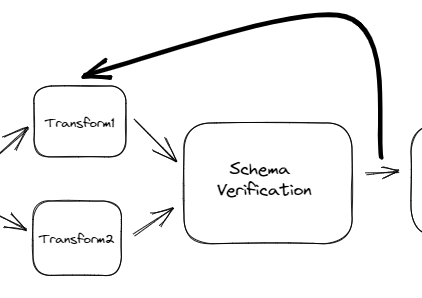

Schemas are a proposed API designed to add guardrails around the insertion of nodes. They’ll check whether a given relationship (parent, child, sibling) is valid and, where possible, let you normalize it.

Slate.js solves this elegantly with its Schema (https://docs.slatejs.org/v/v0.47/guides/schemas) API, which developers find intuitive, so it makes sense to propose a similar API that’s familiar to users of Slate and other rich text solutions.

The Solution

We’ll stick with our current approach of encapsulating node-related logic on the class itself by introducing a new static method, getSchema, which returns a Lexical Schema.

The schema’s relation methods will be called at different points within the core insertion logic. The aim is to remove the many case-specific patches and node methods throughout the codebase while catching bugs before they hit production.

If any validation fails, the developer will get a warning in the console, and we’ll provide a normalize method that acts as an escape hatch and lets you fix the issue.
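A minimal sketch of how insertion logic might consult a static `getSchema`, warn, and fall back to `normalize`. Only the `getSchema` name and the parent/child relations come from the proposal; the `Schema` field names, `ToyNode`, `append`, `schemaOf`, and the three node classes are hypothetical stand-ins (reusing the lined-code example from the discussion), and sibling checks are omitted for brevity.

```typescript
// Hypothetical schema shape; field names are illustrative, not a shipped Lexical API.
interface Schema {
  parent?: (parent: ToyNode) => boolean;
  child?: (child: ToyNode) => boolean;
  normalize?: (node: ToyNode, parent: ToyNode) => void;
}

// Toy base class standing in for a Lexical node.
class ToyNode {
  parent: ToyNode | null = null;
  children: ToyNode[] = [];
  static getSchema(): Schema {
    return {};
  }
}

class LinedCodeNode extends ToyNode {
  static getSchema(): Schema {
    return { child: (c) => c instanceof LinedCodeLineNode };
  }
}

class LinedCodeLineNode extends ToyNode {
  static getSchema(): Schema {
    return { parent: (p) => p instanceof LinedCodeNode };
  }
}

class TextSpanNode extends ToyNode {
  static getSchema(): Schema {
    return {
      // Escape hatch: instead of rejecting a stray span, wrap it in a new line.
      normalize: (node, parent) => {
        const line = new LinedCodeLineNode();
        parent.children.splice(parent.children.indexOf(node), 1, line);
        line.parent = parent;
        line.children.push(node);
        node.parent = line;
      },
    };
  }
}

const warnings: string[] = [];

// Read the static getSchema() of whatever class the instance belongs to.
function schemaOf(node: ToyNode): Schema {
  return (node.constructor as typeof ToyNode).getSchema();
}

// Sketch of an insertion path that checks both the parent's and the child's schema.
function append(parent: ToyNode, child: ToyNode): void {
  parent.children.push(child);
  child.parent = parent;
  const parentAccepts = schemaOf(parent).child?.(child) ?? true;
  const childAccepts = schemaOf(child).parent?.(parent) ?? true;
  if (parentAccepts && childAccepts) return;
  const message = `${child.constructor.name} is not valid inside ${parent.constructor.name}`;
  warnings.push(message);
  console.warn(message);
  schemaOf(child).normalize?.(child, parent);
}

const code = new LinedCodeNode();
append(code, new LinedCodeLineNode()); // valid: no warning
const span = new TextSpanNode();
append(code, span); // warns, then normalize wraps the span in a line
```

Putting the check on both sides means either node can veto the relationship. Which side's `normalize` runs first is a policy choice this sketch makes arbitrarily (the child's).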

Example

Take the following table row example. A row should always be a child of a table, it should never have a child that isn’t a cell, and it should never have a sibling that isn’t another row. This is expressed almost verbatim in the code below.