Hi,

The origin of the volume is determined by the transformation stored in pose.txt. The example pose.txt I provided is specific to the dry ice dataset, so you'll have to change it if you're using your own data. See my comment here for an explanation of that file: https://github.com/facebookresearch/neuralvolumes/issues/1#issuecomment-591602762

If you know the origin of the scene is [0, 0, 0], then you should be able to just put an identity matrix in pose.txt.
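For illustration, here is a minimal sketch of writing such an identity transform with NumPy. The file layout is an assumption on my part: a whitespace-separated 3x4 matrix with the rotation in the left 3x3 block and the translation in the last column. Check the linked comment for the layout the data loader actually expects. `write_identity_pose` and `load_pose` are hypothetical helper names, not functions from the repository.

```python
import numpy as np


def write_identity_pose(path, rows=3):
    """Write an identity transform (rotation = I, translation = 0) as text.

    Assumed layout: a [R | t] matrix with `rows` rows and 4 columns.
    Use rows=4 if your loader expects a full homogeneous 4x4 matrix.
    """
    if rows not in (3, 4):
        raise ValueError("rows must be 3 or 4")
    pose = np.eye(4, dtype=np.float32)[:rows]
    np.savetxt(path, pose, fmt="%.6f")
    return pose


def load_pose(path):
    """Read the matrix back and split it into rotation and translation."""
    pose = np.genfromtxt(path, dtype=np.float32)
    rotation = pose[:3, :3]
    translation = pose[:3, 3]
    return rotation, translation


if __name__ == "__main__":
    write_identity_pose("pose.txt")
    rotation, translation = load_pose("pose.txt")
    print(rotation)
    print(translation)
```

With an identity rotation and zero translation, the volume should be centered on the world origin of your camera calibration, so any remaining offset would point to the calibration or the volume scale rather than to pose.txt.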

Hi there,

Is the origin of the volume at (0,0,0)?

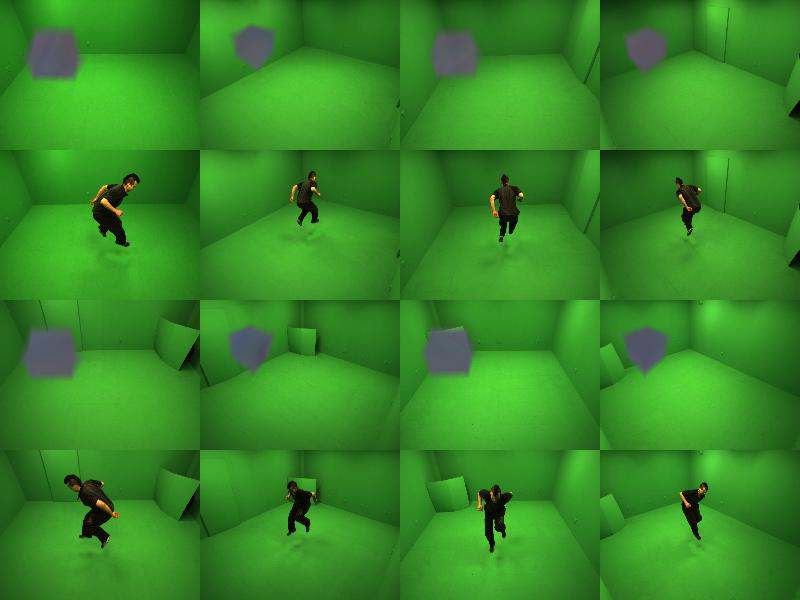

I'm testing the method on a public dataset (http://people.csail.mit.edu/drdaniel/mesh_animation), and I know exactly where (0,0,0) is in the images. But the volume seems to float around the scene. This is the first preview for training process:

The cameras point at the scene from opposite sides, so I would expect the volume to appear on opposite sides in the images as well. But for some reason, it appears on the same side in the images. Can you help?

Thank you.