Hi @Muon2. Thanks for the issue. It's worth pointing out that we don't yet support line search, which makes a direct comparison with Ceres more difficult. In this case, one possibility is that Theseus is jumping around the local optimum because of the large step size. Two easy things to try first are:

- Lowering the step size and increasing the number of iterations in the LM constructor call.

- Passing "adaptive_damping: True" to the `optimizer_kwargs` when you call the `forward()` method.
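To illustrate why these two knobs matter when there is no line search, here is a toy, pure-Python sketch of an LM-style damped Gauss-Newton update on a hypothetical one-parameter problem whose solution is 0.2. This is not Theseus code: the function `toy_lm`, the model `y = exp(x * t)`, the data, and the accept/reject damping rule (scale by 10) are all assumptions chosen for illustration, and the adaptive damping in Theseus may work differently.

```python
import math


def toy_lm(x0, ts, ys, step_size=1.0, max_iterations=50,
           damping=1e-3, adaptive_damping=False):
    """Fit x in y = exp(x * t) with a scalar, LM-style damped update."""

    def cost(x):
        return 0.5 * sum((math.exp(x * t) - y) ** 2 for t, y in zip(ts, ys))

    x = x0
    for _ in range(max_iterations):
        residuals = [math.exp(x * t) - y for t, y in zip(ts, ys)]
        jacobian = [t * math.exp(x * t) for t in ts]
        jtj = sum(j * j for j in jacobian)
        jtr = sum(j * r for j, r in zip(jacobian, residuals))

        # Damped Gauss-Newton direction, scaled by a fixed step size
        # (no line search is used to choose the step length).
        delta = -jtr / (jtj + damping)
        candidate = x + step_size * delta

        if not adaptive_damping:
            # Fixed damping: always take the step.
            x = candidate
        elif cost(candidate) < cost(x):
            # Step reduced the cost: accept it and trust the model more.
            x = candidate
            damping = max(damping / 10.0, 1e-12)
        else:
            # Step did not reduce the cost: reject it and damp harder.
            damping *= 10.0
    return x


# Hypothetical noise-free data generated with a ground truth of x = 0.2.
ts = [1.0, 2.0, 3.0, 4.0]
ys = [math.exp(0.2 * t) for t in ts]

x_default = toy_lm(1.0, ts, ys)
x_small_step = toy_lm(1.0, ts, ys, step_size=0.5, max_iterations=200)
x_adaptive = toy_lm(1.0, ts, ys, adaptive_damping=True)
print(x_default, x_small_step, x_adaptive)
```

In this sketch a smaller step size shrinks every update, so it needs more iterations to reach the same accuracy, which is why the two settings are changed together. The adaptive variant only accepts steps that reduce the cost, which is one way to avoid bouncing around the optimum.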

Also, can you add "verbose: True" and paste here the log outputs you get?

Thanks.

❓ Questions and Help

I'm currently using Ceres with the following options:

While Ceres is giving me exact solutions, I'm encountering issues when using Theseus as it cannot give me correct solutions. Here's the code I'm using with Theseus:

Do you have any ideas on what could be causing this issue? Is it possible that Theseus is not as efficient as Ceres? I cannot share the specific code, but as can be seen in the following pictures, Ceres successfully gives the ground-truth solution of 0.2. I have tried my best to align the optimizer hyperparameters (such as the number of iterations or the numerical precision), but I still only get an approximate solution from Theseus, not the correct one.

Ceres's solution:

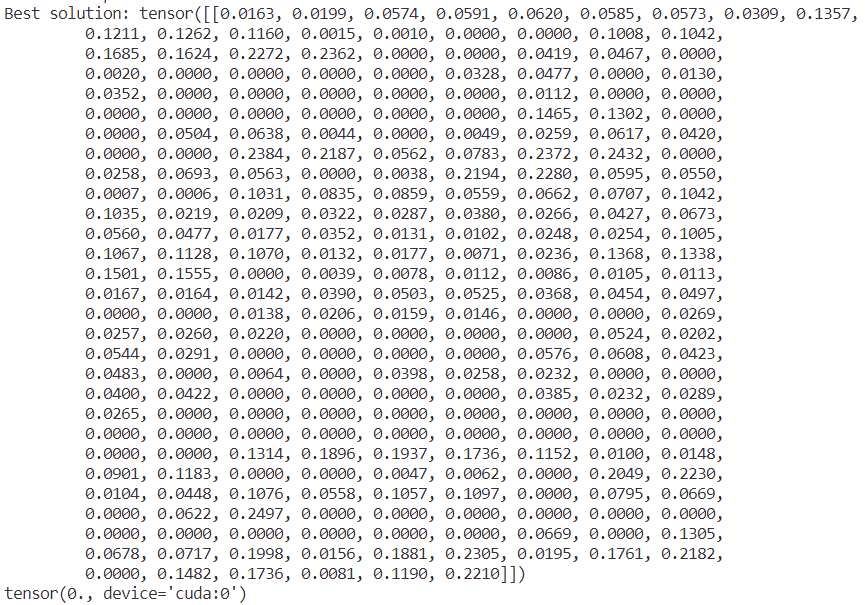

Theseus's solution: