This has reached a reached a very high level of stability and feature-set, it is far better than what is currently in the main branch. Support for some ops is not complete and there are some guards to be added to ensure proper parsing, but these are mostly corner cases that we can address later. So I would propose we merge it in this state and continue with bugfixes as we go. There's significant developments built on top already that I wouldn't like to push as part of this PR.

Refreshed version of https://github.com/fastmachinelearning/hls4ml/pull/723 to leave behind messy git history

Current parsing of pytorch models uses a loop of the

named_modulesof the model (https://github.com/fastmachinelearning/hls4ml/blob/main/hls4ml/converters/pytorch_to_hls.py#L163). This has several disadvantages:forward()method of the modelIn this PR, we propose to fix this by first created a graph representation of the model's

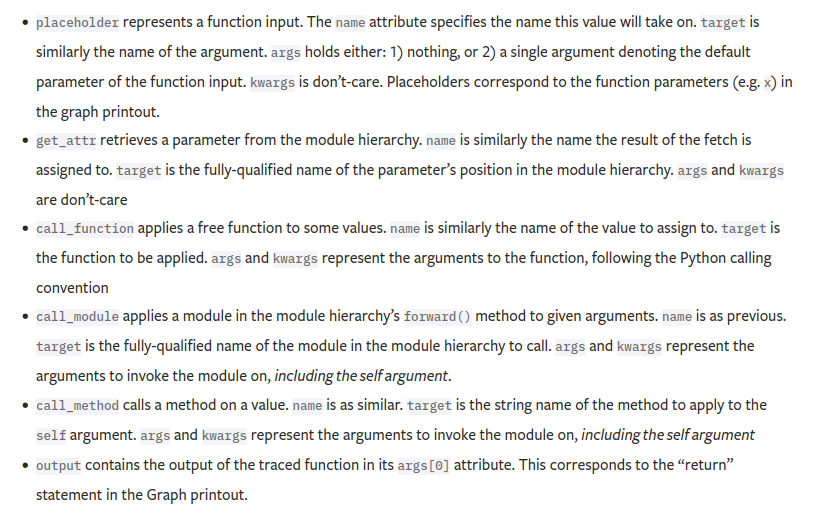

forward()function using the symbolic tracing functionality of https://pytorch.org/docs/stable/fx.html. Each operation in theforward()is represented by a node in the graph. Nodes can be of these types:For example, for this model

the resulting graph representation is

As the nodes in the graph follow the order of operations of the

forward()function, we can then simply loop over them and parse each node into one node in the hls4ml model representation. For the parsing of the individual layers, existing code is used where available without significant changes. Functionality for more types of layers is also added by this PR.The types of layers currently understood by the parser are

This PR also fixes https://github.com/fastmachinelearning/hls4ml/issues/409

Changes are mostly confined to the frontend, but small changes are made to the backend to the templates for pooling layers to add the option that zero-padded entries are included in average pooling operations.

One big difference between pytorch and keras is the data format of the input tensors, which is channels_first by default, instead of the channels_last used by keras. The built-in tools in pytorch to convert a model to channels_last don't work for all dimensions of the input. Therefore the functionality has been added to transpose the inputs within hls4ml so the existing channels_last implementations of layers can be used. By default the inputs are transposed for io_parrallel but not io_stream since we don't have transpose layers for all dimensions in io_stream. The outputs are not transposed by default, but this can be switched on, again only for io_parallel.

Limitations:

Type of change

Tests

The new parsing was tested using 5-6 different pytorch model examples from around the web. In addition, I verified that the two example models for pytroch included with hls4ml get parsed successfully. A test for the API was added in the test/pytest folder, in analogy to the test for the keras parser. All tests pass successfully.

Checklist

pre-commiton the files I edited or added.