Could it be because of missing COOP/COEP headers?

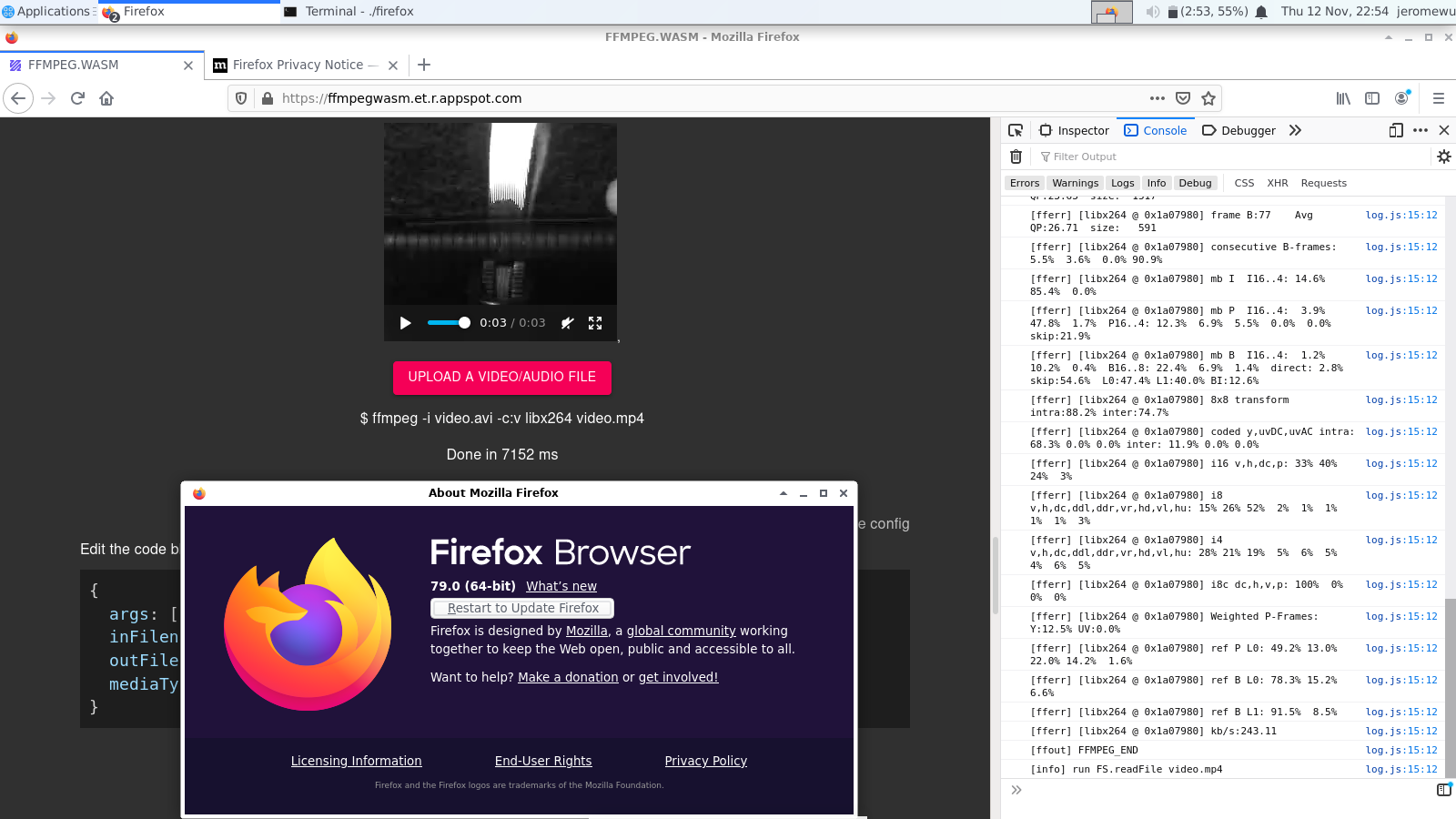

I ran into the same issue with the ffmpeg.wasm demo on its GitHub page. Some casual searching led me to this issue in Bugzilla, which in turn led me to this page on web.dev. The Firefox 79 for developers page that @amn linked says the same thing.

I guess the GitHub page needs to be configured to send the correct headers?
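For anyone who wants to verify this, here is a rough sketch of the check I have in mind. It takes a set of response headers and reports which of the two cross-origin isolation headers are missing or set to a value that would not enable `SharedArrayBuffer`. The helper name `missingIsolationHeaders` and the `REQUIRED_HEADERS` table are my own, purely for illustration; they are not part of ffmpeg.wasm.

```typescript
// Header values that enable cross-origin isolation (lowercased for comparison).
// Assumption: COEP may be "require-corp" or "credentialless"; COOP must be "same-origin".
const REQUIRED_HEADERS: { [name: string]: string[] } = {
  "cross-origin-opener-policy": ["same-origin"],
  "cross-origin-embedder-policy": ["require-corp", "credentialless"],
};

// Hypothetical helper: returns the names of required headers that are
// absent or carry a value that does not enable cross-origin isolation.
function missingIsolationHeaders(headers: { [name: string]: string }): string[] {
  // Header names are case-insensitive, so normalize before comparing.
  const normalized: { [name: string]: string } = {};
  for (const name of Object.keys(headers)) {
    normalized[name.toLowerCase()] = headers[name].trim().toLowerCase();
  }

  return Object.keys(REQUIRED_HEADERS).filter((name) => {
    const value = normalized[name];
    return value === undefined || REQUIRED_HEADERS[name].indexOf(value) === -1;
  });
}
```

If you copy the response headers of the demo page from the network tab into an object and pass it in, an empty result would mean the headers are fine and the problem lies elsewhere. In the page itself, the `crossOriginIsolated` global should also be `true` when both headers are being sent correctly.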

Describe the bug

The front page says:

To Reproduce

Steps to reproduce the behavior:

Expected behavior

As `SharedArrayBuffer` is available in the aforementioned versions of Firefox (under certain conditions), I think it is fair that we don't fall into the "this only works in Chrome" bane of Web applications made today, especially if the application may in fact work in Firefox and the latter only refuses to provide the object for security reasons: https://developer.mozilla.org/en-US/docs/Mozilla/Firefox/Releases/79#JavaScript

Desktop (please complete the following information):