Thanks for your request! Everything looks good. :ok_hand:

A Governance Team member will review the information provided and contact you back pretty soon.

Closed SiGe-Technology closed 1 year ago

Total DataCap requested

5PiB

Expected weekly DataCap usage rate

100TiB

Client address

f1kh7vqg4dcdtkwugc5wgd4vuitixiy7e6ugnalmy

f02049625

f1kh7vqg4dcdtkwugc5wgd4vuitixiy7e6ugnalmy

50TiB

df8e0066-162e-48ab-9689-0bfdd98d8368

Here are your data samples. Can you provide more information about them to prove that you need 5 PiB of DataCap?

Hi,

Thanks for pointing this out. Here are some pictures showing the data we store on the server; the videos in the netbook are just a few samples that we pulled out to show. After all, such large volumes of data are difficult to host on the web.

Any updates?

About KYC & KYB:

1. Could you send an email to filplus-app-review@fil.org? The content should include the number of the LDN application. If possible, please attach copies of the business license and other valid certificates.
2. Can you provide more detailed information about the other storage providers participating in this program? For example, can you list the SPs you have contacted so far?
3. How large is your existing dataset? How much does the data grow per month?

Hi, we apologize for not posting updates recently; our colleague has had a Covid infection and a high fever for several days. Thanks for your understanding!

We’ve just sent the email with business license and our application number.

Our initial plan is to find ten SPs and distribute the acquired data evenly among them. After that, the planned distribution of DataCap will be adjusted according to differences in each SP's packaging speed and size. We are currently most confident about cooperating with the following nodes: f0432773, f01170291, f01032989, f01213126, f01384113, f01270657, f01198966. Our company has also cooperated with Interstellar Storage (Shenzhen) and will continue to communicate with them in this regard.

As the picture in the reply above shows, our company's stored data is about to reach the PB level. We also receive a lot of video data every day, so the data will grow by around 30T to 50T every month.
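As a rough sketch of what these figures imply, the snippet below projects the dataset size and the DataCap needed to store it several times over. It assumes that "about to reach the PB level" means roughly 1 PiB today, reads "30T to 50T" as TiB, and picks a 12-month horizon and 4 replicas purely for illustration; none of these inputs are confirmed in the thread, and the function name `projected_datacap_tib` is made up.

```python
PIB_IN_TIB = 1024  # 1 PiB = 1024 TiB


def projected_datacap_tib(existing_tib, monthly_growth_tib, months, replicas):
    """DataCap (TiB) needed to store the projected dataset `replicas` times."""
    dataset_tib = existing_tib + monthly_growth_tib * months
    return dataset_tib * replicas


# Assumed inputs (not from the thread): ~1 PiB today, 12 months, 4 replicas.
low = projected_datacap_tib(1 * PIB_IN_TIB, 30, 12, 4)
high = projected_datacap_tib(1 * PIB_IN_TIB, 50, 12, 4)
print(f"{low / PIB_IN_TIB:.2f} to {high / PIB_IN_TIB:.2f} PiB")
```

Changing the assumed starting size or replica count moves the result considerably, which is why reviewers ask for these numbers explicitly.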

Your Datacap Allocation Request has been proposed by the Notary

bafy2bzaceba6vyco2xbumrdovtxuw4xt6crrsfdjghaif5ji4tmu7yfsamste

Address

f1kh7vqg4dcdtkwugc5wgd4vuitixiy7e6ugnalmy

Datacap Allocated

50.00TiB

Signer Address

f1mdk7s2vntzm6hu35yuo6vjubtrpfnb2awhgvrri

Id

df8e0066-162e-48ab-9689-0bfdd98d8368

You can check the status of the message here: https://filfox.info/en/message/bafy2bzaceba6vyco2xbumrdovtxuw4xt6crrsfdjghaif5ji4tmu7yfsamste

Your Datacap Allocation Request has been approved by the Notary

bafy2bzacea3i5kkcfpccornowp5g7akhig2v7d45t7yce5vvxahwcoje5p3ti

Address

f1kh7vqg4dcdtkwugc5wgd4vuitixiy7e6ugnalmy

Datacap Allocated

50.00TiB

Signer Address

f1e77zuityhvvw6u2t6tb5qlnsegy2s67qs4lbbbq

Id

df8e0066-162e-48ab-9689-0bfdd98d8368

You can check the status of the message here: https://filfox.info/en/message/bafy2bzacea3i5kkcfpccornowp5g7akhig2v7d45t7yce5vvxahwcoje5p3ti

Hi,

From here -> https://github.com/filecoin-project/filecoin-plus-large-datasets/issues/1306#issuecomment-1350523023

It indicates you need 22 TB of DataCap to store these photos. Replicating them to 10 providers gives 220 TiB of needed DataCap.

@newwebgroup why did you approve this request for 5 PiB, which is around 20 times the amount of data this client needs? @1ane-1 same question?
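The arithmetic behind this objection can be sketched as follows. The snippet treats the 22 TB figure as 22 TiB for simplicity (an assumption; the comment mixes both units) and uses the ten-provider plan stated by the client.

```python
PIB_IN_TIB = 1024  # 1 PiB = 1024 TiB

shown_data_tib = 22             # size visible in the screenshot, treated as TiB here
replicas = 10                   # the client's stated plan of ten providers
requested_tib = 5 * PIB_IN_TIB  # total DataCap requested in the application

needed_tib = shown_data_tib * replicas
ratio = requested_tib / needed_tib
print(f"needed: {needed_tib} TiB, requested: {requested_tib} TiB, ratio: {ratio:.1f}x")
```

Under these assumptions the request is a little over 23 times the demonstrated need, consistent with the "around 20 times" estimate.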

Hello cryptowhizzard, thank you very much for your question. Regarding the above 22T storage image, this image just shows the data stored on one of our servers. Since our company needs to record a lot of videos and pictures every day and this amount will continue to grow afterwards, our company now has almost ten storage servers. This is the reason why we need such a large storage capacity of 5 PiB.

@cryptowhizzard Hello. Because it was the first round, the client had answered my questions, and the conditions for triggering a signature had been met, I chose to approve. If you have more questions about the dataset size, you can keep an eye on it and check it.

Dear Applicant,

Due to the recent increase in erroneous/wrong Filecoin+ data, we feel compelled, on behalf of the entire community, to look more deeply into DataCap requests. We do this to ensure that the overall value of the Filecoin network and the Filecoin+ program increases and that the program is not abused.

Please answer the questions below as comprehensively as possible.

Customer data

- Could you demonstrate exactly how and to what extent customer contact occurred?

We expect that for the onboarding of customers with the scale of an LDN there would have been at least multiple email and perhaps several chat conversations preceding it. A single email with an agreement does not qualify here.

- Did the customer specify the amount of data involved in this relevant correspondence?

- Why does the customer in question want to use the Filecoin+ program?

If this is solely for acquiring DataCap, it is of course out of the question. The customer must have a legitimate reason for wanting to use the Filecoin+ program, which is intended to store useful and public datasets on the network.

- Why is the customer data considered Filecoin+ eligible?

(As an intermediate solution Filecoin offers the FIL-E program or the glif.io website for business datasets that do not meet the requirements for a Filecoin+ dataset)

Files and Processing

- Could you please demonstrate to us how you envision processing and transporting the customer data in question to any location for preparation?

- Would you demonstrate to us that the customer, the preparer and the intended storage providers all have adequate bandwidth to process the set with its corresponding size?

- Would you tell us how the data set preparer takes into account the prevention of duplicates in order to prevent data cap abuse?

Hopefully you understand the caution the overall community has for onboarding the wrong data. We understand the increased need for Filecoin+, however, we must not allow the program to be misused. Everything depends on a valuable and useful network, let's do our best to make this happen. Together.

Hi @herrehesse, sorry for replying so late. We fully understand your concern about erroneous/wrong Filecoin+ data in the community, and we are glad to answer your questions.

As the applicant, I am the customer. With this in mind, I will answer all questions in two parts, Customer data and Files and Processing, according to our situation.

Customer data

SPs in the marketplace approached us and suggested storing our data in a safer and cheaper way, so we are making this DataCap application. After that we started to learn about the FIL+ program and its very high security. Our company's development direction is also web3, and we know the value and necessity of data.

We as a customer don't care what way we do the application. Our paths are guided by the SPs that find us. Our business has been in operation for ten years. There is definitely more valuable data in ten years than what I've shown, and I'm sure you can understand the volume of data that an R&D and service business can generate in ten years.

As the data owner, we can ensure that the data is publicly available. Data that is not publicly retrievable is not part of our storage plan. The nodes I mentioned earlier at https://github.com/filecoin-project/filecoin-plus-large-datasets/issues/1306#issuecomment-1350593419 are ones we are actively trying to engage with. While we can't guarantee that we will work with them, we guarantee that we will follow the rules of Fil+ and find 4+ SPs to work with.

Files and Processing

Like I said before, our business has been in operation for ten years, and the volume of valuable data is very substantial. We also serve dozens of large, well-known companies. We have archived the publicly available data generated in the past for local download. The backed-up data will be sent to different SPs on hard disks (these costs are borne by the SPs). Other transfer methods will be arranged as needed.

Our choice of SPs will of course also take into account their situation, such as their size and the speed at which they can take on data. The data we currently have ready is backed up according to the SPs in different regions. The number of backups is controlled by our company as the client and will not exceed ten.

Really appreciate your due diligence, and this brings us greater confidence in the transparency and security of the program.

@SiGe-Technology thank you so much for your detailed response. Here are some follow up questions:

"After that we started to learn about the FIL+ program and its very high security": may I point out that it is Filecoin that has high security, while the Filecoin+ program is meant for storing humanity's most important information. They are not the same thing. This mistake is often made.

"We as a customer don't care what way we do the application. Our paths are guided by the SPs that find us." You should always care about the application. Business data is not meant to be stored on Filecoin+. Please contact @kevzak and try to start an application for FIL-E.

Since we are still in the first phase of the application, I would like to see how the already granted DataCap is distributed globally. We will also regularly check retrieval and whether the files are intact.

However, my opinion remains, this application is business and therefore for FIL-E.

@herrehesse based on the application information, this is a public data application.

E-Fil+ is a program for Private Data owners. Please let me know if you need more background before promoting the program incorrectly. Thank you.

Hi @kevzak,

Thanks for your response, to my understanding the E in E-FIL (FIL-E) stood for "enterprise" and thus the business applications of datacap. In case I have misunderstood this my apologies for the miscommunication.

I remain of the opinion that many of these applications are out of place when applying for datacap and should be prepared to store their business data with relevant storage providers for a fee. The gift of "datacap" should not be given at will to people who simply ask for it. The relevant data should at least contribute to a more valuable network, not solely for miners to grow with a multiplier.

SiGe Technology, f1kh7vqg4dcdtkwugc5wgd4vuitixiy7e6ugnalmy

Approvers: 1ane-1 (1), newwebgroup (1)

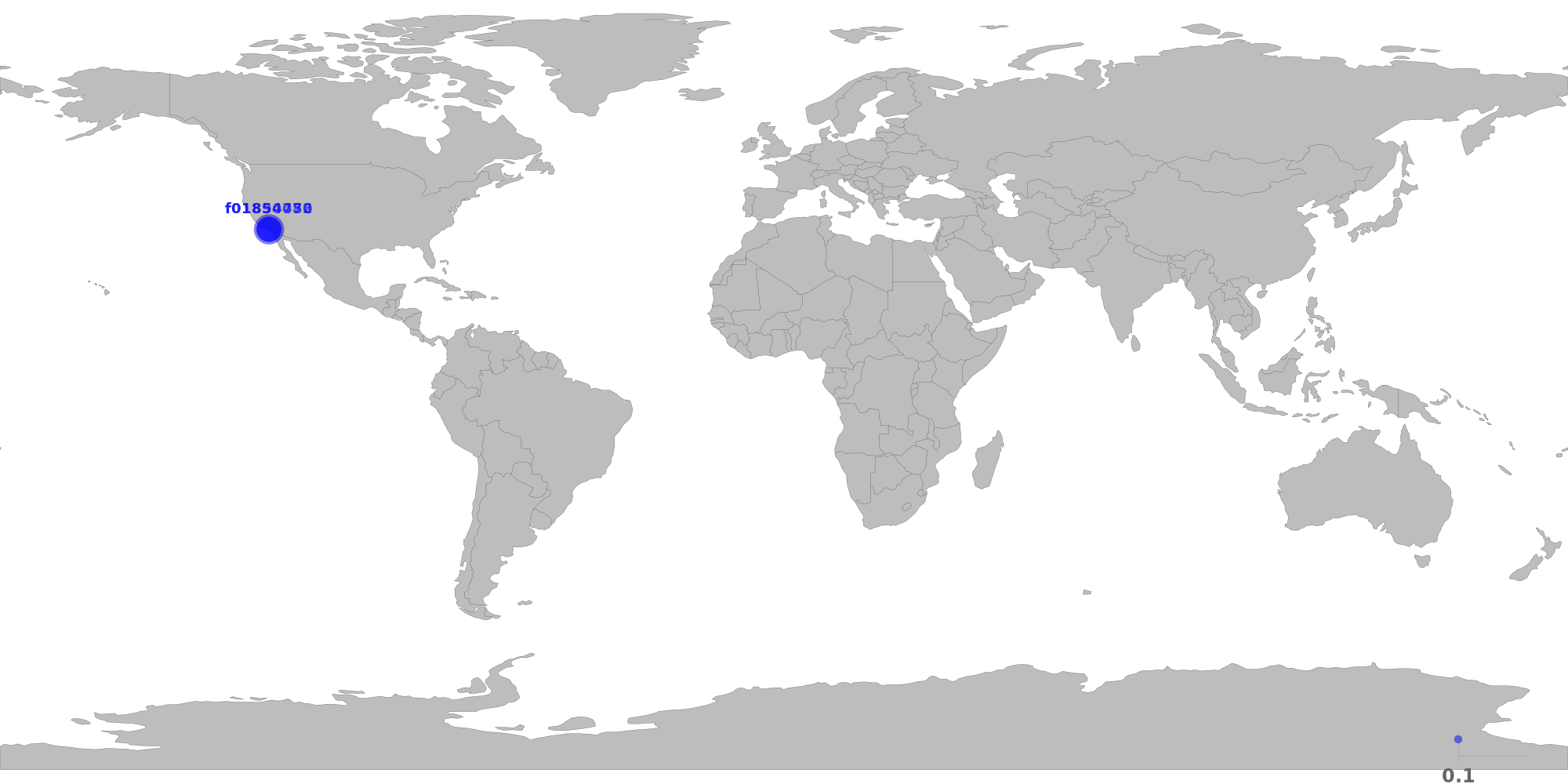

The below table shows the distribution of storage providers that have stored data for this client.

If this is the first time a provider takes verified deal, it will be marked as new.

For most of the datacap application, below restrictions should apply.

Since this is the 3rd allocation, the following restrictions have been relaxed:

⚠️ f01985611 has unknown IP location.

⚠️ f01972110 has unknown IP location.

| Provider | Location | Total Deals Sealed | Percentage | Unique Data | Duplicate Deals |
|---|---|---|---|---|---|
| f01985611 | Unknown (Unknown) | 6.09 TiB | 29.64% | 6.09 TiB | 0.00% |
| f01890456 | Los Angeles, California, US (Zenlayer Inc) | 5.56 TiB | 27.05% | 5.56 TiB | 0.00% |
| f01854772 | Los Angeles, California, US (Zenlayer Inc) | 4.03 TiB | 19.60% | 4.03 TiB | 0.00% |
| f01854080 | Los Angeles, California, US (Zenlayer Inc) | 3.91 TiB | 19.00% | 3.91 TiB | 0.00% |
| f01972110 | Unknown (Unknown) | 992.00 GiB | 4.71% | 992.00 GiB | 0.00% |

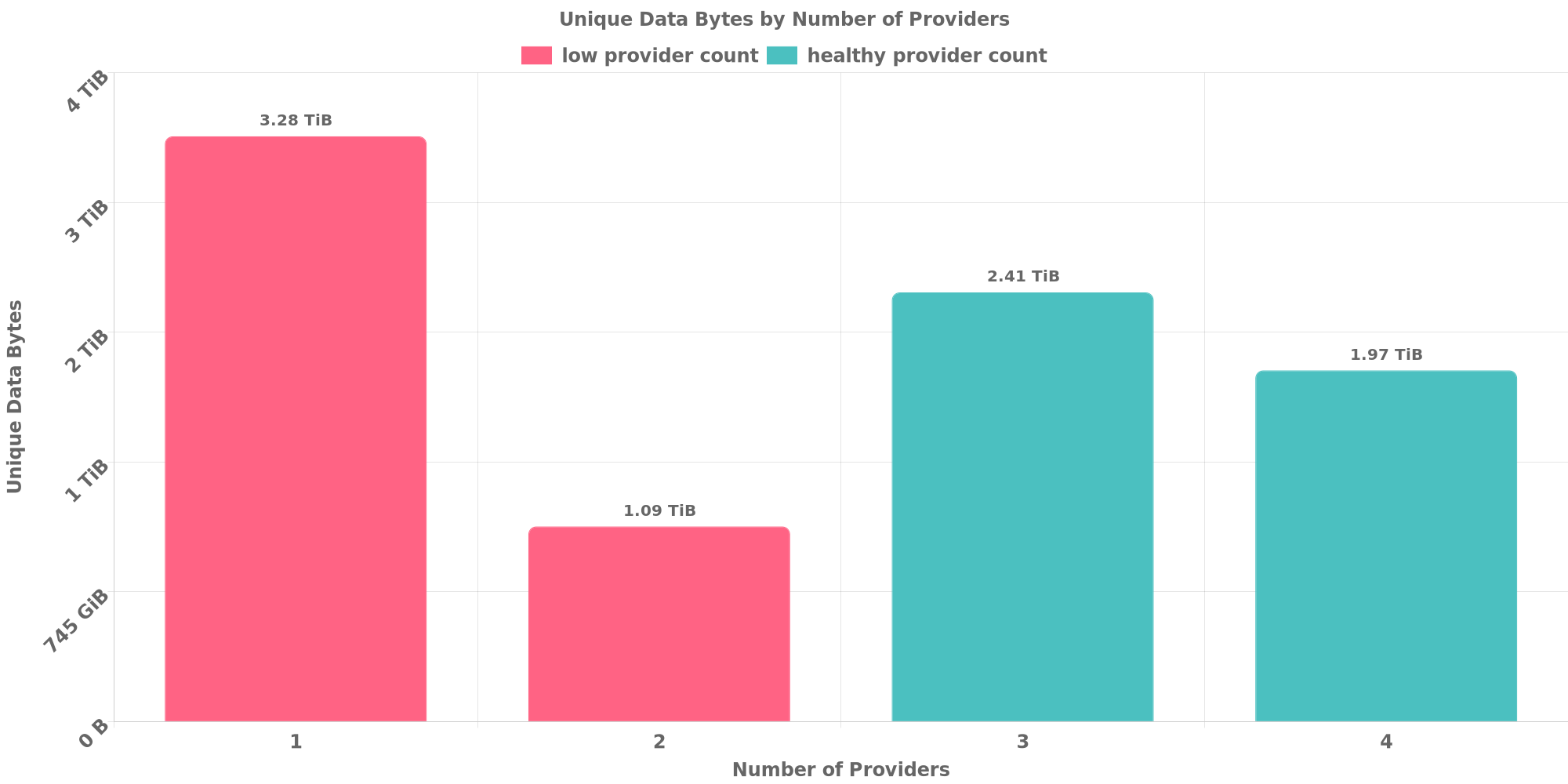

The table below shows how much unique data is replicated across each number of storage providers.

Since this is the 3rd allocation, the following restrictions have been relaxed:

✔️ Data replication looks healthy.

| Unique Data Size | Total Deals Made | Number of Providers | Deal Percentage |
|---|---|---|---|
| 3.28 TiB | 3.28 TiB | 1 | 15.96% |
| 1.09 TiB | 2.19 TiB | 2 | 10.64% |
| 2.41 TiB | 7.22 TiB | 3 | 35.11% |
| 1.97 TiB | 7.88 TiB | 4 | 38.30% |
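The columns of this table appear to be related in a simple way: total deals is roughly unique data times the number of providers, and the deal percentage is each row's share of all deals. The sketch below recomputes the percentages from the rounded values shown; it is an illustration of that relationship, not the checker bot's actual code, and small differences from the reported figures are expected because the report presumably works from exact byte counts.

```python
# (unique data in TiB, number of providers holding it), copied from the table above
rows = [(3.28, 1), (1.09, 2), (2.41, 3), (1.97, 4)]


def deal_percentages(rows):
    """Return each row's share (in percent) of the total deal volume."""
    totals = [unique * providers for unique, providers in rows]
    grand_total = sum(totals)
    return [100 * total / grand_total for total in totals]


for (unique, providers), pct in zip(rows, deal_percentages(rows)):
    print(f"{unique:.2f} TiB x {providers} providers -> {pct:.2f}% of deals")
```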

The table below shows how much unique data is shared with other clients. Usually, different applications own different data, which should not resolve to the same CID.

However, this could be possible if all below clients use same software to prepare for the exact same dataset or they belong to a series of LDN applications for the same dataset.

⚠️ CID sharing has been observed.

| Other Client | Application | Total Deals Affected | Unique CIDs | Approvers |
|---|---|---|---|---|
| f1gmiepn73zoa5gz2oiqyugjmrsecwj5qxd42vmyi | `` | 736.00 GiB | 17 | Unknown |
| f1px4vu4r5nvtiz6y774b7gjshmgy643jgtztw4wa | 2amok | 480.00 GiB | 15 | stcouldlisa (1), xingjitansuo (1) |
| f13fjnwckkgnkbpapcmnpupbdf4ohduomdso4eqga | Asia Blockchain Gaming Alliance | 128.00 GiB | 4 | Defil2022 (3), GaryGJG (1), newwebgroup (2), psh0691 (1), stcouldlisa (2), Tom-OriginStorage (1) |
| f16efumcgpjamyhfrflxsu2brd7wrhniqwqssfpzq | F00TAGE | 128.00 GiB | 4 | kernelogic (1), xingjitansuo (1) |
| f1dob4zdjy6b3iinf6evbqzxf6nwgnftcksewabxq | Dr.ji | 96.00 GiB | 3 | newwebgroup (1), psh0691 (1), stcouldlisa (1), YuanHeHK (1) |
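Conceptually, CID sharing of this kind can be found by intersecting the set of CIDs in one client's deals with those of every other client. The sketch below shows that idea on made-up records; the client names, CIDs, and the `shared_cids` helper are hypothetical, and this is not how the checker bot is known to be implemented.

```python
from collections import defaultdict


def shared_cids(deals, client):
    """Map each other client to the set of CIDs it shares with `client`."""
    own = {cid for owner, cid in deals if owner == client}
    shared = defaultdict(set)
    for owner, cid in deals:
        if owner != client and cid in own:
            shared[owner].add(cid)
    return dict(shared)


# Hypothetical deal records as (client, CID) pairs; not real on-chain data.
deals = [
    ("client-a", "cid-1"), ("client-a", "cid-2"), ("client-a", "cid-3"),
    ("client-b", "cid-2"), ("client-b", "cid-3"), ("client-c", "cid-9"),
]
print(shared_cids(deals, "client-a"))
```

A non-empty result for clients that claim to own different datasets is the situation the warning above flags.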

[^1]: To manually trigger this report, add a comment with text checker:manualTrigger

Good morning,

It seems we can stop this before we have even started. CID sharing has been observed with some fraudulent applications. None of the data is retrievable.

ERROR: offer error: retrieval query offer errored: failed to fetch piece to retrieve from: getting pieces for cid bafykbzaceawo2eq2dwcamgrmzo54u3o3law3k23rtalbiuicgdtsut275bqtu: getting pieces containing block bafykbzaceawo2eq2dwcamgrmzo54u3o3law3k23rtalbiuicgdtsut275bqtu: failed to lookup index for mh a0e402202ced121a1d84061a2ccbbbca6ddb582db56b71981614510230e72a4f5fe8613a, err: datastore: key not found

ERROR: offer error: retrieval query offer errored: failed to fetch piece to retrieve from: getting pieces for cid bafykbzaceawo2eq2dwcamgrmzo54u3o3law3k23rtalbiuicgdtsut275bqtu: getting pieces containing block bafykbzaceawo2eq2dwcamgrmzo54u3o3law3k23rtalbiuicgdtsut275bqtu: failed to lookup index for mh a0e402202ced121a1d84061a2ccbbbca6ddb582db56b71981614510230e72a4f5fe8613a, err: datastore: key not found

ERROR: offer error: retrieval query offer errored: failed to fetch piece to retrieve from: getting pieces for cid bafykbzacedayasv72h4nh3jjm5p633v2gshnwamvjuhcuhosygigo2o2ut4hw: getting pieces containing block bafykbzacedayasv72h4nh3jjm5p633v2gshnwamvjuhcuhosygigo2o2ut4hw: failed to lookup index for mh a0e40220c1804abfd1f8d3ed29675fedeeba348edb01954d0e2a1dd2c1906769daa4f87b, err: datastore: key not found

ERROR: offer error: retrieval query offer errored: failed to fetch piece to retrieve from: getting pieces for cid bafykbzacecqfsh2nac5pbcnskiarzma2rseajtopqm76f47i3hd2mubenqwu6: getting pieces containing block bafykbzacecqfsh2nac5pbcnskiarzma2rseajtopqm76f47i3hd2mubenqwu6: failed to lookup index for mh a0e40220a0591f4d00baf089b252011cb01a8c8804cdcf833fe2f3e8d9c7a650246c2d4f, err: datastore: key not found

ERROR: offer error: retrieval query offer errored: failed to fetch piece to retrieve from: getting pieces for cid bafykbzaceb4nbitgt6ujnhnlq5qe74ihd52szsc3i2zeyaztlmrfhvgzvhyfe: getting pieces containing block bafykbzaceb4nbitgt6ujnhnlq5qe74ihd52szsc3i2zeyaztlmrfhvgzvhyfe: failed to lookup index for mh a0e4022078d0a2669fa8969dab87604ff1071f752cc85b46b24c03335b2253d4d9a9f052, err: datastore: key not found

ERROR: offer error: retrieval query offer errored: failed to fetch piece to retrieve from: getting pieces for cid bafykbzacec4onybu7pwgxmznwdzrgfrhjhds5bmhautim4pxsw2t4txzaqozk: getting pieces containing block bafykbzacec4onybu7pwgxmznwdzrgfrhjhds5bmhautim4pxsw2t4txzaqozk: failed to lookup index for mh a0e40220b8e6e034fbec6bb32db0f313162749c72e858705268671f795b53e4ef9041d95, err: datastore: key not found

ERROR: offer error: retrieval query offer errored: failed to fetch piece to retrieve from: getting pieces for cid bafykbzacec3sl7szfvoyvhl3b2e6ot36m24lodrpddrg4xt7ri57u2g2x2f2k: getting pieces containing block bafykbzacec3sl7szfvoyvhl3b2e6ot36m24lodrpddrg4xt7ri57u2g2x2f2k: failed to lookup index for mh a0e40220b725fe592d5d8a9d7b0e89e74f7e66b8b70e2f18e26e5e7f8a3bfa68dabe8ba5, err: datastore: key not found

ERROR: offer error: retrieval query offer errored: failed to fetch piece to retrieve from: getting pieces for cid bafykbzacec3sl7szfvoyvhl3b2e6ot36m24lodrpddrg4xt7ri57u2g2x2f2k: getting pieces containing block bafykbzacec3sl7szfvoyvhl3b2e6ot36m24lodrpddrg4xt7ri57u2g2x2f2k: failed to lookup index for mh a0e40220b725fe592d5d8a9d7b0e89e74f7e66b8b70e2f18e26e5e7f8a3bfa68dabe8ba5, err: datastore: key not found
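To summarize a batch of errors like these, one could count the failures per CID. The sketch below is a minimal example that assumes each line follows the same shape as the messages above; the sample lines use shortened placeholder CIDs rather than the real ones, and `failed_cids` is a hypothetical helper.

```python
import re
from collections import Counter

# Captures the CID that follows "getting pieces for cid", up to the next colon.
CID_PATTERN = re.compile(r"getting pieces for cid (\S+?):")


def failed_cids(log_lines):
    """Count 'key not found' retrieval failures per CID."""
    counts = Counter()
    for line in log_lines:
        match = CID_PATTERN.search(line)
        if match and "key not found" in line:
            counts[match.group(1)] += 1
    return counts


# Placeholder lines in the same shape as the errors above (CIDs are made up).
sample = [
    "ERROR: offer error: getting pieces for cid bafyexample1: err: datastore: key not found",
    "ERROR: offer error: getting pieces for cid bafyexample1: err: datastore: key not found",
    "ERROR: offer error: getting pieces for cid bafyexample2: err: datastore: key not found",
]
for cid, count in failed_cids(sample).items():
    print(cid, count)
```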

f02049625

f1kh7vqg4dcdtkwugc5wgd4vuitixiy7e6ugnalmy

100TiB

7c53db48-7889-4174-8dc3-ecf40dd3a7c6

f01858410

f1kh7vqg4dcdtkwugc5wgd4vuitixiy7e6ugnalmy

newwebgroup & 1ane-1

100% of weekly dc amount requested

100TiB

50TiB

4.95PiB

| Number of deals | Number of storage providers | Previous DC Allocated | Top provider | Remaining DC |
|---|---|---|---|---|
| 1108 | 6 | 50TiB | 35.02 | 11.81TiB |

SiGe Technology, f1kh7vqg4dcdtkwugc5wgd4vuitixiy7e6ugnalmy

Approvers: 1ane-1 (1), newwebgroup (1)

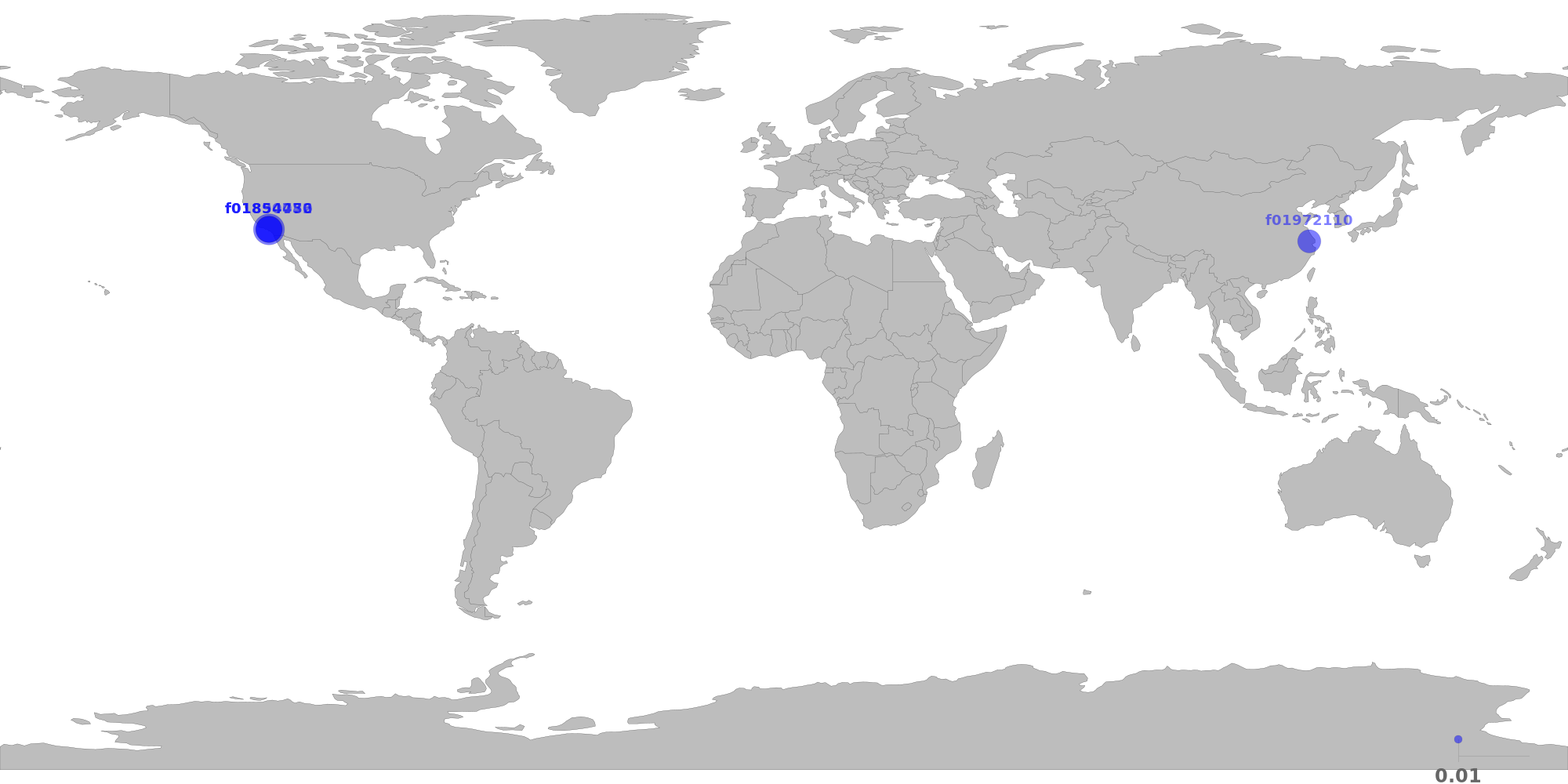

The below table shows the distribution of storage providers that have stored data for this client.

If this is the first time a provider takes verified deal, it will be marked as new.

For most of the datacap application, below restrictions should apply.

Since this is the 3rd allocation, the following restrictions have been relaxed:

⚠️ f01985611 has unknown IP location.

| Provider | Location | Total Deals Sealed | Percentage | Unique Data | Duplicate Deals |
|---|---|---|---|---|---|
| f01890456 | Los Angeles, California, US (Zenlayer Inc) | 11.91 TiB | 37.65% | 11.91 TiB | 0.00% |
| f01985611 | Unknown (Unknown) | 9.63 TiB | 30.43% | 9.63 TiB | 0.00% |
| f01972110 | Suzhou, Jiangsu, CN (CHINA UNICOM China169 Backbone) | 2.16 TiB | 6.82% | 2.16 TiB | 0.00% |
| f01854772 | Los Angeles, California, US (Zenlayer Inc) | 4.03 TiB | 12.75% | 4.03 TiB | 0.00% |
| f01854080 | Los Angeles, California, US (Zenlayer Inc) | 3.91 TiB | 12.35% | 3.91 TiB | 0.00% |

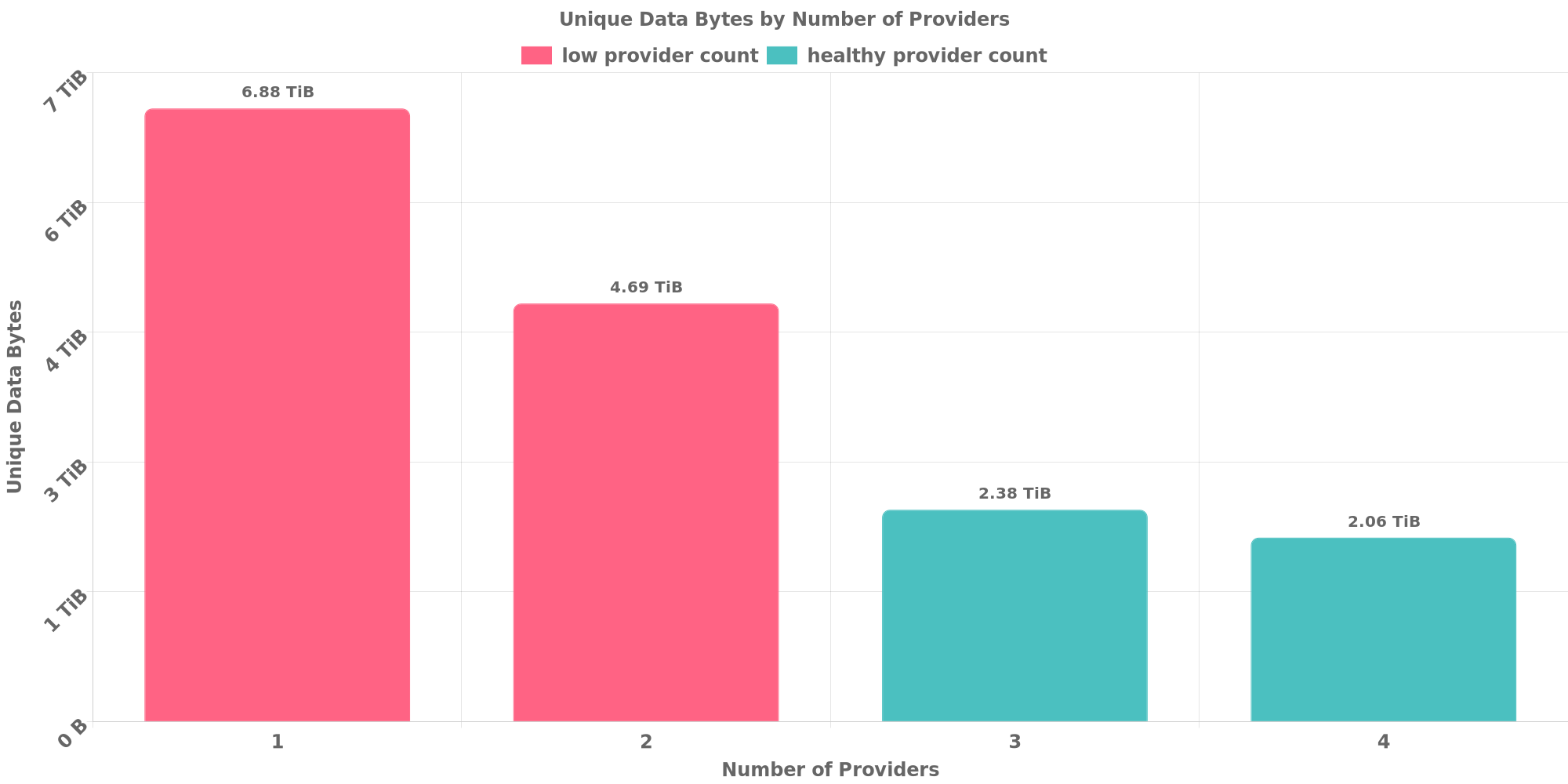

The table below shows how much unique data is replicated across each number of storage providers.

Since this is the 3rd allocation, the following restrictions have been relaxed:

✔️ Data replication looks healthy.

| Unique Data Size | Total Deals Made | Number of Providers | Deal Percentage |
|---|---|---|---|
| 6.88 TiB | 6.88 TiB | 1 | 21.74% |
| 4.69 TiB | 9.38 TiB | 2 | 29.64% |
| 2.38 TiB | 7.13 TiB | 3 | 22.53% |
| 2.06 TiB | 8.25 TiB | 4 | 26.09% |

The table below shows how much unique data is shared with other clients. Usually, different applications own different data, which should not resolve to the same CID.

However, this could be possible if all below clients use same software to prepare for the exact same dataset or they belong to a series of LDN applications for the same dataset.

⚠️ CID sharing has been observed.

| Other Client | Application | Total Deals Affected | Unique CIDs | Approvers |
|---|---|---|---|---|
| f1gmiepn73zoa5gz2oiqyugjmrsecwj5qxd42vmyi | `` | 896.00 GiB | 22 | Unknown |
| f1px4vu4r5nvtiz6y774b7gjshmgy643jgtztw4wa | 2amok | 480.00 GiB | 15 | stcouldlisa (1), xingjitansuo (1) |
| f1dob4zdjy6b3iinf6evbqzxf6nwgnftcksewabxq | Dr.ji | 288.00 GiB | 9 | newwebgroup (1), psh0691 (1), stcouldlisa (1), YuanHeHK (1) |
| f13fjnwckkgnkbpapcmnpupbdf4ohduomdso4eqga | Asia Blockchain Gaming Alliance | 192.00 GiB | 6 | Defil2022 (3), GaryGJG (1), newwebgroup (2), psh0691 (1), stcouldlisa (2), Tom-OriginStorage (1) |
| f16efumcgpjamyhfrflxsu2brd7wrhniqwqssfpzq | F00TAGE | 128.00 GiB | 4 | kernelogic (1), xingjitansuo (1) |
| f13wvg4xve7nz3nev4bi2c7imod7lelstxfwwzjgi | We Doctor | 32.00 GiB | 1 | Alex11801 (1), Defil2022 (1), kernelogic (3), liyunzhi-666 (1), NDLABS-OFFICE (1), stcouldlisa (1) |

[^1]: To manually trigger this report, add a comment with text checker:manualTrigger

This application has not seen any responses in the last 10 days. This issue will be marked with Stale label and will be closed in 4 days. Comment if you want to keep this application open.

This application has not seen any responses in the last 14 days, so for now it is being closed. Please feel free to contact the Fil+ Gov team to re-open the application if it is still being processed. Thank you!

name: Large Dataset Notary application
about: Clients should use this application form to request a DataCap allocation via a LDN for a dataset
title: "SiGe-Technology"
labels: 'application, Phase: Diligence'
assignees: ''

Large Dataset Notary Application

To apply for DataCap to onboard your dataset to Filecoin, please fill out the following.

Core Information

Please respond to the questions below by replacing the text saying "Please answer here". Include as much detail as you can in your answer.

Project details

Share a brief history of your project and organization.

What is the primary source of funding for this project?

What other projects/ecosystem stakeholders is this project associated with?

Use-case details

Describe the data being stored onto Filecoin

Where was the data in this dataset sourced from?

Can you share a sample of the data? A link to a file, an image, a table, etc., are good ways to do this.

Confirm that this is a public dataset that can be retrieved by anyone on the Network (i.e., no specific permissions or access rights are required to view the data).

What is the expected retrieval frequency for this data?

For how long do you plan to keep this dataset stored on Filecoin?

DataCap allocation plan

In which geographies (countries, regions) do you plan on making storage deals?

How will you be distributing your data to storage providers? Is there an offline data transfer process?

How do you plan on choosing the storage providers with whom you will be making deals? This should include a plan to ensure the data is retrievable in the future both by you and others.

How will you be distributing deals across storage providers?

Do you have the resources/funding to start making deals as soon as you receive DataCap? What support from the community would help you onboard onto Filecoin?